User's Guide to ML-Lex and ML-Yacc

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

COMMUNICATION STUDIES REGISTRATION INFORMATION DO NOT E-Mail the Professor to Request Permission for Communication Studies Class

COMMUNICATION STUDIES REGISTRATION INFORMATION DO NOT e-mail the professor to request permission for Communication Studies classes! We follow our departmental waitlist priorities found on the Department web page at: http://www.lsa.umich.edu/comm/undergraduate/generalinformation/majorpolicies. Students on the waitlist are expected to attend the first two lectures and the first discussion section for the class, just as any enrolled student. Students who do not attend will lose their spot in the class or on the waitlist! Comm 101 & 102 are restricted to students with freshman and sophomore standing. If you have over 54 credits, including the current semester, you will not be able to register for these. Juniors and seniors wishing to take Comm 101 and 102 may attend the first two lectures and first discussion section of the course and ask the instructor to add them to the waitlist. Instructors will issue permissions from the waitlist after classes begin, according to department waitlist priorities. There may be some exceptions to this policy, but you will need to e-mail [email protected] 2 (TWO) days prior to your registration date to find out if you qualify for an exception. Provide the following: Name: UMID#: Registration Date: Registration Term: Course: Top 3 section choices: Exception eligibility - AP credit has pushed you over the 54 credits for junior standing, OR you have junior standing but have already completed Comm 101 or 102 to start the Comm prerequisites, OR you are a transfer student with junior standing OR you are an MDDP student with more than sophomore standing.

Text Editing in UNIX: an Introduction to Vi and Editing

Text Editing in UNIX: A short introduction to vi, pico, and gedit. Copyright 2006-2009 Stewart Weiss. CSci 132 Practical UNIX with Perl. About UNIX editors: There are two types of text editors in UNIX: those that run in terminal windows, called text mode editors, and those that are graphical, with menus and mouse pointers. The latter require a windowing system, usually X Windows, to run. If you are remotely logged into UNIX, say through SSH, then you should use a text mode editor. It is possible to use a graphical editor, but it will be much slower to use. I will explain more about that later. Text mode editors: The three text mode editors of choice in UNIX are vi, emacs, and pico (really nano, to be explained later). vi is the original editor; it is very fast, easy to use, and available on virtually every UNIX system. The vi commands are the same as those of the sed filter as well as several other common UNIX tools. emacs is a very powerful editor, but it takes more effort to learn how to use it. pico is the easiest editor to learn, and the least powerful. pico was part of the Pine email client; nano is a clone of pico. What these slides contain: These slides concentrate on vi because it is very fast and always available. Although the set of commands is very cryptic, by learning a small subset of the commands, you can edit text very quickly. What follows is an outline of the basic concepts that define vi.

Binpac: a Yacc for Writing Application Protocol Parsers

binpac: A yacc for Writing Application Protocol Parsers. Ruoming Pang∗ (Google, Inc., New York, NY, USA, [email protected]); Vern Paxson (International Computer Science Institute and Lawrence Berkeley National Laboratory, Berkeley, CA, USA, [email protected]); Robin Sommer (International Computer Science Institute, Berkeley, CA, USA, [email protected]); Larry Peterson (Princeton University, Princeton, NJ, USA, [email protected]). ABSTRACT A key step in the semantic analysis of network traffic is to parse the traffic stream according to the high-level protocols it contains. This process transforms raw bytes into structured, typed, and semantically meaningful data fields that provide a high-level representation of the traffic. However, constructing protocol parsers by hand is a tedious and error-prone affair due to the complexity and sheer number of application protocols. This paper presents binpac, a declarative language and compiler designed to simplify the task of constructing robust and efficient semantic analyzers for complex network protocols. We discuss the design of the binpac language and a range of issues in generating efficient parsers from high-level specifications. [Second column of the page:] Similarly, studies of Email traffic [21, 46], peer-to-peer applications [37], online gaming, and Internet attacks [29] require understanding application-level traffic semantics. However, it is tedious, error-prone, and sometimes prohibitively time-consuming to build application-level analysis tools from scratch, due to the complexity of dealing with low-level traffic data. We can significantly simplify the process if we can leverage a common platform for various kinds of application-level traffic analysis. A key element of such a platform is application-protocol parsers that translate packet streams into high-level representations of the traffic, on top of which we can then use measurement scripts that manipulate semantically meaningful data elements such as

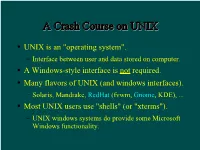

A Crash Course on UNIX

A Crash Course on UNIX: UNIX is an "operating system". Interface between user and data stored on computer. A Windows-style interface is not required. Many flavors of UNIX (and windows interfaces). Solaris, Mandrake, RedHat (fvwm, Gnome, KDE), ... Most UNIX users use "shells" (or "xterms"). UNIX windows systems do provide some Microsoft Windows functionality. The Shell: A shell is a command-line interface to UNIX. Also many flavors, e.g. sh, bash, csh, tcsh. The shell provides commands and functionality beyond the basic UNIX tools. E.g., wildcards, shell variables, loop control, etc. For this tutorial, examples use tcsh in RedHat Linux running Gnome. Differences are minor for the most part... Basic Commands: You need these to survive: ls, cd, cp, mkdir, mv. Typically these are UNIX (not shell) commands. They are actually programs that someone has written. Most commands such as these accept (or require) "arguments". E.g. ls -a [show all files, incl. "dot files"] mkdir ASTR688 [create a directory] cp myfile backup [copy a file] See the handout for a list of more commands. A Word About Directories: Use cd to change directories. By default you start in your home directory. E.g. /home/dcr Handy abbreviations: Home directory: ~ Someone else's home directory: ~user Current directory: . Parent directory: .. Shortcuts: To return to your home directory: cd To return to the previous directory: cd - In tcsh, with filename completion (on by default): Press TAB to complete filenames as you type. Press Ctrl-D to print a list of filenames matching what you have typed so far. Completion works with commands and variables too! Use ↑, ↓, Ctrl-A, & Ctrl-E to edit previous lines.

Puremessage for Unix Help Contents Getting Started

PureMessage for Unix help. Contents: Getting Started (Welcome to PureMessage for Unix; Deployment Strategies; Installing PureMessage; Upgrading PureMessage; Quick Reference Guide; Contacting Sophos). Managing PureMessage (Dashboard Tab; Policy Tab; Quarantine Tab).

![[MS-MAIL-Diff]: Remote Mailslot Protocol](https://docslib.b-cdn.net/cover/6097/ms-mail-diff-remote-mailslot-protocol-1656097.webp)

[MS-MAIL-Diff]: Remote Mailslot Protocol

[MS-MAIL-Diff]: Remote Mailslot Protocol Intellectual Property Rights Notice for Open Specifications Documentation ▪ Technical Documentation. Microsoft publishes Open Specifications documentation (“this documentation”) for protocols, file formats, data portability, computer languages, and standards support. Additionally, overview documents cover inter-protocol relationships and interactions. ▪ Copyrights. This documentation is covered by Microsoft copyrights. Regardless of any other terms that are contained in the terms of use for the Microsoft website that hosts this documentation, you can make copies of it in order to develop implementations of the technologies that are described in this documentation and can distribute portions of it in your implementations that use these technologies or in your documentation as necessary to properly document the implementation. You can also distribute in your implementation, with or without modification, any schemas, IDLs, or code samples that are included in the documentation. This permission also applies to any documents that are referenced in the Open Specifications documentation. ▪ No Trade Secrets. Microsoft does not claim any trade secret rights in this documentation. ▪ Patents. Microsoft has patents that might cover your implementations of the technologies described in the Open Specifications documentation. Neither this notice nor Microsoft's delivery of this documentation grants any licenses under those patents or any other Microsoft patents. However, a given Open Specifications document might be covered by the Microsoft Open Specifications Promise or the Microsoft Community Promise. If you would prefer a written license, or if the technologies described in this documentation are not covered by the Open Specifications Promise or Community Promise, as applicable, patent licenses are available by contacting [email protected]. -

Mail Reference Manual

MAIL REFERENCE MANUAL Kurt Shoens Revised by Craig Leres and Mark Andrews Version 5.5 March 14, 1997 1. Introduction Mail provides a simple and friendly environment for sending and receiving mail. It divides incoming mail into its constituent messages and allows the user to deal with them in any order. In addition, it provides a set of ed-like commands for manipulating messages and sending mail. Mail offers the user simple editing capabilities to ease the composition of outgoing messages, as well as providing the ability to define and send to names which address groups of users. Finally, Mail is able to send and receive messages across such networks as the ARPANET, UUCP, and Berkeley network. This document describes how to use the Mail program to send and receive messages. The reader is not assumed to be familiar with other message handling systems, but should be familiar with the UNIX shell, the text editor, and some of the common UNIX commands. “The UNIX Programmer’s Manual,” “An Introduction to Csh,” and “Text Editing with Ex and Vi” can be consulted for more information on these topics. Here is how messages are handled: the mail system accepts incoming messages for you from other people and collects them in a file, called your system mailbox. When you log in, the system notifies you if there are any messages waiting in your system mailbox. If you are a csh user, you will be notified when new mail arrives if you inform the shell of the location of your mailbox. On version 7 systems, your system mailbox is located in the directory /var/mail in a file with your login name.

Research on UNIX Forensic Analysis

International Conference on Intelligent Control and Computer Application (ICCA 2016). Research on UNIX Forensic Analysis. Lianfu Yin, Nahu College, Jiaxing University, Jiaxing, Zhejiang 314033, China, [email protected]. Abstract: UNIX is one of the most mainstream operating systems, so researching the methodology of UNIX forensic analysis has great practical significance. This paper first introduces the method to capture the volatile data from UNIX systems, then introduces the concrete steps and method of UNIX forensic analysis. Keywords: computer crime; computer forensics; UNIX forensics analysis. Ⅰ. INTRODUCTION As UNIX is one of the most common mainstream operating systems, it is quite important to analyze the forensic method of the UNIX system. Before we do forensic analysis, we shall obtain forensic data first. Forensic data are divided into two main categories: one is volatile data, and the other is nonvolatile data. Volatile data are those data which will all disappear when the computer shuts down. These data are usually in internal memory, mainly including information like the status of the ... B. Initial response information preservation: The initial response information can be saved in the following ways: 1) Save data on the local hard disk; 2) Save data in remote media such as floppy disks, USB drives or tape drives; 3) Manually record information; 4) Use the netcat (or cryptcat) command, and transfer the located data to the forensic analytical engine through the network. Try not to save the data on the local hard disk. When we do data recovery or forensic analysis, the data stored on the local hard disk would overwrite the deleted data located in the unallocated spaces, and these data may provide evidence while investigating.

NCCU Programming Languages Concepts 程式語言

NCCU Programming Languages: Concepts & Principles (Programming Languages). Instructor: Associate Professor Kung Chen, Department of Computer Science. Spring 2006, Lecture 1. Contacts • Instructor: Dr. Kung Chen – E-mail: [email protected] – Office: Da-Ren Building 200210 – Office Hours: Tuesday: 11am-12am • Teaching assistants: Computer Science graduate students – E-mail: [email protected] [email protected] – Office: Programming Languages and Software Methods Lab, Department of Computer Science • Class web page: – http://www.cs.nccu.edu/~chenk/Courses/PL What shall we study? Don’t Get Confused by the Course Name. Which programming language is taught? C++? Java? C#? … No! A “principles” course aims to teach the underlying concepts behind all programming languages (Concept-driven). Course Pre-requisite • Required course for CS major (junior) – Implies that it’s not an easy course • Prerequisite – Experience in C programming – Experience in C++ or Java programming • Non-CS major students – Better talk to the instructor or be well-motivated. Programming languages you have used • C, Java, C++, C#, • Basic, … Programming languages you’ve heard of • Lisp, … • … Programming Languages’ Tower of Babel • Why are there so many programming languages? "I speak Spanish to God, Italian to women, French to men, and German to my horse." (Emperor Charles V, 1500-1558) Why So Many Languages? • Application domains have distinctive (and conflicting) needs • Examples: – Scientific Computing: high performance – Business: report generation – Artificial intelligence: symbolic computation – Systems programming: low-level access – Real-time systems: timing constraints – Special purpose languages – … Why So Many Languages? (Cont’d) • Evolution: Our understanding of programming evolves, and so do languages! • Advances in programming methods usually lead to new programming languages: – Structured programming • Pascal – Object-Oriented Programming • Simula 67, Smalltalk, C++ – Functional programming • Socio-economic factors – Proprietary interests, commercial advantage • Personal preference. Different Programming Language Design Philosophies: C, Other languages. If all you have is a hammer, then everything looks like a nail.

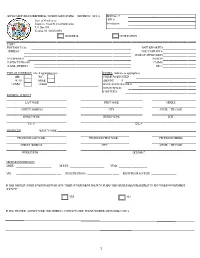

Application Fraud Referral / Notification Form

APPLICATION FRAUD REFERRAL / NOTIFICATION FORM - OIFP/BFD-2 (04/13) BFD Case #: / / State of New Jersey OIFP #: Insurance Fraud Referral/Notification Investigator: P.O. Box 094 Trenton, NJ 08625-0094 REFERRAL NOTIFICATION PART I INSURANCE CO.: DATE REPORTED: ADDRESS: NAIC COMPANY #: DATE OF APPLICATION: TELEPHONE #: POLICY#: CONTACT PERSON: CLAIM #: E-MAIL ADDRESS: SIU #: TYPE OF COVERAGE (check appropriate box) STATUS (indicate as appropriate) LIFE W.C. PREMIUM ADJUSTED: AUTO HOME AMOUNT: $ COMM. OTHER APPLICATION DECLINED: NON-RENEWAL: CANCELED: INSURED / SUBJECT LAST NAME FIRST NAME MIDDLE STREET ADDRESS CITY STATE / ZIP CODE HOME PHONE WORK PHONE DOB S.S. # D.L. # PRODUCER AGENCY NAME: PRODUCER LAST NAME PRODUCER FIRST NAME PRODUCER MIDDLE STREET ADDRESS CITY STATE / ZIP CODE WORK PHONE LICENSE # VEHICLE INFORMATION MAKE: MODEL: YEAR: VIN: REGISTRATION #: REGISTRATION STATE: IS THIS MATTER UNDER INVESTIGATION BY ANY OTHER GOVERNMENT AGENCY OR HAS THIS MATTER BEEN REFERRED TO ANY OTHER GOVERNMENT AGENCY? YES NO IF YES, PROVIDE: AGENCY NAME AND ADDRESS, CONTACT NAME, PHONE NUMBER AND E-MAIL; CASE # PART II Provision(s) OF N.J.S.A. 17:33A-4 RELATING TO APPLICATIONS THAT MAY HAVE BEEN VIOLATED: (CHECK APPROPRIATE BOX) a.(3){5} - conceals relevant evidence: CONCEALS OR KNOWINGLY FAILS TO DISCLOSE AN EVENT AFFECTING ANY PERSON’S INITIAL RIGHT TO AN INSURANCE BENEFIT OR THE AMOUNT OF A BENEFIT. N.J.S.A 17:33A-4a(3) a.(4)(A) – Prepares or makes any written or oral statement: INTENDED TO BE PRESENTED TO ANY INSURANCE COMPANY OR PRODUCER FOR THE PURPOSE OF OBTAINING A MOTOR VEHICLE INSURANCE POLICY, THAT THE PERSON TO BE THE INSURED RESIDES OR IS DOMICILED IN THIS STATE WHEN, IN FACT, THAT PERSON RESIDES OR IS DOMICILED IN A STATE OTHER THAN THIS STATE N.J.S.A.

Mail Administration Guide

Mail Administration Guide Sun Microsystems, Inc. 2550 Garcia Avenue Mountain View, CA 94043-1100 U.S.A. Part No: 802-5827 August 1997 Copyright 1997 Sun Microsystems, Inc. 901 San Antonio Road, Palo Alto, California 94303-4900 U.S.A. All rights reserved. This product or document is protected by copyright and distributed under licenses restricting its use, copying, distribution, and decompilation. No part of this product or document may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Third-party software, including font technology, is copyrighted and licensed from Sun suppliers. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd. Sun, Sun Microsystems, the Sun logo, SunSoft, SunDocs, SunExpress, SunOS, OpenWindows, DeskSet, ONC, NFS, and Solaris are trademarks, registered trademarks, or service marks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc. The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. Sun holds a non-exclusive license from Xerox to the Xerox Graphical User Interface, which license also covers Sun’s licensees who implement OPEN LOOK GUIs and otherwise comply with Sun’s written license agreements.

Lesson 1 Basic Unix Commands

Sequence Analysis Spring 2000 Dr. Richard Friedman (212)305-6901 (76901) [email protected] 130BB Lesson 1 Basic Unix Commands Assignment 1 1. Read: Introduction to Computing on the Columbia University Cancer Center Computer and Informatics Resource Center's Dec-Alpha. 2. Read Unix Primer Plus: p. 19-31, 35-39, 59-76, 134-144, 207-210. 3. Log in. Change your password. Use the who, u, and finger commands. Log out. 4. Learn to use Pine. Send e-mail back and forth and use the reply, forward, save, and export functions. 5. Work through the files module of the learn program. (Section files 10.1a of learn does not work properly. Carl Yastrzemski is a baseball player. Also, section files 13.01a, dealing with ctrl-S and ctrl-Q to stop and restart output, will not work, because these commands do not work in the system implementation described here.) Summary of commands: Note: In this document different fonts have different meanings: Times is used to explain commands and otherwise address the reader directly. Courier is used to indicate commands and command options. Courier italics are used to indicate command parameters, for example, filenames. Courier bold is used to indicate commands that are not displayed. Courier bold italics are used to indicate computer-generated output. Helvetica is used to indicate menu items. login gets you onto the system. passwd changes your password. who identifies users on the system in detail. u gives usernames of users on system. finger username gives information about the user named. logout gets you off the system.