Research on UNIX Forensic Analysis



Recommended publications


OCaml Standard Library (OCaml v. 3.12.0)

OCaml Standard Library OCaml v. 3.12.0 | June 7, 2011 | Copyright © 2011 OCamlPro SAS | http://www.ocamlpro.com/

Standard Modules

Basic Data Types: Pervasives (all basic functions), String (functions on strings), Array (functions on polymorphic arrays), List (functions on polymorphic lists), Char (functions on characters), Int32 (functions on 32-bit integers), Int64 (functions on 64-bit integers), Nativeint (functions on native integers).

Advanced Data Types: Buffer (automatically resizable strings), Complex (complex numbers), Digest (MD5 checksums), Hashtbl (polymorphic hash tables), Queue (polymorphic FIFO), Stack (polymorphic LIFO).

module List
let len = List.length l
List.iter (fun ele -> ... ) l;
let l' = List.map (fun ele -> ... ) l
let l' = List.rev l1
let acc' = List.fold_left (fun acc ele -> ...) acc l
let acc' = List.fold_right (fun ele acc -> ...) l acc
if List.mem ele l then ...
if List.for_all (fun ele -> ele >= 0) l then ...
if List.exists (fun ele -> ele < 0) l then ...
let neg = List.find (fun x -> x < 0) ints
let negs = List.find_all (fun x -> x < 0) ints
let (negs,pos) = List.partition (fun x -> x < 0) ints
let ele = List.nth list 2
let head = List.hd list
let tail = List.tl list
let value = List.assoc key assocs
if List.mem_assoc key assocs then ..

module Array
let t = Array.create len v
let t = Array.init len (fun pos -> v_at_pos)
let v = t.(pos)
t.(pos) <- v;
let len = Array.length t
let t' = Array.sub t pos len
let t = Array.of_list list
let list = Array.to_list t
Array.iter (fun v -> ... ) t;
Array.iteri (fun pos v -> ... ) t;
let t' = Array.map (fun v -> ... ) t
let t' = Array.mapi (fun pos v -> ... ) t
let concat = Array.append prefix suffix
Array.sort compare t;

module Char

COMMUNICATION STUDIES REGISTRATION INFORMATION DO NOT E-Mail the Professor to Request Permission for Communication Studies Class

COMMUNICATION STUDIES REGISTRATION INFORMATION DO NOT e-mail the professor to request permission for Communication Studies classes! We follow our departmental waitlist priorities found on the Department web page at: http://www.lsa.umich.edu/comm/undergraduate/generalinformation/majorpolicies. Students on the waitlist are expected to attend the first two lectures and the first discussion section for the class, just as any enrolled student. Students who do not attend will lose their spot in the class or on the waitlist!

Comm 101 & 102 are restricted to students with freshman and sophomore standing. If you have over 54 credits, including the current semester, you will not be able to register for these. Juniors and seniors wishing to take Comm 101 and 102 may attend the first two lectures and first discussion section of the course and ask the instructor to add them to the waitlist. Instructors will issue permissions from the waitlist after classes begin, according to department waitlist priorities. There may be some exceptions to this policy, but you will need to e-mail [email protected] 2 (TWO) days prior to your registration date to find out if you qualify for an exception. Provide the following: Name: UMID#: Registration Date: Registration Term: Course: Top 3 section choices: Exception eligibility - AP credit has pushed you over the 54 credits for junior standing, OR you have junior standing but have already completed Comm 101 or 102 to start the Comm prerequisites, OR you are a transfer student with junior standing, OR you are an MDDP student with more than sophomore standing.

Netcat and Trojans/Backdoors

Netcat and Trojans/Backdoors
ECE4883 – Internetwork Security

Agenda: Overview
• Netcat
• Trojans/Backdoors

Agenda: Netcat
• Netcat: Overview; Major Features; Installation and Configuration; Possible Uses
• Netcat Defenses
• Summary

Netcat – TCP/IP Swiss Army Knife
• Reads and writes data across the network using TCP/UDP connections
• Feature-rich network debugging and exploration tool
• Part of the Red Hat Power Tools collection and comes standard on SuSE Linux, Debian Linux, NetBSD and OpenBSD distributions
• UNIX and Windows versions available at: http://www.atstake.com/research/tools/network_utilities/

Netcat
• Designed to be a reliable "back-end" tool, to be used directly or easily driven by other programs/scripts
• Very powerful in combination with scripting languages (e.g. Perl)
"If you were on a desert island, Netcat would be your tool of choice!" - Ed Skoudis

Netcat – Major Features
• Outbound or inbound connections
• TCP or UDP, to or from any ports
• Full DNS forward/reverse checking, with appropriate warnings
• Ability to use any local source port
• Ability to use any locally-configured network source address
• Built-in port-scanning capabilities, with randomizer

Netcat – Major Features (contd)
• Built-in loose source-routing capability
• Can read command line arguments from standard input
• Slow-send mode, one line every N seconds
• Hex dump of transmitted and received data
• Optional ability to let another program service established connections
• Optional telnet-options responder

Netcat (called 'nc')
• Can run in client/server mode
• Default mode: client
• Same executable for both modes
• Client mode: nc [dest] [port_no_to_connect_to]
• Listen mode (-l option): nc -l -p [port_no_to_connect_to]

Netcat – Client mode
Computer with netcat in Client mode 1.

High Performance Multi-Node File Copies and Checksums for Clustered File Systems∗

High Performance Multi-Node File Copies and Checksums for Clustered File Systems∗

Paul Z. Kolano, Robert B. Ciotti. NASA Advanced Supercomputing Division, NASA Ames Research Center, M/S 258-6, Moffett Field, CA 94035 U.S.A. {paul.kolano,bob.ciotti}@nasa.gov

Abstract

Mcp and msum are drop-in replacements for the standard cp and md5sum programs that utilize multiple types of parallelism and other optimizations to achieve maximum copy and checksum performance on clustered file systems. Multi-threading is used to ensure that nodes are kept as busy as possible. Read/write parallelism allows individual operations of a single copy to be overlapped using asynchronous I/O. Multi-node cooperation allows different nodes to take part in the same copy/checksum. Split file processing allows multiple threads to operate concurrently on the same file. Finally, hash trees allow inherently serial checksums to be performed in parallel. This paper presents the design of mcp and msum and de-

To achieve peak performance from such systems, it is typically necessary to utilize multiple concurrent readers/writers from multiple systems to overcome various single-system limitations such as number of processors and network bandwidth. The standard cp and md5sum tools of GNU coreutils [11] found on every modern Unix/Linux system, however, utilize a single execution thread on a single CPU core of a single system, hence cannot take full advantage of the increased performance of clustered file system.

This paper describes mcp and msum, which are drop-in replacements for cp and md5sum that utilize multiple types of parallelism to achieve maximum copy and checksum performance on clustered file systems.
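The idea that a hash tree lets an otherwise serial checksum run in parallel can be illustrated with a small two-level sketch. This is not msum's actual construction, which the excerpt does not describe; the chunk size, file names and sample data below are assumptions, and the sketch relies on GNU `md5sum` being installed. Each chunk is hashed in its own background job, then the ordered list of chunk digests is hashed once more to give a root digest.

```shell
#!/bin/sh
# Two-level hash-tree sketch (illustrative only, not the msum algorithm).
work=$(mktemp -d)

# Hypothetical input: 400 lines of 11 bytes each, 4400 bytes in total.
awk 'BEGIN { for (i = 0; i < 400; i++) print "abcdefghij" }' > "$work/data"

# Split into fixed-size chunks so each one can be hashed independently.
split -b 1000 "$work/data" "$work/chunk."

# Leaf level: hash every chunk in its own background job.
for c in "$work"/chunk.*; do
  md5sum < "$c" | cut -d ' ' -f 1 > "$c.md5" &
done
wait

# Root level: hash the leaf digests, concatenated in chunk order.
root=$(cat "$work"/chunk.*.md5 | md5sum | cut -d ' ' -f 1)
leaves=$(ls "$work"/chunk.*.md5 | wc -l | tr -d ' ')
echo "leaves=$leaves root=$root"
```

The root digest produced this way differs from the plain `md5sum` of the whole file, so both sides of a comparison would need to use the same chunk size and tree layout.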

Text Editing in UNIX: an Introduction to Vi and Editing

Text Editing in UNIX
A short introduction to vi, pico, and gedit
Copyright 2006-2009 Stewart Weiss
CSci 132 Practical UNIX with Perl

About UNIX editors
There are two types of text editors in UNIX: those that run in terminal windows, called text mode editors, and those that are graphical, with menus and mouse pointers. The latter require a windowing system, usually X Windows, to run. If you are remotely logged into UNIX, say through SSH, then you should use a text mode editor. It is possible to use a graphical editor, but it will be much slower to use. I will explain more about that later.

Text mode editors
The three text mode editors of choice in UNIX are vi, emacs, and pico (really nano, to be explained later). vi is the original editor; it is very fast, easy to use, and available on virtually every UNIX system. The vi commands are the same as those of the sed filter as well as several other common UNIX tools. emacs is a very powerful editor, but it takes more effort to learn how to use it. pico is the easiest editor to learn, and the least powerful. pico was part of the Pine email client; nano is a clone of pico.

What these slides contain
These slides concentrate on vi because it is very fast and always available. Although the set of commands is very cryptic, by learning a small subset of the commands, you can edit text very quickly. What follows is an outline of the basic concepts that define vi.

Netcat Starter

www.allitebooks.com

Instant Netcat Starter
Learn to harness the power and versatility of Netcat, and understand why it remains an integral part of IT and Security Toolkits to this day
K.C. Yerrid
BIRMINGHAM - MUMBAI

Instant Netcat Starter
Copyright © 2013 Packt Publishing
All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing, and its dealers and distributors will be held liable for any damages caused or alleged to be caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information.
First published: January 2013
Production Reference: 1170113
Published by Packt Publishing Ltd., Livery Place, 35 Livery Street, Birmingham B3 2PB, UK.
ISBN 978-1-84951-996-0
www.packtpub.com

Credits
Author: K.C. "K0nsp1racy" Yerrid
Reviewer: Jonathan Craton
IT Content and Commissioning Editor: Grant Mizen
Commissioning Editor: Priyanka Shah
Technical Editor: Ameya Sawant
Copy Editor: Alfida Paiva
Project Coordinators: Shraddha Bagadia, Esha Thakker
Proofreader: Kelly Hutchison
Graphics: Aditi Gajjar
Production Coordinator: Melwyn D'sa
Cover Work: Melwyn D'sa
Cover Image: Conidon Miranda

About the author
K.C.

NOMADS User Guide V1.0

NOMADS User Guide V1.0 Table of Contents • Introduction o Explanation of "Online" and "Offline" Data o Obtaining Offline Data o Offline Order Limitations • Distributed Data Access o Functional Overview o Getting Started with OPeNDAP o Desktop Services ("Clients") o OPeNDAP Servers • Quick Start to Retrieve or Plot Data o Getting Started o Using FTP to Retrieve Data o Using HTTP to Retrieve Data o Using wget to Retrieve Data o Using GDS Clients to Plot Data o Using the NOMADS Server to Create Plots • Advanced Data Access Methods o Subsetting Parameters and Levels Using FTP4u o Obtain ASCII Data Using GDS o Scripting Wget in a Time Loop o Mass GRIB Subsetting: Utilizing Partial-file HTTP Transfers • General Data Structure o Organization of Data Directories o Organization of Data Files o Use of Templates in GrADS Data Descriptor Files • MD5 Hash Files o Purpose of MD5 Files o Identifying MD5 Files o Using md5sum on MD5 Files (Linux) o Using gmd5sum on MD5 Files (FreeBSD) o External Links • Miscellaneous Information o Building Dataset Collections o Working with Timeseries Data o Creating Plots with GrADS o Scheduled Downtime Notification Introduction The model data repository at NCEI contains both deep archived (offline) and online model data. We provide a variety of ways to access our weather and climate model data. You can access the online data using traditional access methods (web-based or FTP), or you can use open and distributed access methods promoted under the collaborative approach called the NOAA National Operational Model Archive and Distribution System (NOMADS). On the Data Products page you are presented with a table that contains basic information about each dataset, as well as links to the various services available for each dataset. -

Singularityce User Guide Release 3.8

SingularityCE User Guide Release 3.8
SingularityCE Project Contributors
Aug 16, 2021

CONTENTS
1 Getting Started & Background Information
1.1 Introduction to SingularityCE
1.2 Quick Start
1.3 Security in SingularityCE
2 Building Containers
2.1 Build a Container
2.2 Definition Files
2.3 Build Environment
2.4 Support for Docker and OCI
2.5 Fakeroot feature
3 Signing & Encryption
3.1 Signing and Verifying Containers
3.2 Key commands
3.3 Encrypted Containers
4 Sharing & Online Services
4.1 Remote Endpoints
4.2 Cloud Library
5 Advanced Usage
5.1 Bind Paths and Mounts
5.2 Persistent Overlays
5.3 Running Services
5.4 Environment and Metadata
5.5 OCI Runtime Support
5.6 Plugins

Netcat − Network Connections Made Easy

Netcat - network connections made easy

A lot of the shell scripting I do involves piping the output of one command into another, such as:

$ cat /var/log/messages | awk '{print $4,$5,$6,$7,$8,$9,$10,$11,$12,$13,$14,$15}' | sed -e 's/\[[0-9]*\]:/:/' | sort | uniq | less

which shows me the system log file after removing the timestamps and [12345] pids and removing duplicates (1000 neatly ordered unique lines is a lot easier to scan than 6000 mixed together). The above technique works well when all the processing can be done on one machine. What happens if I want to somehow send this data to another machine right in the pipe? For example, instead of viewing it with less on this machine, I want to somehow send the output to my laptop so I can view it there. Ideally, the command would look something like:

$ cat /var/log/messages | awk '{print $4,$5,$6,$7,$8,$9,$10,$11,$12,$13,$14,$15}' | sed -e 's/\[[0-9]*\]:/:/' | sort | uniq | laptop

That exact syntax won't work because the shell thinks it needs to hand off the data to a program called laptop, which doesn't exist. There's a way to do it, though.

Enter Netcat

Netcat was written 5 years ago to perform exactly this kind of magic: allowing the user to make network connections between machines without any programming. Let's look at some examples of how it works. Let's say that I'm having trouble with a web server that's not returning the content I want for some reason.
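To see what the log-cleaning pipeline in this excerpt does without touching a real /var/log/messages, here is a minimal sketch that runs a similar pipeline over three made-up syslog lines. The sample lines and the function names `sample_log` and `clean_log` are assumptions for illustration, and the awk step is a variant that blanks the first three fields rather than printing fields 4 to 15, which avoids trailing spaces on short lines.

```shell
#!/bin/sh
# Hypothetical syslog-style lines (not taken from the article).
sample_log() {
cat <<'EOF'
Jan  5 10:00:01 host1 sshd[1234]: Accepted password for alice
Jan  5 10:00:07 host1 sshd[5678]: Accepted password for alice
Jan  5 10:01:00 host1 cron[42]: job started
EOF
}

# Drop the timestamp (fields 1-3), strip the "[pid]" part, then de-duplicate.
clean_log() {
  awk '{ $1 = $2 = $3 = ""; sub(/^ +/, ""); print }' |
    sed -e 's/\[[0-9]*\]:/:/' |
    sort | uniq
}

result=$(sample_log | clean_log)
printf '%s\n' "$result"
```

The two sshd lines differ only in timestamp and pid, so they collapse into one line once those are removed, which is the effect the article describes.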

Binpac: a Yacc for Writing Application Protocol Parsers

binpac: A yacc for Writing Application Protocol Parsers

Ruoming Pang∗ (Google, Inc., New York, NY, USA, [email protected]); Vern Paxson (International Computer Science Institute and Lawrence Berkeley National Laboratory, Berkeley, CA, USA, [email protected]); Robin Sommer (International Computer Science Institute, Berkeley, CA, USA, [email protected]); Larry Peterson (Princeton University, Princeton, NJ, USA, [email protected])

ABSTRACT

A key step in the semantic analysis of network traffic is to parse the traffic stream according to the high-level protocols it contains. This process transforms raw bytes into structured, typed, and semantically meaningful data fields that provide a high-level representation of the traffic. However, constructing protocol parsers by hand is a tedious and error-prone affair due to the complexity and sheer number of application protocols.

This paper presents binpac, a declarative language and compiler designed to simplify the task of constructing robust and efficient semantic analyzers for complex network protocols. We discuss the design of the binpac language and a range of issues in generating efficient parsers from high-level specifications.

Similarly, studies of Email traffic [21, 46], peer-to-peer applications [37], online gaming, and Internet attacks [29] require understanding application-level traffic semantics. However, it is tedious, error-prone, and sometimes prohibitively time-consuming to build application-level analysis tools from scratch, due to the complexity of dealing with low-level traffic data.

We can significantly simplify the process if we can leverage a common platform for various kinds of application-level traffic analysis. A key element of such a platform is application-protocol parsers that translate packet streams into high-level representations of the traffic, on top of which we can then use measurement scripts that manipulate semantically meaningful data elements such as

Download Instructions—Portal

Download instructions

These instructions are recommended for downloading big files.

How to download and verify files from downloads.gvsig.org
• How to download files
  • GNU/Linux Systems
  • MacOS X Systems
  • Windows Systems
• How to validate the downloaded files

How to download files

The files distributed on this site can be downloaded using different access protocols; the ones currently available are FTP, HTTP and RSYNC. The base URL of the site for the different protocols is:
• ftp://gvsig.org/
• http://downloads.gvsig.org/
• rsync://gvsig.org/downloads/

To download files using the first two protocols, it is recommended to use client programs that can resume partial downloads, as transfer interruptions are common when downloading big files like DVD images. There are multiple free (and multi-platform) programs to download files using different protocols (in our case we are interested in FTP and HTTP). Among them we can highlight curl (http://curl.haxx.se/) and wget (http://www.gnu.org/software/wget/) from the command-line ones and Free Download Manager from the GUI ones (this one is only for Windows systems). The curl program is included in MacOS X and is available for almost all GNU/Linux distributions. It can be downloaded in source code or in binary form for different operating systems from the project web site. The wget program is also included in almost all GNU/Linux distributions, and its source code or binaries for different systems can be downloaded from this page.

Next we will explain how to download files from the most usual operating systems using the programs referenced earlier:
• GNU/Linux Systems
• MacOS X Systems
• Windows Systems

The use of rsync (available from the URL http://samba.org/rsync/) is left as an exercise for the reader; we will only say that it is advisable to use the --partial option to avoid problems when transfers are interrupted.
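The validation step listed in this excerpt usually comes down to comparing a checksum of the downloaded file against one published by the site. The following is a minimal sketch under stated assumptions: the excerpt does not say which checksum the site publishes, so MD5 via GNU `md5sum` is assumed here, and the file name `download.iso` and its contents are stand-ins created locally rather than a real download.

```shell
#!/bin/sh
# Work in a scratch directory so nothing outside it is touched.
workdir=$(mktemp -d)

# Stand-in for a downloaded file (hypothetical name and content).
printf 'example payload\n' > "$workdir/download.iso"

# Stand-in for the checksum file a site would publish next to the download.
(cd "$workdir" && md5sum download.iso > download.iso.md5)

# Verify: md5sum -c exits 0 and reports "download.iso: OK" on a match.
(cd "$workdir" && md5sum -c download.iso.md5)
verify_ok=$?

# Simulate a corrupted transfer and verify again; the exit status is non-zero.
printf 'garbage' >> "$workdir/download.iso"
(cd "$workdir" && md5sum -c download.iso.md5 > /dev/null 2>&1)
verify_bad=$?

echo "intact=$verify_ok corrupted=$verify_bad"
rm -rf "$workdir"
```

Checking the exit status rather than parsing the printed message keeps the check usable from scripts.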

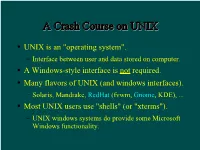

A Crash Course on UNIX

A Crash Course on UNIX

UNIX is an "operating system". Interface between user and data stored on computer. A Windows-style interface is not required. Many flavors of UNIX (and windows interfaces). Solaris, Mandrake, RedHat (fvwm, Gnome, KDE), ... Most UNIX users use "shells" (or "xterms"). UNIX windows systems do provide some Microsoft Windows functionality.

The Shell

A shell is a command-line interface to UNIX. Also many flavors, e.g. sh, bash, csh, tcsh. The shell provides commands and functionality beyond the basic UNIX tools. E.g., wildcards, shell variables, loop control, etc. For this tutorial, examples use tcsh in RedHat Linux running Gnome. Differences are minor for the most part...

Basic Commands

You need these to survive: ls, cd, cp, mkdir, mv. Typically these are UNIX (not shell) commands. They are actually programs that someone has written. Most commands such as these accept (or require) "arguments". E.g.
ls -a [show all files, incl. "dot files"]
mkdir ASTR688 [create a directory]
cp myfile backup [copy a file]
See the handout for a list of more commands.

A Word About Directories

Use cd to change directories. By default you start in your home directory. E.g. /home/dcr
Handy abbreviations:
Home directory: ~
Someone else's home directory: ~user
Current directory: .
Parent directory: ..

Shortcuts

To return to your home directory: cd
To return to the previous directory: cd -
In tcsh, with filename completion (on by default): Press TAB to complete filenames as you type. Press Ctrl-D to print a list of filenames matching what you have typed so far. Completion works with commands and variables too! Use ↑, ↓, Ctrl-A, & Ctrl-E to edit previous lines.
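The basic commands and directory shortcuts in this excerpt can be tried safely in a scratch directory. The sketch below uses plain POSIX sh rather than the tcsh the tutorial assumes. The names ASTR688, myfile and backup come from the excerpt, while the temporary directory and the `.hidden` file are assumptions added for illustration.

```shell
#!/bin/sh
# Start in a throwaway directory so no real files are affected.
base=$(mktemp -d)
cd "$base" || exit 1

mkdir ASTR688            # create a directory
touch myfile .hidden     # one ordinary file and one "dot file"
cp myfile backup         # copy a file
mv backup ASTR688/       # move the copy into the new directory

listing=$(ls -a)         # -a also lists dot files, plus . and ..

cd ASTR688 || exit 1
inside=$(pwd)
cd .. || exit 1          # ".." is the parent directory
parent=$(pwd)
cd - > /dev/null || exit 1   # "cd -" returns to the previous directory
previous=$(pwd)

printf '%s\n' "$listing"
```

After the last step `previous` holds the same path as `inside`, since `cd -` switches back to whichever directory was current before the most recent `cd`.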