Graph Theoretic Properties of the Darkweb

Manual De Usuario

QuickManual Start De Usuario Guide Premier ElitePremier Ricochet® 412/816/832 Enabled Kits IssueINS626 2 Premier Elite Ricochet Kits Quick Guide Contents Contents .................................................................... 2 Introduction ............................................................................ 3 Additional Items Required ........................................ 3 System Architecture ................................................. 3 System Design & Installation Considerations ..................... 4 Wiring the Control Panel .......................................... 4 Connecting AC Mains .............................................. 4 Design the Layout .................................................................. 5 Control Panel ........................................................... 5 System Keypads ...................................................... 5 Movement Sensors .................................................. 5 Door Contacts .......................................................... 5 Shock Sensors ......................................................... 5 External Sounder ..................................................... 5 Smoke Detector ....................................................... 5 Device Locations ................................................................... 5 Installation Sequence ............................................................ 6 Control Panel ........................................................... 6 Keypad Types ....................................................................... -

Download: Brill.Com/Brill-Typeface

Poets of Hope and Despair Russian History and Culture Editors-in-Chief Jeffrey P. Brooks (The Johns Hopkins University) Christina Lodder (University of Kent) Volume 21 The titles published in this series are listed at brill.com/rhc Poets of Hope and Despair The Russian Symbolists in War and Revolution, 1914-1918 Second Revised Edition By Ben Hellman This title is published in Open Access with the support of the University of Helsinki Library. This is an open access title distributed under the terms of the CC BY-NC-ND 4.0 license, which permits any non-commercial use, distribution, and reproduction in any medium, provided no alterations are made and the original author(s) and source are credited. Further information and the complete license text can be found at https://creativecommons.org/licenses/by-nc-nd/4.0/ The terms of the CC license apply only to the original material. The use of material from other sources (indicated by a reference) such as diagrams, illustrations, photos and text samples may require further permission from the respective copyright holder. Cover illustration: Angel with sword, from the cover of Voina v russkoi poezii (1915, War in Russian Poetry). Artist: Nikolai K. Kalmakov (1873-1955). Brill has made all reasonable efforts to trace all rights holders to any copyrighted material used in this work. In cases where these efforts have not been successful the publisher welcomes communications from copyright holders, so that the appropriate acknowledgements can be made in future editions, and to settle other permission matters. The Library of Congress Cataloging-in-Publication Data is available online at http://catalog.loc.gov Typeface for the Latin, Greek, and Cyrillic scripts: “Brill”. -

Remedial Action Work Plan for Ricochet Area Munitions Response Site in State Game Lands 211, Pennsylvania

FINAL REMEDIAL ACTION WORK PLAN FOR RICOCHET AREA MUNITIONS RESPONSE SITE IN STATE GAME LANDS 211, PENNSYLVANIA Contract No.: W912DR-09-D-0006 January 2014 Prepared for: U.S. Army Corps of Engineers Army National Guard Directorate Baltimore District Arlington, VA 22204 Baltimore, MD 21203 and Pennsylvania Army National Guard Department of Military and Veterans Affairs Fort Indiantown Gap Military Reservation Annville, PA 17003 Prepared by: : ® Weston Solutions, Inc. West Chester, PA 19380 13P-0287-5 FINAL REMEDIAL ACTION WORK PLAN FOR RICOCHET AREA MUNITIONS RESPONSE SITE IN STATE GAME LANDS 211, PENNSYLVANIA CONTRACT NO.: W912DR-09-D-0006 DELIVERY ORDER NO. 0009 Prepared For: U.S. ARMY CORPS OF ENGINEERS ARMY NATIONAL GUARD BALTIMORE DISTRICT DIRECTORATE 10 South Howard Street 111 South George Mason Drive Baltimore, MD 21203 Arlington, VA 22204 and PENNSYLVANIA ARMY NATIONAL GUARD Department of Military and Veterans Affairs Fort Indiantown Gap Military Reservation Annville, PA 17003 Prepared by: Weston Solutions, Inc. 1400 Weston Way West Chester, PA 19380 WESTON PROJECT NO.: 03886.551.009.3120 JANUARY 2014 FINAL REMEDIAL ACTION WORK PLAN FOR RICOCHET AREA MUNITIONS RESPONSE SITE IN STATE GAME LANDS 211, PENNSYLVANIA CONTRACT NO.: W912DR-09-D-0006 __________________________________________ 1/07/2014 John Gerhard Date Project Manager 1/07/2014 David Holland Date MEC Operations Manager 1/07/2014 Gregory Daloisio, PMP Date Program Manager Contract No: W912DR-09-D-0006, DO 0009 iii Revision 0 Project No.: 03886.551.009 1/7/2014 TABLE OF CONTENTS Section Page 1. INTRODUCTION.......................................................................................................... 1-1 1.1 AUTHORIZATION ............................................................................................. 1-1 1.2 PURPOSE AND SCOPE .................................................................................... -

Digital Communications Protocols

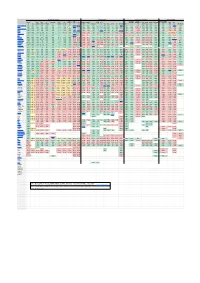

Security & Privacy Compatibility Features & Usability Sustainability E2E E2E Offline Local Decentralized Active Open Open On Anonymous E2E E2E Default Audit FIDO1 Desktop Mobile Apple AOSP Messages messaging File Audio Video Phoneless or Federated Open IETF [1] TLS Client Server Premise [2] Private Group [3] [4] / U2F Web Web Android iOS [5] Win macOS Linux *BSD Terminal MDM [6] [7] [8] Share Call Call [9] [10] Spec [11] Introduced Element/Matrix TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE PARTIAL TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE FALSE 2014 XMPP TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE PARTIAL [12]TRUE FALSE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE FALSE [13] TRUE TRUE TRUE TRUE TRUE TRUE TRUE 1999 NextCloud Talk TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE FALSE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE FALSE TRUE TRUE FALSE TRUE TRUE TRUE TRUE TRUE FALSE FALSE 2018 Wire TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE FALSE TRUE FALSE TRUE TRUE FALSE TRUE TRUE TRUE TRUE PARTIAL TRUE TRUE FALSE TRUE TRUE TRUE TRUE FALSE FALSE FALSE 2014 Jami TRUE TRUE TRUE N/A [14] N/A TRUE TRUE FALSE [15] TRUE FALSE N/A [16] FALSE FALSE TRUE TRUE TRUE TRUE TRUE TRUE TRUE FALSE TRUE FALSE FALSE TRUE TRUE TRUE TRUE TRUE PARTIAL PARTIAL[17] [18] 2016 Briar [19] TRUE TRUE TRUE N/A [20] N/A TRUE TRUE TRUE TRUE TRUE N/A [21] FALSE FALSE TRUE FALSE TRUE FALSE FALSE FALSE FALSE FALSE FALSE TRUE TRUE FALSE FALSE FALSE FALSE TRUE FALSE FALSE 2016 Tox TRUE TRUE TRUE