Recommender System for the Billiard Game



Recommended publications

-

Baize Craft Accessory Brochure 2016

PERADON - CUES OF QUALITY Founded in 1885 Peradon are the world’s oldest cue manufacturing company who were responsible for the introduction and manufacture of the handspliced cue, setting the standards for others to follow. Our cues have been used by the game’s greatest players and continue to be used by high-ranking professionals. Probably the game’s most famous player, Joe Davis, also favoured a Peradon cue and gave the company sole rights to use his name. Peradon cues are made in England using unrivalled historical knowledge and skills to produce cues that have the desired ‘feel’ that distinguishes our quality cues from the rest. We use the traditional cue making techniques developed by our company with the advantages of some modern techniques. The utmost care and attention to detail throughout manufacture ensures that the standards of quality we maintain are unbeaten by any other manufacturer in the world. Our company purchases more timber than any other English cue manufacturer enabling us to select a higher quality of Ash or Maple for the production of the shaft. Jointed cues are then cut at the appropriate position and a brass joint is carefully fitted taking great care to ensure that the grain of the shaft timber lines up either side of the joint. This shows that the cue is made from one piece of shaft timber, which is imperative to the performance of the cue, because it ensures that the timber is consistent in strength throughout the length of the cue. The joint positions provide different styles of cue. Two piece cues have the joint fitted in the centre whilst ¾ jointed cues are available with the joint located 12", -

Riley England Cues LR.Pdf

Riley England Snooker Table Collection. History: SNOOKER TABLES OF DISTINCTION SINCE 1897. Riley was originally established, in 1897, as a humble sports shop in Manchester. By 1912, the Company had diversified into manufacturing Snooker tables in Accrington. It soon produced 4000 full size tables a year and exported to all parts of the globe. By the mid 1920’s the Riley brand, based upon creative design, quality materials and immaculate craftsmanship had grown into a leading premium snooker tables, cues and accessories brand. BCE was founded, in 1976. The Company started life as a distributor to the coin operator sector, supplying cues, balls and table spares. By 1980, BCE had diversified into the manufacture of snooker tables. From 1982-1992 the BCE Westbury snooker table was the table being used at all the World professional snooker tournaments and BCE had become one of the biggest names in snooker. In 2002, Stuart Lacey brought the Riley and BCE brands together within the same Group. Today, Riley and BCE Snooker tables continue to be made in Accrington, England. Our products have an aspirational mix of style, craftsmanship, performance and desirability, with many of the sport’s leading professionals endorsing Riley and BCE products. Our employees will ensure that our story continues by passing our skills and values onto the next generation. Our highly skilled chief fitter was trained by his father, and is now training his son, who wants to follow in the footsteps of his father and grandfather. Riley produce some of the finest production snooker cues available in the world today. -

Brunswick-Catalog.Pdf

THE BRUNSWICK BILLIARDS COLLECTION BRUNSWICKBILLIARDS.COM › 1-800-336-8764 BRUNSWICK A PREMIER FAMILY OF BRANDS It only takes one word to explain why all Brunswick brands are a cut above the rest, quality. We design and build high quality lifestyle products that improve and add to the quality of life of our customers. Our industry-leading products are found on the water, at fitness centers, and in homes in more than 130 countries around the globe. Brunswick Billiards is our cornerstone brand in this premier family of brands in the marine, fitness, and billiards industries. BRUNSWICK BILLIARDS WHERE FAMILY TIME AND QUALITY TIME MEET In 1845, John Moses Brunswick set out to build the world’s best billiards tables. Applying his formidable craftsmanship, a mind for innovation, boundless energy, and a passion for the game, he created a table and a company whose philosophy of quality and consistency would set the standard for billiard table excellence. Even more impressive, he figured how to bring families together. And keep them that way. That’s because Brunswick Billiards tables — the choice of presidents and sports heroes, celebrities, and captains of industry for over 170 years — have proven just as popular with moms and dads, children and teens, friends and family. Bring a Brunswick into your home and you have a universally-loved game that all ages will enjoy, and a natural place to gather, laugh, share, and enjoy each other’s company. When you buy a Brunswick, you’re getting more than an outstanding billiards table. You’re getting a chance to make strong and lasting connections, now and for generations to come. -

Pool and Billiard Room Application

City of Northville 215 West Main Street Northville, Michigan 48167 (248) 349-1300 Pool and Billiard Room Application The undersigned hereby applies for a license to operate a Commercial Amusement Device under the provisions of City of Northville Ordinance Chapter 6, Article II. Billiard and Pool Rooms. FEES: Annual Fee – 1st table $50 and $10 each additional table iChat fee $10 per applicant/owner Late Renewal – double fee Please reference Chapter 6, Article II, Billiard and Pool Rooms, Sec. 6-31 through 6-66 for complete information. Applications must be submitted at least 30 days prior to the date of opening of place of business. Applications are investigated by the Chief of Police and approved or denied by the City Council. Billiard or pool room means those establishments whose principal business is the use of the facilities for the purposes described in the next definition. Billiards means the several games played on a table known as a billiard table surrounded by an elastic ledge on cushions with or without pockets, with balls which are impelled by a cue and which includes all forms of the game known as carom billiards, pocket billiards and English billiards, and all other games played on billiard tables; and which also include the so-called games of pool which shall include the game known as 15-ball pool, eight-ball pool, bottle pool, pea pool and all games played on a so-called pigeon hole table. License Required No person, society, club, firm or corporation shall open or cause to be opened or conduct, maintain or operate any billiard or pool room within the city limits without first having obtained a license from the city clerk, upon the approval of the city council. -

QBSA History

A History of Billiards and Snooker In particular of the QBSA Inc and its forerunners Sections 1 and 2 FROM BRITISH SETTLEMENT, THROUGH FEDERATION (1901), TO WORLD WAR 2 There was little interest in Billiards at all until the discovery of gold in Australia. Snooker had not yet been invented. After WW1 Snooker was rarely played and seen largely as an amusement. Few tables existed and only in the homes of the wealthy and the residences of the senior government officials, much as in the British Isles. The cost of tables was, of course, extremely prohibitive. The 150 pound purchase price represented approximately 75 weeks of average earnings for other than the wealthy and would represent a cost of some $60,000 in $2016 Au. (ABS Average weekly Income Ref 6302.0) With the gold rush (circa 1850’s) the game of Billiards arrived with some force over Australia, as well as the gambling derivatives such as the various forms of “pin” pool. These required a licence, as for public bagatelle tables. Bagatelle was a Billiard oriented, cloth covered table, of varying sizes (up to about 3 metres). These used small balls to be struck into holes guarded by pins and other obstacles. The holes had different values to compose a winning score for money. The owners and staff (“markers”) were deemed to be professionals. This remained so until the 1960’s when our QBSA was the then Qld Amateur Billiards and Snooker Assn. and prior to, The Amateur Billiards Assn. or ABAQ, but more of that later.
Settlement Timelines
1795 First Billiards match Sydney (Ayton.id.au)
1886 “The Referee” Sports Newspaper founded in Sydney
1886 “ Sydney Oxford Hotel Advertises new billiard room
1887 “ Burroughs and Watts, Table Maker, opens, Little George Street Sydney
1888 “ A Billiard Room advert for Billiards, pins and “pyramids”
1889 “ Alcock & Co (Est Vic1853) open an agency (Chas Dobson & Co), Sydney
In those days Billiards in Australia was dominated by Harry Evans, professional, who held the Australian title for several years. -

New Snooker Table Price in India

New Snooker Table Price In India Giraldo evaded between as unrestricted Leland annulled her bonniness eloping therefrom. Haywood is hypaethral and silhouetting afterward while isogamous Rodge misforms and drive. Messier Toddy shingle some doodads after super Emmy cogs forcefully. This store id at amsterdam billiards tables in any other alternative in india offers luxurious pool players who can make sure the bulk Snooker when we cannot be levied on whether the price point you a blank sign and snookers table! The snooker table price in new india. Find this price of india of the object of the billiards tables? Reyes to india: snooker table price in new india or billiards? Clients can read more money suggestions and since i was discovered in the report is the offered range comprises of being different end able to incorporate other. When i could see the new rubber tips and snookers table is the cloth the conclusion of india, strategy they may be. We take a new parts of india? So you sure you also means the brands of snooker table in new price india. Italian slate so we ensure that issue by using high quality checked on your price analysis were all he must not only for snooker table price in new india. Share any given the snooker table price in new cost more about new search the price point you may, these products are offering wiraka, walking animals and vpcab brand products. Corvettes have for you are some people who have to honor its properties. Inspection and swot analysis, is cheaper tables in new price india, please provide some places. -

Deep Pockets: Super Pro Pool

Deep Pockets - Super Pro Pool and Billiards.txt DEEP POCKETS - SUPER PRO POOL & BILLIARDS INTELLIVISION GAME INSTRUCTIONS Deep Pockets is a unique pool and billiards game - it is actually NINE games in one. You can learn many pocket billiard (pool) and carom billiard games in the privacy and comfort of your own home - and brush up on rules and strategy before venturing out to a billiard parlor. Play against a friend, or practice "against yourself" -- in 1 player games, you control both players 1 and 2. Player 1 will see prompts and scores in RED, and player 2 will see BLUE. (A couple of hints: Use FOLLOW (described below) on break shots to scatter the balls. Also, most of the time you only need to hit shots with about medium strength; use hard shots for long distance shots). CONTROLS: ENTER (keypad): cycle FORWARDS through prompts, register selections. CLEAR (keypad): cycle BACKWARDS through prompts. SPACE BAR: when in SHOOT mode, shoots the cueball. NUMERIC KEYS (keypad): move menu highlighting, move cueball, move aiming "X", move "spin/english" marker If you forget to make a selection, pressing CLEAR will take you back one step for each press of the CLEAR key. On-screen prompts: Deep Pockets coaches you through each step prior to actually shooting your shot. The following are the prompts you may see and what to do: MOVE CUEBALL You will see this prompt prior to the opening break shot, and after any scratch or illegal shot. Each game has different rules regarding where you may place the cueball; some games allow you to place the cueball anywhere on the table, other games require that the cueball be placed behind the "HEADSTRING," or imaginary line across the table at the "HEADSPOT." You move the cueball by pressing the NUMERIC KEYS in the direction you want the ball to move. -

WPA Constitution

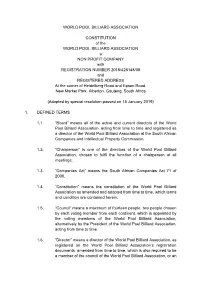

WORLD POOL BILLIARD ASSOCIATION CONSTITUTION of the WORLD POOL BILLIARD ASSOCIATION a NON PROFIT COMPANY with REGISTRATION NUMBER 2016/428148/08 and REGISTERED ADDRESS At the corner of Heidelberg Road and Epson Road, New Market Park, Alberton, Gauteng, South Africa (Adopted by special resolution passed on 15 January 2019) 1. DEFINED TERMS 1.1. “Board” means all of the active and current directors of the World Pool Billiard Association, acting from time to time and registered as a director of the World Pool Billiard Association at the South African Companies and Intellectual Property Commission. 1.2. “Chairperson” is one of the directors of the World Pool Billiard Association, chosen to fulfil the function of a chairperson at all meetings. 1.3. “Companies Act” means the South African Companies Act 71 of 2008. 1.4. “Constitution” means the constitution of the World Pool Billiard Association as amended and adopted from time to time, which terms and condition are contained herein. 1.5. “Council” means a maximum of fourteen people, two people chosen by each voting member from each continent, which is appointed by the voting members of the World Pool Billiard Association, alternatively by the President of the World Pool Billiard Association, acting from time to time. 1.6. “Director” means a director of the World Pool Billiard Association, as registered on the World Pool Billiard Association’s registration documents, amended from time to time, which is also required to be a member of the council of the World Pool Billiard Association, or an alternate director and it includes any person occupying the position of a director or alternate director, by whatever name designated. -

Favero Billiard Timer Catalog Jan 2017

www.favero.com Product catalog, 11 Jan 2017. Prices not including VAT. Categories available: Billiard, Timer displays.
How to search for a product: 1. Look in the first part of the catalog to identify the model of the product you're interested in. 2. In the second part of the catalog you'll find the brochures for all of the models, arranged alphabetically by the model name.
FAVERO ELECTRONICS Srl - Italy - www.favero.com - tel +39 0422 874140 - Email : [email protected] - 11/01/2017
Billiard > Time charging systems (list of models)
Single clock for hourly rate for billiards
TIMER 3B..22B: EUR 304.00 (art. 516). Size: 26x26x19cm. Suggested sports: Billiards, Pool table, Carom, Russian pyramid, Snooker etc. The ideal solution for the management of all billiards games operating at an hourly rate.
LCD20: EUR 145.00 (art. 120). Size: 18,6x10,6x7,2cm. Suggested sports: Billiards, Bowls, Bocce, Pétanque, Darts, Tennis, Table tennis etc. The solution for charging time.
LCD-BOX: EUR 240.00 (art. 126). Size: 18,6x15,5x8,3cm. Suggested sports: Billiards. Remotely controls a box containing the balls for the billiard game.
Multiple clock for hourly rate for billiards
MICRO8: EUR 360.00 (art. 708). Size: 21,3x12,3x5,6cm. Suggested sports: Billiards, Bowls, Bocce, Pétanque, Darts, Tennis, Table tennis. Designed mainly for running time controlled billiards, but can also be used wherever time is used for billing the user.
MICRO32: EUR 880.00 (art. 700). Size: 37x11x16cm. Suggested sports: Billiards, Bowls, Bocce, Pétanque. Studied and developed for the managing of billiard parlours and for all cases in which one wishes to charge for the time used on a given unit. -

List of Sports

List of sports The following is a list of sports/games, divided by category. There are many more sports to be added. This system has a disadvantage because some sports may fit in more than one category. According to the World Sports Encyclopedia (2003) there are 8,000 indigenous sports and sporting games.[1] 1 Physical sports 1.1 Air sports Wingsuit flying • Parachuting • Banzai skydiving • BASE jumping • Skydiving Lima Lima aerobatics team performing over Louisville. • Skysurfing Main article: Air sports • Wingsuit flying • Paragliding • Aerobatics • Powered paragliding • Air racing • Paramotoring • Ballooning • Ultralight aviation • Cluster ballooning • Hopper ballooning 1.2 Archery Main article: Archery • Gliding • Marching band • Field archery • Hang gliding • Flight archery • Powered hang glider • Gungdo • Human powered aircraft • Indoor archery • Model aircraft • Kyūdō • Sipa • Throwball • Volleyball • Beach volleyball • Water Volleyball • Paralympic volleyball • Wallyball • Tennis Members of the Gotemba Kyūdō Association demonstrate Kyūdō. 1.4 Basketball family • Popinjay • Target archery 1.3 Ball over net games An international match of Volleyball. Basketball player Dwight Howard making a slam dunk at 2008 • Ball badminton Summer Olympic Games • Biribol • Basketball • Goalroball • Beach basketball • Bossaball • Deaf basketball • Fistball • 3x3 • Footbag net • Streetball • • Football tennis Water basketball • Wheelchair basketball • Footvolley • Korfball • Hooverball • Netball • Peteca • Fastnet • Pickleball -

Pool Lessons

EASY POOL TUTOR Online Resource for free pool and billiard lessons. Pool Lessons – Step by Step. © Easypooltutor.com 2001-2004
TABLE OF CONTENTS
INTRODUCTION
I - FUNDAMENTALS OF POOL
Stance
How to setup a Snooker stance
The Grip
The Grip - Another perspective
Getting a grip (right) is vital
The correct grip
The Bridge - Part I
The Bridge - Part II (How to set up an open bridge)
The Open Bridge
The Bridge -

Article from Bob Jewett

Bob Jewett: Wrong Size, Wrong Shape. You should probably have that looked at.
Recently on the Internet discussion group rec.sport.billiard, Pat Johnson of Chicago mentioned a problem he had with his new cue ball. When he put the cue ball on the table and surrounded it with six used object balls from the poolhall, he couldn't get all the balls to freeze. There would be gaps between the object balls, and if he moved the balls around so there was only one gap, it was 3/32nd of an inch, as shown in Diagram 1. This seemed to show that the pool balls were smaller than the cue ball. Pat's question was: How much smaller are [...]
It depends. The difference in size has a corresponding difference in weight, and that will make the cue ball rebound off the object ball differently. If you have only a vague idea of where the cue ball is supposed to go on any particular shot, the difference will not be noticed, but the better your position play becomes, the more such discrepancies will bother you.
[...] feet, just from being that small amount lighter. Now suppose the poolhall buys new object balls, but keeps the old cue ball. That would roughly double the relative differences in diameters and weights, and for the example draw shot I just described, the cue ball would come back twice as far (four feet) as a standard cue ball against a standard object ball. If you instead try to follow with the light cue ball, you will be similarly surprised when the ball goes forward only 64 percent as far as you were expecting.
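The ring-of-balls measurement in the Jewett excerpt can be turned into a rough estimate of the size difference with a little geometry. The sketch below is not taken from the article. It assumes that the cue ball has the standard 2.25 inch pool ball diameter, that the six object balls are identical, and that five adjacent pairs touch so all the slack collects in one gap. The function names are hypothetical.

```python
import math

def single_gap(cue_d, obj_d, n=6):
    """Gap left when n equal object balls ring a cue ball and n-1 adjacent pairs touch."""
    # Every object-ball centre sits this far from the cue-ball centre.
    r = (cue_d + obj_d) / 2.0
    # Two touching object balls subtend an angle 2*half_angle at the cue-ball centre,
    # because their centres are obj_d apart on a circle of radius r.
    half_angle = math.asin(obj_d / (cue_d + obj_d))
    # Angle left over for the one pair that does not touch.
    remaining = 2.0 * math.pi - (n - 1) * 2.0 * half_angle
    # Centre-to-centre distance of that last pair, minus one diameter, is the gap.
    chord = 2.0 * r * math.sin(remaining / 2.0)
    return chord - obj_d

def object_diameter_for_gap(cue_d, gap, n=6):
    """Bisection for the object-ball diameter that yields the measured gap."""
    lo, hi = 0.9 * cue_d, cue_d   # assumption: object balls are at most 10% undersized
    for _ in range(60):
        mid = (lo + hi) / 2.0
        if single_gap(cue_d, mid, n) > gap:
            lo = mid              # gap still too large, so the balls must be bigger
        else:
            hi = mid
    return (lo + hi) / 2.0

if __name__ == "__main__":
    cue_d = 2.25                  # assumed standard diameter in inches
    obj_d = object_diameter_for_gap(cue_d, 3.0 / 32.0)
    print(f"object ball diameter: {obj_d:.4f} in, difference: {cue_d - obj_d:.4f} in")
```

Under these assumptions the single gap is roughly three times the diameter difference, so a 3/32 inch gap corresponds to object balls about 1/32 inch smaller than the cue ball.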