Econometric Analysis of International Financial Markets

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Financial Econometrics Lecture Notes

Financial Econometrics Lecture Notes Professor Doron Avramov The Hebrew University of Jerusalem & Chinese University of Hong Kong Introduction: Why do we need a course in Financial Econometrics? 2 Professor Doron Avramov, Financial Econometrics Syllabus: Motivation The past few decades have been characterized by an extraordinary growth in the use of quantitative methods in the analysis of various asset classes; be it equities, fixed income securities, commodities, and derivatives. In addition, financial economists have routinely been using advanced mathematical, statistical, and econometric techniques in a host of applications including investment decisions, risk management, volatility modeling, interest rate modeling, and the list goes on. 3 Professor Doron Avramov, Financial Econometrics Syllabus: Objectives This course attempts to provide a fairly deep understanding of such techniques. The purpose is twofold, to provide research tools in financial economics and comprehend investment designs employed by practitioners. The course is intended for advanced master and PhD level students in finance and economics. 4 Professor Doron Avramov, Financial Econometrics Syllabus: Prerequisite I will assume prior exposure to matrix algebra, distribution theory, Ordinary Least Squares, Maximum Likelihood Estimation, Method of Moments, and the Delta Method. I will also assume you have some skills in computer programing beyond Excel. MATLAB and R are the most recommended for this course. OCTAVE could be used as well, as it is a free software, and is practically identical to MATLAB when considering the scope of the course. If you desire to use STATA, SAS, or other comparable tools, please consult with the TA. 5 Professor Doron Avramov, Financial Econometrics Syllabus: Grade Components Assignments (36%): there will be two problem sets during the term. -

Recent Developments in Macro-Econometric Modeling: Theory and Applications

econometrics Editorial Recent Developments in Macro-Econometric Modeling: Theory and Applications Gilles Dufrénot 1,*, Fredj Jawadi 2,* and Alexander Mihailov 3,* ID 1 Faculty of Economics and Management, Aix-Marseille School of Economics, 13205 Marseille, France 2 Department of Finance, University of Evry-Paris Saclay, 2 rue du Facteur Cheval, 91025 Évry, France 3 Department of Economics, University of Reading, Whiteknights, Reading RG6 6AA, UK * Correspondence: [email protected] (G.D.); [email protected] (F.J.); [email protected] (A.M.) Received: 6 February 2018; Accepted: 2 May 2018; Published: 14 May 2018 Developments in macro-econometrics have been evolving since the aftermath of the Second World War. Essentially, macro-econometrics benefited from the development of mathematical, statistical, and econometric tools. Such a research programme has attained a meaningful success as the methods of macro-econometrics have been used widely over about half a century now to check the implications of economic theories, to model macroeconomic relationships, to forecast business cycles, and to help policymakers to make appropriate decisions. However, despite this progress, important methodological and interpretative questions in macro-econometrics remain (Stock 2001). Additionally, the recent global financial crisis of 2008–2009 and the subsequent deep and long economic recession signaled the end of the “great moderation” (early 1990s–2007) and suggested some limitations of the widely employed and by then dominant macro-econometric framework. One of these deficiencies was that current macroeconomic models have failed to predict this huge economic downturn, perhaps because they did not take into account indicators of contagion, systemic risk, and the financial cycle, or the inter-connectedness of asset markets, in particular housing, with the macro-economy. -

Advanced Financial Econometrics

MFE 2013-14 Trinity Term | Advanced Financial Econometrics Advanced Financial Econometrics Kevin Sheppard Department of Economics Oxford-Man Institute of Quantitative Finance Course Site: http://www.kevinsheppard.com/Advanced_Econometrics Weblearn Site: https://weblearn.ox.ac.uk/portal/site/socsci/sbs/mfe2012_2013/ttafe 1 Aims, objectives and intended learning outcomes 1.1 Basic aims This course aims to extend the core tools of Financial Econometrics I & II to some advanced forecasting problems. The first component covers techniques for working with many forecasting models – often referred to as data snoop- ing. The second component covers techniques for handling many predictors – including the case where the number of predictors is much larger than the number of data points available. 1.2 Specific objectives Part I (Week 1 – 4) Revisiting the bootstrap and extensions appropriate for time-series data • Technical trading rules and large number of forecasts • Formalized data-snooping robust inference • False-discovery rates • Detecting sets of models that are statistically indistinguishable • Part I (Week 5 – 8) Dynamic Factor Models and Principal Component Analysis • The Kalman Filter and Expectations-Maximization Algorithm • Partial Least Squares and the 3-pass Regression Filter • Regularized Reduced Rank Regression • 1.3 Intended learning outcomes Following class attendance, successful completion of assigned readings, assignments, both theoretical and empiri- cal, and recommended private study, students should be able to Detect superior performance using methods that are robust to data snooping • Produce and evaluate forecasts in for macro-financial time series in data-rich environments • 1 2 Teaching resources 2.1 Lecturing Lectures are provided by Kevin Sheppard. Kevin Sheppard is an Associate Professor and a fellow at Keble College. -

Information Product Fee Schedule

INFORMATION PRODUCT FEE SCHEDULE Applicable from: 1 January 2020 (Version 7.0) CONTENTS GENERAL ................................................................................................................................................ 1 DIRECT ACCESS FEES ............................................................................................................................... 2 REDISTRIBUTION LICENCE FEES ............................................................................................................... 3 Real Time Redistribution Licence Fees ............................................................................................................... 3 Delayed Redistribution Licence Fees .................................................................................................................. 4 WHITE LABEL FEES .................................................................................................................................. 6 PUBLIC DISPLAY FEES .............................................................................................................................. 8 DISPLAY USE FEES ................................................................................................................................... 9 NON-PROFESSIONAL FEES1 ................................................................................................................... 11 PAGE VIEW FEES*................................................................................................................................. -

An Introduction to Financial Econometrics

An introduction to financial econometrics Jianqing Fan Department of Operation Research and Financial Engineering Princeton University Princeton, NJ 08544 November 14, 2004 What is the financial econometrics? This simple question does not have a simple answer. The boundary of such an interdisciplinary area is always moot and any attempt to give a formal definition is unlikely to be successful. Broadly speaking, financial econometrics is to study quantitative problems arising from finance. It uses sta- tistical techniques and economic theory to address a variety of problems from finance. These include building financial models, estimation and inferences of financial models, volatility estimation, risk management, testing financial economics theory, capital asset pricing, derivative pricing, portfolio allocation, risk-adjusted returns, simulating financial systems, hedging strategies, among others. Technological invention and trade globalization have brought us into a new era of financial markets. Over the last three decades, enormous number of new financial products have been created to meet customers’ demands. For example, to reduce the impact of the fluctuations of currency exchange rates on a firm’s finance, which makes its profit more predictable and competitive, a multinational corporation may decide to buy the options on the future of foreign exchanges; to reduce the risk of price fluctuations of a commodity (e.g. lumbers, corns, soybeans), a farmer may enter into the future contracts of the commodity; to reduce the risk of weather exposures, amuse parks (too hot or too cold reduces the number of visitors) and energy companies may decide to purchase the financial derivatives based on the temperature. An important milestone is that in the year 1973, the world’s first options exchange opened in Chicago. -

Compliance Statement

Compliance Statement Administrator: Euronext Dublin Full name: Euronext Dublin (The Irish Stock Exchange plc) Relevant National Competent Authority: CBI Compliance Statement Euronext Indices Euronext Dublin Version notes latest version February 2020 Version Version notes Euronext Dublin 1 October 2019 Initial version February 2020 Updated version including all new indices since 2 initial version. 3 4 5 6 Note: addition of indices does not lead to a new version of this statement. The lists will be kept up to date.The most recent update of the list was issued 09-Jun-2020. Only changes in significant indices and cessations of indices are marked as new version of the Compliance statement. This publication is for information purposes only and is not a recommendation to engage in investment activities. This publication is provided “as is” without representation or warranty of any kind. Whilst all reasonable care has been taken to ensure the accuracy of the content, Euronext does not guarantee its accuracy or completeness. Euronext will not be held liable for any loss or damages of any nature ensuing from using, trusting or acting on information provided. All proprietary rights and interest in or connected with this publication shall vest in Euronext. No part of it may be redistributed or reproduced in any form without the prior written permission of Euronext. Euronext refers to Euronext N.V. and its affiliates. Information regarding trademarks and intellectual property rights of Euronext is located at terms of use euronext For further information in relation to Euronext Indices please contact: [email protected] (c) 2020 Euronext N.V. -

Financial Market Data for R/Rmetrics

Financial Market Data for R/Rmetrics Diethelm Würtz Andrew Ellis Yohan Chalabi Rmetrics Association & Finance Online R/Rmetrics eBook Series R/Rmetrics eBooks is a series of electronic books and user guides aimed at students and practitioner who use R/Rmetrics to analyze financial markets. A Discussion of Time Series Objects for R in Finance (2009) Diethelm Würtz, Yohan Chalabi, Andrew Ellis R/Rmetrics Meielisalp 2009 Proceedings of the Meielisalp Workshop 2011 Editor Diethelm Würtz Basic R for Finance (2010), Diethelm Würtz, Yohan Chalabi, Longhow Lam, Andrew Ellis Chronological Objects with Rmetrics (2010), Diethelm Würtz, Yohan Chalabi, Andrew Ellis Portfolio Optimization with R/Rmetrics (2010), Diethelm Würtz, William Chen, Yohan Chalabi, Andrew Ellis Financial Market Data for R/Rmetrics (2010) Diethelm W?rtz, Andrew Ellis, Yohan Chalabi Indian Financial Market Data for R/Rmetrics (2010) Diethelm Würtz, Mahendra Mehta, Andrew Ellis, Yohan Chalabi Asian Option Pricing with R/Rmetrics (2010) Diethelm Würtz R/Rmetrics Singapore 2010 Proceedings of the Singapore Workshop 2010 Editors Diethelm Würtz, Mahendra Mehta, David Scott, Juri Hinz R/Rmetrics Meielisalp 2011 Proceedings of the Meielisalp Summer School and Workshop 2011 Editor Diethelm Würtz III tinn-R Editor (2010) José Cláudio Faria, Philippe Grosjean, Enio Galinkin Jelihovschi and Ri- cardo Pietrobon R/Rmetrics Meielisalp 2011 Proceedings of the Meielisalp Summer Scholl and Workshop 2011 Editor Diethelm Würtz R/Rmetrics Meielisalp 2012 Proceedings of the Meielisalp Summer Scholl and Workshop 2012 Editor Diethelm Würtz Topics in Empirical Finance with R and Rmetrics (2013), Patrick Hénaff FINANCIAL MARKET DATA FOR R/RMETRICS DIETHELM WÜRTZ ANDREW ELLIS YOHAN CHALABI RMETRICS ASSOCIATION &FINANCE ONLINE Series Editors: Prof. -

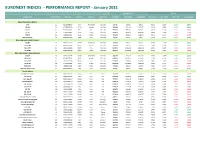

EURONEXT INDICES - PERFORMANCE REPORT - January 2021

EURONEXT INDICES - PERFORMANCE REPORT - January 2021 Closing Levels Returns Index Name Nr. of Constit. ISIN Code Mnemo Location Index Type 1/29/2021 12/31/2020 12/31/2020 Ann. 3 Year Ann. 5 Year 2021 YtD January 2021 Blue-Chip Indices (Price) AEX 25 NL0000000107 AEX Amsterdam Blue Chip 637,11 624,61 624,61 4,36% 8,12% 2,00% 2,00% BEL 20 20 BE0389555039 BEL20 Brussels Blue Chip 3623,60 3621,28 3621,28 -4,12% 0,78% 0,06% 0,06% CAC 40 40 FR0003500008 PX1 Paris Blue Chip 5399,21 5551,41 5551,41 -0,51% 4,10% -2,74% -2,74% PSI 20 18 PTING0200002 PSI20 Lisbon Blue Chip 4794,55 4898,36 4898,36 -5,40% -1,09% -2,12% -2,12% ISEQ 20 20 IE00B0500264 ISE20 Dublin Blue Chip 1231,18 1288,72 1288,72 2,39% 3,15% -4,46% -4,46% OBX Price index 25 NO0007035376 OBXP Oslo Blue Chip 471,53 469,24 469,24 1,291% 7,01% 0,49% 0,49% Blue-Chip Indices (Net Return) AEX NR 25 QS0011211156 AEXNR Amsterdam Blue Chip 1960,58 1922,11 1922,11 7,13% 11,22% 2,00% 2,00% BEL 20 NR 20 BE0389558066 BEL2P Brussels Blue Chip 7933,79 7924,32 7924,32 -1,98% 3,23% 0,12% 0,12% CAC 40 NR 40 QS0011131826 PX1NR Paris Blue Chip 11519,19 11831,28 11831,28 1,61% 6,44% -2,64% -2,64% PSI 20 NR 18 QS0011211180 PSINR Lisbon Blue Chip 9964,13 10179,88 10179,88 -2,44% 1,78% -2,12% -2,12% Blue-Chip Indices (Gross Return) AEX GR 25 QS0011131990 AEXGR Amsterdam Blue Chip 2257,47 2213,17 2213,17 7,64% 11,72% 2,00% 2,00% BEL 20 GR 20 BE0389557050 BEL2I Brussels Blue Chip 10425,53 10410,61 10410,61 -1,16% 4,15% 0,14% 0,14% CAC 40 GR 40 QS0011131834 PX1GR Paris Blue Chip 15034,98 15436,40 15436,40 2,48% -

Linear Cointegration of Nonlinear Time Series with an Application to Interest Rate Dynamics

Finance and Economics Discussion Series Divisions of Research & Statistics and Monetary Affairs Federal Reserve Board, Washington, D.C. Linear Cointegration of Nonlinear Time Series with an Application to Interest Rate Dynamics Barry E. Jones and Travis D. Nesmith 2007-03 NOTE: Staff working papers in the Finance and Economics Discussion Series (FEDS) are preliminary materials circulated to stimulate discussion and critical comment. The analysis and conclusions set forth are those of the authors and do not indicate concurrence by other members of the research staff or the Board of Governors. References in publications to the Finance and Economics Discussion Series (other than acknowledgement) should be cleared with the author(s) to protect the tentative character of these papers. Linear Cointegration of Nonlinear Time Series with an Application to Interest Rate Dynamics Barry E. Jones Binghamton University Travis D. Nesmith Board of Governors of the Federal Reserve System November 29, 2006 Abstract We derive a denition of linear cointegration for nonlinear stochastic processes using a martingale representation theorem. The result shows that stationary linear cointegrations can exhibit nonlinear dynamics, in contrast with the normal assump- tion of linearity. We propose a sequential nonparametric method to test rst for cointegration and second for nonlinear dynamics in the cointegrated system. We apply this method to weekly US interest rates constructed using a multirate lter rather than averaging. The Treasury Bill, Commercial Paper and Federal Funds rates are cointegrated, with two cointegrating vectors. Both cointegrations behave nonlinearly. Consequently, linear models will not fully replicate the dynamics of monetary policy transmission. JEL Classication: C14; C32; C51; C82; E4 Keywords: cointegration; nonlinearity; interest rates; nonparametric estimation Corresponding author: 20th and C Sts., NW, Mail Stop 188, Washington, DC 20551 E-mail: [email protected] Melvin Hinich provided technical advice on his bispectrum computer program. -

Final Report Amending ITS on Main Indices and Recognised Exchanges

Final Report Amendment to Commission Implementing Regulation (EU) 2016/1646 11 December 2019 | ESMA70-156-1535 Table of Contents 1 Executive Summary ....................................................................................................... 4 2 Introduction .................................................................................................................... 5 3 Main indices ................................................................................................................... 6 3.1 General approach ................................................................................................... 6 3.2 Analysis ................................................................................................................... 7 3.3 Conclusions............................................................................................................. 8 4 Recognised exchanges .................................................................................................. 9 4.1 General approach ................................................................................................... 9 4.2 Conclusions............................................................................................................. 9 4.2.1 Treatment of third-country exchanges .............................................................. 9 4.2.2 Impact of Brexit ...............................................................................................10 5 Annexes ........................................................................................................................12 -

An Econometric Model of the Yield Curve with Macroeconomic Jump Effects

An Econometric Model of the Yield Curve with Macroeconomic Jump Effects Monika Piazzesi∗ UCLA and NBER First Draft: October 15, 1999 This Draft: April 10, 2001 Abstract This paper develops an arbitrage-free time-series model of yields in continuous time that incorporates central bank policy. Policy-related events, such as FOMC meetings and releases of macroeconomic news the Fed cares about, are modeled as jumps. The model introduces a class of linear-quadratic jump-diffusions as state variables, which allows for a wide variety of jump types but still leads to tractable solutions for bond prices. I estimate a version of this model with U.S. interest rates, the Federal Reserve's target rate, and key macroeconomic aggregates. The estimated model improves bond pricing, especially at short maturities. The \snake-shape" of the volatility curve is linked to monetary policy inertia. A new monetary policy shock series is obtained by assuming that the Fed reacts to information available right before the FOMC meeting. According to the estimated policy rule, the Fed is mainly reacting to information contained in the yield-curve. Surprises in analyst forecasts turn out to be merely temporary components of macro variables, so that the \hump-shaped" yield response to these surprises is not consistent with a Taylor-type policy rule. ∗I am still looking for words that express my gratitude to Darrell Duffie. I would like to thank Andrew Ang, Michael Brandt, John Cochrane, Heber Farnsworth, Silverio Foresi, Lars Hansen, Ken Judd, Thomas Sargent, Ken Singleton, John Shoven, John Taylor, and Harald Uhlig for helpful suggestions; and Martin Schneider for extensive discussions. -

Econometric Theory

ECONOMETRIC THEORY MODULE – I Lecture - 1 Introduction to Econometrics Dr. Shalabh Department of Mathematics and Statistics Indian Institute of Technology Kanpur 2 Econometrics deals with the measurement of economic relationships. It is an integration of economic theories, mathematical economics and statistics with an objective to provide numerical values to the parameters of economic relationships. The relationships of economic theories are usually expressed in mathematical forms and combined with empirical economics. The econometrics methods are used to obtain the values of parameters which are essentially the coefficients of mathematical form of the economic relationships. The statistical methods which help in explaining the economic phenomenon are adapted as econometric methods. The econometric relationships depict the random behaviour of economic relationships which are generally not considered in economics and mathematical formulations. It may be pointed out that the econometric methods can be used in other areas like engineering sciences, biological sciences, medical sciences, geosciences, agricultural sciences etc. In simple words, whenever there is a need of finding the stochastic relationship in mathematical format, the econometric methods and tools help. The econometric tools are helpful in explaining the relationships among variables. 3 Econometric models A model is a simplified representation of a real world process. It should be representative in the sense that it should contain the salient features of the phenomena under study. In general, one of the objectives in modeling is to have a simple model to explain a complex phenomenon. Such an objective may sometimes lead to oversimplified model and sometimes the assumptions made are unrealistic. In practice, generally all the variables which the experimenter thinks are relevant to explain the phenomenon are included in the model.