GSW… Ethernet: The Ethernet Family of Protocols Are the Most Popular Local Area Network Protocols in the World

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


End-To-End Performance of 10-Gigabit Ethernet on Commodity Systems

End-to-End Performance of 10-Gigabit Ethernet on Commodity Systems. Justin (Gus) Hurwitz and Wu-chun Feng, Los Alamos National Laboratory.

Intel's network interface card for 10-Gigabit Ethernet (10GbE) allows individual computer systems to connect directly to 10GbE Ethernet infrastructures. Results from various evaluations suggest that 10GbE could serve in networks from LANs to WANs.

From its humble beginnings as shared Ethernet to its current success as switched Ethernet in local-area networks (LANs) and system-area networks and its anticipated success in metropolitan and wide area networks (MANs and WANs), Ethernet continues to evolve to meet the increasing demands of packet-switched networks. It does so at low implementation cost while maintaining high reliability and relatively simple (plug and play) installation, administration, and maintenance.

Although the recently ratified 10-Gigabit Ethernet standard differs from earlier Ethernet standards, primarily in that 10GbE operates only over fiber and only in full-duplex mode, the differences are largely superficial. More importantly, 10GbE does not make obsolete current investments in network infrastructure.

[...] such performance to bandwidth-hungry host applications via Intel's new 10GbE network interface card (or adapter). We implemented optimizations to Linux, the Transmission Control Protocol (TCP), and the 10GbE adapter configurations and performed several evaluations. Results showed extraordinarily higher throughput with low latency, indicating that 10GbE is a viable interconnect for all network environments.

Architecture of a 10GbE adapter. The world's first host-based 10GbE adapter, officially known as the Intel PRO/10GbE LR server adapter, introduces the benefits of 10GbE connectivity into LAN and system-area network environments, thereby accommodating the growing number of large-scale cluster systems and bandwidth-intensive -

40 and 100 Gigabit Ethernet Overview

Extreme Networks White Paper: 40 and 100 Gigabit Ethernet Overview. © 2011 Extreme Networks, Inc. All rights reserved. Do not reproduce.

Abstract. This paper takes a look at the main forces that are driving Ethernet bandwidth upwards. It looks at the standards and architectural practices adopted by the different segments, how the different speeds of Ethernet are used and how its popularity has resulted in an ecosystem spanning data centers, carrier networks, enterprise networks, and consumers.

Overview. There are many reasons driving the need for higher bandwidth Ethernet; however, the main reason is our insatiable appetite for content. The definition of content in itself has evolved over time: where once the majority of traffic on an Ethernet network may have been occasional file transfers, emails and the like, today technology is allowing us to push and receive richer content such as voice, video and high definition multimedia. Similarly, mechanisms for delivering content have evolved over time to reflect this demand. While there were a few technologies competing for LAN dominance in the early days of networks, Ethernet has become the clear choice.

Driving the Need for Speed: Ethernet in the Enterprise and Data Center. Data center virtualization, which includes storage and server virtualization, is all about the efficient use of resources. In the data center this is multifaceted. On the one hand data center managers are trying to bring power, cooling and space utilization under control, while -

Program of a Microcontroller-Based Industrial Serial Protocol Analyzer System (Mikrodenetleyicili Endüstriyel Seri Protokol Çözümleyici Sisteminin Programı)

YILDIZ TECHNICAL UNIVERSITY, GRADUATE SCHOOL OF NATURAL AND APPLIED SCIENCES. PROGRAM OF A MICROCONTROLLER-BASED INDUSTRIAL SERIAL PROTOCOL ANALYZER SYSTEM. Electronics and Communications Engineer Kemal GÜNSAY. MASTER'S THESIS prepared in the Electronics Program of the Department of Electronics and Communications Engineering, Graduate School of Natural and Applied Sciences. Thesis Advisor: Asst. Prof. Dr. Tuncay UZUN (YTÜ). İSTANBUL, 2009. CONTENTS: List of Abbreviations; List of Figures; List of Tables; Preface; Summary (in Turkish); Abstract; 1. Introduction -

Gigabit Ethernet - CH 3 - Ethernet, Fast Ethernet, and Gigabit Ethern

Switched, Fast, and Gigabit Ethernet, Chapter 3: Ethernet, Fast Ethernet, and Gigabit Ethernet Standards. [Figures are not included in this sample chapter]

This chapter discusses the theory and standards of the three versions of Ethernet around today: regular 10Mbps Ethernet, 100Mbps Fast Ethernet, and 1000Mbps Gigabit Ethernet. The goal of this chapter is to educate you as a LAN manager or IT professional about essential differences between shared 10Mbps Ethernet and these newer technologies. This chapter focuses on aspects of Fast Ethernet and Gigabit Ethernet that are relevant to you and doesn't get into too much technical detail. Read this chapter and the following two (Chapter 4, "Layer 2 Ethernet Switching," and Chapter 5, "VLANs and Layer 3 Switching") together. This chapter focuses on the different Ethernet MAC and PHY standards, as well as repeaters, also known as hubs. Chapter 4 examines Ethernet bridging, also known as Layer 2 switching. Chapter 5 discusses VLANs, some basics of routing, and Layer 3 switching. These three chapters serve as a precursor to the second half of this book, namely the hands-on implementation in Chapters 8 through 12. After you understand the key differences between yesterday's shared Ethernet and today's Switched, Fast, and Gigabit Ethernet, evaluating products and building a network with these products should be relatively straightforward. The chapter is split into seven sections:

• "Ethernet and the OSI Reference Model" discusses the OSI Reference Model and how Ethernet relates to the physical (PHY) and Media Access Control (MAC) layers of the OSI model. -

Ethernet and Wifi

Ethernet and WiFi. http://xkcd.com/466/ CSCI 466: Networks • Keith Vertanen • Fall 2011

Overview
• Multiple access networks
– Ethernet: long history; dominant wired technology
– 802.11: dominant wireless technology

Classic Ethernet
• Ethernet
– Named after the luminiferous ether through which electromagnetic radiation was once thought to propagate
– Carrier Sense, Multiple Access with Collision Detection (CSMA/CD)
– IEEE 802.3
[Photo: Robert Metcalfe, co-inventor of Ethernet]

Classic Ethernet
• Ethernet
– Xerox Ethernet standardized as IEEE 802.3 in 1983
– Xerox not interested in commercializing
– Metcalfe leaves and forms 3Com

Ethernet connectivity
• Shared medium
– All hosts hear all traffic on cable
– Hosts tapped the cable
– 2500m maximum length
– May include repeaters amplifying signal
– 10 Mbps bandwidth

Classic Ethernet cabling
[Photo captions: Cable after being "vampire" tapped. Thick Ethernet cable (yellow), 10BASE-5 transceivers, cable tapping tool (orange), 500m maximum length. Thin Ethernet cable (10BASE2) with BNC T-connector, 185m maximum length.]

Ethernet addressing
• Media Access Control address (MAC)
– 48-bit globally unique address
  • 281,474,976,710,656 possible addresses
  • Should last till 2100
  • e.g. 01:23:45:67:89:ab
– Address of all 1's is broadcast
  • FF:FF:FF:FF:FF:FF

Ethernet frame format
• Frame format
– Manchester encoded
– Preamble produces 10-MHz square wave
  • Allows clock synch between sender & receiver
– Pad to at least 64 bytes (collision detection)
[Figure: Ethernet / 802.3 frame layout. Preamble of alternating 0's and 1's (except SoF of 11), followed by 48-bit MAC addresses.]

Ethernet receivers
• Hosts listen to the medium
– Deliver to host:
  • Any frame with host's MAC address
  • All broadcast frames (all 1's)
  • Multicast frames (if subscribed to)
  • Or all frames if in promiscuous mode

MAC sublayer
• Media Access Control (MAC) sublayer
– Who goes next on a shared medium
– Ethernet hosts can sense if medium in use
– Algorithm for sending data: 1. -
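The addressing and receiver rules in the slides above can be sketched in a few lines of Python. This is only an illustrative sketch, not code from the course: the function names (`parse_mac`, `is_multicast`, `accepts`), the host address `02:00:00:00:00:01`, and the sample group address are assumptions chosen for the example. The multicast test relies on the standard convention that the least significant bit of the first octet marks a group address.

```python
# Illustrative sketch of Ethernet MAC addressing and the receive filter.
# All names and sample addresses here are hypothetical, chosen for the example.

BROADCAST = bytes([0xFF] * 6)   # FF:FF:FF:FF:FF:FF, the all-1's address
ADDRESS_SPACE = 2 ** 48         # number of distinct 48-bit addresses


def parse_mac(text: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' notation into 6 raw bytes."""
    parts = text.split(":")
    if len(parts) != 6:
        raise ValueError(f"expected 6 octets, got {len(parts)}")
    return bytes(int(part, 16) for part in parts)


def is_multicast(mac: bytes) -> bool:
    """Group addresses have the low bit of the first octet set."""
    return bool(mac[0] & 0x01)


def accepts(dest: bytes, own: bytes, groups=frozenset(), promiscuous=False) -> bool:
    """Decide whether a host delivers a frame addressed to `dest`."""
    if promiscuous:
        return True                 # deliver every frame on the medium
    if dest == own:
        return True                 # frame addressed to this host
    if dest == BROADCAST:
        return True                 # broadcast frames go to everyone
    return is_multicast(dest) and dest in groups   # subscribed multicast only


own = parse_mac("02:00:00:00:00:01")     # hypothetical host address
group = parse_mac("01:00:5e:00:00:01")   # sample multicast group address
print(f"{ADDRESS_SPACE:,} possible addresses")
print(accepts(BROADCAST, own), accepts(group, own), accepts(group, own, {group}))
```

Note that the slide's sample address `01:23:45:67:89:ab` has the low bit of its first octet set, so under this convention it would be classified as a group address rather than a single host's address.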

HP Switch Zl2 Modules Installation Guide

HP Switch zl2 Modules Installation Guide Part Number: 5998-5076a Published: July 2016 Edition: 2 © Copyright 2014, 2016 Hewlett Packard Enterprise Development LP The information contained herein is subject to change without notice. The only warranties for Hewlett Packard Enterprise products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Hewlett Packard Enterprise shall not be liable for technical or editorial errors or omissions contained herein. Confidential computer software. Valid license from Hewlett Packard Enterprise required for possession, use, or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license. Links to third-party websites take you outside the Hewlett Packard Enterprise website. Hewlett Packard Enterprise has no control over and is not responsible for information outside the Hewlett Packard Enterprise website. Acknowledgments Intel®, Itanium®, Pentium®, Intel Inside®, and the Intel Inside logo are trademarks of Intel Corporation in the United States and other countries. Microsoft® and Windows® are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries. Adobe® and Acrobat® are trademarks of Adobe Systems Incorporated. Java® and Oracle® are registered trademarks of -

Fact Sheet: Single-Pair Ethernet Trade Article

Fact sheet: Single Pair Ethernet. Matthias Fritsche (product manager, device connectivity) and Jonas Diekmann (technical editor), HARTING Technology Group, October 2016 – November 2016. Wireless technology and optical cable have often been heralded as the future transmission technology. However, simple twisted-pair cable based on plain old copper, often pronounced dead, is the most common transmission medium: simple, robust, and perhaps, with 100GBASE T1, soon to be incredibly fast as well. From the beginnings of Ethernet in the 1970s, via diverse multi-pair Ethernet developments with multiple parallel transmission paths, we are now apparently taking a step back to a single twisted pair. With a new protocol and new PHYs, transmission rates of up to 10 Gbit/s and PoDL capacities of up to 60 W are no longer a problem. Ultimately one pair is enough. When the team surrounding David Boggs and Robert Metcalfe developed Ethernet at the Xerox Palo Alto Research Center (PARC) in the 1970s, no one could foresee that this transmission method would develop so dynamically and dominate data transmission worldwide to this day. The original 10BASE5 Ethernet still used coax cable as the common medium. Today, next to wireless and optical cables, twisted-pair cable is the most frequently used transmission medium. Starting in 1990 with 10BASE-T, the data transmission rate of the IEEE standards increased by a factor of 10 approximately every 5 years, through 100BASE-TX and 1000BASE-T up to 10GBASE-T. This series could not be continued for the jump to 100GBASE-T; instead, four new IEEE standards were finalized in 2016. -

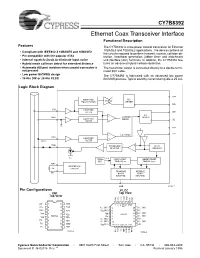

Ethernet Coax Transceiver Interface 1CY7B8392 Functional Description

CY7B8392 Ethernet Coax Transceiver Interface

Features
• Compliant with IEEE802.3 10BASE5 and 10BASE2
• Pin compatible with the popular 8392
• Internal squelch circuit to eliminate input noise
• Hybrid mode collision detect for extended distance
• Automatic AUI port isolation when coaxial connector is not present
• Low power BiCMOS design
• 16-Pin DIP or 28-Pin PLCC

Functional Description
The CY7B8392 is a low power coaxial transceiver for Ethernet 10BASE5 and 10BASE2 applications. The device contains all the circuits required to perform transmit, receive, collision detection, heartbeat generation, jabber timer and attachment unit interface (AUI) functions. In addition, the CY7B8392 features an advanced hybrid collision detection. The transmitter output is connected directly to a double terminated 50Ω cable. The CY7B8392 is fabricated with an advanced low power BiCMOS process. Typical standby current during idle is 25 mA.

[Figure 8392–1: Logic Block Diagram]

[Figures 8392–2 and 8392–3: Pin Configurations, PLCC and DIP top views. DIP pins: 1 CD+, 2 CD–, 3 RX+, 4 VEE, 5 VEE, 6 RX–, 7 TX+, 8 TX–, 9 HBE, 10 GND, 11 RR+, 12 RR–, 13 VEE, 14 RXI, 15 TXO, 16 CDS]

Cypress Semiconductor Corporation • 3901 North First Street • San Jose • CA 95134 • 408-943-2600 Document #: 38-02016 Rev. -

Automotive Ethernet Kirsten Matheus , Thomas Königseder Frontmatter More Information

Cambridge University Press 978-1-108-84195-5. Automotive Ethernet, Third Edition. Kirsten Matheus, Thomas Königseder. Frontmatter.

Learn about the latest developments in Automotive Ethernet technology and implementation with this fully revised third edition. Including 20% new material and greater technical depth, coverage is expanded to include:
• Detailed explanations of the new PHY technologies 10BASE-T1S (including multidrop) and 2.5, 5, and 10GBASE-T1
• Discussion of EMC interference models
• Descriptions of the new TSN standards for automotive use
• More on security concepts
• An overview of power saving possibilities with Automotive Ethernet
• Explanation of functional safety in the context of Automotive Ethernet
• An overview of test strategies
• The main lessons learned

Industry pioneers share the technical and non-technical decisions that have led to the success of Automotive Ethernet, covering everything from electromagnetic requirements and physical layer technologies, QoS, and the use of VLANs, IP, and service discovery, to network architecture and testing. The guide for engineers, technical managers, and researchers designing components for in-car electronics, and those interested in the strategy of introducing a new technology.

Kirsten Matheus is a communications engineer who is responsible for the in-vehicle networking strategy at BMW and who has established Ethernet-based communication as a standard technology within the automotive industry. She has previously worked for Volkswagen, NXP, and Ericsson. In 2019 she was awarded the IEEE-SA Standards Medallion "For vision, leadership, and contributions to developing automotive Ethernet networking." Thomas Königseder is CTO at Technica Engineering, supporting the smooth introduction of Ethernet-based systems for automotive customers. -

Experiment Number: 01 Title: Setup a Wired

Computer Networks Lab, T.E. Computer. Experiment Number: 01. Title: Setup a wired LAN.

PROBLEM STATEMENT: Set up a wired LAN of at least four computers using a Layer 2 switch and then an IP switch. This includes preparing the cable, testing the cable with a line tester, configuring the machines with IP addresses, testing with the PING utility, and demonstrating the captured PING packet traces using the Wireshark Packet Analyzer Tool.

OBJECTIVES:
1. To understand the structure and working of various networks, including the interconnecting devices used in them.
2. To get hands-on experience of making and testing cables.

TYPES OF NETWORK. Common examples of area network types are:
LAN - Local Area Network
WLAN - Wireless Local Area Network
WAN - Wide Area Network
MAN - Metropolitan Area Network
SAN - Storage Area Network, System Area Network, Server Area Network, or sometimes Small Area Network
CAN - Campus Area Network, Controller Area Network, or sometimes Cluster Area Network
PAN - Personal Area Network
DAN - Desk Area Network

LAN - Local Area Network. A LAN connects network devices over a relatively short distance. A networked office building, school, or home usually contains a single LAN, though sometimes one building will contain a few small LANs (perhaps one per room), and occasionally a LAN will span a group of nearby buildings.

MAN - Metropolitan Area Network. A network spanning a physical area larger than a LAN but smaller than a WAN, such as a city. A MAN is typically owned and operated by a single entity such as a government body or large corporation.

WAN - Wide Area Network. As the term implies, a WAN spans a large physical distance. -
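For the IP configuration and PING steps in the problem statement above, a short planning script can confirm that the chosen addresses sit on one subnet and list the host pairs to test. This is a hedged sketch only: the `192.168.1.0/24` network, the four host addresses, and the helper names `same_subnet` and `ping_pairs` are assumptions made for illustration and do not come from the lab handout. It uses Python's standard `ipaddress` and `itertools` modules and does not send any packets.

```python
# Sketch: sanity-check a four-host LAN addressing plan before running PING.
# The network, host addresses, and helper names are hypothetical examples.
import ipaddress
from itertools import permutations


def same_subnet(hosts, network):
    """Return True if every host address belongs to the given network."""
    net = ipaddress.ip_network(network)
    return all(ipaddress.ip_address(host) in net for host in hosts)


def ping_pairs(hosts):
    """List every ordered (source, destination) pair to test with PING."""
    return list(permutations(hosts, 2))


hosts = ["192.168.1.1", "192.168.1.2", "192.168.1.3", "192.168.1.4"]
print(same_subnet(hosts, "192.168.1.0/24"))
for source, destination in ping_pairs(hosts):
    print(f"from {source}: ping {destination}")
```

With four hosts this yields twelve ordered pairs, so each machine pings the other three; hosts that fail the subnet check would need a router (or corrected addressing) before PING can succeed across a plain Layer 2 switch.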

Faculty of Engineering

NEAR EAST UNIVERSITY, Faculty of Engineering, Department of Computer Engineering. LOCAL AREA NETWORKS. Graduation Project COM-400. Student: Azhar Ali Awan (992292). Supervisor: Dr. Jamal Fathi. Nicosia, 2002.

ACKNOWLEDGEMENTS. Foremost, I would like to give special thanks to my parents, who helped me in every phase of my life. They encouraged me in my studies as well as in my life. I am very thankful and grateful to my mother, whose prayers and love have encouraged me to make this day come true. It is only because of them that today I am capable of completing my degree. Secondly, I would like to thank my supervisor, Dr. Jamal Fathi, without whom this project would not have been possible and whose words of encouragement kept me working on it. His faith in me and my work and his invaluable knowledge of the subject made me take a keen interest in my project. He is an excellent teacher and advisor. I would also like to thank all my friends and my housemate, who helped me so much in doing my project. They encouraged me a lot in completing it, as it is not a single man's work. I want to thank them, as they contributed their time and provided me with very helpful suggestions.

CONTENTS
ABSTRACT
1. LOCAL AREA NETWORKS IN WORKPLACE
1.1 Overview
1.2 How and Why Network Exists?
1.3 Goals of Computer Networks
1.4 Classification of Computer Networks
1.5 Local Area Networks
1.6 Major Components of LANs
1.7 Types of Local Area Networks
1.7.1 Peer-to-Peer
1.7.2 Client-Server
1.8 Local Area Networks Connectivity Devices
1.8.1 Repeaters
1.8.2 Bridges
1.8.3 Routers
1.8.4 Brouters
1.8.5 Gateways
1.9 Local Area Networks (LAN) in the Workplace and its advantages
1. -

Ethernet Basics-1/5 BB-WP3 © 2002 by B&B Electronics

Ethernet Basics. BB-WP3 © 2002 by B&B Electronics. All rights reserved.

Regular, Fast, and Ultrafast Ethernet

10BASE5 (Thick Ethernet or Thicknet) is the original Institute of Electrical and Electronics Engineers (IEEE) 802.3 Ethernet.

10BASE2 (Thin Ethernet, Thinnet, or Cheapernet) resembles 10BASE5, was introduced to reduce the cost and complexity of installation, and became very popular for replacing Thick Ethernet as an office-cabling solution.

10BASE-T, a completely new physical layer, is IEEE 802.3i and uses two pairs of unshielded twisted-pair (UTP) telephone-type cable: one to transmit, the other to receive.

10BASE-F refers to three different fiber-optic specifications. 10BASE-FL (Fiber Link) replaces the 1987 Fiber Optic Inter-Repeater Link (FOIRL) specification and is backward compatible with existing FOIRL devices. It is the most popular 10-Mbps fiber standard. 10BASE-FP and 10BASE-FB are dead. P means passive; B means backbone.

Fast Ethernet 100BASE-T is 10BASE-T with the original Ethernet Media Access Controller (MAC) at 10 times the speed. It allows three physical-layer implementations, all part of IEEE 802.3u: 100BASE-TX, which has two pairs of Category 5 UTP or Type 1 STP cabling and is most popular for horizontal connections; 100BASE-FX, which has two strands of multimode fiber and is most popular for vertical or backbone connections; and 100BASE-T4, which has four pairs of Category 3 or better cabling and is not common.

Gigabit or 1000-Mb Ethernet is the 1998 IEEE 802.3z standard that includes the Gigabit Ethernet MAC and three physical layers.