Contentful Neural Conversation with On-Demand Machine Reading

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

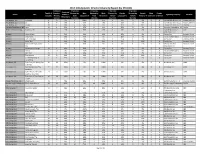

2017 DGA Episodic Director Diversity Report (By STUDIO)

2017 DGA Episodic Director Diversity Report (by STUDIO) Combined # Episodes # Episodes # Episodes # Episodes Combined Total # of Female + Directed by Male Directed by Male Directed by Female Directed by Female Male Female Studio Title Female + Signatory Company Network Episodes Minority Male Caucasian % Male Minority % Female Caucasian % Female Minority % Unknown Unknown Minority % Episodes Caucasian Minority Caucasian Minority A+E Studios, LLC Knightfall 2 0 0% 2 100% 0 0% 0 0% 0 0% 0 0 Frank & Bob Films II, LLC History Channel A+E Studios, LLC Six 8 4 50% 4 50% 1 13% 3 38% 0 0% 0 0 Frank & Bob Films II, LLC History Channel A+E Studios, LLC UnReal 10 4 40% 6 60% 0 0% 2 20% 2 20% 0 0 Frank & Bob Films II, LLC Lifetime Alameda Productions, LLC Love 12 4 33% 8 67% 0 0% 4 33% 0 0% 0 0 Alameda Productions, LLC Netflix Alcon Television Group, Expanse, The 13 2 15% 11 85% 2 15% 0 0% 0 0% 0 0 Expanding Universe Syfy LLC Productions, LLC Amazon Hand of God 10 5 50% 5 50% 2 20% 3 30% 0 0% 0 0 Picrow, Inc. Amazon Prime Amazon I Love Dick 8 7 88% 1 13% 0 0% 7 88% 0 0% 0 0 Picrow Streaming Inc. Amazon Prime Amazon Just Add Magic 26 7 27% 19 73% 0 0% 4 15% 1 4% 0 2 Picrow, Inc. Amazon Prime Amazon Kicks, The 9 2 22% 7 78% 0 0% 0 0% 2 22% 0 0 Picrow, Inc. Amazon Prime Amazon Man in the High Castle, 9 1 11% 8 89% 0 0% 0 0% 1 11% 0 0 Reunion MITHC 2 Amazon Prime The Productions Inc. -

Mplc Studio Liste 2020

MPLC STUDIO LISTE 2020 STUDIOS – PRODUZENTEN – FERNSEHSTATIONEN MPLC STUDIO LISTE 2020 STUDIOS – PRODUCTEURS – STATIONS DE TÉLÉVISION MPLC LISTA STUDIO 2020 CASE CINEMATOGRAFICHE – PRODUTTORI – STAZIONI TELEVISIVE MOTION PICTURE LICENSING COMPANY: DER WELTWEIT GRÖSSTE LIZENZGEBER IN DER FILM- & TV-BRANCHE. MPLC Switzerland GmbH Münchhaldenstrasse 10, Postfach CH-8034 Zürich MPLC ist der weltweit grösste Lizenzgeber für öffentliche Vorführrechte im non-theatrical Bereich und in über 30 Länder tätig. Ihre Vorteile + Einfache und unkomplizierte Lizenzierung + Event, Title by Title und Umbrella Lizenzen möglich + Deckung sämtlicher Majors (Walt Disney, Universal, Warner Bros., Sony, FOX, Paramount und Miramax) + Benutzung aller legal erworbenen Medienträger erlaubt + Von Dokumentar- und Independent-, über Animationsfilmen bis hin zu Blockbustern ist alles gedeckt + Für sämtliche Vorführungen ausserhalb des Kinos Index MAJOR STUDIOS EDUCATION AND SPECIAL INTEREST TV STATIONS SWISS DISTRIBUTORS MPLC TBT RIGHTS FOR NON THEATRICAL USE (OPEN AIR SHOW WITH FEE – FOR DVD/BLURAY ONLY) WARNER BROS. FOX DISNEY UNIVERSAL PARAMOUNT PRAESENS FILM FILM & VIDEO PRODUCTION GEHRIG FILM GLOOR FILM HÄSELBARTH FILM SCHWEIZ KOTOR FILM LANG FILM PS FILM SCHWEIZER FERNSEHEN (SRF) MIRAMAX SCM HÄNSSLER FIRST HAND FILMS STUDIO 100 MEDIA VEGA FILM COCCINELLE FILM PLACEMENT ELITE FILM AG (ASCOT ELITE) CONSTANTIN FILM CINEWORX Label Apollo Media Apollo Media Distribution Gmbh # Apollo Media Nova Gmbh 101 Films Apollo ProMedia 12 Yard Productions Apollo ProMovie 360Production -

Audiobooks Biography Nonfiction

Audiobooks 1st case / James Patterson and Chris Tebbetts.","AUD CD MYS PATTERSON" "White fragility : why it's so hard for white people to talk about racism / Robin DiAngelo.","AUD CD 305.8 DIA" "The guest list : a novel / Lucy Foley, author of The hunting party.","AUD CD FIC FOLEY" Biography "Beyond valor : a World War II story of extraordinary heroism, sacrificial love, and a race against time / Jon Erwin and William Doyle.","B ERWIN" "Chasing the light : writing, directing, and surviving Platoon, Midnight Express, Scarface, Salvador, and the movie game / Oliver Stone.","B STONE" "Disloyal : a memoir : the true story of the former personal attorney to the President of the United States / Michael Cohen.","B COHEN" Horse crazy : the story of a woman and a world in love with an animal / Sarah Maslin Nir.","B NIR" "Melania and me : the rise and fall of my friendship with the First Lady / Stephanie Winston Wolkoff.","B TRUMP" "Off the record : my dream job at the White House, how I lost it, and what I learned / Madeline Westerhout.","B WESTERHOUT" "A knock at midnight : a story of hope, justice, and freedom / Brittany K. Barnett.","B BARNETT" "The meaning of Mariah Carey / Mariah Carey with Michaela Angela Davis.","B CAREY" "Solutions and other problems / Allie Brosh.","B BROSH" "Doc : the life of Roy Halladay / Todd Zolecki.","B HALLADAY" Nonfiction "Caste : the origins of our discontents / Isabel Wilkerson.","305.512 WIL" "Children of Ash and Elm : a history of the Vikings / Neil Price.","948.022 PRI" "Chiquis keto : the 21-day starter kit for taco, tortilla, and tequila lovers / Chiquis Rivera with Sarah Koudouzian.","641.597 RIV" "Clean : the new science of skin / James Hamblin.","613 HAM" "Do it afraid : embracing courage in the face of fear / Joyce Meyer.","248.8 MEY" "The end of gender : debunking the myths about sex and identity in our society / Dr. -

STEVE YEDLIN, ASC Director of Photography Yedlin.Net PROJECTS Partial List DIRECTORS STUDIOS/PRODUCERS UNT

STEVE YEDLIN, ASC Director of Photography yedlin.net PROJECTS Partial List DIRECTORS STUDIOS/PRODUCERS UNT. BENOIT BLANC SEQUEL Rian Johnson T-STREET / NETFLIX Ram Bergman, Tom Karnowski KNIVES OUT Rian Johnson T-STREET / MRC / LIONSGATE Ram Bergman STAR WARS: THE LAST JEDI Rian Johnson LUCASFILM / DISNEY Ram Bergman, Kathleen Kennedy SAN ANDREAS Brad Peyton NEW LINE CINEMA / WARNER BROS. Rob Cowan, Beau Flynn DANNY COLLINS Dan Fogelman INIMITABLE PICTURES Monica Levinson, Denise Di Novi Jessie Nelson CARRIE Kimberly Peirce MGM / Miles Dale, Kevin Misher GIRL MOST LIKELY Robert Pulcini LIONSGATE / Pam Hirsch, Alix Official Selection – Toronto Film Festival Shari Springer Berman Madigan Steve Golin, Celine Rattray, Trudie Styler LOOPER Rian Johnson ENDGAME ENTERTAINMENT Official Selection – Toronto Film Festival Ram Bergman FATHER OF INVENTION Trent Cooper TRIGGER STREET Danny Stillman, Beau Rogers Jonathan Krane THE OTHER WOMAN Don Roos IFC FILMS Marc Platt, Carol Cuddy THE WITNESS: FROM THE Adam Pertofsky HBO BALCONY OF RM. 306 R. Stephan Mohammed Nomination, Best Documentary Short – Margaret Hyde Academy Award TENURE Mike Million Jared Goldman, Tai Duncan Paul Schiff AMERICAN VIOLET Tim Disney Mark Donadio, Bill Haney BROTHERS BLOOM Rian Johnson Ram Bergman, James Stern Wendy Japhet LOVELY BY SURPRISE Kirt Gunn RADICAL MEDIA Winner, New American Special Jury Prize – Michael Hilliard Seattle Film Festival ALTERED Eduardo Sánchez UNIVERSAL / ROGUE / Gregg Hale UNKNOWN Simon Brand WEINSTEIN / Rick Lashbrook THE DEAD ONE Brian Cox Susan Rodgers, Larry Rattner CONVERSATIONS WITH Hans Canosa FABRICATION FILMS OTHER WOMEN Ram Bergman BRICK Rian Johnson FOCUS FEATURES Winner, Special Grand Jury Prize – Ram Bergman, Mark Mathis Sundance Film Festival Nomination, John Cassavetes Award – Ind. -

MPLC Studioliste Juli21-2.Pdf

MPLC ist der weltweit grösste Lizenzgeber für öffentliche Vorführrechte im non-theatrical Bereich und in über 30 Länder tätig. Ihre Vorteile + Einfache und unkomplizierte Lizenzierung + Event, Title by Title und Umbrella Lizenzen möglich + Deckung sämtlicher Majors (Walt Disney, Universal, Warner Bros., Sony, FOX, Paramount und Miramax) + Benutzung aller legal erworbenen Medienträger erlaubt + Von Dokumentar- und Independent-, über Animationsfilmen bis hin zu Blockbustern ist alles gedeckt + Für sämtliche Vorführungen ausserhalb des Kinos Index MAJOR STUDIOS EDUCATION AND SPECIAL INTEREST TV STATIONS SWISS DISTRIBUTORS MPLC TBT RIGHTS FOR NON THEATRICAL USE (OPEN AIR SHOW WITH FEE – FOR DVD/BLURAY ONLY) WARNER BROS. FOX DISNEY UNIVERSAL PARAMOUNT PRAESENS FILM FILM & VIDEO PRODUCTION GEHRIG FILM GLOOR FILM HÄSELBARTH FILM SCHWEIZ KOTOR FILM LANG FILM PS FILM SCHWEIZER FERNSEHEN (SRF) MIRAMAX SCM HÄNSSLER FIRST HAND FILMS STUDIO 100 MEDIA VEGA FILM COCCINELLE FILM PLACEMENT ELITE FILM AG (ASCOT ELITE) CONSTANTIN FILM CINEWORX DCM FILM DISTRIBUTION (SCHWEIZ) CLAUSSEN+PUTZ FILMPRODUKTION Label Anglia Television Animal Planet Productions # Animalia Productions 101 Films Annapurna Productions 12 Yard Productions APC Kids SAS 123 Go Films Apnea Film Srl 20th Century Studios (f/k/a Twentieth Century Fox Film Corp.) Apollo Media Distribution Gmbh 2929 Entertainment Arbitrage 365 Flix International Archery Pictures Limited 41 Entertaiment LLC Arclight Films International 495 Productions ArenaFilm Pty. 4Licensing Corporation (fka 4Kids Entertainment) Arenico Productions GmbH Ascot Elite A Asmik Ace, Inc. A Really Happy Film (HK) Ltd. (fka Distribution Workshop) Astromech Records A&E Networks Productions Athena Abacus Media Rights Ltd. Atlantic 2000 Abbey Home Media Atlas Abot Hameiri August Entertainment About Premium Content SAS Avalon (KL Acquisitions) Abso Lutely Productions Avalon Distribution Ltd. -

Classes of 2019 and 2020 Hiring Organizations

HIRING ORGANIZATIONS CLASSES OF 2019 AND 2020 HIRING ORGANIZATIONS RECENT EMPLOYERS The following firms hired at least one UCLA Anderson student during the 2018–2019 school year (combining both full-time employment and summer internships): A Breakwater Investment Edison International Hewlett Packard Enterprise Lionsgate Entertainment Inc . O A G. Spanos Companies Management Education Pioneers Company Lionstone Investments Openpath Security Inc . A+E Television Networks ByteDance Edwards Lifesciences Hines Liquid Stock Oracle Corp . Accenture C Electronic Arts Inc . HomeAway Logitech P ACHS Califia Farms Honda R&D Americas Inc . Pabst Brewing Company Engine Biosciences L’Oreal USA Adobe Systems Inc . Cambridge Associates LLC Honeywell International Inc . Pacific Coast Capital Partners Epson America Inc . Los Angeles Chargers Advisor Group Capital Group Houlihan Lokey Palm Tree LLC Los Angeles Football Club Age of Learning Inc . Capstone Equities Esports One HOVER Inc . Pandora Media M Agoda Caruso EVgo Hulu Paramount Pictures M&T Bank Corporation Altair Engineering Inc . Casa Verde Capital Evolus I Park Lane Investment Bank Alteryx Inc . Cedars-Sinai Health System Experian Illumina Inc . Mailchimp Phoenix Suns Amazon Inc . Cenocore Inc . EY-Parthenon IMAX Marble Capital PIMCO American Airlines Inc . Century Park Capital Partners F InnoVision Solutions Group March Capital Partners Pine Street Group Amgen Inc . Charles Schwab FabFitFun Instacart Marvell Technology Group Ltd . Piper Jaffray & Co . Anaplan Checchi Capital Advisers Facebook Inc . Intel Corporation MarVista Entertainment PlayVS Apple Inc . Cisco Systems Inc . Intuit Inc . Mattel Inc . PLG Ventures Falabella Retail S .A . Applied Ventures The Clorox Company Intuitive Surgical Inc . McKinsey & Company The Pokemon Company Fandango Arc Capital Partners LLC Clutter Inversiones PS Medallia Inc . -

Media Release

MEDIA RELEASE Heineken® reveals Spectre TV ad starring Daniel Craig as James Bond, plus world’s first ever selfie from space Amsterdam, 21 September 2015 - As part of its integrated global Spectre campaign, Heineken® has unveiled a new TV ad featuring Daniel Craig as James Bond, in a high speed boat chase. In addition, it also announced an exciting digital campaign featuring the world’s first ever selfie from space, dubbed the ‘Spyfie’. Heineken®’s Spectre campaign is the brand’s largest global marketing platform of 2015. Spectre, the 24th James Bond adventure, from Albert R. Broccoli’s EON Productions, Metro-Goldwyn-Mayer Studios, and Sony Pictures Entertainment, will be released in the UK on October 26 and in the US on November 6. The Heineken® TVC will be launched mobile-first via Facebook, and will be shown on TV and cinema screens worldwide 24 hours later. Heineken® is the only Spectre partner who has created a TVC starring Daniel Craig. Heineken®’s TVC uses Spectre cinematographers and stuntmen to ensure the action sequences are authentically Bond. The added twist involves a young woman, Zara, who accidentally becomes involved in a high-speed boat chase where she helps the world’s favourite spy to save the day. Heineken®’s Spectre TVC: http://youtu.be/vuMvhJaWIUg For its digital Spectre campaign, Heineken® will once again be pushing the boundaries of modern technology, and will be taking the world’s first ever selfie from space. For the ‘Spyfie’, Heineken® has partnered with Urthecast to take ultra HD imagery using its camera on the Deimos satellite, currently in orbit 600km above the Earth’s surface. -

Following Is a Listing of Public Relations Firms Who Have Represented Films at Previous Sundance Film Festivals

Following is a listing of public relations firms who have represented films at previous Sundance Film Festivals. This is just a sample of the firms that can help promote your film and is a good guide to start your search for representation. 11th Street Lot 11th Street Lot Marketing & PR offers strategic marketing and publicity services to independent films at every stage of release, from festival premiere to digital distribution, including traditional publicity (film reviews, regional and trade coverage, interviews and features); digital marketing (social media, email marketing, etc); and creative, custom audience-building initiatives. Contact: Lisa Trifone P: 646.926-4012 E: [email protected] www.11thstreetlot.com 42West 42West is a US entertainment public relations and consulting firm. A full service bi-coastal agency, 42West handles film release campaigns, awards campaigns, online marketing and publicity, strategic communications, personal publicity, and integrated promotions and marketing. With a presence at Sundance, Cannes, Toronto, Venice, Tribeca, SXSW, New York and Los Angeles film festivals, 42West plays a key role in supporting the sales of acquisition titles as well as launching a film through a festival publicity campaign. Past Sundance Films the company has represented include Joanna Hogg’s THE SOUVENIR (winner of World Cinema Grand Jury Prize: Dramatic), Lee Cronin’s THE HOLE IN THE GROUND, Paul Dano’s WILDLIFE, Sara Colangelo’s THE KINDERGARTEN TEACHER (winner of Director in U.S. competition), Maggie Bett’s NOVITIATE -

Nysba Fall/Winter 2018 | Vol

NYSBA FALL/WINTER 2018 | VOL. 29 | NO. 3 Entertainment, Arts and Sports Law Journal A publication of the Entertainment, Arts and Sports Law Section of the New York State Bar Association www.nysba.org/EASL Table of Contents Page Greetings from Lawyersville, by Barry Skidelsky, EASL Chair .......................................................................................4 Editor’s Note .......................................................................................................................................................................6 Letter from Governor Andrew M. Cuomo......................................................................................................................7 Letter from Senator Kirsten E. Gillibrand .......................................................................................................................8 Pro Bono Update .................................................................................................................................................................9 Law Student Initiative Writing Contest .........................................................................................................................12 The Phil Cowan Memorial/BMI Scholarship Writing Competition .........................................................................13 NYSBA Guidelines for Obtaining MCLE Credit for Writing .....................................................................................15 Brave New World: Unsilencing the Authenticators .....................................................................................................16 -

Deal Making and Business Development in Media Mktg

DEAL MAKING AND BUSINESS DEVELOPMENT IN MEDIA MKTG-GB.2123.30 Professor Joshua W. Walker E-Mail Address: [email protected] Office Hours: By appointment Course Schedule: Fall 2017 – Mondays, 6 p.m. to 9 p.m. – September 25 through November 6 Room: KMC ___ COURSE OVERVIEW: This course is designed to provide students with an understanding of the business development and deal-making process in the media space, using television content as the primary example for what goes into cutting a deal. The course will explore the deal process from the perspective of the different players in media, focusing on how each player looks to maximize value. Students will learn the process of striking a deal, from corporate planning, to business development, to the negotiation process and contractual agreements, through to deal implementation. The process will be evaluated in the context of the factors that play into reaching an agreement, such as exclusivity, windowing, multi-platform rights and timing. Students will learn about negotiations strategies for maximizing value in content deals, identifying common issues in the deal process and effective paths to reaching resolution and striking a deal. COURSE OBJECTIVES: To understand the basics of the process for making a deal in the media industry To appreciate the factors that play into maximizing value through the deal process, including understanding the relative position of the players in media To learn negotiating strategies and how to navigate the business development and deal- making process COURSE REQUIREMENTS: Class attendance and participation (15%) Written analysis of case studies (35%) Final project (50%) READINGS AND COURSE MATERIALS: Text: The Business of Media Distribution: Monetizing Film, TV, and Video Content in an Online World, Jeffrey C. -

Title # of Eps. # Episodes Male Caucasian % Episodes Male

2020 DGA Episodic TV Director Inclusion Report (BY SHOW TITLE) # Episodes % Episodes # Episodes % Episodes # Episodes # Episodes # Episodes # Episodes # of % Episodes % Episodes % Episodes % Episodes Male Male Female Female Title Male Males of Female Females of Network Studio / Production Co. Eps. Male Caucasian Males of Color Female Caucasian Females of Color Unknown/ Unknown/ Unknown/ Unknown/ Caucasian Color Caucasian Color Unreported Unreported Unreported Unreported 100, The 12 6.0 50.0% 2.0 16.7% 3.0 25.0% 1.0 8.3% 0.0 0.0% 0.0 0.0% CW Warner Bros Companies Paramount Pictures 13 Reasons Why 10 6.0 60.0% 2.0 20.0% 2.0 20.0% 0.0 0.0% 0.0 0.0% 0.0 0.0% Netflix Corporation Paramount 68 Whiskey 10 7.0 70.0% 0.0 0.0% 3.0 30.0% 0.0 0.0% 0.0 0.0% 0.0 0.0% Network CBS Companies 9-1-1 18 4.0 22.2% 6.0 33.3% 5.0 27.8% 3.0 16.7% 0.0 0.0% 0.0 0.0% FOX Disney/ABC Companies 9-1-1: Lone Star 10 5.0 50.0% 2.0 20.0% 3.0 30.0% 0.0 0.0% 0.0 0.0% 0.0 0.0% FOX Disney/ABC Companies Absentia 6 0.0 0.0% 0.0 0.0% 6.0 100.0% 0.0 0.0% 0.0 0.0% 0.0 0.0% Amazon Sony Companies Alexa & Katie 16 3.0 18.8% 3.0 18.8% 5.0 31.3% 5.0 31.3% 0.0 0.0% 0.0 0.0% Netflix Netflix Alienist: Angel of Darkness, Paramount Pictures The 8 5.0 62.5% 0.0 0.0% 3.0 37.5% 0.0 0.0% 0.0 0.0% 0.0 0.0% TNT Corporation All American 16 4.0 25.0% 8.0 50.0% 2.0 12.5% 2.0 12.5% 0.0 0.0% 0.0 0.0% CW Warner Bros Companies All Rise 20 10.0 50.0% 2.0 10.0% 5.0 25.0% 3.0 15.0% 0.0 0.0% 0.0 0.0% CBS Warner Bros Companies Almost Family 12 6.0 50.0% 0.0 0.0% 3.0 25.0% 3.0 25.0% 0.0 0.0% 0.0 0.0% FOX NBC Universal Electric Global Almost Paradise 3 3.0 100.0% 0.0 0.0% 0.0 0.0% 0.0 0.0% 0.0 0.0% 0.0 0.0% WGN America Holdings, Inc. -

SPA Updated Syllabus

Sony Pictures Animation - Marshall MBA Project 2017 Project Faculty: Violina Rindova Project Description Sony Pictures Animation was founded in 2002, and is a division of the Sony Pictures Motion Picture Group. Our mission is to produce a wide variety of animated family entertainment for audiences around the world. The studio is best known for Surfs Up (which received an Academy Award® nomination for Best Animated Feature Film), Cloudy with a Chance of Meatballs, and the Hotel Transylvania franchise. We recently released The Emoji Movie and we are currently working on an inspirational film called The Star which releases in November 2017, Hotel Transylvania 3 which release in July 2018; and a Spider-Man animated feature which releases in December 2018. The main focus of our efforts to date has been the production of feature length animated motion pictures for theatrical release. Because of the lengthy production process for animation, we generally release two motion pictures each year. But, our business is challenged in a variety of ways: overall theatrical motion picture attendance has been falling and DVD revenue is 50% of what it was ten years ago; our business is dominated by motion pictures from Disney, Pixar and Illumination which makes it difficult to find good, non-competitive release dates as well as compete against Disney and Universal’s ability to promote their movies across multiple outlets, including theme parks, cruise ships and retail stores; because of the decline of DVD’s, theatrical performance has become more important and motion pictures are much more dependent on favorable reviews to get people out of their houses; and, audience viewing patterns are shifting, especially to streaming and other formers of non-theatrical viewing.