Automated Unit Test Generation During Software Development: a Controlled Experiment and Think-Aloud Observations



Recommended publications


Parasoft Static Application Security Testing (SAST) for .Net - C/C++ - Java Platform

Parasoft® dotTEST™ / Jtest (for Java) / C/C++test is an integrated Development Testing solution for automating a broad range of testing best practices proven to improve development team productivity and software quality. dotTEST / Java Test / C/C++ Test also seamlessly integrates with Parasoft SOAtest as an option, which enables end-to-end functional and load testing for complex distributed applications and transactions.

Capabilities Overview

STATIC ANALYSIS
● Broad support for languages and standards: Security | C/C++ | Java | .NET | FDA | Safety-critical
● Static analysis tool industry leader since 1994
● Simple out-of-the-box integration into your SDLC
● Prevent and expose defects via multiple analysis techniques
● Find and fix issues rapidly, with minimal disruption
● Integrated with Parasoft's suite of development testing capabilities, including unit testing, code coverage analysis, and code review

CODE COVERAGE ANALYSIS
● Track coverage during unit test execution and merge the data with coverage captured during functional and manual testing in Parasoft Development Testing Platform to measure true test coverage.
● Integrate coverage data with static analysis violations, unit testing results, and other testing practices in Parasoft Development Testing Platform for a complete view of the risk associated with your application
● Achieve test traceability to understand the impact of change, focus testing activities based on risk, and meet compliance …

Email: [email protected] Website

Email: [email protected] Website: http://chrismatech.com

Experienced software and systems engineer who has successfully deployed custom & industry-standard embedded, desktop and networked systems for commercial and DoD customers. Delivered systems operate on airborne, terrestrial, maritime, and space based vehicles and platforms.

Expert in performing all phases of the software and system development life-cycle including:
• Creating requirements and design specifications.
• Model-driven software development, code implementation, and unit test.
• System integration.
• Requirements-based system verification with structural coverage at the system and module levels.
• Formal qualification/certification test.
• Final product packaging, delivery, and site installation.
• Post-delivery maintenance and customer support.
• Requirements management and end-to-end traceability.
• Configuration management.
• Review & control of change requests and defect reports.
• Quality assurance.
• Peer reviews/Fagan inspections, TIMs, PDRs and CDRs.

Management and project planning proficiencies include:
• Supervising, coordinating, and mentoring engineering staff members.
• Creating project Software Development Plans (SDPs).
• Establishing system architectures, baseline designs, and technical direction.
• Creating & tracking project task and resource scheduling, costs, resource utilization, and metrics (e.g., Earned Value Analysis).
• Preparing proposals in response to RFPs and SOWs.

Project Management & Documentation:
• Microsoft Project, Excel, Word, PowerPoint, Visio
• Adobe Acrobat Professional

Survey of Verification and Validation Techniques for Small Satellite Software Development

Stephen A. Jacklin, NASA Ames Research Center. Presented at the 2015 Space Tech Expo Conference, May 19-21, Long Beach, CA.

Summary

The purpose of this paper is to provide an overview of the current trends and practices in small-satellite software verification and validation. This document is not intended to promote a specific software assurance method. Rather, it seeks to present an unbiased survey of software assurance methods used to verify and validate small satellite software and to make mention of the benefits and value of each approach. These methods include simulation and testing, verification and validation with model-based design, formal methods, and fault-tolerant software design with run-time monitoring. Although the literature reveals that simulation and testing has by far the longest legacy, model-based design methods are proving to be useful for software verification and validation. Some work in formal methods, though not widely used for any satellites, may offer new ways to improve small satellite software verification and validation. These methods need to be further advanced to deal with the state explosion problem and to make them more usable by small-satellite software engineers to be regularly applied to software verification. Last, it is explained how run-time monitoring, combined with fault-tolerant software design methods, provides an important means to detect and correct software errors that escape the verification process or those errors that are produced after launch through the effects of ionizing radiation.

Introduction

While the space industry has developed very good methods for verifying and validating software for large communication satellites over the last 50 years, such methods are also very expensive and require large development budgets.

An Eclipse Plug-In for Testing and Debugging

GZoltar: An Eclipse Plug-In for Testing and Debugging

José Campos, André Riboira, Alexandre Perez, Rui Abreu. Department of Informatics Engineering, Faculty of Engineering, University of Porto, Portugal. {jose.carlos.campos, andre.riboira, alexandre.perez}@fe.up.pt; [email protected]

ABSTRACT

Testing and debugging is the most expensive, error-prone phase in the software development life cycle. Automated testing and diagnosis of software faults can drastically improve the efficiency of this phase, this way improving the overall quality of the software. In this paper we present a toolset for automatic testing and fault localization, dubbed GZoltar, which hosts techniques for (regression) test suite minimization and automatic fault diagnosis (namely, spectrum-based fault localization). The toolset provides the infrastructure to automatically instrument the source code of software programs to produce runtime data. Subsequently the data was analyzed to both minimize the test suite and return a ranked list of diagnosis candidates.

… coverage). Several debugging tools exist which are based on stepping through the execution of the program (e.g., GDB and DDD). These traditional, manual fault localization approaches have a number of important limitations. The placement of print statements as well as the inspection of their output are unstructured and ad-hoc, and are typically based on the developer's intuition. In addition, developers tend to use only test cases that reveal the failure, and therefore do not use valuable information from (the typically available) passing test cases.

Aimed at drastic cost reduction, much research has been performed in developing automatic testing and fault localization techniques and tools. As far as testing is concerned, …
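To make the idea of spectrum-based fault localization in the excerpt above more concrete, here is a minimal sketch. It assumes the Ochiai similarity coefficient, which is one commonly used choice; the excerpt does not say which coefficient the toolset applies, and the function name `ochiai_ranking`, the component labels, and the sample data are all hypothetical. Each test contributes the set of components it executed (its spectrum) and a pass/fail outcome, and components are ranked by how strongly their execution correlates with failures.

```python
from math import sqrt


def ochiai_ranking(spectra, outcomes):
    """Rank components by Ochiai suspiciousness (illustrative only).

    spectra:  one set per test, holding the components that test executed
    outcomes: one bool per test, True if the test failed
    """
    total_failed = sum(outcomes)
    components = set().union(*spectra)
    scores = {}
    for c in components:
        # Count failing and passing tests that executed component c.
        failed_cov = sum(1 for cov, failed in zip(spectra, outcomes) if failed and c in cov)
        passed_cov = sum(1 for cov, failed in zip(spectra, outcomes) if not failed and c in cov)
        denom = sqrt(total_failed * (failed_cov + passed_cov))
        scores[c] = failed_cov / denom if denom else 0.0
    # Highest score first; ties broken by name so the order is deterministic.
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical data: three tests over components s1..s3; the last two tests fail.
spectra = [{"s1", "s2"}, {"s1", "s3"}, {"s1", "s2", "s3"}]
outcomes = [False, True, True]
ranking = ochiai_ranking(spectra, outcomes)
```

In this toy data `s3` is executed only by failing tests, so it ranks first. Note that passing tests contribute information too: they lower the score of `s1`, which is the point the excerpt makes about not discarding passing test cases.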

Eclipse Project Briefing Materials

Eclipse project briefing materials. Copyright (c) 2002, 2003 IBM Corporation and others. All rights reserved. This content is made available to you by Eclipse.org under the terms and conditions of the Common Public License Version 1.0 ("CPL"), a copy of which is available at http://www.eclipse.org/legal/cpl-v10.html The most up-to-date briefing materials on the Eclipse project are found on the eclipse.org website at http://eclipse.org/eclipse/

Eclipse Project Aims
■ Provide open platform for application development tools
– Run on a wide range of operating systems
– GUI and non-GUI
■ Language-neutral
– Permit unrestricted content types
– HTML, Java, C, JSP, EJB, XML, GIF, …
■ Facilitate seamless tool integration
– At UI and deeper
– Add new tools to existing installed products
■ Attract community of tool developers
– Including independent software vendors (ISVs)
– Capitalize on popularity of Java for writing tools

Eclipse Overview
[Figure: Eclipse Overview diagram. Labels: Eclipse Project, Eclipse Platform, Workbench, JFace, SWT, Help, Team, Debug, Workspace, Platform Runtime, Java Development Tools (JDT), Plug-in Development Environment (PDE), Another Tool, Your Tool, Their Tool]

Eclipse Origins
■ Eclipse created by OTI and IBM teams responsible for IDE products
– IBM VisualAge/Smalltalk (Smalltalk IDE)
– IBM VisualAge/Java (Java IDE)
– IBM VisualAge/Micro Edition (Java IDE)
■ Initially staffed with 40 full-time developers
■ Geographically dispersed development teams
– OTI Ottawa, OTI Minneapolis, …

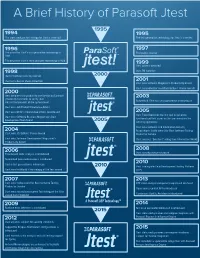

A Brief History of Parasoft Jtest

● The static analysis technology for Jtest is invented
● The test generation technology for Jtest is invented
● The patent for Jtest’s test generation technology is filed
● First public release
● The patent for Jtest’s static analysis technology is filed
● Jtest patents awarded
● Jtest TM awarded
● Jtest introduces security rule set
● Jtest wins Best in Show at DevCon
● Jtest wins Software Magazine’s Productivity award
● Jtest nominated for JavaWorld Editors’ Choice awards
● Jtest becomes first product to use Design by Contract (Jcontract) comments to verify Java classes/components at the system level
● Automated JUnit test case generation is introduced
● Jtest wins Jolt Product Excellence Award
● Jtest wins Writer’s Choice Award from Java Report
● Jtest Tracer becomes the first tool to generate functional unit test cases as the user exercises the working application
● Jtest wins Software Business Magazine’s Best Development Tool Award
● Jtest wins Software and Information Industry Association’s Codie award for Best Software Testing Product or Service
● Jtest wins JDJ Editors’ Choice Award
● Jtest wins Software Development Magazine’s Productivity Award
● Jtest receives “Excellent” rating from Information World
● Jtest security edition released
● Flow-based static analysis is introduced
● Automated peer code review is introduced
● Cactus test generation is introduced
● Jtest is integrated into Development Testing Platform (DTP)
● Jtest wins InfoWorld’s Technology of the Year award
● Jtest wins Codie award for Best Software Testing …
● DTP static analysis components …

Artificial Intelligence and Knowledge Engineering

UNIVERSAL UNIT TESTS GENERATOR BASED ON SOFTWARE MODELS

Sarunas Packevicius, Andrej Usaniov, Eduardas Bareisa. Kaunas University of Technology, Department of Software Engineering, Studentų 50, Kaunas, Lithuania, [email protected], [email protected], [email protected]

Abstract. Unit tests are viewed as a coding result of software developers. Unit tests are usually created by developers and implemented directly using a specific language and unit testing framework. Unit test generation tools usually do the same thing: they generate tests for a specific language using a specific unit testing framework. Such a generator is therefore suitable for only one situation. Another drawback of these generators is that they mainly use software code as the source for generation. In this paper we present a test generator model that could generate unit tests for any language using any unit testing framework. It will be able to use not only the code of the software under test, but also other artifacts: models and specifications.

Keywords: unit testing, tests generator, model based testing

1 Introduction

Software testing automation is seen as a means of reducing software construction costs by eliminating or reducing the manual software testing phase. Software test generators are used for software testing automation. Software test generators have to fulfill the following goals:
1. Create repeatable tests.
2. Create tests for bug detection.
3. Create self-checking tests.

Current unit test generators (for example Parasoft JTest) fulfill only some of these goals. They usually generate repeatable tests, but a tester has to specify the test oracle (a generator generates test inputs, but provides no way to verify whether test execution outcomes are correct), so the tests are not self-checking.
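The distinction the excerpt draws between generating inputs and generating self-checking tests can be sketched as follows. This is only an illustration under stated assumptions: the unit under test (`clamp`), the seeded input generator, and the specification-based `oracle` are hypothetical names invented here and do not come from the paper or from any particular tool.

```python
import random


def clamp(value, low, high):
    """Hypothetical unit under test."""
    return max(low, min(value, high))


def generate_inputs(count, seed=0):
    """Repeatable input generation: a fixed seed yields the same inputs on every run."""
    rng = random.Random(seed)
    inputs = []
    for _ in range(count):
        low = rng.randint(-50, 50)
        high = low + rng.randint(0, 50)
        inputs.append((rng.randint(-100, 100), low, high))
    return inputs


def oracle(value, low, high, result):
    """Oracle written from a specification, not derived from the code under test."""
    if value < low:
        return result == low
    if value > high:
        return result == high
    return result == value


def run_self_checking_tests(count=100, seed=0):
    """Return the generated inputs for which the oracle rejects the observed result."""
    return [args for args in generate_inputs(count, seed)
            if not oracle(*args, clamp(*args))]


failures = run_self_checking_tests()
```

Without `oracle`, the generator would only produce inputs and someone would still have to judge each result by hand. With it, an empty `failures` list means every generated test passed its own check.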

Accelerate Software Innovation Through Continuous Quality

Software quality is recognized as the #1 issue IT executives are trying to mitigate. Enterprise organizations strive to accelerate the delivery of a compelling user experience to their customers in order to drive revenue. QA teams know they have issues and are actively looking for solutions to save time, increase quality, improve security, and more. The most notable difficulties are in identifying the right areas to test, the availability of flexible and reliable test environments and test data, and the realization of benefits from automation. You may be facing many challenges with delivering software to meet the high expectations for quality, cost, and schedule driven by the business. An effective software testing strategy can address these issues. If you’re looking to improve your software quality while achieving your business goals, Parasoft can help. With over 30 years of making testing easier for our customers, we have the innovation you need and the experience you trust. Our extensive continuous quality suite spans every testing need and enables you to reach new heights.

QUALITY-FIRST APPROACH

You can’t test quality into an application at the end of the software development life cycle (SDLC). You need to ensure that your software development process and practices put a priority on quality-driven development and integrate a comprehensive testing strategy to verify that the application’s functionality meets the requirements. Shift testing left to the start of your development process to bring quality to the forefront.

Abstract: Modern Open Source (“OS”) Software Projects Are Increasingly Funded by Commercial Firms That Expect to Earn a Profit from Their Investment

GSPP10-006

Abstract: Modern open source (“OS”) software projects are increasingly funded by commercial firms that expect to earn a profit from their investment. This is usually done by bundling OS software with proprietary goods like cell phones or services like tech support. This article asks how judges and policymakers should manage this emerging business phenomenon. It begins by examining how private companies have adapted traditional OS institutions in a commercial setting. It then analyzes how OS methods change companies’ willingness to invest in software. On the one hand, OS cost-sharing often leads to increased output and benefits to consumers. On the other, these benefits tend to be limited. This is because sharing guarantees that no OS company can offer consumers better software than any other OS company. This suppresses incentives to invest much as a formal cartel would. In theory, vigorous competition from non-OS companies can mitigate this effect and dramatically increase OS output. In practice, however, de facto cartelization usually makes the OS sector so profitable that relatively few proprietary companies compete. This poses a central challenge to judges and policymakers. Antitrust law has long recognized that the benefits of R&D sharing frequently justify the accompanying cartel effect. This article argues that most commercial OS collaborations can similarly be organized in ways that satisfy the Rule of Reason. It also identifies two safe harbors where unavoidable cartel effects should normally be tolerated. That said, many OS licenses contain so-called “viral” clauses that require users who would prefer to develop proprietary products to join OS collaborations instead.

Eclipsereview 200612.Pdf

A BZ Media Publication
Volume 1 | Number 4, Fall 2006
www.eclipsereview.com

SOAP? XML? WSDL? Java? Eclipse!!
IMPROVING CODE WITH STATIC ANALYSIS
Web 2.0! AJAX Meets JavaServer Faces
27 Must-Have Eclipse Plug-Ins

Legacy Modernization with... NXTware™ ESB ...another Eclipse plug-in you can’t live without. Implementing XML and Service Oriented Architecture doesn’t mean starting from scratch. Why not integrate and modernize application functions as you need them in Eclipse? NXTware ESB provides just-in-time modernization from within an Eclipse development environment. Lower costs and reduce risk by creating SOA interfaces for the business functions you need, when you need them, with NXTware ESB for Eclipse. Learn more about Enterprise Evolution and NXTware SOA for Eclipse. Call (866) 493-4224 or visit www.ecubesystems.com. eCube Systems, Enabling Enterprise Evolution. Copyright eCube Systems LLC. San Mateo - Boston - Houston

Comparing Four Static Analysis Tools for Java Concurrency Bugs

Md. Abdullah Al Mamun, Aklima Khanam, Håkan Grahn, and Robert Feldt. School of Computing, Blekinge Institute of Technology, SE-371 79 Karlskrona, Sweden. [email protected], [email protected], {hakan.grahn,robert.feldt}@bth.se

ABSTRACT

Static analysis (SA) tools are being used for early detection of software defects. Concurrency bugs are different from bugs in sequential programs, and they are often harder to detect. This paper presents the evaluation of four static analysis tools and their capabilities to detect Java concurrency bugs and bug patterns. The tools, i.e., Coverity Prevent, Jtest, FindBugs, and Jlint, are evaluated using concurrent benchmark programs and a collection of multithreaded bug patterns. In addition, we have categorized the bug pattern detectors of the tools and also identified 87 unique bug patterns from the tools’ detectors and literature.

1. INTRODUCTION

Multicore processors have become the main computing platform from small embedded systems to large-scale servers.

… (v4.4.1) [3] and Jtest (v8.4.11) [13], and two open source, i.e., FindBugs (v1.3.9) [8] and Jlint (v3.1) [1], static analysis tools for their ability to detect Java multithreaded bugs and bug patterns. To evaluate the SA tools, we use a benchmark suite containing Java programs with concurrency bugs [5], a collection of bug patterns from a library of antipatterns [10], and the selected tools. We address the following questions:

RQ1: How effective are static analysis tools in detecting Java concurrency bugs and bug patterns?
RQ2: Are commercial SA tools better than open source SA tools in detecting Java concurrency defects?

We conducted an experiment [25] to answer these questions where the SA tools acted as subjects, and the bench- …

How Parasoft and Experitest Enable End-To-End Continuous Testing

How Parasoft and Experitest enable end-to-end continuous testing
Guy Arieli, CTO, Experitest
Mark Lambert, VP of Products, Parasoft

Today’s Speakers
• Guy Arieli, CTO, Experitest
• Mark Lambert, VP of Products, Parasoft

Experitest Snapshot
Leading continuous testing platform provider for web & mobile apps, available as a SaaS or on-premise deployment
• 5 global datacenters: US, CA; UK; Germany; Israel; Australia; 2 more planned
• Open source support: Appium, Espresso, XCTest, Selenium
• 1000+ customers in all verticals: banking, telecom, insurance etc.
• Functional, performance, and visual testing
• DevOps users: developers and testers
• Available as SaaS or on-premise deployment

SeeTest Continuous Testing platform for web and mobile apps
• Integrations
• Digital Lab: mobile devices, desktop browsers, emulators & simulators
• Deployment models: SaaS, on-premise

About Parasoft
Pushing the bounds of what we can automate in software testing for the development lifecycle
[Figure: Parasoft product overview. Labels: Developer, Tester, Manager; Jtest, C/C++test, dotTEST, SOAtest, Virtualize, Load Test, Parasoft DTP]

Testing in a rapidly changing digital environment
• Mobile First Strategy
• Increased Test Complexity
• Team Collaboration

Increasing complexity of test requirements
[Figure: Application Under Test, Web]

Automated Testing with Parasoft SOAtest
[Figure: Parasoft SOAtest, Application Under Test, Web]

Omni/Multi-Channel Testing with Parasoft SOAtest
[Figure: Parasoft SOAtest, Application Under Test, Web. Callouts: unavailable or fee-based 3rd party systems; uncontrollable behavior; “Agile Roadblock”; unable to ‘shift-left’ performance …]