Survey of Verification and Validation Techniques for Small Satellite Software Development

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Bottleneck Discovery and Overlay Management in Network Coded Peer-To-Peer Systems

Mahdi Jafarisiavoshani (EPFL, Switzerland, mahdi.jafari@epfl.ch), Christina Fragouli (EPFL, Switzerland, christina.fragouli@epfl.ch), Suhas Diggavi (EPFL, Switzerland, suhas.diggavi@epfl.ch), Christos Gkantsidis (Microsoft Research, United Kingdom, [email protected]). ABSTRACT The performance of peer-to-peer (P2P) networks depends critically on the good connectivity of the overlay topology. In this paper we study P2P networks for content distribution (such as Avalanche) that use randomized network coding techniques. The basic idea of such systems is that peers randomly combine and exchange linear combinations of the source packets. A header appended to each packet specifies the linear combination that the packet carries. In this paper we show that the linear combinations a node receives from its neighbors reveal structural information about the network. We propose algorithms to utilize this observation for topology management to avoid bottlenecks and clustering in network-coded P2P systems. 1. INTRODUCTION Peer-to-peer (P2P) networks have proved a very successful distributed architecture for content distribution. The design philosophy of such systems is to delegate the distribution task to the participating nodes (peers) themselves, rather than concentrating it to a low number of servers with limited resources. Therefore, such a P2P non-hierarchical approach is inherently scalable, since it exploits the computing power and bandwidth of all the participants. Having addressed the problem of ensuring sufficient network resources, P2P systems still face the challenge of how to efficiently utilize these resources while maintaining a decentralized operation. Central to this is the challenging management problem of connect-

Developer Condemns City's Attitude Aican Appeal on Hold Avalanche

Developer condemns city's attitude. TERRACE -- Although city council says it favours development, it's not willing to put its money where its mouth is, says a local developer. And that, adds Stan Shapitka, has prompted him to drop plans for what would have been the city's largest residential sub-division project in many years. In August of last year [...] under way this spring, [...] The problem, he explained, was sanitary sewer lines within the sub-division had to be hooked up to an existing city line. The nearest was at Mountain Vista Drive, approximately 850 ft. from the southwest corner of the development property. Shapitka said he asked the city to build that line and to pave [...] law when owners of lots adjacent to the sewer line and road developed their properties. Council, however, refused both requests. "It just seems the city isn't too interested in lending any type of assistance whatsoever," Shapitka said, adding council appeared to want the estimated $500,000 increased tax base the sub-division would bring but [...] ment." However, alderman Danny Sheridan maintained, that is not the case. The issue, he said, was whether the city had subsidized developers in the past -- "I'm pretty sure it hasn't" -- and whether it was going to do so in this case. "Council didn't seem willing to do that." While conceding Shapitka [...] said. Pointing out the development could still proceed if Shapitka paid the road and sewer connection costs, Sheridan said other developers had done so in the past. That included the city itself when it had developed properties it owned on the Birch Ave. bench and deJong Crescent.

Parasoft Static Application Security Testing (SAST) for .Net - C/C++ - Java Platform

Parasoft® dotTEST™ / Jtest (for Java) / C/C++test is an integrated Development Testing solution for automating a broad range of testing best practices proven to improve development team productivity and software quality. dotTEST / Jtest / C/C++test also integrates with Parasoft SOAtest as an option, which enables end-to-end functional and load testing for complex distributed applications and transactions.
Capabilities Overview
STATIC ANALYSIS
● Broad support for languages and standards: Security | C/C++ | Java | .NET | FDA | Safety-critical
● Static analysis tool industry leader since 1994
● Simple out-of-the-box integration into your SDLC
● Prevent and expose defects via multiple analysis techniques
● Find and fix issues rapidly, with minimal disruption
● Integrated with Parasoft's suite of development testing capabilities, including unit testing, code coverage analysis, and code review
CODE COVERAGE ANALYSIS
● Track coverage during unit test execution and merge the data with coverage captured during functional and manual testing in Parasoft Development Testing Platform to measure true test coverage.
● Integrate coverage data with static analysis violations, unit testing results, and other testing practices in Parasoft Development Testing Platform for a complete view of the risk associated with your application
● Achieve test traceability to understand the impact of change, focus testing activities based on risk, and meet compliance

Email: [email protected] Website

Email: [email protected] Website: http://chrismatech.com Experienced software and systems engineer who has successfully deployed custom & industry-standard embedded, desktop and networked systems for commercial and DoD customers. Delivered systems operate on airborne, terrestrial, maritime, and space-based vehicles and platforms. Expert in performing all phases of the software and system development life-cycle including: Creating requirements and design specifications. Model-driven software development, code implementation, and unit test. System integration. Requirements-based system verification with structural coverage at the system and module levels. Formal qualification/certification test. Final product packaging, delivery, and site installation. Post-delivery maintenance and customer support. Requirements management and end-to-end traceability. Configuration management. Review & control of change requests and defect reports. Quality assurance. Peer reviews/Fagan inspections, TIMs, PDRs and CDRs. Management and project planning proficiencies include: Supervising, coordinating, and mentoring engineering staff members. Creating project Software Development Plans (SDPs). Establishing system architectures, baseline designs, and technical direction. Creating & tracking project task and resource scheduling, costs, resource utilization, and metrics (e.g., Earned Value Analysis). Preparing proposals in response to RFPs and SOWs. Project Management & Documentation: • Microsoft Project, Excel, Word, PowerPoint, Visio • Adobe Acrobat Professional

An Eclipse Plug-In for Testing and Debugging

GZoltar: An Eclipse Plug-In for Testing and Debugging. José Campos, André Riboira, Alexandre Perez, Rui Abreu. Department of Informatics Engineering, Faculty of Engineering, University of Porto, Portugal. {jose.carlos.campos, andre.riboira, alexandre.perez}@fe.up.pt; [email protected] ABSTRACT Testing and debugging is the most expensive, error-prone phase in the software development life cycle. Automated testing and diagnosis of software faults can drastically improve the efficiency of this phase, this way improving the overall quality of the software. In this paper we present a toolset for automatic testing and fault localization, dubbed GZoltar, which hosts techniques for (regression) test suite minimization and automatic fault diagnosis (namely, spectrum-based fault localization). The toolset provides the infrastructure to automatically instrument the source code of software programs to produce runtime data. Subsequently the data was analyzed to both minimize the test suite and return a ranked list of diagnosis candidates. [...] coverage). Several debugging tools exist which are based on stepping through the execution of the program (e.g., GDB and DDD). These traditional, manual fault localization approaches have a number of important limitations. The placement of print statements as well as the inspection of their output are unstructured and ad-hoc, and are typically based on the developer's intuition. In addition, developers tend to use only test cases that reveal the failure, and therefore do not use valuable information from (the typically available) passing test cases. Aimed at drastic cost reduction, much research has been performed in developing automatic testing and fault localization techniques and tools. As far as testing is concerned,

The Fourth Paradigm

ABOUT THE FOURTH PARADIGM This book presents the first broad look at the rapidly emerging field of data-intensive science, with the goal of influencing the worldwide scientific and computing research communities and inspiring the next generation of scientists. Increasingly, scientific breakthroughs will be powered by advanced computing capabilities that help researchers manipulate and explore massive datasets. The speed at which any given scientific discipline advances will depend on how well its researchers collaborate with one another, and with technologists, in areas of eScience such as databases, workflow management, visualization, and cloud-computing technologies. This collection of essays expands on the vision of pioneering computer scientist Jim Gray for a new, fourth paradigm of discovery based on data-intensive science and offers insights into how it can be fully realized. “The impact of Jim Gray’s thinking is continuing to get people to think in a new way about how data and software are redefining what it means to do science.” -- Bill Gates. “I often tell people working in eScience that they aren’t in this field because they are visionaries or super-intelligent -- it’s because they care about science and they are alive now. It is about technology changing the world, and science taking advantage of it, to do more and do better.” -- Rhys Francis, Australian eResearch Infrastructure Council. “One of the greatest challenges for 21st-century science is how we respond to this new era of data-intensive

Eclipse Project Briefing Materials

Eclipse project briefing materials. Copyright (c) 2002, 2003 IBM Corporation and others. All rights reserved. This content is made available to you by Eclipse.org under the terms and conditions of the Common Public License Version 1.0 ("CPL"), a copy of which is available at http://www.eclipse.org/legal/cpl-v10.html The most up-to-date briefing materials on the Eclipse project are found on the eclipse.org website at http://eclipse.org/eclipse/ 200303331
Eclipse Project
Eclipse Project Aims
■ Provide open platform for application development tools
– Run on a wide range of operating systems
– GUI and non-GUI
■ Language-neutral
– Permit unrestricted content types
– HTML, Java, C, JSP, EJB, XML, GIF, …
■ Facilitate seamless tool integration
– At UI and deeper
– Add new tools to existing installed products
■ Attract community of tool developers
– Including independent software vendors (ISVs)
– Capitalize on popularity of Java for writing tools
Eclipse Overview
[Diagram labels: Eclipse Project; Eclipse Platform; Workbench; JFace; SWT; Help; Team; Debug; Workspace; Platform Runtime; Java Development Tools (JDT); Plug-in Development Environment (PDE); Another Tool; Your Tool; Their Tool]
Eclipse Origins
■ Eclipse created by OTI and IBM teams responsible for IDE products
– IBM VisualAge/Smalltalk (Smalltalk IDE)
– IBM VisualAge/Java (Java IDE)
– IBM VisualAge/Micro Edition (Java IDE)
■ Initially staffed with 40 full-time developers
■ Geographically dispersed development teams
– OTI Ottawa, OTI Minneapolis,

The Methbot Operation

The Methbot Operation. December 20, 2016. White Ops has exposed the largest and most profitable ad fraud operation to strike digital advertising to date. Russian cybercriminals are siphoning millions of advertising dollars per day away from U.S. media companies and the biggest U.S. brand name advertisers in the single most profitable bot operation discovered to date. Dubbed “Methbot” because of references to “meth” in its code, this operation produces massive volumes of fraudulent video advertising impressions by commandeering critical parts of Internet infrastructure and targeting the premium video advertising space. Using an army of automated web browsers run from fraudulently acquired IP addresses, the Methbot operation is “watching” as many as 300 million video ads per day on falsified websites designed to look like premium publisher inventory. More than 6,000 premium domains were targeted and spoofed, enabling the operation to attract millions in real advertising dollars. At this point the Methbot operation has become so embedded in the layers of the advertising ecosystem, the only way to shut it down is to make the details public to help affected parties take action. Therefore, White Ops is releasing results from our research with that objective in mind.
Information available for download
• IP addresses known to belong to Methbot for advertisers and their agencies and platforms to block. This is the fastest way to shut down the operation’s ability to monetize.
• Falsified domain list and full URL list to show the magnitude of impact this operation had on the publishing

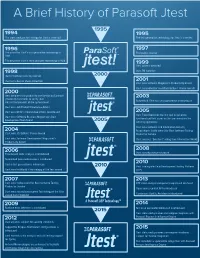

A Brief History of Parasoft Jtest

The static analysis technology for Jtest is invented. The test generation technology for Jtest is invented. The patent for Jtest’s test generation technology is filed. First public release. The patent for Jtest’s static analysis technology is filed. Jtest patents awarded. Jtest TM awarded. Jtest introduces security rule set. Jtest wins Best in Show at DevCon. Jtest wins Software Magazine’s Productivity award. Jtest nominated for JavaWorld Editors’ Choice awards. Jtest becomes first product to use Design by Contract (Jcontract) comments to verify Java classes/components at the system level. Automated JUnit test case generation is introduced. Jtest wins Jolt Product Excellence Award. Jtest wins Writer’s Choice Award from Java Report. Jtest Tracer becomes the first tool to generate functional unit test cases as the user exercises the working application. Jtest wins Software Business Magazine’s Best Development Tool Award. Jtest wins Software and Information Industry Association’s Codie award for Best Software Testing Product or Service. Jtest wins JDJ Editors’ Choice Award. Jtest wins Software Development Magazine’s Productivity Award. Jtest receives “Excellent” rating from Information World. Jtest security edition released. Flow-based static analysis is introduced. Automated peer code review is introduced. Cactus test generation is introduced. Jtest is integrated into Development Testing Platform (DTP). Jtest wins InfoWorld’s Technology of the Year award. Jtest wins Codie award for Best Software Testing [...] DTP static analysis components

Artificial Intelligence and Knowledge Engineering

UNIVERSAL UNIT TESTS GENERATOR BASED ON SOFTWARE MODELS. Sarunas Packevicius, Andrej Usaniov, Eduardas Bareisa. Kaunas University of Technology, Department of Software Engineering, Studentų 50, Kaunas, Lithuania, [email protected], [email protected], [email protected] Abstract. Unit tests are viewed as a coding result of software developers. Unit tests are usually created by developers and implemented directly using a specific language and unit testing framework. Unit test generation tools usually do the same thing: they generate tests for a specific language using a specific unit testing framework. Thus such a generator is suitable for only one situation. Another drawback of these generators is that they mainly use software code as the source for generation. In this paper we present a test generator model which would be able to generate unit tests for any language using any unit testing framework. It would be able to use not only the code of the software under test, but also other artifacts: models, specifications. Keywords: unit testing, tests generator, model based testing. 1 Introduction. Software testing automation is seen as a means of reducing software construction costs by eliminating or reducing the manual software testing phase. Software test generators are used for software testing automation. Software test generators have to fulfill the following goals: 1. Create repeatable tests. 2. Create tests for bug detection. 3. Create self-checking tests. Current unit test generators (for example Parasoft JTest) fulfill only some of these goals. They usually generate repeatable tests, but a tester has to specify the test oracle (a generator generates test inputs, but provides no way to verify whether test execution outcomes are correct), so the tests are not self-checking.

The Failure of Mandated Disclosure

ARTICLE
THE FAILURE OF MANDATED DISCLOSURE
OMRI BEN-SHAHAR† & CARL E. SCHNEIDER††
This Article explores the spectacular prevalence, and failure, of the single most common technique for protecting personal autonomy in modern society: mandated disclosure. The Article has four Parts: (1) a comprehensive summary of the recurring use of mandated disclosures, in many forms and circumstances, in the areas of consumer and borrower protection, patient informed consent, contract formation, and constitutional rights; (2) a survey of the empirical literature documenting the failure of the mandated disclosure regime in informing people and in improving their decisions; (3) an account of the multitude of reasons mandated disclosures fail, focusing on the political dynamics underlying the enactments of these mandates, the incentives of disclosers to carry them out, and, most importantly, on the ability of disclosees to use them; and (4) an argument that mandated disclosure not only fails to achieve its stated goal but also leads to unintended consequences that often harm the very people it intends to serve.
INTRODUCTION
A. The Argument
B. The Method
C. The Style
I. THE DISCLOSURE EMPIRE: THE PERVASIVENESS OF MANDATED DISCLOSURE
† Frank & Bernice J. Greenberg Professor of Law, University of Chicago.
†† Chauncey Stillman Professor of Law & Professor of Internal Medicine, University of Michigan. Helpful comments were provided by workshop participants at The University of Pennsylvania, Georgetown University, The University of Michigan, Tel-Aviv University, and the Federal Reserve Banks of Chicago and Cleveland.

Accelerate Software Innovation Through Continuous Quality

Enterprise organizations strive to accelerate the delivery of a compelling user experience to their customers in order to drive revenue. Software quality is recognized as the #1 issue IT executives are trying to mitigate. QA teams know they have issues and are actively looking for solutions to save time, increase quality, improve security, and more. The most notable difficulties are in identifying the right areas to test, the availability of flexible and reliable test environments and test data, and the realization of benefits from automation. You may be facing many challenges with delivering software to meet the high expectations for quality, cost, and schedule driven by the business. An effective software testing strategy can address these issues. If you’re looking to improve your software quality while achieving your business goals, Parasoft can help. With over 30 years of making testing easier for our customers, we have the innovation you need and the experience you trust. Our extensive continuous quality suite spans every testing need and enables you to reach new heights. QUALITY-FIRST APPROACH You can’t test quality into an application at the end of the software development life cycle (SDLC). You need to ensure that your software development process and practices put a priority on quality-driven development and integrate a comprehensive testing strategy to verify that the application’s functionality meets the requirements. Shift testing left to the start of your development process to bring quality to the forefront.