Using Crash Hoare Logic for Certifying the FSCQ File System

Recommended publications

Development of a Verified Flash File System

Development of a Verified Flash File System

Gerhard Schellhorn, Gidon Ernst, Jörg Pfähler, Dominik Haneberg, and Wolfgang Reif
Institute for Software & Systems Engineering, University of Augsburg, Germany
{schellhorn,ernst,joerg.pfaehler,haneberg,reif}@informatik.uni-augsburg.de

Abstract. This paper gives an overview over the development of a formally verified file system for flash memory. We describe our approach that is based on Abstract State Machines and incremental modular refinement. Some of the important intermediate levels and the features they introduce are given. We report on the verification challenges addressed so far, and point to open problems and future work. We furthermore draw preliminary conclusions on the methodology and the required tool support.

1 Introduction. Flaws in the design and implementation of file systems already lead to serious problems in mission-critical systems. A prominent example is the Mars Exploration Rover Spirit [34] that got stuck in a reset cycle. In 2013, the Mars Rover Curiosity also had a bug in its file system implementation, that triggered an automatic switch to safe mode. The first incident prompted a proposal to formally verify a file system for flash memory [24,18] as a pilot project for Hoare's Grand Challenge [22]. We are developing a verified flash file system (FFS). This paper reports on our progress and discusses some of the aspects of the project. We describe parts of the design, the formal models, and proofs, pointing out challenges and solutions. The main characteristic of flash memory that guides the design is that data cannot be overwritten in place, instead space can only be reused by erasing whole blocks.

Membrane: Operating System Support for Restartable File Systems

Membrane: Operating System Support for Restartable File Systems

Swaminathan Sundararaman, Sriram Subramanian, Abhishek Rajimwale, Andrea C. Arpaci-Dusseau, Remzi H. Arpaci-Dusseau, Michael M. Swift
Computer Sciences Department, University of Wisconsin, Madison

Abstract. We introduce Membrane, a set of changes to the operating system to support restartable file systems. Membrane allows an operating system to tolerate a broad class of file system failures and does so while remaining transparent to running applications; upon failure, the file system restarts, its state is restored, and pending application requests are serviced as if no failure had occurred. Membrane provides transparent recovery through a lightweight logging and checkpoint infrastructure, and includes novel techniques to improve performance and correctness of its fault-anticipation and recovery machinery. We tested Membrane with ext2, ext3, and VFAT. Through experimentation, we show that Membrane induces little performance overhead and can tolerate a wide range of file system crashes.

[...] and most complex code bases in the kernel. Further, file systems are still under active development, and new ones are introduced quite frequently. For example, Linux has many established file systems, including ext2 [34], ext3 [35], reiserfs [27], and still there is great interest in next-generation file systems such as Linux ext4 and btrfs. Thus, file systems are large, complex, and under development, the perfect storm for numerous bugs to arise.

Because of the likely presence of flaws in their implementation, it is critical to consider how to recover from file system crashes as well. Unfortunately, we cannot directly apply previous work from the device-driver literature to improving file-system fault recovery. File systems, unlike device drivers, are extremely stateful, as they manage vast amounts of both in-memory and persistent data;

ELinOS Product Overview

SYSGO Product Overview: ELinOS 7, Industrial Grade Linux

ELinOS is a SYSGO Linux distribution to help developers save time and effort by focusing on their application. Our Industrial Grade Linux with user-friendly IDE goes along with the best selection of software packages to meet our customers' needs, and with the comfort of world-class technical support. ELinOS now includes Docker support in order to isolate applications running on the same system.

Highlights: Feature Configurator, LTS Kernel, Qt embedded, Open SSH, Open VPN, Eclipse-based IDE, QEMU-based HW Emulators, Application Debugging, Integrated Firewall, Docker Support.

ELinOS features
• Industrial Grade
• Eclipse-based IDE for embedded Systems (CODEO)
• Multiple Linux kernel versions incl. Kernel 4.19 LTS with real-time enhancements
• Quick and easy target system configuration
• Hardware Emulation (QEMU)
• Extensive file system support
• Application debugging
• Target analysis
• Runs out-of-the-box on PikeOS
• Validated and tested for PowerPC, x86, ARM

Managing embedded Linux versatility. Creating an Embedded Linux based system is like solving a puzzle and putting the right pieces together. This requires a deep knowledge of Linux's versatility and takes time for the selection of components, development of Board Support Packages and drivers, and testing of the whole system, not only for newcomers. With ELinOS, SYSGO offers an 'out-of-the-box' experience that lets developers focus on building competitive applications. ELinOS incorporates the appropriate tools, such as a feature configurator to help you build the system and boost your project success, including a graphical configuration front-end with a built-in integrity validation.

Application & configuration environment. In addition to standard tools, remote debugging, target system monitoring and timing behaviour analyses are essential for application development.

Filesystem Considerations for Embedded Devices ELC2015 03/25/15

Filesystem considerations for embedded devices
ELC2015, 03/25/15
Tristan Lelong, Senior embedded software engineer

Abstract. The goal of this presentation is to answer a question asked by several customers: which filesystem should you use within your embedded design's eMMC/SD card? These storage devices use a standard block interface, compatible with traditional filesystems, but the constraints are not those of desktop PC environments. EXT2/3/4, BTRFS, and F2FS are the first of many solutions that come to mind, but how do they all compare? Typical questions concern performance, longevity, tools availability, support, and power loss robustness. This presentation will not dive into implementation details but will instead summarize the answers with the help of various figures and meaningful test results.

Table of contents: 1. Introduction 2. Block devices 3. Available filesystems 4. Performances 5. Tools 6. Reliability 7. Conclusion

About the author
• Tristan Lelong
• Embedded software engineer @ Adeneo Embedded
• French, living in the Pacific Northwest
• Embedded software, free software, and Linux kernel enthusiast.

Introduction. More and more embedded designs rely on smart memory chips rather than bare NAND or NOR. This presentation will start by describing:
• Some context to help understand the differences between NAND and MMC
• Some typical requirements found in embedded device designs
• Potential filesystems to use on MMC devices

Focus will then move to block filesystems: how they are supported and what features they advertise. To help understand how they compare, we will present some benchmarks and comparisons regarding:
• Tools
• Reliability
• Performances

Block devices: MMC, eMMC, SD card. Vocabulary:
• MMC: MultiMediaCard is a memory card unveiled in 1997 by SanDisk and Siemens based on NAND flash memory.

A Brief Introduction to the Design of UBIFS Document Version 0.1 by Adrian Hunter 27.3.2008

A Brief Introduction to the Design of UBIFS
Document version 0.1 by Adrian Hunter, 27.3.2008

A file system developed for flash memory requires out-of-place updates. This is because flash memory must be erased before it can be written to, and it can typically only be written once before needing to be erased again. If eraseblocks were small and could be erased quickly, then they could be treated the same as disk sectors, however that is not the case. To read an entire eraseblock, erase it, and write back updated data typically takes 100 times longer than simply writing the updated data to a different eraseblock that has already been erased. In other words, for small updates, in-place updates can take 100 times longer than out-of-place updates.

Out-of-place updating requires garbage collection. As data is updated out-of-place, eraseblocks begin to contain a mixture of valid data and data which has become obsolete because it has been updated some place else. Eventually, the file system will run out of empty eraseblocks, so that every single eraseblock contains a mixture of valid data and obsolete data. In order to write new data somewhere, one of the eraseblocks must be emptied so that it can be erased and reused. The process of identifying an eraseblock with a lot of obsolete data, and moving the valid data to another eraseblock, is called garbage collection.

Garbage collection suggests the benefits of node-structure. In order to garbage collect an eraseblock, a file system must be able to identify the data that is stored there.
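The out-of-place update and garbage collection cycle described in this excerpt can be sketched as a toy model in Python. This is not UBIFS code: the `Flash` class, its policy of keeping one empty eraseblock in reserve, and the greedy choice of the eraseblock with the most obsolete nodes as the victim are all assumptions made purely for illustration.

```python
class Flash:
    """Toy flash model (hypothetical): nodes are only ever appended, never overwritten."""

    def __init__(self, num_blocks, capacity):
        self.capacity = capacity                        # nodes per eraseblock
        self.blocks = [[] for _ in range(num_blocks)]   # each block: list of (key, data)
        self.index = {}       # key -> (block, slot) of the single valid copy
        self.head = 0         # eraseblock currently being filled
        self.erase_count = 0

    def _is_valid(self, block, slot):
        key = self.blocks[block][slot][0]
        return self.index.get(key) == (block, slot)

    def _append(self, key, data):
        nodes = self.blocks[self.head]
        nodes.append((key, data))
        # Repointing the index makes any older copy of `key` obsolete.
        self.index[key] = (self.head, len(nodes) - 1)

    def _empty_blocks(self):
        return [b for b, nodes in enumerate(self.blocks) if not nodes]

    def _obsolete(self, block):
        slots = range(len(self.blocks[block]))
        return sum(not self._is_valid(block, s) for s in slots)

    def collect_garbage(self):
        """Move valid nodes out of the dirtiest eraseblock, then erase it."""
        reserve = self._empty_blocks()[0]
        candidates = [b for b in range(len(self.blocks)) if b != reserve]
        victim = max(candidates, key=self._obsolete)
        if self._obsolete(victim) == 0:
            raise RuntimeError("flash is full: no obsolete data to reclaim")
        survivors = [node for s, node in enumerate(self.blocks[victim])
                     if self._is_valid(victim, s)]
        self.head = reserve
        for key, data in survivors:
            self._append(key, data)
        self.blocks[victim] = []    # erasing frees the whole eraseblock at once
        self.erase_count += 1

    def write(self, key, data):
        if len(self.blocks[self.head]) == self.capacity:
            empty = self._empty_blocks()
            if len(empty) <= 1:     # only the reserve is left: reclaim space first
                self.collect_garbage()
            else:
                self.head = empty[0]
        self._append(key, data)

    def read(self, key):
        block, slot = self.index[key]
        return self.blocks[block][slot][1]
```

With three eraseblocks of two nodes each, writing `a`, `b`, then updating both and writing `c` forces one garbage collection pass that erases the first eraseblock, which by then holds only obsolete copies. The sketch also shows why the file system must be able to identify the data stored in an eraseblock: `_is_valid` needs the key of every node to decide what has to be moved.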

Monitoring Raw Flash Memory I/O Requests on Embedded Linux

Flashmon V2: Monitoring Raw NAND Flash Memory I/O Requests on Embedded Linux

Pierre Olivier (Univ. Europeenne de Bretagne, Univ. Bretagne Occidentale, UMR6285, Lab-STICC, F29200 Brest, France), Jalil Boukhobza (Univ. Europeenne de Bretagne, Univ. Bretagne Occidentale, UMR6285, Lab-STICC, F29200 Brest, France), Eric Senn (Univ. Europeenne de Bretagne, Univ. Bretagne Sud, UMR6285, Lab-STICC, F56100 Lorient, France)

ABSTRACT. This paper presents Flashmon version 2, a tool for monitoring embedded Linux NAND flash memory I/O requests. It is designed for embedded boards based devices containing raw flash chips. Flashmon is a kernel module and stands for "flash monitor". It traces flash I/O by placing kernel probes at the NAND driver level. It allows tracing at runtime the 3 main flash operations: page reads / writes and block erasures. Flashmon is (1) generic as it was successfully tested on the three most widely used flash file systems that are JFFS2, UBIFS and YAFFS, and several NAND

[...] management mechanisms. One of these mechanisms is implemented by the Operating System (OS) in the form of dedicated Flash File Systems (FFS). That solution is adopted in devices using raw flash chips on embedded boards, such as smartphones, tablet PCs, set-top boxes, etc. Linux is a major operating system in such devices, and provides a wide support for several NAND flash memory models. In these devices the flash chip itself does not embed any particular controller. This paper presents Flashmon version 2, a tool for monitoring embedded Linux I/O operations on NAND secondary storage.

Cross-Checking Semantic Correctness: the Case of Finding File System Bugs

Cross-checking Semantic Correctness: The Case of Finding File System Bugs
Paper #171

Abstract. Today, systems software is too complex to be bug-free. To find bugs in systems software, developers often rely on code checkers, like Sparse in Linux. However, the capability of existing tools used in commodity, large-scale systems is limited to finding only shallow bugs that tend to be introduced by the simple mistakes of programmers and require no deep understanding of code. Unfortunately, a majority of and difficult-to-find bugs are semantic ones, which violate high-level rules or invariants (e.g., missing a permission check). Thus, it is difficult for code-checking tools that lack the understanding of a programmer's true intention, to reason about semantic correctness. To solve this problem, we present JUXTA, a tool that automatically infers high-level semantics directly from source

1. Introduction. System software is buggy. It is often implemented in unsafe languages (e.g., C) for achieving better performance or directly accessing the hardware, thereby facilitating the introduction of tedious bugs. At the same time, it is also complex. For example, Linux consists of 17 million lines of pure code and accepts around 190 commits every day. To help this situation, researchers often use memory-safe languages in the first place. For example, Singularity [32] uses C# and Unikernel [40] use OCaml for their OS development. However, in practice, developers usually rely on code checkers. For example, Linux has integrated static code analysis tools (e.g., Sparse) in its build process to detect common coding errors (e.g., if system calls validate arguments that come from userspace).

SibylFS: Formal Specification and Oracle-Based Testing for POSIX and Real-World File Systems

SibylFS: formal specification and oracle-based testing for POSIX and real-world file systems

Tom Ridge (1), David Sheets (2), Thomas Tuerk (3), Andrea Giugliano (1), Anil Madhavapeddy (2), Peter Sewell (2)
(1) University of Leicester, (2) University of Cambridge, (3) FireEye
http://sibylfs.io/

Abstract. Systems depend critically on the behaviour of file systems, but that behaviour differs in many details, both between implementations and between each implementation and the POSIX (and other) prose specifications. Building robust and portable software requires understanding these details and differences, but there is currently no good way to systematically describe, investigate, or test file system behaviour across this complex multi-platform interface. In this paper we show how to characterise the envelope of allowed behaviour of file systems in a form that enables practical and highly discriminating testing. We give a mathematically rigorous model of file system behaviour, SibylFS, that specifies the range of allowed behaviours of a file system for any sequence of the system calls within our scope, and that can be used as a test oracle to decide whether an observed trace is allowed by the model, both for validating the model and for testing file systems against it.

1. Introduction. Problem: File systems, in common with several other key systems components, have some well-known but challenging properties:
• they provide behaviourally complex abstractions;
• there are many important file system implementations, each with its own internal complexities;
• different file systems, while broadly similar, nevertheless behave quite differently in some cases; and
• other system software and applications often must be written to be portable between file systems, and file systems themselves are sometimes ported from one OS to another, or written to support application portability.

File system behaviour, and especially these variations in be-

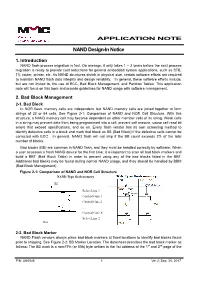

NAND Design-In Notice

APPLICATION NOTE: NAND Design-In Notice

1. Introduction
NAND flash process migration is fast. On average, it only takes 1 ~ 2 years before the next process migration is ready to provide cost reductions for general embedded system applications, such as STB, TV, router, printer, etc. As NAND structures shrink in physical size, certain software efforts are required to maintain NAND flash data integrity and design reliability. In general, these software efforts include, but are not limited to, the use of ECC, Bad Block Management, and Partition Tables. This application note will focus on this topic and provide guidelines for NAND usage with software management.

2. Bad Block Management
2-1. Bad Block
In NOR flash, memory cells are independent, but NAND memory cells are joined together to form strings of 32 or 64 cells. See Figure 2-1: Comparison of NAND and NOR Cell Structure. With this structure, a NAND memory cell may become dependent on other member cells of its string. Weak cells in a string may prevent data from being programmed into a cell, prevent cell erasure, cause cell read bit errors that exceed specifications, and so on. Every flash vendor has its own screening method to identify defective cells in a block and mark that block as BB (Bad Block) if the defective cells cannot be corrected with ECC. In general, NAND flash will not ship if the BB count exceeds 2% of the total number of blocks. Bad blocks (BB) are common in NAND flash, and they must be handled correctly by software. When a user accesses a fresh NAND device for the first time, it is important to scan all bad block markers and build a BBT (Bad Block Table) in order to prevent using any of the bad blocks listed in the BBT.
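The first-access scan described in this excerpt can be sketched in Python as follows. The excerpt does not say how a bad block marker is encoded, so the sketch assumes one marker byte per block with `0xFF` meaning "good" and any other value meaning "bad"; that encoding, and the function names `build_bbt`, `within_ship_limit`, and `next_good_block`, are hypothetical and chosen only for illustration.

```python
GOOD_MARKER = 0xFF  # assumed encoding: any other marker value flags a bad block


def build_bbt(markers):
    """Scan one marker byte per block and return the set of bad block numbers."""
    return {block for block, marker in enumerate(markers) if marker != GOOD_MARKER}


def within_ship_limit(bbt, total_blocks, limit_percent=2):
    """True if the bad block count does not exceed limit_percent of all blocks."""
    return len(bbt) * 100 <= limit_percent * total_blocks


def next_good_block(start, bbt, total_blocks):
    """Return the first block at or after `start` that is not listed in the BBT."""
    for block in range(start, total_blocks):
        if block not in bbt:
            return block
    raise RuntimeError("no good block available")


# Usage: a 100-block device whose blocks 3 and 4 carry a bad block marker.
markers = [GOOD_MARKER] * 100
markers[3] = 0x00
markers[4] = 0x00
bbt = build_bbt(markers)
target = next_good_block(3, bbt, len(markers))  # skips the two bad blocks
```

Building the table once and consulting it on every allocation is what keeps software from programming or erasing a block the vendor has already marked as defective.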

A File System for Large-Scale NAND Flash Memory Based Storage System

Journal of The Korea Society of Computer and Information, www.ksci.re.kr, Vol. 22 No. 9, pp. 1-8, September 2017, https://doi.org/10.9708/jksci.2017.22.09.001

A File System for Large-scale NAND Flash Memory Based Storage System
Sunghoon Son

Abstract. In this paper, we propose a file system for flash memory which remedies shortcomings of existing flash memory file systems. Besides supporting large block size, the proposed file system reduces time in initializing file system significantly by adopting logical address comprised of erase block number and bitmap for pages in the block to find a page. The file system is suitable for embedded systems with limited main memory since it has small in-memory data structures. It also provides efficient management of obsolete blocks and free blocks, which contribute to the reduction of file update time. Finally the proposed file system can easily configure the maximum file size and file system size limits, which results in portability to emerging larger flash memories. By conducting performance evaluation studies, we show that the proposed file system can contribute to the performance improvement of embedded systems.

Keyword: NAND Flash memory, Flash file system, Embedded system

I. Introduction. In recent years, flash memory has advanced rapidly in both capacity and access speed. Portable digital devices today mostly use NAND flash memory of tens of GB as their storage, and NAND flash memory chips in the 512 GB class have been developed using the latest 12 ~ 15 nm semiconductor fabrication processes.

[...] file system is essential. Several dedicated file systems for flash memory, such as YAFFS and JFFS2, have been developed and are in use. Although these file systems were developed with the characteristics of flash memory in mind, the recently released [...]

Extending the Lifetime of Flash-Based Storage Through Reducing Write Amplification from File Systems

Extending the Lifetime of Flash-based Storage through Reducing Write Amplification from File Systems

Youyou Lu, Jiwu Shu∗, Weimin Zheng
Department of Computer Science and Technology, Tsinghua University
Tsinghua National Laboratory for Information Science and Technology
∗Corresponding author

Abstract. Flash memory has gained in popularity as storage devices for both enterprise and embedded systems because of its high performance, low energy and reduced cost. The endurance problem of flash memory, however, is still a challenge and is getting worse as storage density increases with the adoption of multi-level cells (MLC). Prior work has addressed wear leveling and data reduction, but there is significantly less work on using the file system to improve flash lifetimes. Some common mechanisms in traditional file systems, such as journaling, metadata synchronization, and page-aligned update, can induce extra write operations and aggravate

[...] storage systems. However, the increased density of flash memory requires finer voltage steps inside each cell, which is less tolerant to leakage and noise interference. As a result, the reliability and lifetime of flash memory have declined dramatically, producing the endurance problem [18, 12, 17].

Wear leveling and data reduction are two common methods to extend the lifetime of the flash storage. With wear leveling, program/erase (P/E) operations tend to be distributed across the flash blocks to make them wear out evenly [11, 14]. Data reduction is used in both the flash translation layers (FTLs) and the file systems. The FTLs introduce deduplication and compression techniques to avoid updates of redundant data [15, 33, 34].

The Kernel Report

The Kernel Report, RTLWS 11 edition
Jonathan Corbet, LWN.net

“Famous last words, but the actual patch volume _has_ to drop off one day. We have to finish this thing one day." -- Andrew Morton, September, 2005 (2.6.14)

2.6.27 -> 2.6.31++ (October 9, 2008 to September 18, 2009)
• 48,000 changesets merged
• 2,500 developers
• 400 employers
• The kernel grew by 2.5 million lines

That comes out to:
• 140 changesets merged per day
• 7267 lines of code added every day

The employer stats
None 19%, Red Hat 12%, Intel 7%, IBM 6%, Novell 6%, unknown 5%, Oracle 4%, consultants 3%, Fujitsu 2%, Renesas Tech 2%, Atheros 2%, academics 2%, Analog Devices 2%, AMD 1%, Nokia 1%, Wolfson Micro 1%, Vyatta 1%, HP 1%, Parallels 1%, Sun 1%

2.6.27 (October 9, 2008)
• Ftrace
• UBIFS
• Multiqueue networking
• gspca video driver set
• Block layer integrity checking

2.6.28 (December 24, 2008)
• GEM graphics memory manager
• ext4 is no longer experimental
• -staging tree
• Wireless USB
• Container freezer
• Tracepoints

2.6.29 (March 23, 2009)
• Kernel mode setting
• Filesystems: Btrfs, Squashfs
• WIMAX support
• 4096 CPU support

2.6.30 (June 9)
• TOMOYO Linux
• Object storage device support
• Integrity measurement
• FS-Cache
• ext4 robustness fixes
• Nilfs
• R6xx/R7xx graphics support
• preadv()/pwritev()
• Adaptive spinning mutexes
• Threaded interrupt handlers

2.6.31 (September 9)
• Performance counter support
• Char devices in user space
• Kmemleak
• fsnotify infrastructure
• TTM and Radeon KMS support
• Storage topology

...about finished? ...so what's left?

2.6.32 (early December)
• Devtmpfs
• Lots of block scalability work
• Performance counter improvements
• Scheduler tweaks
• Kernel Shared Memory
• HWPOISON

Networking
“Based on all the measurements I'm aware of, Linux has the fastest & most complete stack of any OS.” -- Van Jacobson

But..