Robots As Powerful Allies for the Study of Embodied Cognition from the Bottom Up, in A

Total pages: 16

File type: PDF, Size: 1020Kb

Recommended publications

Lower Body Design of the `Icub' a Human-Baby Like Crawling Robot

Lower Body Design of the ‘iCub’ a Human-baby like Crawling Robot

N.G. Tsagarakis and M. Sinclair (Center of Robotics and Automation, University of Salford, Salford, M5 4WT, UK); F. Becchi (TELEROBOT Advanced Robotics, 16128 Genova, Italy); G. Metta and G. Sandini (LIRA-Lab, DIST, University of Genova, 16145 Genova, Italy); D.G. Caldwell (Center of Robotics and Automation, University of Salford, Salford, M5 4WT, UK)

Abstract – The development of robotic cognition and a greater understanding of human cognition form two of the current greatest challenges of science. Within the RobotCub project the goal is the development of an embodied robotic child (iCub) with the physical and ultimately cognitive abilities of a 2 ½ year old human baby. The ultimate goal of this project is to provide the cognition research community with an open human like

human like robots has led to the development of H6 and H7 [2]. Within the commercial arena there were also robots of considerable distinction including those developed by HONDA. Their second prototype, P2, was introduced in 1996 and provided an important step forward in the development of full body humanoid systems [3]. P3 introduced in 1997 was a scaled down version of P2 [4]. ASIMO (Advanced Step in Innovative Mobility) a child sized robot appeared in 2000.

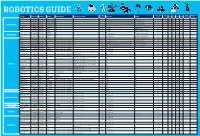

Robotics Section Guide for Web.Indd

ROBOTICS GUIDE

| Category | The Robot | Product Code | The Brand | Assembly Required | Soldering Required | Tools That May Be Helpful | Devices Req | Software | Teachers Notes Available / Early Years / KS1 / KS2 / KS3 / KS4 / Higher Education / Hobby/Home School |
|---|---|---|---|---|---|---|---|---|---|
| Ready to Go Robot | VEX 123! | 70-6250 | VEX | No | ✘ | | None | No | ✔ ✔ ✔ ✔ |
| Ready to Go Robot | Dot | 70-1101 | Wonder Workshop | No | ✘ | | iOS or Android phones/tablets. Apps are also available for Kindle. | Free apps to download | ✔ ✔ ✔ ✔ |
| Ready to Go Robot | Dash | 70-1100 | Wonder Workshop | No | ✘ | | iOS or Android phones/tablets. Apps are also available for Kindle. | Free apps to download | ✔ ✔ ✔ ✔ ✔ |
| Ready to Go Robot | Cue | 70-1108 | Wonder Workshop | No | ✘ | | iOS or Android phones/tablets. Apps are also available for Kindle. | Free apps to download | ✔ ✔ ✔ ✔ |
| Ready to Go Robot | Codey Rocky | 75-0516 | Makeblock | No | ✘ | | PC, Laptop | Free downloadable Mblock Software | ✔ ✔ ✔ ✔ |
| Ready to Go Robot | Ozobot | 70-8200 | Ozobot | No | ✘ | | PC, Laptop | Free downloads | ✔ ✔ ✔ ✔ |
| Ready to Go Robot | Ohbot | 76-0000 | Ohbot | No | ✘ | | PC, Laptop | Free downloads for Windows or Pi | ✔ ✔ ✔ ✔ |
| Humanoid Robots | NAO | 70-8893 | SoftBank Robotics | No | ✘ | | PC, Laptop or iPad | Choregraph - free to download | ✔ ✔ ✔ ✔ ✔ ✔ ✔ |
| Humanoid Robots | Pepper | 70-8870 | SoftBank Robotics | No | ✘ | | PC, Laptop or iPad | Choregraph - free to download | ✔ ✔ ✔ ✔ ✔ ✔ ✔ |
| | Ohbot | 76-0001 | Ohbot | Assembly is part of the fun & learning! | ✘ | | PC, Laptop | Free downloads for Windows or Pi | ✔ ✔ ✔ ✔ |
| | FABLE | 00-0408 | Shape Robotics | Assembly is part of the fun & learning! | ✘ | | PC, Laptop or iPad | Free Downloadable | ✔ ✔ ✔ ✔ |
| | VEX GO! | 70-6311 | VEX | Assembly is part of the fun & learning! | ✘ | | Windows, Mac, | | |

Behavior Trees in Robotics and AI

Michele Colledanchise and Petter Ögren

Behavior Trees in Robotics and AI: An Introduction

arXiv:1709.00084v4 [cs.RO] 3 Jun 2020

Contents

- 1 What are Behavior Trees?
  - 1.1 A Short History and Motivation of BTs
  - 1.2 What is wrong with FSMs? The Need for Reactiveness and Modularity
  - 1.3 Classical Formulation of BTs
    - 1.3.1 Execution Example of a BT
    - 1.3.2 Control Flow Nodes with Memory
  - 1.4 Creating a BT for Pac-Man from Scratch
  - 1.5 Creating a BT for a Mobile Manipulator Robot
  - 1.6 Use of BTs in Robotics and AI
    - 1.6.1 BTs in autonomous vehicles
    - 1.6.2 BTs in industrial robotics
    - 1.6.3 BTs in the Amazon Picking Challenge
    - 1.6.4 BTs inside the social robot JIBO
- 2 How Behavior Trees Generalize and Relate to Earlier Ideas
  - 2.1 Finite State Machines
    - 2.1.1 Advantages and disadvantages
  - 2.2 Hierarchical Finite State Machines
    - 2.2.1 Advantages and disadvantages
    - 2.2.2 Creating a FSM that works like a BTs
    - 2.2.3 Creating a BT that works like a FSM
  - 2.3 Subsumption Architecture
    - 2.3.1 Advantages and disadvantages
    - 2.3.2 How BTs Generalize the Subsumption Architecture
  - 2.4 Teleo-Reactive programs
    - 2.4.1 Advantages and disadvantages
    - 2.4.2 How BTs Generalize Teleo-Reactive Programs
  - 2.5 Decision Trees
    - 2.5.1 Advantages and disadvantages

Minding the Body Interacting Socially Through Embodied Action

Linköping Studies in Science and Technology, Dissertation No. 1112

Minding the Body: Interacting socially through embodied action, by Jessica Lindblom

Department of Computer and Information Science, Linköpings universitet, SE-581 83 Linköping, Sweden. Linköping 2007

© Jessica Lindblom 2007. Cover designed by Christine Olsson. ISBN 978-91-85831-48-7, ISSN 0345-7524. Printed by UniTryck, Linköping 2007

Abstract

This dissertation clarifies the role and relevance of the body in social interaction and cognition from an embodied cognitive science perspective. Theories of embodied cognition have during the past two decades offered a radical shift in explanations of the human mind, from traditional computationalism which considers cognition in terms of internal symbolic representations and computational processes, to emphasizing the way cognition is shaped by the body and its sensorimotor interaction with the surrounding social and material world. This thesis develops a framework for the embodied nature of social interaction and cognition, which is based on an interdisciplinary approach that ranges historically in time and across different disciplines. It includes work in cognitive science, artificial intelligence, phenomenology, ethology, developmental psychology, neuroscience, social psychology, linguistics, communication, and gesture studies. The theoretical framework presents a thorough and integrated understanding that supports and explains the embodied nature of social interaction and cognition. It is argued that embodiment is the part and parcel of social interaction and cognition in the most general and specific ways, in which dynamically embodied actions themselves have meaning and agency. The framework is illustrated by empirical work that provides some detailed observational fieldwork on embodied actions captured in three different episodes of spontaneous social interaction in situ.

Fetishism and the Culture of the Automobile

FETISHISM AND THE CULTURE OF THE AUTOMOBILE

James Duncan Mackintosh, B.A. (Hons.), Simon Fraser University, 1985

THESIS SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTER OF ARTS in the Department of Communication

© James Mackintosh 1990, SIMON FRASER UNIVERSITY, August 1990

All rights reserved. This work may not be reproduced in whole or in part, by photocopy or other means, without permission of the author.

APPROVAL

NAME: James Duncan Mackintosh

DEGREE: Master of Arts (Communication)

TITLE OF THESIS: Fetishism and the Culture of the Automobile

EXAMINING COMMITTEE: Chairman: Dr. William D. Richards, Jr.; Dr. Martin Laba, Associate Professor, Senior Supervisor; Dr. Alison C.M. Beale, Assistant Professor; Dr. Jerry Zaslove, Associate Professor, Department of English, External Examiner

DATE APPROVED: 20 August 1990

PARTIAL COPYRIGHT LICENCE

I hereby grant to Simon Fraser University the right to lend my thesis or dissertation (the title of which is shown below) to users of the Simon Fraser University Library, and to make partial or single copies only for such users or in response to a request from the library of any other university, or other educational institution, on its own behalf or for one of its users. I further agree that permission for multiple copying of this thesis for scholarly purposes may be granted by me or the Dean of Graduate Studies. It is understood that copying or publication of this thesis for financial gain shall not be allowed without my written permission.

Title of Thesis/Dissertation: Fetishism and the Culture of the Automobile. Author: James Duncan Mackintosh. Date: 20 August 1990

ABSTRACT

This thesis explores the notion of fetishism as an appropriate instrument of cultural criticism to investigate the rites and rituals surrounding the automobile.

Robot Mediated Communication

UNIVERSITY OF THE WEST OF ENGLAND

ROBOT MEDIATED COMMUNICATION: ENHANCING TELE-PRESENCE USING AN AVATAR

HAMZAH ZIYAAD HOSSEN MAMODE, 10/08/2015

A thesis submitted in partial fulfilment of the requirements of the University of the West of England, Bristol for the degree of Doctor of Philosophy. Faculty of Environment and Technology, University of the West of England, Bristol, August 2015

“If words of command are not clear and distinct, if orders are not thoroughly understood, then the general is to blame.” – Sun Tzu (c. 6th century BCE)

Declaration

I declare that the work in this dissertation was carried out in accordance with the requirements of the University's Regulations and Code of Practice for Research Degree Programmes and that it has not been submitted for any other academic award. Except where indicated by specific reference in the text, the work is the candidate's own work. Work done in collaboration with, or with the assistance of, others, is indicated as such. Any views expressed in the dissertation are those of the author. Signed: Date:

Abstract

In the past few years there has been a lot of development in the field of tele-presence. These developments have caused tele-presence technologies to become easily accessible and also for the experience to be enhanced. Since tele-presence is not only used for tele-presence assisted group meetings but also in some forms of Computer Supported Cooperative Work (CSCW), these activities have also been facilitated.

Lower Body Design of the 'Icub' a Human-Baby Like Crawling Robot

Lower Body Design of the ‘iCub’ a Human-baby like Crawling Robot

N.G. Tsagarakis and M. Sinclair (Center of Robotics and Automation, University of Salford, Salford, M5 4WT, UK); F. Becchi (TELEROBOT Advanced Robotics, 16128 Genova, Italy); G. Metta and G. Sandini (LIRA-Lab, DIST, University of Genova, 16145 Genova, Italy); D.G. Caldwell (Center of Robotics and Automation, University of Salford, Salford, M5 4WT, UK)

Abstract – The development of robotic cognition and a greater understanding of human cognition form two of the current greatest challenges of science. Within the RobotCub project the goal is the development of an embodied robotic child (iCub) with the physical and ultimately cognitive abilities of a 2 ½ year old human baby. The ultimate goal of this project is to provide the cognition research community with an open human like platform for understanding of cognitive systems through the study of cognitive development.

which weighs 131.4 kg forms a complete human like figure [1]. At the University of Tokyo, which also has a long history of humanoid development, research efforts on human like robots have led in recent times to the development of H6 and H7. H6 has a total of 35 degrees of freedom (D.O.F) and weighs 55 kg [2]. Within the commercial arena there were also robots of considerable distinction including those developed by HONDA. Their second prototype, P2, was

Design and Realization of a Humanoid Robot for Fast and Autonomous Bipedal Locomotion

TECHNISCHE UNIVERSITÄT MÜNCHEN, Institute of Applied Mechanics (Lehrstuhl für Angewandte Mechanik)

Design and Realization of a Humanoid Robot for Fast and Autonomous Bipedal Locomotion (German title: Entwurf und Realisierung eines Humanoiden Roboters für Schnelles und Autonomes Laufen)

Dipl.-Ing. Univ. Sebastian Lohmeier

Complete reprint of the dissertation approved by the Faculty of Mechanical Engineering of the Technische Universität München for the award of the academic degree of Doktor-Ingenieur (Dr.-Ing.).

Chair: Univ.-Prof. Dr.-Ing. Udo Lindemann. Examiners of the dissertation: 1. Univ.-Prof. Dr.-Ing. habil. Heinz Ulbrich; 2. Univ.-Prof. Dr.-Ing. Horst Baier

The dissertation was submitted to the Technische Universität München on 2 June 2010 and accepted by the Faculty of Mechanical Engineering on 21 October 2010.

Colophon

The original source for this thesis was edited in GNU Emacs and AUCTeX, typeset using pdfLaTeX in an automated process using GNU make, and output as PDF. The document was compiled with the LaTeX2ε class AMdiss (based on the KOMA-Script class scrreprt). AMdiss is part of the AMclasses bundle that was developed by the author for writing term papers, Diploma theses and dissertations at the Institute of Applied Mechanics, Technische Universität München. Photographs and CAD screenshots were processed and enhanced with GIMP. Most vector graphics were drawn with CorelDraw X3, exported as Encapsulated PostScript, and edited with psfrag to obtain high-quality labeling. Some smaller and text-heavy graphics (flowcharts, etc.), as well as diagrams, were created using PSTricks. The plot raw data were preprocessed with Matlab. In order to use the PostScript-based LaTeX packages with pdfLaTeX, a toolchain based on pst-pdf and Ghostscript was used.

History of Robotics: Timeline

History of Robotics: Timeline

This history of robotics is intertwined with the histories of technology, science and the basic principle of progress. Technology used in computing, electricity, even pneumatics and hydraulics can all be considered a part of the history of robotics. The timeline presented is therefore far from complete. Robotics currently represents one of mankind’s greatest accomplishments and is the single greatest attempt of mankind to produce an artificial, sentient being. It is only in recent years that manufacturers are making robotics increasingly available and attainable to the general public. The focus of this timeline is to provide the reader with a general overview of robotics (with a focus more on mobile robots) and to give an appreciation for the inventors and innovators in this field who have helped robotics to become what it is today.

RobotShop Distribution Inc., 2008. www.robotshop.ca www.robotshop.us

Greek Times

Some historians affirm that Talos, a giant creature written about in ancient Greek literature, was a creature (either a man or a bull) made of bronze, given by Zeus to Europa. [6] According to one version of the myths he was created in Sardinia by Hephaestus on Zeus' command, who gave him to the Cretan king Minos. In another version Talos came to Crete with Zeus to watch over his love Europa, and Minos received him as a gift from her. There are suppositions that his name Talos in the old Cretan language meant the "Sun" and that Zeus was known in Crete by the similar name of Zeus Tallaios.

The Routledge Handbook of Embodied Cognition

THE ROUTLEDGE HANDBOOK OF EMBODIED COGNITION

Embodied cognition is one of the foremost areas of study and research in philosophy of mind, philosophy of psychology and cognitive science. The Routledge Handbook of Embodied Cognition is an outstanding guide and reference source to the key philosophers, topics and debates in this exciting subject and essential reading for any student and scholar of philosophy of mind and cognitive science. Comprising over thirty chapters by a team of international contributors, the Handbook is divided into six parts:

- Historical underpinnings
- Perspectives on embodied cognition
- Applied embodied cognition: perception, language, and reasoning
- Applied embodied cognition: social and moral cognition and emotion
- Applied embodied cognition: memory, attention, and group cognition
- Meta-topics.

The early chapters of the Handbook cover empirical and philosophical foundations of embodied cognition, focusing on Gibsonian and phenomenological approaches. Subsequent chapters cover additional, important themes common to work in embodied cognition, including embedded, extended and enactive cognition as well as chapters on embodied cognition and empirical research in perception, language, reasoning, social and moral cognition, emotion, consciousness, memory, and learning and development.

Lawrence Shapiro is a professor in the Department of Philosophy, University of Wisconsin – Madison, USA. He has authored many articles spanning the range of philosophy of psychology. His most recent book, Embodied Cognition (Routledge, 2011), won the American Philosophical Association’s Joseph B. Gittler award in 2013.

Routledge Handbooks in Philosophy

Routledge Handbooks in Philosophy are state-of-the-art surveys of emerging, newly refreshed, and important fields in philosophy, providing accessible yet thorough assessments of key problems, themes, thinkers, and recent developments in research.

DAC-H3: a Proactive Robot Cognitive Architecture to Acquire and Express

Preprint version; final version available at http://ieeexplore.ieee.org/ IEEE Transactions on Cognitive and Developmental Systems (Accepted). DOI: 10.1109/TCDS.2017.2754143

DAC-h3: A Proactive Robot Cognitive Architecture to Acquire and Express Knowledge About the World and the Self

Clément Moulin-Frier*, Tobias Fischer*, Maxime Petit, Grégoire Pointeau, Jordi-Ysard Puigbo, Ugo Pattacini, Sock Ching Low, Daniel Camilleri, Phuong Nguyen, Matej Hoffmann, Hyung Jin Chang, Martina Zambelli, Anne-Laure Mealier, Andreas Damianou, Giorgio Metta, Tony J. Prescott, Yiannis Demiris, Peter Ford Dominey, and Paul F. M. J. Verschure

Abstract—This paper introduces a cognitive architecture for a humanoid robot to engage in a proactive, mixed-initiative exploration and manipulation of its environment, where the initiative can originate from both the human and the robot. The framework, based on a biologically-grounded theory of the brain and mind, integrates a reactive interaction engine, a number of state-of-the-art perceptual and motor learning algorithms, as well as planning abilities and an autobiographical memory. The architecture as a whole drives the robot behavior to solve the symbol grounding problem, acquire language capabilities, execute goal-oriented behavior, and express a verbal narrative of its own experience in the world.

I. INTRODUCTION

The so-called Symbol Grounding Problem (SGP, [1], [2], [3]) refers to the way in which a cognitive agent forms an internal and unified representation of an external word referent from the continuous flow of low-level sensorimotor data generated by its interaction with the environment. In this paper, we focus on solving the SGP in the context of human-robot interaction (HRI), where a humanoid iCub robot [4] acquires and expresses knowledge about the world by interacting with

Intelligence Without Representation: a Historical Perspective

Systems, Commentary

Intelligence without Representation: A Historical Perspective

Anna Jordanous, School of Computing, University of Kent, Chatham Maritime, Kent ME4 4AG, UK

Received: 30 June 2020; Accepted: 10 September 2020; Published: 15 September 2020

Abstract: This paper reflects on a seminal work in the history of AI and representation: Rodney Brooks’ 1991 paper Intelligence without representation. Brooks advocated the removal of explicit representations and engineered environments from the domain of his robotic intelligence experimentation, in favour of an evolutionary-inspired approach using layers of reactive behaviour that operated independently of each other. Brooks criticised the current progress in AI research and believed that removing complex representation from AI would help address problematic areas in modelling the mind. His belief was that we should develop artificial intelligence by being guided by the evolutionary development of our own intelligence and that his approach mirrored how our own intelligence functions. Thus, the field of behaviour-based robotics emerged. This paper offers a historical analysis of Brooks’ behaviour-based robotics approach and its impact on artificial intelligence and cognitive theory at the time, as well as on modern-day approaches to AI.

Keywords: behaviour-based robotics; representation; adaptive behaviour; evolutionary robotics

1. Introduction

In 1991, Rodney Brooks published the paper Intelligence without representation, a seminal work on behaviour-based robotics [1]. This paper influenced many aspects of research into artificial intelligence and cognitive theory. In Intelligence without representation, Brooks described his behaviour-based robotics approach to artificial intelligence. He highlighted a number of points that he considers fundamental in modelling intelligence.