Service Robots 7 Mouser Staff

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Remote and Autonomous Controlled Robotic Car Based on Arduino with Real Time Obstacle Detection and Avoidance

Universal Journal of Engineering Science 7(1): 1-7, 2019, http://www.hrpub.org, DOI: 10.13189/ujes.2019.070101

Esra Yılmaz, Sibel T. Özyer*
Department of Computer Engineering, Çankaya University, Turkey

Copyright ©2019 by authors, all rights reserved. Authors agree that this article remains permanently open access under the terms of the Creative Commons Attribution License 4.0 International License.

Abstract: In robotic car, real time obstacle detection and obstacle avoidance are significant issues. In this study, design and implementation of a robotic car have been presented with regards to hardware, software and communication environments with real time obstacle detection and obstacle avoidance. Arduino platform, android application and Bluetooth technology have been used to implementation of the system. In this paper, robotic car design and application with using sensor programming on a platform has been presented. This robotic device has been developed with the interaction of Android-based device.

[...] electronic devices. The environment can be any physical environment such as military areas, airports, factories, hospitals, shopping malls, and electronic devices can be smartphones, robots, tablets, smart clocks. These devices have a wide range of applications to control, protect, image and identification in the industrial process. Today, there are hundreds of types of sensors produced by the development of technology such as heat, pressure, obstacle recognizer, human detecting. Sensors were used for lighting purposes in the past, but now they are used to make life easier. Thanks to technology in the field of electronics, incredibly [...]

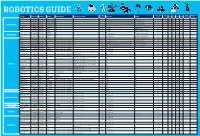

Robotics Section Guide for Web

ROBOTICS GUIDE

| Category | The Robot | Product Code | The Brand | Assembly Required | Soldering Required | Devices Req | Software | ✔ marks (Teachers Notes Available, Early Years, KS1-KS4, Higher Education, Hobby/Home School) |
|---|---|---|---|---|---|---|---|---|
| Ready to Go Robot | VEX 123! | 70-6250 | VEX | No | ✘ | None | No | ✔ ✔ ✔ ✔ |
| Ready to Go Robot | Dot | 70-1101 | Wonder Workshop | No | ✘ | iOS or Android phones/tablets. Apps are also available for Kindle. | Free apps to download | ✔ ✔ ✔ ✔ |
| Ready to Go Robot | Dash | 70-1100 | Wonder Workshop | No | ✘ | iOS or Android phones/tablets. Apps are also available for Kindle. | Free apps to download | ✔ ✔ ✔ ✔ ✔ |
| Ready to Go Robot | Cue | 70-1108 | Wonder Workshop | No | ✘ | iOS or Android phones/tablets. Apps are also available for Kindle. | Free apps to download | ✔ ✔ ✔ ✔ |
| Ready to Go Robot | Codey Rocky | 75-0516 | Makeblock | No | ✘ | PC, Laptop | Free downloadable mBlock software | ✔ ✔ ✔ ✔ |
| Ready to Go Robot | Ozobot | 70-8200 | Ozobot | No | ✘ | PC, Laptop | Free downloads | ✔ ✔ ✔ ✔ |
| Ready to Go Robot | Ohbot | 76-0000 | Ohbot | No | ✘ | PC, Laptop | Free downloads for Windows or Pi | ✔ ✔ ✔ ✔ |
| Humanoid Robots | NAO | 70-8893 | SoftBank Robotics | No | ✘ | PC, Laptop or iPad | Choregraphe - free to download | ✔ ✔ ✔ ✔ ✔ ✔ ✔ |
| Humanoid Robots | Pepper | 70-8870 | SoftBank Robotics | No | ✘ | PC, Laptop or iPad | Choregraphe - free to download | ✔ ✔ ✔ ✔ ✔ ✔ ✔ |
| | Ohbot | 76-0001 | Ohbot | Assembly is part of the fun & learning! | ✘ | PC, Laptop | Free downloads for Windows or Pi | ✔ ✔ ✔ ✔ |
| | FABLE | 00-0408 | Shape Robotics | Assembly is part of the fun & learning! | ✘ | PC, Laptop or iPad | Free downloadable | ✔ ✔ ✔ ✔ |
| | VEX GO! | 70-6311 | VEX | Assembly is part of the fun & learning! | ✘ | Windows, Mac, [...] | | |

Executive Summary World Robotics 2019 Service Robots 11

Executive Summary World Robotics 2019 Service Robots

Service robotics encompasses a broad field of applications, most of which have unique designs and different degrees of automation, from full tele-operation to fully autonomous operation. Hence, the industry is more diverse than the industrial robot industry (which is extensively treated in the companion publication World Robotics – Industrial Robots 2019). IFR Statistical Department is currently aware of more than 750 companies producing service robots or doing commercial research for marketable products. A full list is included in chapter 4 of World Robotics Service Robots 2019. IFR Statistical Department is continuously seeking new service robot producers, so please contact [email protected] if you represent such a company and would like to participate in the annual survey conducted in the first quarter of each year. Participants receive the survey results free of charge.

Sales of professional service robots on the rise

The total number of professional service robots sold in 2018 rose by 61% to more than 271,000 units, up from roughly 168,000 in 2017. The sales value increased by 32% to USD 9.2 billion. Autonomous guided vehicles (AGVs) represent the largest fraction in the professional service robot market (41% of all units sold). They are established in non-manufacturing environments, mainly logistics, and have lots of potential in manufacturing. The second largest category (39% of all units sold), inspection and maintenance robots, covers a wide range of robots from rather low-priced standard units to expensive custom solutions. Service robots for defense applications accounted for 5% of the total number of service robots for professional use sold in 2018.

Fetishism and the Culture of the Automobile

FETISHISM AND THE CULTURE OF THE AUTOMOBILE

James Duncan Mackintosh
B.A. (Hons.), Simon Fraser University, 1985

THESIS SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTER OF ARTS in the Department of Communication

© James Mackintosh 1990
SIMON FRASER UNIVERSITY
August 1990

All rights reserved. This work may not be reproduced in whole or in part, by photocopy or other means, without permission of the author.

APPROVAL

NAME: James Duncan Mackintosh
DEGREE: Master of Arts (Communication)
TITLE OF THESIS: Fetishism and the Culture of the Automobile
EXAMINING COMMITTEE:
Chairman: Dr. William D. Richards, Jr.
Dr. Martin Laba, Associate Professor, Senior Supervisor
Dr. Alison C.M. Beale, Assistant Professor
Dr. Jerry Zaslove, Associate Professor, Department of English, External Examiner
DATE APPROVED: 20 August 1990

PARTIAL COPYRIGHT LICENCE

I hereby grant to Simon Fraser University the right to lend my thesis or dissertation (the title of which is shown below) to users of the Simon Fraser University Library, and to make partial or single copies only for such users or in response to a request from the library of any other university, or other educational institution, on its own behalf or for one of its users. I further agree that permission for multiple copying of this thesis for scholarly purposes may be granted by me or the Dean of Graduate Studies. It is understood that copying or publication of this thesis for financial gain shall not be allowed without my written permission.

Title of Thesis/Dissertation: Fetishism and the Culture of the Automobile
Author: James Duncan Mackintosh
Date: 20 August 1990

ABSTRACT

This thesis explores the notion of fetishism as an appropriate instrument of cultural criticism to investigate the rites and rituals surrounding the automobile.

Hospitality Robots at Your Service WHITEPAPER

WHITEPAPER: Hospitality Robots At Your Service (roboticsbusinessreview.com)

TABLE OF CONTENTS
THE SERVICE ROBOT MARKET
EXAMPLES OF SERVICE ROBOTS IN THE HOSPITALITY SPACE
IN DEPTH WITH SAVIOKE'S HOSPITALITY ROBOTS
PEPPER PROVIDES FRIENDLY, FUN CUSTOMER ASSISTANCE
SANBOT'S HOSPITALITY ROBOTS AIM FOR HOTELS, BANKING
EXPECT MORE ROBOTS DOING SERVICE WORK

MOBILE AND HUMANOID ROBOTS INTERACT WITH CUSTOMERS ACROSS THE HOSPITALITY SPACE

Improvements in mobility, autonomy and software drive growth in robots that can provide better service for customers and guests in the hospitality space

By Ed O'Brien

Across the business landscape, robots have entered many different industries, and the service market is no different. With several applications in the hospitality, restaurant, and healthcare markets, new types of service robots are making life easier for customers and employees. For example, mobile robots can now make deliveries in a hotel, move materials in a hospital, provide security patrols on large campuses, take inventories or interact with retail customers. They offer expanded capabilities that can largely remove humans from having to perform repetitive, tedious, and often unwanted tasks.

Companies designing and manufacturing such robots are offering unique approaches to customer service, providing systems to help fill in areas where labor shortages are prevalent, and creating increased revenues by offering new delivery channels, literally and figuratively. However, businesses looking to use these new robots need to be mindful of reviewing the underlying demand to ensure that such investments make sense in the long run.

In this report, we will review the different types of robots aimed at providing hospitality services, their various missions, and expectations for growth in the near-to-immediate future.

How Humanoid Robots Influence Service Experiences and Food Consumption

Marketing Science Institute Working Paper Series 2017, Report No. 17-125

Service Robots Rising: How Humanoid Robots Influence Service Experiences and Food Consumption

Martin Mende, Maura L. Scott, Jenny van Doorn, Ilana Shanks, and Dhruv Grewal

"Service Robots Rising: How Humanoid Robots Influence Service Experiences and Food Consumption" © 2017 Martin Mende, Maura L. Scott, Jenny van Doorn, Ilana Shanks, and Dhruv Grewal; Report Summary © 2017 Marketing Science Institute

MSI working papers are distributed for the benefit of MSI corporate and academic members [...] means, electronic or mechanical, without written permission.

Report Summary

Interactions between consumers and humanoid service robots (i.e., robots with a human-like morphology such as a face, arms, and legs) soon will be part of routine marketplace experiences, and represent a primary arena for innovation in services and shopper marketing. At the same time, it is not clear whether humanoid robots, relative to human employees, trigger positive or negative consequences for consumers and companies. Although creating robots that appear as much like humans as possible is the "holy grail" in robotics, there is a risk that consumers will respond negatively to highly human-like robots, due to the feelings of discomfort that such robots can evoke.

Here, Martin Mende, Maura Scott, Jenny van Doorn, Ilana Shanks, and Dhruv Grewal investigate whether humanoid service robots (HSRs) trigger discomfort and what the consequences might be for customers' service experiences. They focus on the effects of HSRs in a food consumption context. Six experimental studies, conducted in the context of restaurant services, reveal that consumers report lower assessments of the server when their food is served by a humanoid service robot than by a human server, but their desire for food and their actual food consumption increase.

Special Feature on Advanced Mobile Robotics

Applied Sciences, Editorial

Special Feature on Advanced Mobile Robotics

DaeEun Kim
School of Electrical and Electronic Engineering, Yonsei University, Shinchon, Seoul 03722, Korea; [email protected]

Received: 29 October 2019; Accepted: 31 October 2019; Published: 4 November 2019

1. Introduction

Mobile robots and their applications involve many research fields, including electrical engineering, mechanical engineering, computer science, artificial intelligence and cognitive science. Mobile robots are widely used for transportation, surveillance, inspection, interaction with humans, medical systems and entertainment. This Special Issue covers recent developments in mobile robots and their research, and it will help find or enhance the principles of robotics and practical applications in the real world. The Special Issue is intended to be a collection of multidisciplinary work in the field of mobile robotics. Various approaches and integrative contributions are introduced through this Special Issue. Motion control of mobile robots, aerial robots/vehicles, robot navigation, localization and mapping, robot vision and 3D sensing, networked robots, swarm robotics, biologically-inspired robotics, learning and adaptation in robotics, human-robot interaction and control systems for industrial robots are covered.

2. Advanced Mobile Robotics

This Special Issue includes a variety of research fields related to mobile robotics. Initially, multi-agent robots or multi-robots are introduced, covering cooperation of multi-agent robots and formation control. Trajectory planning methods and applications are listed. Robot navigation has been studied as a classical robot application, and autonomous navigation examples are demonstrated. Then service robots are introduced in the context of human-robot interaction. Furthermore, unmanned aerial vehicles (UAVs) and autonomous underwater vehicles (AUVs) are shown for autonomous navigation or map building.

Concept and Functional Structure of a Service Robot

International Journal of Advanced Robotic Systems, ARTICLE (Regular Paper)

Concept and Functional Structure of a Service Robot

Luis A. Pineda1*, Arturo Rodríguez1, Gibran Fuentes1, Caleb Rascon1 and Ivan V. Meza1
1 National Autonomous University of Mexico, Mexico
* Corresponding author(s) E-mail: [email protected]

Received 13 June 2014; Accepted 28 November 2014
DOI: 10.5772/60026

© 2015 The Author(s). Licensee InTech. This is an open access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/3.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

In this paper, we present a concept of service robot and a framework for its functional specification and implementation. The present discussion is grounded in Newell's system levels hierarchy which suggests organizing robotics research in three different layers, corresponding to Marr's computational, algorithmic and implementation levels, as follows: (1) the service robot proper, which is the subject of the present paper, (2) perception and action algorithms, and (3) the systems programming level. The concept of a service robot is articulated in practice through the introduction of a conceptual model for particular service robots; this consists of the specification of a set of basic robotic [...] reflection upon the present concept of a service robot and its associated functional specifications, and the potential impact of such a conceptual model in the study, development and application of service robots in general.

Keywords: Concept of a service robot, Service robots system levels, Conceptual model of service robots, Robotics' task structure, Practical tasks, Structure of a general purpose service robot, RoboCup@Home competition, The Golem-II+ robot

1. [...]

Prototype for Quadruped Robot Using IoT to Deliver Medicines and Essentials to Covid-19 Patient

International Journal of Advanced Research in Engineering and Technology (IJARET), Volume 12, Issue 5, May 2021, pp. 147-155, Article ID: IJARET_12_05_014
Available online at https://iaeme.com/Home/issue/IJARET?Volume=12&Issue=5
ISSN Print: 0976-6480 and ISSN Online: 0976-6499
DOI: 10.34218/IJARET.12.5.2021.014
© IAEME Publication, Scopus Indexed

PROTOTYPE FOR QUADRUPED ROBOT USING IoT TO DELIVER MEDICINES AND ESSENTIALS TO COVID-19 PATIENT

Anita Chaudhari, Jeet Thakur and Pratiksha Mhatre
Department of Information Technology, St. John College of Engineering and Management, Palghar, Maharashtra, India

ABSTRACT

The Wheeled robots cannot work properly on the rocky surface or uneven surface. They consume a lot of power and struggle when they go on rocky surface. To tackle the disadvantages of wheeled robot we replaced the wheels like shaped legs with the spider legs or spider arms. Quadruped robot has complicated moving patterns and it can also move on surfaces where wheeled shaped robots would fail. They can easily walk on rocky or uneven surface. The main purpose of the project is to develop a consistent platform that enables the implementation of steady and fast static/dynamic walking on ground/Platform to deliver medicines to covid-19 patients. The main advantage of spider robot also called as quadruped robot is that it is Bluetooth controlled robot, through the android application, we can control robot from anywhere; it avoids the obstacle using ultrasonic sensor.

Key words: Bluetooth module, Quadruped, Rough-terrain robots.

Cite this Article: Anita Chaudhari, Jeet Thakur and Pratiksha Mhatre, Prototype for Quadruped Robot using IoT to Deliver Medicines and Essentials to Covid-19 Patient, International Journal of Advanced Research in Engineering and Technology, 12(5), 2021, pp. [...]

Lio - a Personal Robot Assistant for Human-Robot Interaction and Care Applications

Lio - A Personal Robot Assistant for Human-Robot Interaction and Care Applications

Justinas Mišeikis, Pietro Caroni, Patricia Duchamp, Alina Gasser, Rastislav Marko, Nelija Mišeikienė, Frederik Zwilling, Charles de Castelbajac, Lucas Eicher, Michael Früh, Hansruedi Früh

Abstract: Lio is a mobile robot platform with a multi-functional arm explicitly designed for human-robot interaction and personal care assistant tasks. The robot has already been deployed in several health care facilities, where it is functioning autonomously, assisting staff and patients on an everyday basis. Lio is intrinsically safe by having full coverage in soft artificial-leather material as well as having collision detection, limited speed and forces. Furthermore, the robot has a compliant motion controller. A combination of visual, audio, laser, ultrasound and mechanical sensors are used for safe navigation and environment understanding. The ROS-enabled setup allows researchers to access raw sensor data as well as have direct control of the robot. The friendly appearance of Lio has resulted in the robot being well accepted by health care staff and patients.

[...] careers [4]. A possible staff shortage of 500'000 healthcare employees is estimated in Europe by the year of 2030 [5].

Care robotics is not an entirely new field. There has been significant development in this direction. One of the most known robots is Pepper by SoftBank Robotics, which was created for interaction and entertainment tasks. It is capable of voice interactions with humans, face and mood recognition. In the healthcare sector Pepper is used for interaction with dementia patients [6].

Another example is the robot RIBA by RIKEN. It is designed to carry around patients. The robot is capable of localising a voice source and lifting patients weighing up to [...]

Service Robots Are Increasingly Broadening Our Horizons Beyond the Factory Floor

Toward a Science of Autonomy for Physical Systems: Service

Peter Allen, [email protected], Columbia University
Henrik I. Christensen, [email protected], Georgia Institute of Technology

Computing Community Consortium
Version 1: June 23, 2015

1 Overview

A recent study by the Robotic Industries Association [2] has highlighted how service robots are increasingly broadening our horizons beyond the factory floor. From robotic vacuums, bomb retrievers, exoskeletons and drones, to robots used in surgery, space exploration, agriculture, home assistance and construction, service robots are building a formidable resume. In just the last few years we have seen service robots deliver room service meals, assist shoppers in finding items in a large home improvement store, check in customers and store their luggage at hotels, and pour drinks on cruise ships. Personal robots are here to educate, assist and entertain at home. These domestic robots can perform daily chores, assist people with disabilities and serve as companions or pets for entertainment [1]. By all accounts, the growth potential for service robotics is quite large [2, 3].

Due to their multitude of forms and structures as well as application areas, service robots are not easy to define. The International Federation of Robotics (IFR) has created some preliminary definitions of service robots [5]. A service robot is a robot that performs useful tasks for humans or equipment excluding industrial automation applications. Some examples are domestic servant robots, automated wheelchairs, and personal mobility assist robots. A service robot for professional use is a service robot used for a commercial task, usually operated by a properly trained operator.

Development of an Android App for the Sanbot Robot (Sviluppo di un'app Android per il robot Sanbot)

Università degli Studi di Padova (University of Padua)
Department of Mathematics "Tullio Levi-Civita"
Bachelor's Degree Programme in Computer Science

Development of an Android App for the Sanbot Robot
Bachelor's thesis

Supervisor: Prof. Mauro Conti
Candidate: Nicolae Andrei Tabacariu
Academic Year 2017-2018

Nicolae Andrei Tabacariu: Development of an Android App for the Sanbot Robot, Bachelor's thesis, © September 2018.

"Life is really simple, but we insist on making it complicated" (Confucius)

Acknowledgements

First of all, I would like to express my gratitude to Prof. Mauro Conti, my thesis supervisor, for the help and support he gave me during the internship and the writing of this document.

I would like to warmly thank my family and my girlfriend Erica, who supported me financially and emotionally through these years of study; without them I would probably never have reached the end of this path.

I would also like to thank the friends and classmates who accompanied me through these years and gave me support and affection.

Finally, I extend my thanks to everyone at Omitech S.r.l. for the opportunity to work with them and for the respect and cordiality with which they treated me.

Padua, September 2018
Nicolae Andrei Tabacariu

Contents

1 Introduction
1.1 The company
1.2 The internship project
1.3 Organization of the contents
2 Description of the internship
2.1 The internship project
2.1.1 Risk analysis
2.1.2 Objectives set
2.1.3 Work planning
2.1.4 Interaction with the tutors