Android Science - Toward a New Cross-Interdisciplinary Framework

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Robotic Pets in Human Lives: Implications for the Human–Animal Bond and for Human Relationships with Personified Technologies

Journal of Social Issues, Vol. 65, No. 3, 2009, pp. 545–567 Robotic Pets in Human Lives: Implications for the Human–Animal Bond and for Human Relationships with Personified Technologies ∗ Gail F. Melson (Purdue University), Peter H. Kahn, Jr. (University of Washington), Alan Beck (Purdue University), Batya Friedman (University of Washington) Robotic “pets” are being marketed as social companions and are used in the emerging field of robot-assisted activities, including robot-assisted therapy (RAA). However, the limits to and potential of robotic analogues of living animals as social and therapeutic partners remain unclear. Do children and adults view robotic pets as “animal-like,” “machine-like,” or some combination of both? How do social behaviors differ toward a robotic versus living dog? To address these issues, we synthesized data from three studies of the robotic dog AIBO: (1) a content analysis of 6,438 Internet postings by 182 adult AIBO owners; (2) observations ∗ Correspondence concerning this article should be addressed to Gail F. Melson, Department of CDFS, 101 Gates Road, Purdue University, West Lafayette, IN 47907-20202 [e-mail: [email protected]]. We thank Brian Gill for assistance with statistical analyses. We also thank the following individuals (in alphabetical order) for assistance with data collection, transcript preparation, and coding: Jocelyne Albert, Nathan Freier, Erik Garrett, Oana Georgescu, Brian Gilbert, Jennifer Hagman, Migume Inoue, and Trace Roberts. This material is based on work supported by the National Science Foundation under Grant No. IIS-0102558 and IIS-0325035. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation. -

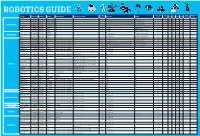

Robotics Section Guide for Web.Indd

ROBOTICS GUIDE Soldering Teachers Notes Early Higher Hobby/Home The Robot Product Code Description The Brand Assembly Required Tools That May Be Helpful Devices Req Software KS1 KS2 KS3 KS4 Required Available Years Education School VEX 123! 70-6250 CLICK HERE VEX No ✘ None No ✔ ✔ ✔ ✔ Dot 70-1101 CLICK HERE Wonder Workshop No ✘ iOS or Android phones/tablets. Apps are also available for Kindle. Free Apps to download ✔ ✔ ✔ ✔ Dash 70-1100 CLICK HERE Wonder Workshop No ✘ iOS or Android phones/tablets. Apps are also available for Kindle. Free Apps to download ✔ ✔ ✔ ✔ ✔ Ready to Go Robot Cue 70-1108 CLICK HERE Wonder Workshop No ✘ iOS or Android phones/tablets. Apps are also available for Kindle. Free Apps to download ✔ ✔ ✔ ✔ Codey Rocky 75-0516 CLICK HERE Makeblock No ✘ PC, Laptop Free downloadb ale Mblock Software ✔ ✔ ✔ ✔ Ozobot 70-8200 CLICK HERE Ozobot No ✘ PC, Laptop Free downloads ✔ ✔ ✔ ✔ Ohbot 76-0000 CLICK HERE Ohbot No ✘ PC, Laptop Free downloads for Windows or Pi ✔ ✔ ✔ ✔ SoftBank NAO 70-8893 CLICK HERE No ✘ PC, Laptop or Ipad Choregraph - free to download ✔ ✔ ✔ ✔ ✔ ✔ ✔ Robotics Humanoid Robots SoftBank Pepper 70-8870 CLICK HERE No ✘ PC, Laptop or Ipad Choregraph - free to download ✔ ✔ ✔ ✔ ✔ ✔ ✔ Robotics Ohbot 76-0001 CLICK HERE Ohbot Assembly is part of the fun & learning! ✘ PC, Laptop Free downloads for Windows or Pi ✔ ✔ ✔ ✔ FABLE 00-0408 CLICK HERE Shape Robotics Assembly is part of the fun & learning! ✘ PC, Laptop or Ipad Free Downloadable ✔ ✔ ✔ ✔ VEX GO! 70-6311 CLICK HERE VEX Assembly is part of the fun & learning! ✘ Windows, Mac, -

Cybernetic Human HRP-4C: a Humanoid Robot with Human-Like Proportions

Cybernetic Human HRP-4C: A humanoid robot with human-like proportions Shuuji KAJITA, Kenji KANEKO, Fumio KANEIRO, Kensuke HARADA, Mitsuharu MORISAWA, Shin’ichiro NAKAOKA, Kanako MIURA, Kiyoshi FUJIWARA, Ee Sian NEO, Isao HARA, Kazuhito YOKOI, Hirohisa HIRUKAWA Abstract Cybernetic human HRP-4C is a humanoid robot whose body dimensions were designed to match the average Japanese young female. In this paper, we explain the aim of the development, the realization of human-like shape and dimensions, and research to realize human-like motion and interactions using speech recognition. 1 Introduction Cybernetics studies the dynamics of information as a common principle of complex systems which have goals or purposes. The systems can be machines, animals or social systems; therefore, cybernetics is multidisciplinary by nature. Since Norbert Wiener advocated the concept in his book in 1948 [1], the term has spread widely into academic and pop culture. At present, cybernetics has diverged into robotics, control theory, artificial intelligence and many other research fields; however, the original unified concept has not yet lost its glory. Robotics is one of the biggest streams that branched out from cybernetics, and its goal is to create a useful system by combining mechanical devices with information technology. From a practical point of view, a robot does not have to be humanoid; nevertheless we believe the concept of cybernetics can justify the research of humanoid robots for it can be an effective hub of multidisciplinary research. WABOT-1, -

Fetishism and the Culture of the Automobile

FETISHISM AND THE CULTURE OF THE AUTOMOBILE James Duncan Mackintosh B.A.(hons.), Simon Fraser University, 1985 THESIS SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTER OF ARTS in the Department of Communication © James Mackintosh 1990 SIMON FRASER UNIVERSITY August 1990 All rights reserved. This work may not be reproduced in whole or in part, by photocopy or other means, without permission of the author. APPROVAL NAME: James Duncan Mackintosh DEGREE: Master of Arts (Communication) TITLE OF THESIS: Fetishism and the Culture of the Automobile EXAMINING COMMITTEE: Chairman: Dr. William D. Richards, Jr. Dr. Martin Laba, Associate Professor, Senior Supervisor Dr. Alison C.M. Beale, Assistant Professor Dr. Jerry Zaslove, Associate Professor, Department of English, External Examiner DATE APPROVED: 20 August 1990 PARTIAL COPYRIGHT LICENCE I hereby grant to Simon Fraser University the right to lend my thesis or dissertation (the title of which is shown below) to users of the Simon Fraser University Library, and to make partial or single copies only for such users or in response to a request from the library of any other university, or other educational institution, on its own behalf or for one of its users. I further agree that permission for multiple copying of this thesis for scholarly purposes may be granted by me or the Dean of Graduate Studies. It is understood that copying or publication of this thesis for financial gain shall not be allowed without my written permission. Title of Thesis/Dissertation: Fetishism and the Culture of the Automobile. Author: James Duncan Mackintosh (name) 20 August 1990 (date) ABSTRACT This thesis explores the notion of fetishism as an appropriate instrument of cultural criticism to investigate the rites and rituals surrounding the automobile. -

Hospitality Robots at Your Service WHITEPAPER

WHITEPAPER Hospitality Robots At Your Service TABLE OF CONTENTS: THE SERVICE ROBOT MARKET; EXAMPLES OF SERVICE ROBOTS IN THE HOSPITALITY SPACE; IN DEPTH WITH SAVIOKE’S HOSPITALITY ROBOTS; PEPPER PROVIDES FRIENDLY, FUN CUSTOMER ASSISTANCE; SANBOT’S HOSPITALITY ROBOTS AIM FOR HOTELS, BANKING; EXPECT MORE ROBOTS DOING SERVICE WORK roboticsbusinessreview.com MOBILE AND HUMANOID ROBOTS INTERACT WITH CUSTOMERS ACROSS THE HOSPITALITY SPACE Improvements in mobility, autonomy and software drive growth in robots that can provide better service for customers and guests in the hospitality space By Ed O’Brien Across the business landscape, robots have entered many different industries, and the service market is no different. With several applications in the hospitality, restaurant, and healthcare markets, new types of service robots are making life easier for customers and employees. For example, mobile robots can now make deliveries in a hotel, move materials in a hospital, provide security patrols on large campuses, take inventories or interact with retail customers. They offer expanded capabilities that can largely relieve humans of repetitive, tedious, and often unwanted tasks. Companies designing and manufacturing such robots are offering unique approaches to customer service, providing systems to help fill in areas where labor shortages are prevalent, and creating increased revenues by offering new delivery channels, literally and figuratively. However, businesses looking to use these new robots need to be mindful of reviewing the underlying demand to ensure that such investments make sense in the long run. In this report, we will review the different types of robots aimed at providing hospitality services, their various missions, and expectations for growth in the near-to-immediate future. -

PETMAN: a Humanoid Robot for Testing Chemical Protective Clothing

Journal of the Robotics Society of Japan (日本ロボット学会誌), Vol. 30 No. 4, pp. 372–377, 2012, Review article PETMAN: A Humanoid Robot for Testing Chemical Protective Clothing Gabe Nelson∗, Aaron Saunders∗, Neil Neville∗, Ben Swilling∗, Joe Bondaryk∗, Devin Billings∗, Chris Lee∗, Robert Playter∗ and Marc Raibert∗ ∗Boston Dynamics 1. Introduction Petman is an anthropomorphic robot designed to test chemical protective clothing (Fig. 1). Petman will test Individual Protective Equipment (IPE) in an environmentally controlled test chamber, where it will be exposed to chemical agents as it walks and does basic calisthenics. Chemical sensors embedded in the skin of the robot will measure if, when and where chemical agents are detected within the suit. The robot will perform its tests in a chamber under controlled temperature and wind conditions. A treadmill and turntable integrated into the wind tunnel chamber allow for sustained walking experiments that can be oriented relative to the wind. Petman’s skin is temperature controlled and even sweats in order to simulate physiologic conditions within the suit. When the robot is performing tests, a loose fitting Intelligent Safety Harness (ISH) will be present to support or catch and restart the robot should it lose balance or suffer a mechanical failure. The integrated system: the robot, chamber, treadmill/turntable, ISH and electrical, mechanical and software systems for testing IPE is called the Individual Protective Ensemble Mannequin System (Fig. 2) and is being built by a team of organizations.† [Fig. 1: The Petman robot walking on a treadmill] In 2009 when we began the design of Petman, there where the external fixture attaches to the robot. -

Modelling User Preference for Embodied Artificial Intelligence and Appearance in Realistic Humanoid Robots

Article Modelling User Preference for Embodied Artificial Intelligence and Appearance in Realistic Humanoid Robots Carl Strathearn 1,* and Minhua Ma 2,* 1 School of Computing and Digital Technologies, Staffordshire University, Staffordshire ST4 2DE, UK 2 Falmouth University, Penryn Campus, Penryn TR10 9FE, UK * Correspondence: [email protected] (C.S.); [email protected] (M.M.) Received: 29 June 2020; Accepted: 29 July 2020; Published: 31 July 2020 Abstract: Realistic humanoid robots (RHRs) with embodied artificial intelligence (EAI) have numerous applications in society as the human face is the most natural interface for communication and the human body the most effective form for traversing the manmade areas of the planet. Thus, developing RHRs with high degrees of human-likeness provides a life-like vessel for humans to physically and naturally interact with technology in a manner insurmountable to any other form of non-biological human emulation. This study outlines a human–robot interaction (HRI) experiment employing two automated RHRs with a contrasting appearance and personality. The selective sample group employed in this study is composed of 20 individuals, categorised by age and gender for a diverse statistical analysis. Galvanic skin response, facial expression analysis, and AI analytics permitted cross-analysis of biometric and AI data with participant testimonies to reify the results. This study concludes that younger test subjects preferred HRI with a younger-looking RHR and the more senior age group with an older looking RHR. Moreover, the female test group preferred HRI with an RHR with a younger appearance and male subjects with an older looking RHR. -

Design and Realization of a Humanoid Robot for Fast and Autonomous Bipedal Locomotion

TECHNISCHE UNIVERSITÄT MÜNCHEN Lehrstuhl für Angewandte Mechanik (Institute of Applied Mechanics) Design and Realization of a Humanoid Robot for Fast and Autonomous Bipedal Locomotion (German title: Entwurf und Realisierung eines Humanoiden Roboters für Schnelles und Autonomes Laufen) Dipl.-Ing. Univ. Sebastian Lohmeier Complete reprint of the dissertation approved by the Faculty of Mechanical Engineering of the Technische Universität München for the award of the academic degree of Doktor-Ingenieur (Dr.-Ing.). Chair: Univ.-Prof. Dr.-Ing. Udo Lindemann Examiners of the dissertation: 1. Univ.-Prof. Dr.-Ing. habil. Heinz Ulbrich 2. Univ.-Prof. Dr.-Ing. Horst Baier The dissertation was submitted to the Technische Universität München on 2 June 2010 and accepted by the Faculty of Mechanical Engineering on 21 October 2010. Colophon The original source for this thesis was edited in GNU Emacs and AUCTeX, typeset using pdfLaTeX in an automated process using GNU make, and output as PDF. The document was compiled with the LaTeX2e class AMdiss (based on the KOMA-Script class scrreprt). AMdiss is part of the AMclasses bundle that was developed by the author for writing term papers, Diploma theses and dissertations at the Institute of Applied Mechanics, Technische Universität München. Photographs and CAD screenshots were processed and enhanced with THE GIMP. Most vector graphics were drawn with CorelDraw X3, exported as Encapsulated PostScript, and edited with psfrag to obtain high-quality labeling. Some smaller and text-heavy graphics (flowcharts, etc.), as well as diagrams were created using PSTricks. The plot raw data were preprocessed with Matlab. In order to use the PostScript-based LaTeX packages with pdfLaTeX, a toolchain based on pst-pdf and Ghostscript was used. -

History of Robotics: Timeline

History of Robotics: Timeline The history of robotics is intertwined with the histories of technology, science and the basic principle of progress. Technology used in computing, electricity, even pneumatics and hydraulics can all be considered a part of the history of robotics. The timeline presented is therefore far from complete. Robotics currently represents one of mankind’s greatest accomplishments and is the single greatest attempt of mankind to produce an artificial, sentient being. Only in recent years have manufacturers made robotics increasingly available and attainable to the general public. The focus of this timeline is to provide the reader with a general overview of robotics (with a focus more on mobile robots) and to give an appreciation for the inventors and innovators in this field who have helped robotics to become what it is today. RobotShop Distribution Inc., 2008 www.robotshop.ca www.robotshop.us Greek Times Some historians affirm that Talos, a giant creature written about in ancient Greek literature, was a creature (either a man or a bull) made of bronze, given by Zeus to Europa. [6] According to one version of the myths he was created in Sardinia by Hephaestus on Zeus' command, who gave him to the Cretan king Minos. In another version Talos came to Crete with Zeus to watch over his love Europa, and Minos received him as a gift from her. There are suppositions that his name Talos in the old Cretan language meant the "Sun" and that Zeus was known in Crete by the similar name of Zeus Tallaios. -

Theory of Mind for a Humanoid Robot

Theory of Mind for a Humanoid Robot Brian Scassellati MIT Artificial Intelligence Lab 545 Technology Square – Room 938 Cambridge, MA 02139 USA [email protected] http://www.ai.mit.edu/people/scaz/ Abstract. If we are to build human-like robots that can interact naturally with people, our robots must know not only about the properties of objects but also the properties of animate agents in the world. One of the fundamental social skills for humans is the attribution of beliefs, goals, and desires to other people. This set of skills has often been called a “theory of mind.” This paper presents the theories of Leslie [27] and Baron-Cohen [2] on the development of theory of mind in human children and discusses the potential application of both of these theories to building robots with similar capabilities. Initial implementation details and basic skills (such as finding faces and eyes and distinguishing animate from inanimate stimuli) are introduced. I further speculate on the usefulness of a robotic implementation in evaluating and comparing these two models. 1 Introduction Human social dynamics rely upon the ability to correctly attribute beliefs, goals, and percepts to other people. This set of metarepresentational abilities, which have been collectively called a “theory of mind” or the ability to “mentalize”, allows us to understand the actions and expressions of others within an intentional or goal-directed framework (what Dennett [15] has called the intentional stance). The recognition that other individuals have knowledge, perceptions, and intentions that differ from our own is a critical step in a child’s development and is believed to be instrumental in self-recognition, in providing a perceptual grounding during language learning, and possibly in the development of imaginative and creative play [9]. -

Are You There, God? It's I, Robot: Examining The

ARE YOU THERE, GOD? IT’S I, ROBOT: EXAMINING THE HUMANITY OF ANDROIDS AND CYBORGS THROUGH YOUNG ADULT FICTION BY EMILY ANSUSINHA A Thesis Submitted to the Graduate Faculty of WAKE FOREST UNIVERSITY GRADUATE SCHOOL OF ARTS AND SCIENCES in Partial Fulfillment of the Requirements for the Degree of MASTER OF ARTS Bioethics May 2014 Winston-Salem, North Carolina Approved By: Nancy King, J.D., Advisor Michael Hyde, Ph.D., Chair Kevin Jung, Ph.D. ACKNOWLEDGMENTS I would like to give a very large thank you to my adviser, Nancy King, for her patience and encouragement during the writing process. Thanks also go to Michael Hyde and Kevin Jung for serving on my committee and to all the faculty and staff at the Wake Forest Center for Bioethics, Health, and Society. Being a part of the Bioethics program at Wake Forest has been a truly rewarding experience. A special thank you to Katherine Pinard and McIntyre’s Books; this thesis would not have been possible without her book recommendations and donations. I would also like to thank my family for their continued support in all my academic pursuits. Last but not least, thank you to Professor Mohammad Khalil for changing the course of my academic career by introducing me to the Bioethics field. TABLE OF CONTENTS: List of Tables and Figures; List of Abbreviations; Abstract -

Time Parameterization of Humanoid Robot Paths

SUBMITTED TO IEEE TRANS. ROBOT., VOL. XX, NO. XX, 2010 Time Parameterization of Humanoid Robot Paths Wael Suleiman, Fumio Kanehiro, Member, IEEE, Eiichi Yoshida, Member, IEEE, Jean-Paul Laumond, Fellow Member, IEEE, and André Monin Abstract—This paper proposes a unified optimization framework to solve the time parameterization problem of humanoid robot paths. Even though the time parameterization problem is well known in robotics, the application to humanoid robots has not been addressed. This is because of the complexity of the kinematical structure as well as the dynamical motion equation. The main contribution in this paper is to show that the time parameterization of a statically stable path to be transformed into a dynamical stable trajectory within the humanoid robot capacities can be expressed as an optimization problem. Furthermore we propose an efficient method to solve the obtained optimization problem. The proposed method has been successfully validated on the humanoid robot HRP-2 by conducting several experiments. These results have revealed the effectiveness and the robustness of the proposed method. Index Terms—Timing; Robot dynamics; Stability criteria; Optimization methods; Humanoid Robot. I. INTRODUCTION The automatic generation of motions for robots which are collision-free motions and at the same time inside the robots capacities is one of the most challenging problems. Many [Fig. 1. Dynamically stable motion: (a) Initial configuration, (b) Final configuration] these approaches are based on time-optimal control theory [3], [4], [5], [6], [7]. In the framework of mobile robots, the time parameterization problem arises also to transform a feasible path into a feasible trajectory [8], [9].