Towards a Demonstrator for Autonomous Object Detection on Board Gaia

Shan Mignot


Recommended publications

Sapphire-Like Payload for Space Situational Awareness Final

Sapphire-like Payload for Space Situational Awareness

John Hackett, Lisa Li

COM DEV Ltd., Cambridge, Ontario, Canada

ABSTRACT

The Sapphire satellite payload has been developed by COM DEV for a Surveillance of Space mission of the Canadian Department of National Defence, which is scheduled to be launched later in 2012. This paper presents a brief overview of the payload, along with the potential for using this optical instrument as a low cost, proven Space Situational Awareness hosted payload on geostationary satellites. The Sapphire payload orbits on a dedicated satellite and hence the payload was not required to actively point. The proposed hosted payload version of Sapphire would be enhanced by incorporating a two dimensional scan capability to increase the spatial coverage. Simulations of the hosted payload version of Sapphire performance are presented, including spatial coverage, approximate sensitivity and positional accuracy for detected resident space objects. The moderate size, power and cost of the Sapphire payload make it an excellent candidate for a hosted payload space situational awareness application.

1. INTRODUCTION

The Sapphire payload is described elsewhere [1] and was developed by COM DEV on a moderate budget for MDA Systems Ltd. and the Canadian Department of National Defence and is scheduled to fly in 2012. The Sapphire payload combines the SBV heritage [2] through the telescope design/contractor, with high quantum efficiency CCDs and advanced high reliability electronics. The Sapphire mission [3] was developed by the Canadian Department of National Defence (DND) as part of its Surveillance of Space project. MDA Systems Ltd. was the mission prime contractor, and COM DEV was the payload prime contractor.

Advanced Virgo: Status of the Detector, Latest Results and Future Prospects

Universe, Review

Advanced Virgo: Status of the Detector, Latest Results and Future Prospects

Diego Bersanetti 1,*, Barbara Patricelli 2,3, Ornella Juliana Piccinni 4, Francesco Piergiovanni 5,6, Francesco Salemi 7,8 and Valeria Sequino 9,10

1 INFN, Sezione di Genova, I-16146 Genova, Italy
2 European Gravitational Observatory (EGO), Cascina, I-56021 Pisa, Italy
3 INFN, Sezione di Pisa, I-56127 Pisa, Italy
4 INFN, Sezione di Roma, I-00185 Roma, Italy
5 Dipartimento di Scienze Pure e Applicate, Università di Urbino, I-61029 Urbino, Italy
6 INFN, Sezione di Firenze, I-50019 Sesto Fiorentino, Italy
7 Dipartimento di Fisica, Università di Trento, Povo, I-38123 Trento, Italy
8 INFN, TIFPA, Povo, I-38123 Trento, Italy
9 Dipartimento di Fisica “E. Pancini”, Università di Napoli “Federico II”, Complesso Universitario di Monte S. Angelo, I-80126 Napoli, Italy
10 INFN, Sezione di Napoli, Complesso Universitario di Monte S. Angelo, I-80126 Napoli, Italy
* Corresponding author

Abstract: The Virgo detector, based at the EGO (European Gravitational Observatory) and located in Cascina (Pisa), played a significant role in the development of the gravitational-wave astronomy. From its first scientific run in 2007, the Virgo detector has constantly been upgraded over the years; since 2017, with the Advanced Virgo project, the detector reached a high sensitivity that allowed the detection of several classes of sources and to investigate new physics. This work reports the main hardware upgrades of the detector and the main astrophysical results from the latest five years; future prospects for the Virgo detector are also presented.

Neossat: a Collaborative Microsatellite Project for Space

SSC08-III-5

NEOSSat: A Collaborative Microsatellite Project for Space Based Object Detection

William Harvey, Canadian Space Agency, 6767, Route de l'Aéroport, St-Hubert, QC, Canada, J3Y 8Y9; (450) 926-4477

Tony Morris, Defence Research and Development Canada, 3701 Carling Avenue, Ottawa, Ontario, K1A 0Z4; 613 990-4326

ABSTRACT

Recognizing the importance of space-based Near Earth Object (NEO) detection and Surveillance of Space (SoS), the Canadian Space Agency and Defence Research and Development Canada are proceeding with the development and construction of NEOSSat. NEOSSat's two missions are to make observations to discover asteroids and comets near Earth's orbit (Near Earth Space Surveillance or NESS) and to demonstrate surveillance of satellites and space debris (High Earth Orbit Space Surveillance or HEOSS). This micro-satellite project will deploy a 15 cm telescope into a 630 km low earth orbit in 2010. This will be the first space-based system of its kind. NEOSSat represents a win-win opportunity for CSA and DRDC who recognized there were significant common interests with this microsatellite project. The project will yield data that can be used by the NEOSSat team and leveraged by external stakeholders to address important issues with NEOs, satellite metric data and debris cataloguing. In the contexts of risks and rewards as well as confidence building, NEOSSat is providing CSA and DRDC with valuable insights about collaboration on a microsatellite program and this paper presents our views and lessons learned.

INTRODUCTION

The Near Earth Object Surveillance Satellite (NEOSSat) is the first jointly sponsored microsatellite project between the Canadian Space Agency (CSA) and Defence Research and Development Canada (DRDC).

The NEOSSat microsatellite project satisfies several objectives: 1) discover and determine the orbits of Near-Earth Objects (NEO's) that cannot be efficiently detected from the ground, 2) demonstrate the ability of

Chronology of NASA Expendable Vehicle Missions Since 1990

Chronology of NASA Expendable Vehicle Missions Since 1990

Columns in the original table: Launch Date, Payload, Launch Vehicle, Site.

June 1, 1990, 5:48 p.m. EDT. Payload: ROSAT (Roentgen Satellite). Launch vehicle: Delta II (Delta 195). Site: ETR, LC 17A. An X-ray observatory developed through a cooperative program between Germany, the U.S., and the United Kingdom. Originally proposed by the Max-Planck-Institut für extraterrestrische Physik (MPE) and designed, built and operated in Germany. Launched into Earth orbit on a U.S. Air Force vehicle. Mission ended after almost nine years, on Feb. 12, 1999.

July 25, 1990, 3:21 p.m. EDT. Payload: CRRES (Combined Radiation and Release Effects Satellite). Launch vehicle: Atlas I (AC-69). Site: ETR, LC 36B. NASA payload. Launched into a geosynchronous transfer orbit for a nominal three-year mission to investigate fields, plasmas, and energetic particles inside the Earth's magnetosphere. Due to onboard battery failure, contact with the spacecraft was lost on Oct. 12, 1991.

May 14, 1991, 11:52 a.m. EDT. Payload: NOAA-D (TIROS) (National Oceanic and Atmospheric Administration-D). Launch vehicle: Atlas-E (Atlas 50-E). Site: WTR, SLC 4. A Television Infrared Observing System (TIROS) satellite. NASA-developed payload; USAF vehicle. Launched into sun-synchronous polar orbit to allow the satellite to view the Earth's entire surface and cloud cover every 12 hours. Redesignated NOAA-12 once in orbit.

June 29, 1991, 10:00 a.m. EDT. Payload: REX (Radiation Experiment). Launch vehicle: Scout 216. Site: WTR, SLC 5. USAF payload; NASA vehicle. Launched into 450 nm polar orbit. Designed to study scintillation effects of the Earth's atmosphere on RF transmissions. 114th launch of Scout vehicle.

Space Security Index 2013

SPACE SECURITY INDEX 2013

www.spacesecurity.org

10th Edition

Library and Archives Canada Cataloguing in Publications Data
Space Security Index 2013
ISBN: 978-1-927802-05-2

© 2013 SPACESECURITY.ORG

Edited by Cesar Jaramillo

Design and layout by Creative Services, University of Waterloo, Waterloo, Ontario, Canada

Cover image: Soyuz TMA-07M Spacecraft ISS034-E-010181 (21 Dec. 2012). As the International Space Station and Soyuz TMA-07M spacecraft were making their relative approaches on Dec. 21, one of the Expedition 34 crew members on the orbital outpost captured this photo of the Soyuz. Credit: NASA.

Printed in Canada. Printer: Pandora Print Shop, Kitchener, Ontario

First published October 2013

Please direct enquiries to: Cesar Jaramillo, Project Ploughshares, 57 Erb Street West, Waterloo, Ontario N2L 6C2, Canada. Telephone: 519-888-6541, ext. 7708. Fax: 519-888-0018.

Governance Group
Julie Crôteau, Foreign Affairs and International Trade Canada
Peter Hays, Eisenhower Center for Space and Defense Studies
Ram Jakhu, Institute of Air and Space Law, McGill University
Ajey Lele, Institute for Defence Studies and Analyses
Paul Meyer, The Simons Foundation
John Siebert, Project Ploughshares
Ray Williamson, Secure World Foundation

Advisory Board
Richard DalBello, Intelsat General Corporation
Theresa Hitchens, United Nations Institute for Disarmament Research
John Logsdon, The George Washington University
Lucy Stojak, HEC Montréal

Project Manager
Cesar Jaramillo, Project Ploughshares

Table of Contents
Acronyms and Abbreviations
Introduction
Acknowledgements
Executive Summary
Theme 1: Condition of the space environment: This theme examines the security and sustainability of the space environment, with an emphasis on space debris; the potential threats posed by near-Earth objects; the allocation of scarce space resources; and the ability to detect, track, identify, and catalog objects in outer space.

Spacecraft Thermal Control Systems, Missions and Needs

SPACECRAFT THERMAL CONTROL SYSTEMS, MISSIONS AND NEEDS

Contents:
SPACECRAFT THERMAL CONTROL
What is STCS, TC and STC
What makes STC different to thermal control on ground
Systems engineering
Systems design
Control and management
Quality assurance
Spacecraft missions and thermal problems
Missions phases
Missions types according to human life
Missions types according to payload

Solar Particle Events and Evaluation of Their Effects During Spacecraft Design Seps in Environment Specifications

SOLAR PARTICLE EVENTS AND EVALUATION OF THEIR EFFECTS DURING SPACECRAFT DESIGN

Piers Jiggens, Eamonn Daly, Hugh Evans, Alain Hilgers

Spacecraft Environment and Effects Section, ESA/ESTEC, Noordwijk, The Netherlands (http://space-env.esa.int)

THE SEP ENVIRONMENT

Solar Energetic Particles (SEPs) arrive in highly sporadic concentrations known as Solar Particle Events (SPEs). SPEs are characterised by enhancements of several orders of magnitude above background levels for protons of energies from less than 1 MeV to over 1 GeV in extreme cases. These extreme particle storms are known as Ground Level Enhancements (GLEs) as they can be detected by ground-based neutron monitors with secondary neutrons emissions resulting from high energy particles (with sufficient magnetic rigidity to penetrate through the geomagnetic field) interacting with the Earth’s upper atmosphere. SPEs are not restricted only to protons but also electrons and significant level of alpha particles and heavier ions.

Since the beginning of the space age over 40 years of space particle radiation have been recorded at altitudes sufficient for fluxes to be un-attenuated by the Earth’s magnetic field down to energies of 5

MODELLING SEP FLUXES

In order to model the SEP environment for mission specifications a statistical or data-driven approach is applied rather than physics-based models of particle acceleration. This is because the short term variability (space weather) is not important for spacecraft design wherein the goal is to design a spacecraft whose components do not fail as a result of the effects of particle radiation without over-engineering. Steps to derive and apply an SEP environment model for mission specification is shown (top right figure).

Well known models of the environment include the JPL model [1] and the ESP/PSYCHIC models [2,3].

Mars Express

SP-1240

MARS EXPRESS: The Scientific Payload

August 2004

Contact: ESA Publications Division, c/o ESTEC, PO Box 299, 2200 AG Noordwijk, The Netherlands. Tel. (31) 71 565 3400 - Fax (31) 71 565 5433

SP-1240 ‘Mars Express: A European Mission to the Red Planet’
ISBN 92-9092-556-6
ISSN 0379-6566
Edited by Andrew Wilson, ESA Publications Division
Scientific Coordination: Agustin Chicarro, ESA Research and Scientific Support Department, ESTEC
Published by ESA Publications Division, ESTEC, Noordwijk, The Netherlands
Price €50
Copyright © 2004 European Space Agency

Contents

Foreword

Overview
The Mars Express Mission: An Overview. A. Chicarro, P. Martin & R. Trautner

Scientific Instruments
HRSC: the High Resolution Stereo Camera of Mars Express. G. Neukum, R. Jaumann and the HRSC Co-Investigator and Experiment Team
OMEGA: Observatoire pour la Minéralogie, l’Eau, les Glaces et l’Activité. J-P. Bibring, A. Soufflot, M. Berthé et al.
MARSIS: Mars Advanced Radar for Subsurface and Ionosphere Sounding. G. Picardi, D. Biccari, R. Seu et al.
PFS: the Planetary Fourier Spectrometer for Mars Express. V. Formisano, D. Grassi, R. Orfei et al.
SPICAM: Studying the Global Structure and Composition of the Martian Atmosphere. J.-L. Bertaux, D. Fonteyn, O. Korablev et al.

WORLD SPACECRAFT DIGEST by Jos Heyman 2013 Version: 1 January 2014 © Copyright Jos Heyman

WORLD SPACECRAFT DIGEST by Jos Heyman

2013 Version: 1 January 2014

© Copyright Jos Heyman

The spacecraft are listed, in the first instance, in the order of their International Designation, resulting in, with some exceptions, a date order. Spacecraft which did not receive an International Designation, being those spacecraft which failed to achieve orbit or those which were placed in a sub orbital trajectory, have been inserted in the date order. For each spacecraft the following information is provided:

a. International Designation and NORAD number
For each spacecraft the International Designation, as allocated by the International Committee on Space Research (COSPAR), has been used as the primary means to identify the spacecraft. This is followed by the NORAD catalogue number which has been assigned to each object in space, including debris etc., in a numerical sequence, rather than a chronological sequence. Normally no reference has been made to spent launch vehicles, capsules ejected by the spacecraft or fragments except where such have a unique identification which warrants consideration as a separate spacecraft or in other circumstances which warrant their mention.

b. Name
The most common name of the spacecraft has been quoted. In some cases, such as for US military spacecraft, the name may have been deduced from published information and may not necessarily be the official name. Alternative names have, however, been mentioned in the description and have also been included in the index.

c. Country/International Agency
For each spacecraft the name of the country or international agency which owned or had prime responsibility for the spacecraft, or in which the owner resided, has been included.

Financial Operational Losses in Space Launch

UNIVERSITY OF OKLAHOMA GRADUATE COLLEGE

FINANCIAL OPERATIONAL LOSSES IN SPACE LAUNCH

A DISSERTATION SUBMITTED TO THE GRADUATE FACULTY in partial fulfillment of the requirements for the Degree of DOCTOR OF PHILOSOPHY

By TOM ROBERT BOONE, IV

Norman, Oklahoma 2017

FINANCIAL OPERATIONAL LOSSES IN SPACE LAUNCH

A DISSERTATION APPROVED FOR THE SCHOOL OF AEROSPACE AND MECHANICAL ENGINEERING BY
Dr. David Miller, Chair
Dr. Alfred Striz
Dr. Peter Attar
Dr. Zahed Siddique
Dr. Mukremin Kilic

© Copyright by TOM ROBERT BOONE, IV 2017. All rights reserved.

"For which of you, intending to build a tower, sitteth not down first, and counteth the cost, whether he have sufficient to finish it?" Luke 14:28, KJV

Contents
1 Introduction
1.1 Overview of Operational Losses
1.2 Structure of Dissertation
2 Literature Review
3 Payload Trends
4 Launch Vehicle Trends
5 Capability of Launch Vehicles
6 Wastage of Launch Vehicle Capacity
7 Optimal Usage of Launch Vehicles
8 Optimal Arrangement of Payloads
9 Risk of Multiple Payload Launches
10 Conclusions
10.1 Review of Dissertation
10.2 Future Work
Bibliography
A Payload Database
B Launch Vehicle Database

List of Figures
3.1 Payloads By Orbit, 2000-2013
3.2 Payload Mass By Orbit, 2000-2013
3.3 Number of Payloads of Mass, 2000-2013
3.4 Total Mass of Payloads in kg by Individual Mass, 2000-2013
3.5 Number of LEO Payloads of Mass, 2000-2013
3.6 Number of GEO Payloads of Mass, 2000-2013

Commercial Space Transportation: 2011 Year in Review

COMMERCIAL SPACE TRANSPORTATION: 2011 YEAR IN REVIEW

January 2012

About the Office of Commercial Space Transportation

The Federal Aviation Administration’s Office of Commercial Space Transportation (FAA/AST) licenses and regulates U.S. commercial space launch and reentry activity, as well as the operation of non-federal launch and reentry sites, as authorized by Executive Order 12465 and Title 51 United States Code, Subtitle V, Chapter 509 (formerly the Commercial Space Launch Act). FAA/AST’s mission is to ensure public health and safety and the safety of property while protecting the national security and foreign policy interests of the United States during commercial launch and reentry operations. In addition, FAA/AST is directed to encourage, facilitate, and promote commercial space launches and reentries. Additional information concerning commercial space transportation can be found on FAA/AST’s web site at http://www.faa.gov/about/office_org/headquarters_offices/ast/.

Cover: Art by John Sloan (2012)

NOTICE

Use of trade names or names of manufacturers in this document does not constitute an official endorsement of such products or manufacturers, either expressed or implied, by the Federal Aviation Administration.

CONTENTS
Introduction
Executive Summary
2011 Launch Activity
WORLDWIDE ORBITAL LAUNCH ACTIVITY
Worldwide Launch Revenues
Worldwide Orbital Payload Summary
Commercial Launch Payload Summaries
Non-Commercial Launch Payload Summaries
U.S. AND FAA-LICENSED ORBITAL LAUNCH ACTIVITY
FAA-Licensed Orbital Launch Summary
U.S. and FAA-Licensed Orbital Launch Activity in Detail

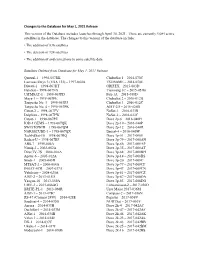

Changes to the Database for May 1, 2021 Release This Version of the Database Includes Launches Through April 30, 2021

Changes to the Database for May 1, 2021 Release

This version of the Database includes launches through April 30, 2021. There are currently 4,084 active satellites in the database. The changes to this version of the database include:

• The addition of 836 satellites
• The deletion of 124 satellites
• The addition of and corrections to some satellite data

Satellites Deleted from Database for May 1, 2021 Release

• Quetzal-1 – 1998-057RK
• Lacrosse/Onyx 3 (USA 133) – 1997-064A
• Diwata-1 – 1998-067HT
• HaloSat – 1998-067NX
• UiTMSAT-1 – 1998-067PD
• Maya-1 – 1998-067PE
• Tanyusha No. 3 – 1998-067PJ
• Tanyusha No. 4 – 1998-067PK
• Catsat-2 – 1998-067PV
• Delphini – 1998-067PW
• Catsat-1 – 1998-067PZ
• IOD-1 GEMS – 1998-067QK
• SWIATOWID – 1998-067QM
• NARSSCUBE-1 – 1998-067QX
• TechEdSat-10 – 1998-067RQ
• Radsat-U – 1998-067RF
• ABS-7 – 1999-046A
• Nimiq-2 – 2002-062A
• DirecTV-7S – 2004-016A
• Apstar-6 – 2005-012A
• Sinah-1 – 2005-043D
• MTSAT-2 – 2006-004A
• INSAT-4CR – 2007-037A
• Yubileiny – 2008-025A
• AIST-2 – 2013-015D
• Yaogan-18
• ChubuSat 1 – 2014-070C
• TSUBAME – 2014-070E
• GRIFEX – 2015-003D
• Tianwang 1C – 2015-051B
• Fox-1A – 2015-058D
• ChubuSat 2 – 2016-012B
• ChubuSat 3 – 2016-012C
• AIST-2D – 2016-026B
• ÑuSat-1 – 2016-033B
• ÑuSat-2 – 2016-033C
• Dove 2p-6 – 2016-040H
• Dove 2p-10 – 2016-040P
• Dove 2p-12 – 2016-040R
• Beesat-4 – 2016-040W
• Dove 3p-51 – 2017-008E
• Dove 3p-79 – 2017-008AN
• Dove 3p-86 – 2017-008AP
• Dove 3p-35 – 2017-008AT
• Dove 3p-68 – 2017-008BH
• Dove 3p-14 – 2017-008BS
• Dove 3p-20 – 2017-008C
• Dove 3p-77 – 2017-008CF
• Dove 3p-47 – 2017-008CN
• Dove 3p-81 – 2017-008CZ
• Dove 3p-87 – 2017-008DA