RAPIDE 0.0 RHIC Accelerator Physics Intrepid Development Environment

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Ebook - Informations About Operating Systems Version: August 15, 2006 | Download

eBook - Informations about Operating Systems Version: August 15, 2006 | Download: www.operating-system.org AIX, AmigaOS, AtheOS, BeIA, BeOS, BSDi, CP/M, Darwin, EPOC, FreeBSD, HP-UX, Hurd, Inferno, IRIX, JavaOS, LFS, Linspire, Linux, MacOS, Minix, MorphOS, MS-DOS, MVS, NetBSD, NetWare, Newdeal, NEXTSTEP, OpenBSD, OS/2, Further operating systems, PalmOS, Plan9, QNX, RiscOS, Solaris, SuSE Linux, Unicos, Unix, Unixware, Windows 2000, Windows 3.11, Windows 95, Windows 98, Windows CE, Windows Family, Windows ME, Windows NT 3.1, Windows NT 4.0, Windows Server 2003, Windows Vista, Windows XP, Apple - Company, AT&T - Company, Be Inc. - Company, BSD Family, Cray Inc. -

An Introduction to the X Window System Introduction to X's Anatomy

An Introduction to the X Window System. Robert Lupton. This is a limited and partisan introduction to ‘The X Window System’, which is widely but improperly known as X-windows, specifically to version 11 (‘X11’). The intention of the X-project has been to provide ‘tools not rules’, which allows their basic system to appear in a very large number of confusing guises. This document assumes that you are using the configuration that I set up at Peyton Hall. There are helpful manual entries under X and Xserver, as well as for individual utilities such as xterm. You may need to add /usr/princeton/X11/man to your MANPATH to read the X manpages. This is the first draft of this document, so I’d be very grateful for any comments or criticisms. Introduction to X’s Anatomy: X consists of three parts. The server: the part that knows about the hardware and how to draw lines and write characters. The clients: such things as terminal emulators, dvi previewers, and clocks. The window manager: a programme which handles negotiations between the different clients as they fight for screen space, colours, and sunlight. Another fundamental X-concept is that of resources, which is how X describes anything that a client might want to specify; common examples would be fonts, colours (both foreground and background), and position on the screen. Keys: X can, and usually does, use a number of special keys. You are familiar with the way that <shift>a and <ctrl>a are different from a; in X this sensitivity extends to things like mouse buttons that you might not normally think of as case-sensitive. -

ModelSim SE Tutorial

ModelSim® SE Tutorial Version 5.6d Published: 6/Aug/02 The world’s most popular HDL simulator. ModelSim/VHDL, ModelSim/VLOG, ModelSim/LNL, and ModelSim/PLUS are produced by Model Technology™ Incorporated. Unauthorized copying, duplication, or other reproduction is prohibited without the written consent of Model Technology. The information in this manual is subject to change without notice and does not represent a commitment on the part of Model Technology. The program described in this manual is furnished under a license agreement and may not be used or copied except in accordance with the terms of the agreement. The online documentation provided with this product may be printed by the end-user. The number of copies that may be printed is limited to the number of licenses purchased. ModelSim is a registered trademark and ChaseX and TraceX are trademarks of Model Technology Incorporated. Model Technology is a trademark of Mentor Graphics Corporation. PostScript is a registered trademark of Adobe Systems Incorporated. UNIX is a registered trademark of AT&T in the USA and other countries. FLEXlm is a trademark of Globetrotter Software, Inc. IBM, AT, and PC are registered trademarks, AIX and RISC System/6000 are trademarks of International Business Machines Corporation. Windows, Microsoft, and MS-DOS are registered trademarks of Microsoft Corporation. OSF/Motif is a trademark of the Open Software Foundation, Inc. in the USA and other countries. SPARC is a registered trademark and SPARCstation is a trademark of SPARC International, Inc. Sun Microsystems is a registered trademark, and Sun, SunOS and OpenWindows are trademarks of Sun Microsystems, Inc. -

TMS320C3x Workstation Emulator Installation Guide

TMS320C3x Workstation Emulator Installation Guide. 1994 Microprocessor Development Systems. Printed in U.S.A., December 1994. 2617676-9741 revision A. SPRU130, December 1994. Printed on Recycled Paper. IMPORTANT NOTICE Texas Instruments (TI) reserves the right to make changes to its products or to discontinue any semiconductor product or service without notice, and advises its customers to obtain the latest version of relevant information to verify, before placing orders, that the information being relied on is current. TI warrants performance of its semiconductor products and related software to the specifications applicable at the time of sale in accordance with TI’s standard warranty. Testing and other quality control techniques are utilized to the extent TI deems necessary to support this warranty. Specific testing of all parameters of each device is not necessarily performed, except those mandated by government requirements. Certain applications using semiconductor products may involve potential risks of death, personal injury, or severe property or environmental damage (“Critical Applications”). TI SEMICONDUCTOR PRODUCTS ARE NOT DESIGNED, INTENDED, AUTHORIZED, OR WARRANTED TO BE SUITABLE FOR USE IN LIFE-SUPPORT APPLICATIONS, DEVICES OR SYSTEMS OR OTHER CRITICAL APPLICATIONS. Inclusion of TI products in such applications is understood to be fully at the risk of the customer. Use of TI products in such applications requires the written approval of an appropriate TI officer. Questions concerning potential risk applications should be directed to TI through a local SC sales office. In order to minimize risks associated with the customer’s applications, adequate design and operating safeguards should be provided by the customer to minimize inherent or procedural hazards. -

Installation Guide Meeting Maker

Enterprise Scheduling & Calendaring Meeting Maker Installation Guide 010-MAN-0560 Copyright © 1999 by ON Technology Corporation. All rights reserved worldwide. Second Printing: June 1999 Information in this document is subject to change without notice and does not represent a commitment on the part of ON Technology. The software described in this document is furnished under a license agreement and may be used only in accordance with that agreement. This document has been provided pursuant to an agreement containing restrictions on its use. This document is also protected by federal copyright law. No part of this document may be reproduced or distributed, transcribed, stored in a retrieval system, translated into any spoken or computer language or transmitted in any form or by any means whatsoever without the prior written consent of: ON Technology Corporation One Cambridge Center Cambridge, MA 02142 USA Telephone: (617) 374 1400 Fax: (617) 374 1433 ON Technology makes no warranty, representation or promise not expressly set forth in this agreement. ON Technology disclaims and excludes any and all implied warranties of merchantability, title, or fitness for a particular purpose. ON Technology does not warrant that the software or documentation will satisfy your requirements or that the software and documentation are without defect or error or that the operation of the software will be uninterrupted. LIMITATION OF LIABILITY: ON Technology's aggregate liability, as well as that of the authors of programs sold by ON Technology, arising from or relating to this agreement or the software or documentation is limited to the total of all payments made by or for you for the license. -

Installing FrameMaker for UNIX®

Adobe® FrameMaker® 7.0 Installing FrameMaker for UNIX® © 2002 Adobe Systems Incorporated and its licensors. All rights reserved. Installing Adobe FrameMaker for UNIX This manual, as well as the software described in it, is furnished under license and may be used or copied only in accordance with the terms of such license. The content of this manual is furnished for informational use only, is subject to change without notice, and should not be construed as a commitment by Adobe Systems Incorporated. Adobe Systems Incorporated assumes no responsibility or liability for any errors or inaccuracies that may appear in this book. Except as permitted by such license, no part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, recording, or otherwise, without the prior written permission of Adobe Systems Incorporated. Please remember that existing artwork or images that you may want to include in your project may be protected under copyright law. The unauthorized incorporation of such material into your new work could be a violation of the rights of the copyright owner. Please be sure to obtain any permission required from the copyright owner. Any references to company names in sample templates are for demonstration purposes only and are not intended to refer to any actual organization. Adobe, the Adobe logo, Acrobat, Acrobat Reader, Adobe Type Manager, ATM, Display PostScript, Distiller, Exchange, FrameMaker, InstantView, PostScript, and SuperATM are trademarks of Adobe Systems Incorporated. The following are copyrights of their respective companies or organizations: Portions reproduced with the permission of Apple Computer, Inc. -

Administración de Sistemas Unix (Unix Systems Administration)

Unix Systems Administration (original title: Administración de Sistemas Unix). Antonio Villalón Huerta <[email protected]>, Sergio Bayarri Gausi <[email protected]>. May 2005. Contents:
1 Introduction: the superuser. 1.1 Basic concepts for administrators. 1.2 The superuser's environment. 1.3 The administrator's role. 1.4 Communicating with users.
2 Machine startup and shutdown. 2.1 System startup. 2.2 System shutdown. 2.3 The command interpreter.
3 Processes. 3.1 Basic concepts. 3.2 User activities. 3.3 Process control.
4 File systems. 4.1 Files. 4.2 File systems. 4.3 Creating and attaching file systems. 4.4 Disk space management. 4.5 Some file systems.
5 Tasks. System maintenance. 5.1 User management. 5.2 Task automation. 5.3 Updating and installing software packages. 5.4 Backups. 5.4.1 Tips to consider when making backups. 5.4.2 Planning a backup schedule. 5.4.3 Making backups and restoring files. 5.4.4 Practical example: system backup. 5.4.5 Summary. 5.5 Network installation. 5.5.1 Networking concepts. 5.5.2 Network configuration. 5.5.3 Configuring Internet services. -

OpenWindows Version 3 Installation and Start-Up Guide

OpenWindows Version 3 Installation and Start-Up Guide © 1991 by Sun Microsystems, Inc. Printed in USA. 2550 Garcia Avenue, Mountain View, California 94043-1100 All rights reserved. No part of this work covered by copyright may be reproduced in any form or by any means - graphic, electronic or mechanical, including photocopying, recording, taping, or storage in an information retrieval system - without prior written permission of the copyright owner. The OPEN LOOK and the Sun Graphical User Interfaces were developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. Sun holds a non-exclusive license from Xerox to the Xerox Graphical User Interface, which license also covers Sun's licensees. RESTRICTED RIGHTS LEGEND: Use, duplication, or disclosure by the government is subject to restrictions as set forth in subparagraph (c)(1)(ii) of the Rights in Technical Data and Computer Software clause at DFARS 252.227-7013 (October 1988) and FAR 52.227-19 (June 1987). The product described in this manual may be protected by one or more U.S. patents, foreign patents, and/or pending applications. TRADEMARKS Sun Logo, Sun Microsystems, NeWS, and NFS are registered trademarks, and SunSoft, SunSoft logo, SunOS, SunView, Sun-2, Sun-3, Sun-4, XGL, SunPHIGS, SunGKS, and OpenWindows are trademarks of Sun Microsystems, Inc. licensed to SunSoft, Inc. UNIX and OPEN LOOK are registered trademarks of UNIX System Laboratories, Inc. PostScript is a registered trademark of Adobe Systems Incorporated. -

Solaris Common Desktop Environment: User’s Transition Guide

Solaris Common Desktop Environment: User’s Transition Guide Sun Microsystems, Inc. 2550 Garcia Avenue Mountain View, CA 94043-1100 U.S.A. Part No: 802-6478-10 August, 1997 Copyright 1997 Sun Microsystems, Inc. 901 San Antonio Road, Palo Alto, California 94303-4900 U.S.A. All rights reserved. This product or document is protected by copyright and distributed under licenses restricting its use, copying, distribution, and decompilation. No part of this product or document may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Third-party software, including font technology, is copyrighted and licensed from Sun suppliers. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd. Sun, Sun Microsystems, the Sun logo, SunSoft, SunDocs, SunExpress, OpenWindows and Solaris are trademarks, registered trademarks, or service marks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc. The code and documentation for the DtComboBox and DtSpinBox widgets were contributed by Interleaf, Inc. Copyright 1993, Interleaf, Inc. The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. -

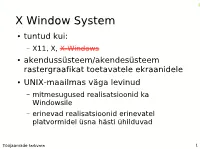

X Window System

X Window System. Also known as X11, X, and X-Windows. A windowing system for displays that support raster graphics. Very widespread in the UNIX world; various implementations also exist for Windows, and the different implementations on different platforms are fairly compatible with one another. Workstation software.

X Window System. A client-server protocol; ideally, hardware specifics live only in the server. Client-server communication over a network is possible. Several X servers can be run on one machine (and, of course, several X clients).

X Window System: history. Graphical systems before X was born: 1973, Xerox Alto; 1981, Xerox Star; 1982, Andrew Project (Carnegie Mellon University); 1983, Apple Lisa; 1984, Apple Macintosh; 1984, Blit. Before 1984: the predecessor “W” (Stanford University). June 1984: the first X (MIT, DEC, IBM), followed by rapid development. January 1985: X version 5. September 1985: X version 9, used on some DEC and IBM systems. January 1986: X10R3, the first widely distributed X. In 1986 it became clear that a properly redesigned version of X was needed. In May 1986 DEC WSL began an open project to create X11 (the protocol), one of the first very large-scale free software projects. 1987: X11R1. 1988: “The X Consortium” was founded, a non-profit association of vendors created to lead X development; it released X11R2 (1988) through X11R6.3 (1996). 1997: stewardship passed to “The Open Group”; there were licensing problems; X11R6.4 and X11R6.5 were released. 1999: The Open Group creates X.org. 2004: X.org Foundation; a major change, in that developers became the driving force and anyone could become a member. X11R6.7 was based on XFree86 4.4RC. From this point the releases were products usable by end users (earlier X11 releases were only a base for vendors).

X Window System implementations: DECwindows (DEC), OpenWindows (Sun), XSun, XFree86, X.Org, .. -

X Window System

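GNU/Linux: X Window System. Seo, Doo-Ok, Clickseo.com [email protected]. Contents: X Window System; free and open-source software packages.

Operating systems (1/5). Composition of computer software: system software and application software. Diagram labels: Software, System Software, Application Software, operating system, general-purpose software, system operation programs, special-purpose software, system support programs, system development programs.

Operating systems (2/5). Operating system (OS): resource management, "optimizing system performance". Process management; memory management, including virtual memory; device management: device drivers; file management: disk access and file systems; networking and security.

Operating systems (3/5). Operating system: interface, "optimizing user convenience". The user interface provides the interface between the computer hardware and the user (a program or a person): CLI (Command Line Interface) and GUI (Graphical User Interface). [CLI: Bash (Bourne-Again Shell), a UNIX shell] [GUI: X11 and KDE]

Operating systems (4/5). X Window desktop environment: GNOME. [Source: GNOME, gnome.org]

Operating systems (5/5). X Window desktop environment: KDE. [Source: “KDE Plasma 5”, KDE, Wikipedia.]

X Window System: display server, client libraries, X window manager, X Window desktop environments; free and open-source software packages.

X Window System (1/2). X Window System (X11, X): a window system used mainly on Unix-like operating systems. It is a graphical user interface based on a network protocol (the X protocol) and provides the basic framework for implementing a GUI environment. It began in 1984 as part of the Athena Project, carried out jointly by DEC, IBM, and MIT to develop a graphics system that operates independently of the platform. 1986: X10.4 released. 1987: X11 announced. The X Consortium revised X11, releasing X11R2 and X11R6, and was dissolved after the X11R6.3 release in December 1996. On a typical POSIX system: /etc/X11. Today most Unix systems, including GNU/Linux, use the X Window System.

X Window System (2/2). Client-server model: in the X Window System the server runs on the user's computer, while clients can run on remote systems.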

Bonus Chapter B Programming for X

Bonus Chapter B: Programming for X. In this chapter and the next, we’ll take a look at writing programs to run in the usual Linux graphical environment, the X Window System or X, http://www.x.org/Xorg.html. Modern UNIX systems and nearly all Linux distributions are shipped with a version of X. We’ll be concentrating on the programmer’s view of X, and we’ll assume that you are already comfortable with configuring, running, and using X on your system. We’ll cover X concepts, X window managers, the X programming model, and Tk (its widgets, bindings, and geometry managers). In the next chapter, we’ll move on to the GTK+ toolkit, which will allow us to program user interfaces in C for the GNOME system. What Is X? X was created at MIT as a way of providing a uniform environment for graphical programs. Nowadays it should be fair to assume that if you’ve used computers, you’ve come across either Microsoft Windows, X, or Apple MacOS before, so you’ll be familiar with the general concepts underlying a graphical user interface, or GUI. Unfortunately, although a Windows user might be able to navigate around the Mac interface, it’s a different story for programmers. Each windowing environment on each system is programmed differently. The ways that the display is handled and the programs communicate with the user are different. Although each system provides the programmer with the ability to open and manipulate windows on the screen, the functions used will be different. Writing applications that can run on more than one system (without using additional toolkits) is a daunting task.