ISSCC 2008 / Session 1 / Plenary / 1.2

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Calendrical Calculations: Third Edition

Notes and Errata for Calendrical Calculations: Third Edition Nachum Dershowitz and Edward M. Reingold Cambridge University Press, 2008 4:00am, July 24, 2013 Do I contradict myself ? Very well then I contradict myself. (I am large, I contain multitudes.) —Walt Whitman: Song of Myself All those complaints that they mutter about. are on account of many places I have corrected. The Creator knows that in most cases I was misled by following. others whom I will spare the embarrassment of mention. But even were I at fault, I do not claim that I reached my ultimate perfection from the outset, nor that I never erred. Just the opposite, I always retract anything the contrary of which becomes clear to me, whether in my writings or my nature. —Maimonides: Letter to his student Joseph ben Yehuda (circa 1190), Iggerot HaRambam, I. Shilat, Maaliyot, Maaleh Adumim, 1987, volume 1, page 295 [in Judeo-Arabic] Cuiusvis hominis est errare; nullius nisi insipientis in errore perseverare. [Any man can make a mistake; only a fool keeps making the same one.] —Attributed to Marcus Tullius Cicero If you find errors not given below or can suggest improvements to the book, please send us the details (email to [email protected] or hard copy to Edward M. Reingold, Department of Computer Science, Illinois Institute of Technology, 10 West 31st Street, Suite 236, Chicago, IL 60616-3729 U.S.A.). If you have occasion to refer to errors below in corresponding with the authors, please refer to the item by page and line numbers in the book, not by item number. -

Solution 6: Drag and Drop

6 Drag and Drop. The ultimate in user interactivity, drag and drop is taken for granted in desktop applications but is a litmus test of sorts for web applications: If you can easily implement drag and drop with your web application framework, then you know you’ve got something special. Until now, drag and drop for web applications has, for the most part, been limited to specialized JavaScript frameworks such as Script.aculo.us and Rico [1]. No more. With the advent of GWT, we have drag-and-drop capabilities in a Java-based web application framework. Although GWT does not explicitly support drag and drop (drag and drop is an anticipated feature in the future), it provides us with all the necessary ingredients to make our own drag-and-drop module. In this solution, we explore drag-and-drop implementation with GWT. We implement drag and drop in a module of its own so that you can easily incorporate drag and drop into your applications. Stuff You’re Going to Learn: This solution explores the following aspects of GWT: • Implementing composite widgets with the Composite class (page 174) • Removing widgets from panels (page 169) • Changing cursors for widgets with CSS styles (page 200) • Implementing a GWT module (page 182) • Adding multiple listeners to a widget (page 186) • Using the AbsolutePanel class to place widgets by pixel location (page 211) • Capturing and releasing events for a specific widget (page 191) • Using an event preview to inhibit browser reactions to events (page 196). [1] See http://www.script.aculo.us and http://openrico.org for more information about Script.aculo.us and Rico, respectively.

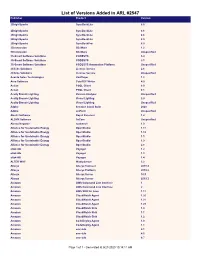

List of Versions Added in ARL #2547 Publisher Product Version

List of Versions Added in ARL #2547 Publisher Product Version 2BrightSparks SyncBackLite 8.5 2BrightSparks SyncBackLite 8.6 2BrightSparks SyncBackLite 8.8 2BrightSparks SyncBackLite 8.9 2BrightSparks SyncBackPro 5.9 3Dconnexion 3DxWare 1.2 3Dconnexion 3DxWare Unspecified 3S-Smart Software Solutions CODESYS 3.4 3S-Smart Software Solutions CODESYS 3.5 3S-Smart Software Solutions CODESYS Automation Platform Unspecified 4Clicks Solutions License Service 2.6 4Clicks Solutions License Service Unspecified Acarda Sales Technologies VoxPlayer 1.2 Acro Software CutePDF Writer 4.0 Actian PSQL Client 8.0 Actian PSQL Client 8.1 Acuity Brands Lighting Version Analyzer Unspecified Acuity Brands Lighting Visual Lighting 2.0 Acuity Brands Lighting Visual Lighting Unspecified Adobe Creative Cloud Suite 2020 Adobe JetForm Unspecified Alastri Software Rapid Reserver 1.4 ALDYN Software SvCom Unspecified Alexey Kopytov sysbench 1.0 Alliance for Sustainable Energy OpenStudio 1.11 Alliance for Sustainable Energy OpenStudio 1.12 Alliance for Sustainable Energy OpenStudio 1.5 Alliance for Sustainable Energy OpenStudio 1.9 Alliance for Sustainable Energy OpenStudio 2.8 alta4 AG Voyager 1.2 alta4 AG Voyager 1.3 alta4 AG Voyager 1.4 ALTER WAY WampServer 3.2 Alteryx Alteryx Connect 2019.4 Alteryx Alteryx Platform 2019.2 Alteryx Alteryx Server 10.5 Alteryx Alteryx Server 2019.3 Amazon AWS Command Line Interface 1 Amazon AWS Command Line Interface 2 Amazon AWS SDK for Java 1.11 Amazon CloudWatch Agent 1.20 Amazon CloudWatch Agent 1.21 Amazon CloudWatch Agent 1.23 Amazon -

Biohazard 4 Pc Movie Folder Download Biohazard 4 Download Software

biohazard 4 pc movie folder download Biohazard 4 Download Software. Toxic Biohazard is the 4th generation in the line of Toxic synthesizers. Toxic Biohazard distills the best features from the previous 3 versions to deliver our most concentrated Toxic ever. Beware. File Name: toxicbiohazard_install.exe Author: Image-Line License: Demo ($) File Size: 5.52 Mb Runs on: WinXP, Win Vista, Windows 7. This is my first theme, I made it for my beautiful wife who loves linux and hearts, space and pink.It uses the gtk from ambiance, the biohazard theme metacity file which I changed to add some crux theme options like images in the titlebar and it also tiles images.It also uses unfocused or inactive states for windows not in use (it grays out the frames more) I added stars in the titlebar, menu bar and menu items. File Name: 127484-BarbPink.tar.gz Author: Sam Riggs License: Freeware (Free) File Size: 40 Kb Runs on: Linux. Tool to determine the number of software downloads from the download sites. Joatl Download Counter is a tool to determine the number of software downloads from the download sites. Most developers send their software to various download sites. File Name: Joatl_Download_Counter_v1.0. 0_-_32bit.zip Author: Joatl License: Freeware (Free) File Size: 1.84 Mb Runs on: WinXP, Win Vista, Windows 7,Windows Vista, Windows 7 x64. Find out where to download anything you want. Ultimate Download Resources is a program that gives you a list of places to download music, movies, games and more. Just answer 3 simple questions, and the program will then analyze your answers to recommend the best place for you to download files. -

Index Images Download 2006 News Crack Serial Warez Full 12 Contact

index images download 2006 news crack serial warez full 12 contact about search spacer privacy 11 logo blog new 10 cgi-bin faq rss home img default 2005 products sitemap archives 1 09 links 01 08 06 2 07 login articles support 05 keygen article 04 03 help events archive 02 register en forum software downloads 3 security 13 category 4 content 14 main 15 press media templates services icons resources info profile 16 2004 18 docs contactus files features html 20 21 5 22 page 6 misc 19 partners 24 terms 2007 23 17 i 27 top 26 9 legal 30 banners xml 29 28 7 tools projects 25 0 user feed themes linux forums jobs business 8 video email books banner reviews view graphics research feedback pdf print ads modules 2003 company blank pub games copyright common site comments people aboutus product sports logos buttons english story image uploads 31 subscribe blogs atom gallery newsletter stats careers music pages publications technology calendar stories photos papers community data history arrow submit www s web library wiki header education go internet b in advertise spam a nav mail users Images members topics disclaimer store clear feeds c awards 2002 Default general pics dir signup solutions map News public doc de weblog index2 shop contacts fr homepage travel button pixel list viewtopic documents overview tips adclick contact_us movies wp-content catalog us p staff hardware wireless global screenshots apps online version directory mobile other advertising tech welcome admin t policy faqs link 2001 training releases space member static join health -

Highlights Ethiopia Reads Founder to Keynote Saturday President’s Program Sunrise Speaker Series Yohannes Gebregeorgis, 8:00 – 9:00 a.m.

ALACognotes ALA BOSTON — 2010 MIDWINTER MEETING Saturday, January 16, 2010 Highlights Ethiopia Reads Founder to Keynote Saturday President’s Program Sunrise Speaker Series ohannes Gebregeorgis, 8:00 – 9:00 a.m. Y founder and execu- Boston Convention and tive director of Ethiopia Exhibition Center, Reads, will serve as key- Grand Ballroom note speaker for the ALA President’s Program 3:30 Exhibits Open p.m., Sunday at the Boston 9:00 a.m. – 5:00 p.m. Convention and Exhibition Center. Arthur Curley Memorial Ethiopia Reads focuses Lecture on Gebregeorigis’ organiza- 1:30 – 2:30 p.m. tion’s literacy work. The ALA President Camila A. Alire and Gene Shimshock, chair, Boston Convention and organization encourages Exhibits Round Table, cut the ribbon to open the exhibits as a love of reading by es- Exhibition Center, ALA President-elect Roberta A Stevens and the ALA Executive tablishing children’s and Yohannes Gebregeorgis Grand Ballroom Board look on. youth libraries in Ethiopia, free distribution of books to speak to the nation’s Spotlight on Adult Literature to children and multilin- library leaders on the val- 2:00 – 4:00 p.m. Toby Lester Analyzes the gual publishing. Gebre- ue of libraries,” said ALA Boston Convention and georgis was selected as one President Camila Alire. Exhibition Center Exhibit Birth Certificate of America of CNN’s Top 10 Heroes in “In a world where knowl- edge is power, libraries Floor By Frederick J. Augustyn, Jr. ing copy of the Waldseemuller 2008 for his work in estab- make communities more The Library of Congress map, which originally may lishing children’s libraries powerful. -

Microsoft Surface User Experience Guidelines

Microsoft Surface™ User Experience Guidelines Designing for Successful Touch Experiences Version 1.0 June 30, 2008 1 Copyright This document is provided for informational purposes only, and Microsoft makes no warranties, either express or implied, in this document. Information in this document, including URL and other Internet Web site references, is subject to change without notice. The entire risk of the use or the results from the use of this document remains with the user. Unless otherwise noted, the example companies, organizations, products, domain names, e-mail addresses, logos, people, places, financial and other data, and events depicted herein are fictitious. No association with any real company, organization, product, domain name, e-mail address, logo, person, places, financial or other data, or events is intended or should be inferred. Complying with all applicable copyright laws is the responsibility of the user. Without limiting the rights under copyright, no part of this document may be reproduced, stored in or introduced into a retrieval system, or transmitted in any form or by any means (electronic, mechanical, photocopying, recording, or otherwise), or for any purpose, without the express written permission of Microsoft. Microsoft may have patents, patent applications, trademarks, copyrights, or other intellectual property rights covering subject matter in this document. Except as expressly provided in any written license agreement from Microsoft, the furnishing of this document does not give you any license to these patents, trademarks, copyrights, or other intellectual property. © 2008 Microsoft Corporation. All rights reserved. Microsoft, Excel, Microsoft Surface, the Microsoft Surface logo, Segoe, Silverlight, Windows, Windows Media, Windows Vista, Virtual Earth, Xbox LIVE, XNA, and Zune are either registered trademarks or trademarks of the Microsoft group of companies. -

Exploring the Process of Technological Innovation Matthew McKinlay

Combination and context: Exploring the process of technological innovation. Matthew McKinlay. Entrepreneurship Commercialisation and Innovation Centre of the University of Adelaide. Submitted: 21st September 2017. Submitted for the degree of Doctor of Philosophy. Table of Contents: CHAPTER 1 Introduction; 1.0 Introduction; 1.1 Practical problem focus; 1.2 Theoretical focus; 1.3 The research questions; 1.4 Scope of the research; 1.4.1 Research design; 1.5 Methodology; 1.6 Research outcomes; 1.7 Summary of contributions; 1.8 Thesis overview

WPF in Action with Visual Studio 2008

www.it-ebooks.info WPF in Action with Visual Studio 2008. COVERS VISUAL STUDIO 2008 SP1 AND .NET 3.5 SP1. ARLEN FELDMAN, MAXX DAYMON. MANNING, Greenwich (74° w. long.). For online information and ordering of this and other Manning books, please visit www.manning.com. The publisher offers discounts on this book when ordered in quantity. For more information, please contact: Special Sales Department, Manning Publications Co., Sound View Court 3B, Greenwich, CT 06830. Fax: (609) 877-8256. Email: [email protected] ©2009 by Manning Publications Co. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by means electronic, mechanical, photocopying, or otherwise, without prior written permission of the publisher. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in the book, and Manning Publications was aware of a trademark claim, the designations have been printed in initial caps or all caps. Recognizing the importance of preserving what has been written, it is Manning’s policy to have the books we publish printed on acid-free paper, and we exert our best efforts to that end. Recognizing also our responsibility to conserve the resources of our planet, Manning books are printed on paper that is at least 15% recycled and processed elemental chlorine-free. Development Editor: Jeff Bleiel Manning

Quick Start Guide Contents

Quick Start Guide. Contents: INTRODUCTION; Conventions used in this documentation; About this software; About the Wonderware InduSoft Web Studio software components; Install the full Wonderware InduSoft Web Studio software; Install EmbeddedView or CEView on a target device; Execution Modes; THE DEVELOPMENT ENVIRONMENT; Title Bar; Status Bar; Application button; Quick Access Toolbar; Ribbon; Home tab

Open Model for the Distribution of Mobile Applications on Multiple Platforms

DIPLOMA THESIS. Title of the diploma thesis: “Concept of a distribution and infrastructure model for mobile applications development across multiple mobile platforms”. Author: Peter Bacher. Academic degree sought: Magister der Sozial- und Wirtschaftswissenschaften (Mag. rer. soc. oec.). Vienna, 2011. Degree programme code as per student record sheet: A 157. Field of study as per student record sheet: Diploma Programme in International Business Administration. Supervisor: Univ.-Prof. Dr. Dimitris Karagiannis. Declaration: I hereby affirm that I have written this diploma thesis without the help of third parties and using only the sources and aids indicated. All passages taken from the sources have been marked as such. This thesis has not previously been submitted in the same or a similar form to any examination authority. Vienna, 05.12.2011, Peter Bacher. Table of Contents: 1 Introduction; 1.1 Motivation; 1.2 General problem statement; 1.3 Goal of the Thesis; 1.4 Approach

Windows Embedded CE

Windows Embedded CE. While the traditional Windows desktop operating system (OS) developed by Microsoft was designed to run on well-defined and standardized computing hardware built with the x86 processor, Windows Embedded CE was designed to support multiple families of processors. This chapter provides an overview of CE and improvements for the latest Windows Embedded CE 6.0 R2 release. Multiple Windows Embedded products, including Windows Embedded CE, are being promoted and supported by the same business unit within Microsoft, the Windows Embedded Product group. What Is Embedded? Embedded is an industry buzz word that’s been in use for many years. Although it’s common for us to hear terms like embedded system, embedded software, embedded computer, embedded controller, and the like, most developers as well as business and teaching professionals have mixed views about the embedded market. To fully understand the potential offered by the Windows Embedded product family, we need to have a good understanding of what’s considered an embedded device and embedded software. Before getting into talking about Windows Embedded products, let’s take a brief look at what embedded hardware and software are. Embedded Devices: A computer is an electronic, digital device that can store and process information. In a similar fashion, an embedded device has a processor and memory and runs software. While a computer is designed for general computing purposes, allowing the user to install different operating systems and applications to perform different tasks, an embedded device is generally developed with a single purpose and provides certain designated functions.