Microsoft Surface User Experience Guidelines

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

MAGIC Summoning: Towards Automatic Suggesting and Testing of Gestures with Low Probability of False Positives During Use

Journal of Machine Learning Research 14 (2013) 209-242. Submitted 10/11; Revised 6/12; Published 1/13. MAGIC Summoning: Towards Automatic Suggesting and Testing of Gestures With Low Probability of False Positives During Use. Daniel Kyu Hwa Kohlsdorf [email protected] Thad E. Starner [email protected] GVU & School of Interactive Computing, Georgia Institute of Technology, Atlanta, GA 30332. Editors: Isabelle Guyon and Vassilis Athitsos. Abstract: Gestures for interfaces should be short, pleasing, intuitive, and easily recognized by a computer. However, it is a challenge for interface designers to create gestures easily distinguishable from users’ normal movements. Our tool MAGIC Summoning addresses this problem. Given a specific platform and task, we gather a large database of unlabeled sensor data captured in the environments in which the system will be used (an “Everyday Gesture Library” or EGL). The EGL is quantized and indexed via multi-dimensional Symbolic Aggregate approXimation (SAX) to enable quick searching. MAGIC exploits the SAX representation of the EGL to suggest gestures with a low likelihood of false triggering. Suggested gestures are ordered according to brevity and simplicity, freeing the interface designer to focus on the user experience. Once a gesture is selected, MAGIC can output synthetic examples of the gesture to train a chosen classifier (for example, with a hidden Markov model). If the interface designer suggests his own gesture and provides several examples, MAGIC estimates how accurately that gesture can be recognized and estimates its false positive rate by comparing it against the natural movements in the EGL. We demonstrate MAGIC’s effectiveness in gesture selection and helpfulness in creating accurate gesture recognizers.

“MICROSOFT COMPLETE” Service Contract Terms & Conditions

“MICROSOFT COMPLETE” Service Contract Terms & Conditions Thank You for purchasing “Microsoft Complete”! Please keep this important terms and conditions Contract document and Proof of Purchase together in a safe place, as these will be needed at time of a Claim. The information contained in this Contract document is intended to serve as a valuable reference guide to help You determine and understand “WHAT IS COVERED” under Your Contract. BE SURE TO REGISTER YOUR SERVICE CONTRACT ONLINE! In order to maximize Your benefits, please go to https://devicesupport.microsoft.com/ and register Your Service Contract within 10 days of purchase. Failure to do so may result in significant service delays when You have a Claim. For any questions regarding the information contained in this Contract document, or Your Coverage in general, please call Us toll-free at the following, as applicable to Your purchased Plan: For “SURFACE PLAN” or “STUDIO PLAN”: 1-855-425-8900 For “XBOX PLAN”: 1-877-696-7786 You can also visit https://support.microsoft.com/en-us/devices for online web support. CONSUMER RIGHTS: FOR CONSUMERS IN TERRITORIES THAT HAVE THE BENEFIT OF CONSUMER PROTECTION LAWS OR REGULATIONS, THE BENEFITS CONFERRED BY THIS SERVICE CONTRACT ARE IN ADDITION TO ALL RIGHTS AND REMEDIES PROVIDED UNDER SUCH LAWS AND REGULATIONS. NOTHING IN THIS SERVICE CONTRACT SHALL PREJUDICE CONSUMER RIGHTS GRANTED BY APPLICABLE MANDATORY LAWS, INCLUDING CONSUMER’S RIGHT TO THE REMEDIES UNDER STATUTORY WARRANTY LAW AND TO SEEK DAMAGES IN THE EVENT OF TOTAL OR PARTIAL NON-PERFORMANCE OR INADEQUATE PERFORMANCE BY US OF ANY OF OUR CONTRACTUAL OBLIGATIONS. -

Calendrical Calculations: Third Edition

Notes and Errata for Calendrical Calculations: Third Edition Nachum Dershowitz and Edward M. Reingold Cambridge University Press, 2008 4:00am, July 24, 2013 Do I contradict myself ? Very well then I contradict myself. (I am large, I contain multitudes.) —Walt Whitman: Song of Myself All those complaints that they mutter about. are on account of many places I have corrected. The Creator knows that in most cases I was misled by following. others whom I will spare the embarrassment of mention. But even were I at fault, I do not claim that I reached my ultimate perfection from the outset, nor that I never erred. Just the opposite, I always retract anything the contrary of which becomes clear to me, whether in my writings or my nature. —Maimonides: Letter to his student Joseph ben Yehuda (circa 1190), Iggerot HaRambam, I. Shilat, Maaliyot, Maaleh Adumim, 1987, volume 1, page 295 [in Judeo-Arabic] Cuiusvis hominis est errare; nullius nisi insipientis in errore perseverare. [Any man can make a mistake; only a fool keeps making the same one.] —Attributed to Marcus Tullius Cicero If you find errors not given below or can suggest improvements to the book, please send us the details (email to [email protected] or hard copy to Edward M. Reingold, Department of Computer Science, Illinois Institute of Technology, 10 West 31st Street, Suite 236, Chicago, IL 60616-3729 U.S.A.). If you have occasion to refer to errors below in corresponding with the authors, please refer to the item by page and line numbers in the book, not by item number. -

AmigaOS 3.2 FAQ 47.1 (09.04.2021) English

$VER: AmigaOS 3.2 FAQ 47.1 (09.04.2021) English
Please note: This file contains a list of frequently asked questions along with answers, sorted by topics. Before trying to contact support, please read through this FAQ to determine whether or not it answers your question(s). Whilst this FAQ is focused on AmigaOS 3.2, it contains information regarding previous AmigaOS versions.
Index of topics covered in this FAQ:
1. Installation
1.1 * What are the minimum hardware requirements for AmigaOS 3.2?
1.2 * Why won't AmigaOS 3.2 boot with 512 KB of RAM?
1.3 * Ok, I get it; 512 KB is not enough anymore, but can I get my way with less than 2 MB of RAM?
1.4 * How can I verify whether I correctly installed AmigaOS 3.2?
1.5 * Do you have any tips that can help me with 3.2 using my current hardware and software combination?
1.6 * The Help subsystem fails, it seems it is not available anymore. What happened?
1.7 * What are GlowIcons? Should I choose to install them?
1.8 * How can I verify the integrity of my AmigaOS 3.2 CD-ROM?
1.9 * My Greek/Russian/Polish/Turkish fonts are not being properly displayed. How can I fix this?
1.10 * When I boot from my AmigaOS 3.2 CD-ROM, I am being welcomed to the "AmigaOS Preinstallation Environment". What does this mean?
1.11 * What is the optimal ADF images/floppy disk ordering for a full AmigaOS 3.2 installation?
1.12 * LoadModule fails for some unknown reason when trying to update my ROM modules.

Surface Hub 2S Admin Guide

Surface Hub 2S Admin Guide. Surface Hub 2S coming soon; pre-release products shown; products and features subject to regulatory certification/approval, may change, and may vary by country/region. Surface Hub 2S has not yet been authorized under U.S. Federal Communications Commission (FCC) rules; actual sale and delivery is contingent on compliance with applicable FCC requirements. This documentation is an early release of the final documentation, which may be changed prior to final commercial release and is confidential and proprietary information of Microsoft Corporation. This document is provided for informational purposes only and Microsoft makes no warranties, either express or implied, in this document. © 2019 Microsoft Corporation. All rights reserved.
Contents:
Introduction
Welcome to Surface Hub 2S
New User Experience and Features
Microsoft Teams
New form factor and hardware changes
Surface

News Fact Sheet

Intel Corporation 2200 Mission College Blvd. P.O. Box 58119 Santa Clara, CA 95052-8119 News Fact Sheet CONTACT: Cristina Gutierrez 415-591-4047 [email protected] Intel News at Game Developers Conference March 23, 2009: At the Game Developers Conference in San Francisco, Intel Corporation announced broad additions to its Visual Adrenaline program, a comprehensive and worldwide offering dedicated to serving visual computing developers. First announced in August 2008, Visual Adrenaline provides tools, resources, information and marketing opportunities to game developers, artists and animators. Below is an overview of Intel’s news at the show. Developer Tools for Better Gaming – The Intel® Graphics Performance Analyzers (GPA) is a new suite of software tools that help software and PC game developers analyze and optimize for performance on Intel® Integrated Graphics. Consisting of the System Analyzer, Frame Analyzer, and the Software Development Kit, the suite of tools provides in-depth application analysis and customization that help developers pinpoint performance bottlenecks and enable them to optimize games for Intel Integrated Graphics-based desktops and notebooks. GPA offers software tools that provide a holistic view of system and graphics performance for Intel-based systems. GPA supports Intel integrated graphics chipsets and processors, including the Intel® Core™ i7 Processor, with planned support for future Intel graphics and multicore-related products. GPA is free of charge to members of the Visual Adrenaline program and is available to non-members for $299 from the Intel® Business Exchange. To learn more, visit www.intel.com/software/gpa or see the GPA news release http://www.intel.com/pressroom/archive/releases/20090323comp.htm. -

Tablets in the Enterprise: Comparing the Total Cost of Ownership

TABLETS IN THE ENTERPRISE: COMPARING THE TOTAL COST OF OWNERSHIP. January 2013. A Principled Technologies white paper, commissioned by Intel Corp.
EXECUTIVE SUMMARY: Windows 8 tablets provide a PC experience, which for many workers is essential to maintaining productivity. With Windows 8, users can run familiar desktop applications, maintaining productivity without having to find new ways to carry out their tasks. They can read, edit, and print their emails and Office documents, tasks that can be a challenge on other tablets. In addition to these productivity advantages, Intel Core i5 processor and Windows 8 tablets can provide enterprises with total cost of ownership (TCO) savings of up to 18 percent compared to other tablets. We expect additional TCO savings with Intel Core vPro™ processor-based tablets due to their additional manageability capabilities. We estimate tablets with Intel Architecture and Windows 8 will have lower TCO than other tablets, primarily due to the following: reduced software costs, lower management costs, and improved end-user productivity.
TOTAL COST OF OWNERSHIP OF TABLETS IN THE ENTERPRISE: In this paper, we first present a TCO analysis for a hypothetical enterprise considering four major categories of tablets for their employees: Microsoft Windows 8 tablets, Windows RT tablets, Android tablets, and Apple iPads. Because the ranges of prices and capabilities differ among models within each category, we selected a single representative model for each. Following this analysis, we discuss the dominant issues in enterprise tablet computing as of this writing. We base our TCO analysis on the primary assumptions in Figure 1. Tablets must be nine inches or larger with 64 GB of storage and Wi-Fi support.

Understanding and Mitigating the Security Risks of Apple Zeroconf

2016 IEEE Symposium on Security and Privacy. Staying Secure and Unprepared: Understanding and Mitigating the Security Risks of Apple ZeroConf. Xiaolong Bai*,1, Luyi Xing*,2, Nan Zhang2, XiaoFeng Wang2, Xiaojing Liao3, Tongxin Li4, Shi-Min Hu1. 1TNList, Tsinghua University, Beijing, 2Indiana University Bloomington, 3Georgia Institute of Technology, 4Peking University. [email protected], {luyixing, nz3, xw7}@indiana.edu, [email protected], [email protected], [email protected]
Abstract: With the popularity of today’s usability-oriented designs, dubbed Zero Configuration or ZeroConf, unclear are the security implications of these automatic service discovery, “plug-and-play” techniques. In this paper, we report the first systematic study on this issue, focusing on the security features of the systems related to Apple, the major proponent of ZeroConf techniques. Our research brings to light a disturbing lack of security consideration in these systems’ designs: major ZeroConf frameworks on the Apple platforms, including the Core Bluetooth Framework, Multipeer Connectivity and
[...] tend to build their systems in a “plug-and-play” fashion, using techniques dubbed zero-configuration (ZeroConf). For example, the AirDrop service on iPhone, once activated, automatically detects another Apple device nearby running the service to transfer documents. Such ZeroConf services are characterized by automatic IP selection, host name resolving and target service discovery. Prominent examples include Apple’s Bonjour [3], and the Link-Local Multicast

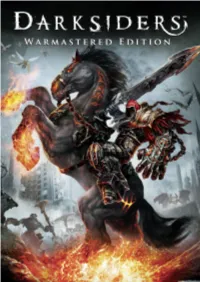

Remaster Edition Features

Deceived by the forces of evil into prematurely bringing about the end of the world, War – the first Horseman of the Apocalypse – stands accused of breaking the sacred law by inciting a war between Heaven and Hell. In the slaughter that ensued, the demonic forces defeated the heavenly hosts and laid claim to the Earth. Brought before the sacred Charred Council, War is indicted for his crimes and stripped of his powers. Dishonored and facing his own death, War is given the opportunity to return to Earth to search for the truth and punish those responsible. Hunted by a vengeful group of Angels, War must take on the forces of Hell, forge uneasy alliances with the very demons he hunts, and journey across the ravaged remains of the Earth on his quest for vengeance and vindication.
Remaster Edition Features
• PS4, Xbox One and Wii U versions of Darksiders (Wrath of War)
• Native 1080p rendering resolution
• Doubled all the texture resolutions
• Rendering improvements and rework
• Better shadow rendering quality
• Post processing effects
• 60 FPS in moment to moment gameplay (PS4, Xbox One, PC, 30 for WiiU)
Apocalyptic Power – Unleash the wrath of War, combining brutal attacks and supernatural abilities to decimate all who stand in your way
Extreme Arsenal – Wield a devastating arsenal of angelic, demonic and Earthly weapons; and blaze a trail of destruction atop Ruin, War’s fiery phantom steed
Epic Quest – Battle across the wastelands and demon-infested dungeons of the decimated Earth in your quest for vengeance and redemption
Character Progression – Uncover powerful ancient relics, upgrade your weapons, unlock new abilities, and customize your gameplay style
Battle Heaven and Hell – Battle against all who stand in your way - from war-weary angelic forces to Hell’s hideous demon hordes
© 2016 by Nordic Games Licensing AB, Sweden.

Solution 6: Drag and Drop

Solution 6: Drag and Drop
The ultimate in user interactivity, drag and drop is taken for granted in desktop applications but is a litmus test of sorts for web applications: If you can easily implement drag and drop with your web application framework, then you know you’ve got something special. Until now, drag and drop for web applications has, for the most part, been limited to specialized JavaScript frameworks such as Script.aculo.us and Rico.1 No more. With the advent of GWT, we have drag-and-drop capabilities in a Java-based web application framework. Although GWT does not explicitly support drag and drop (drag and drop is an anticipated feature in the future), it provides us with all the necessary ingredients to make our own drag-and-drop module. In this solution, we explore drag-and-drop implementation with GWT. We implement drag and drop in a module of its own so that you can easily incorporate drag and drop into your applications.
Stuff You’re Going to Learn
This solution explores the following aspects of GWT:
• Implementing composite widgets with the Composite class (page 174)
• Removing widgets from panels (page 169)
• Changing cursors for widgets with CSS styles (page 200)
• Implementing a GWT module (page 182)
• Adding multiple listeners to a widget (page 186)
• Using the AbsolutePanel class to place widgets by pixel location (page 211)
• Capturing and releasing events for a specific widget (page 191)
• Using an event preview to inhibit browser reactions to events (page 196)
1 See http://www.script.aculo.us and http://openrico.org for more information about Script.aculo.us and Rico, respectively.

Cooper | Interaction Design

Cooper | Interaction Design. The Myth of Metaphor, by Alan Cooper, Chairman & Founder. June 1995. Originally published in Visual Basic Programmer's Journal (http://www.cooper.com/articles/art_myth_of_metaphor.htm). Software designers often speak of "finding the right metaphor" upon which to base their interface design. They imagine that rendering their user interface in images of familiar objects from the real world will provide a pipeline to automatic learning by their users. So they render their user interface as an office filled with desks, file cabinets, telephones and address books, or as a pad of paper or a street of buildings in the hope of creating a program with breakthrough ease-of-learning. And if you search for that magic metaphor, you will be in august company. Some of the best and brightest designers in the interface world put metaphor selection as one of their first and most important tasks. But by searching for that magic metaphor you will be making one of the biggest mistakes in user interface design. Searching for that guiding metaphor is like searching for the correct steam engine to power your airplane, or searching for a good dinosaur on which to ride to work. I think basing a user interface design on a metaphor is not only unhelpful but can often be quite harmful. The idea that good user interface design is based on metaphors is one of the most insidious of the many myths that permeate the software community. Metaphors offer a tiny boost in learnability to first time users at tremendous cost.

Warranty Information

WARRANTY INFORMATION. LIMITED WARRANTY: Bethesda Softworks LLC, a ZeniMax Media company (“Bethesda Softworks”), warrants to you, the original purchaser of this disc and of the video game included on it (the “Game”), that under normal use the Game will perform substantially as described in the accompanying manual for a period of 90 days from the date of purchase (the “Warranty Period”). This Limited Warranty: (a) does not apply if the Game is used for business or commercial purposes; and (b) is void if the Game fails due to accident, abuse, virus attack, or misuse. EXCLUSIVE REMEDY AND LIMITATION OF LIABILITY: Any warranty claim must be directed to the retailer from whom you purchased the Game. You must return the Game to the retailer within the Warranty Period together with a copy of the original purchase receipt and a description of the problem affecting the Game. The retailer may, at its discretion, repair or replace the Game. Any replacement Game will be warranted for the remainder of the original Warranty Period or for 30 days, whichever is longer. The purchaser's exclusive remedy and the entire liability of Bethesda Softworks, its licensors, and its distributors shall be, at the retailer's discretion, the repair or replacement of any Game that does not conform to this Limited Warranty and is duly returned to the retailer. Outside the United States, none of these remedies or any product support services are available without proof of purchase from an authorized international source.