A Vindication of Program Verification 1. Introduction

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

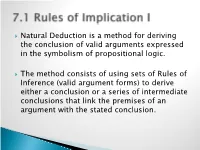

7.1 Rules of Implication I

Natural Deduction is a method for deriving the conclusion of valid arguments expressed in the symbolism of propositional logic. The method consists of using sets of Rules of Inference (valid argument forms) to derive either a conclusion or a series of intermediate conclusions that link the premises of an argument with the stated conclusion.
The First Four Rules of Inference:
◦ Modus Ponens (MP): p ⊃ q, p ∴ q
◦ Modus Tollens (MT): p ⊃ q, ~q ∴ ~p
◦ Pure Hypothetical Syllogism (HS): p ⊃ q, q ⊃ r ∴ p ⊃ r
◦ Disjunctive Syllogism (DS): p v q, ~p ∴ q
Common strategies for constructing a proof involving the first four rules:
◦ Always begin by attempting to find the conclusion in the premises. If the conclusion is not present in its entirety in the premises, look at the main operator of the conclusion. This will provide a clue as to how the conclusion should be derived.
◦ If the conclusion contains a letter that appears in the consequent of a conditional statement in the premises, consider obtaining that letter via modus ponens.
◦ If the conclusion contains a negated letter and that letter appears in the antecedent of a conditional statement in the premises, consider obtaining the negated letter via modus tollens.
◦ If the conclusion is a conditional statement, consider obtaining it via pure hypothetical syllogism.
◦ If the conclusion contains a letter that appears in a disjunctive statement in the premises, consider obtaining that letter via disjunctive syllogism.
Four Additional Rules of Inference:
◦ Constructive Dilemma (CD): (p ⊃ q) • (r ⊃ s), p v r ∴ q v s
◦ Simplification (Simp): p • q ∴ p
◦ Conjunction (Conj): p, q ∴ p • q
◦ Addition (Add): p ∴ p v q
Common strategies involving the additional rules of inference:
◦ If the conclusion contains a letter that appears in a conjunctive statement in the premises, consider obtaining that letter via simplification.
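One way to see why the rules named above count as valid argument forms is to check them by brute-force truth table. The following is a minimal sketch, not part of the excerpted text: the helper names `implies` and `is_valid` are hypothetical, and the final check (affirming the consequent) is included only as a contrasting invalid form.

```python
from itertools import product


def implies(a, b):
    """Material conditional: false only when a is true and b is false."""
    return (not a) or b


def is_valid(premises, conclusion, n_vars):
    """True if every assignment that makes all premises true also makes the conclusion true."""
    for values in product([True, False], repeat=n_vars):
        if all(p(*values) for p in premises) and not conclusion(*values):
            return False  # found a counterexample row
    return True


# Modus ponens: if p then q, p, therefore q
modus_ponens = is_valid(
    [lambda p, q: implies(p, q), lambda p, q: p], lambda p, q: q, 2)

# Modus tollens: if p then q, not q, therefore not p
modus_tollens = is_valid(
    [lambda p, q: implies(p, q), lambda p, q: not q], lambda p, q: not p, 2)

# Pure hypothetical syllogism: if p then q, if q then r, therefore if p then r
hypothetical_syllogism = is_valid(
    [lambda p, q, r: implies(p, q), lambda p, q, r: implies(q, r)],
    lambda p, q, r: implies(p, r), 3)

# Disjunctive syllogism: p or q, not p, therefore q
disjunctive_syllogism = is_valid(
    [lambda p, q: p or q, lambda p, q: not p], lambda p, q: q, 2)

# Contrast: affirming the consequent (if p then q, q, therefore p) is not valid
affirming_consequent = is_valid(
    [lambda p, q: implies(p, q), lambda p, q: q], lambda p, q: p, 2)
```

The truth-table check establishes validity semantically; natural deduction instead derives the conclusion step by step using the rules themselves.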

Predicate Logic. Formal and Informal Proofs

CS 441 Discrete Mathematics for CS, Lecture 5: Predicate logic. Milos Hauskrecht, [email protected], 5329 Sennott Square
Negation of quantifiers
English statement:
• Nothing is perfect.
• Translation: ¬∃x Perfect(x)
Another way to express the same meaning:
• Everything is imperfect.
• Translation: ∀x ¬Perfect(x)
Conclusion: ¬∃x P(x) is equivalent to ∀x ¬P(x)
English statement:
• It is not the case that all dogs are fleabags.
• Translation: ¬∀x (Dog(x) → Fleabag(x))
Another way to express the same meaning:
• There is a dog that is not a fleabag.
• Translation: ∃x (Dog(x) ∧ ¬Fleabag(x))
• Logically equivalent to: ∃x ¬(Dog(x) → Fleabag(x))
Conclusion: ¬∀x P(x) is equivalent to ∃x ¬P(x)
Negation of quantified statements (aka De Morgan laws for quantifiers)
| Negation | Equivalent |
| --- | --- |
| ¬∃x P(x) | ∀x ¬P(x) |
| ¬∀x P(x) | ∃x ¬P(x) |
Formal and informal proofs
Theorems and proofs
• The truth value of some statements about the world is obvious and easy to assign.
• The truth of other statements may not be obvious, but it may still follow (be derived) from known facts about the world.
To show the truth value of such a statement following from other statements, we need to provide a correct supporting argument: a proof.
Important questions:
– When is the argument correct?
– How to construct a correct argument, what method to use?
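The two quantifier-negation equivalences in this excerpt can be illustrated on a small finite domain, where "for all" becomes Python's `all` and "there exists" becomes `any`. This is only a sketch under that finite-domain assumption (it is a sanity check, not a proof), and the function name and the sample `animals` data are hypothetical.

```python
from itertools import product


def check_quantifier_negation(domain):
    """Check both negation laws for every possible predicate P on a finite domain."""
    for truth_values in product([True, False], repeat=len(domain)):
        P = dict(zip(domain, truth_values))  # one candidate predicate
        # not-exists x P(x)  versus  forall x not-P(x)
        if (not any(P[x] for x in domain)) != all(not P[x] for x in domain):
            return False
        # not-forall x P(x)  versus  exists x not-P(x)
        if (not all(P[x] for x in domain)) != any(not P[x] for x in domain):
            return False
    return True


laws_hold = check_quantifier_negation(["a", "b", "c"])

# The dog example: "not all dogs are fleabags" versus
# "there is a dog that is not a fleabag" (made-up sample data).
animals = [
    {"dog": True, "fleabag": True},
    {"dog": True, "fleabag": False},
    {"dog": False, "fleabag": False},
]
not_all_dogs_fleabags = not all((not a["dog"]) or a["fleabag"] for a in animals)
some_dog_not_fleabag = any(a["dog"] and not a["fleabag"] for a in animals)
```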

Classical First-Order Logic Software Formal Verification

Classical First-Order Logic. Software Formal Verification. Maria João Frade, Departamento de Informática, Universidade do Minho, 2008/2009
Introduction
First-order logic (FOL) is a richer language than propositional logic. Its lexicon contains not only the symbols ∧, ∨, ¬, and → (and parentheses) from propositional logic, but also the symbols ∃ and ∀ for "there exists" and "for all", along with various symbols to represent variables, constants, functions, and relations. There are two sorts of things involved in a first-order logic formula: terms, which denote the objects that we are talking about; and formulas, which denote truth values.
Examples: "Not all birds can fly." "Every child is younger than its mother." "Andy and Paul have the same maternal grandmother."
Syntax
Variables: x, y, z, … ∈ X (represent arbitrary elements of an underlying set)
Constants: a, b, c, … ∈ C (represent specific elements of an underlying set)
Functions: f, g, h, … ∈ F (every function f has a fixed arity, ar(f))
Predicates: P, Q, R, … ∈ P (every predicate P has a fixed arity, ar(P))
Fixed logical symbols: ⊤, ⊥, ∧, ∨, ¬, ∀, ∃
Fixed predicate symbol: = for "equals" ("first-order logic with equality")
Terms: the set T of terms of FOL is given by the abstract syntax
T ∋ t ::= x | c | f(t1, …, t_ar(f))
Formulas: the set L of formulas of FOL is given by the abstract syntax
L ∋ φ, ψ ::= ⊥ | ⊤ | ¬φ | φ ∧ ψ | φ ∨ ψ | φ → ψ | t1 = t2 | ∀x. φ | ∃x. φ | P(t1, …, t_ar(P))
¬, ∀, ∃ bind most tightly; then ∨ and ∧; then →, which is right-associative.
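The abstract syntax for terms, together with the fixed-arity requirement on function symbols, maps naturally onto a small recursive data type. The sketch below is illustrative only: the class names, the `ARITY` table, and the symbols `mother` and `plus` are assumptions made for the example and do not come from the slides.

```python
from dataclasses import dataclass
from typing import Tuple, Union


@dataclass(frozen=True)
class Var:
    name: str  # a variable such as x, y, z


@dataclass(frozen=True)
class Const:
    name: str  # a constant such as a, b, c


@dataclass(frozen=True)
class Func:
    symbol: str                # a function symbol such as f, g, h
    args: Tuple["Term", ...]   # argument terms


Term = Union[Var, Const, Func]

# Hypothetical signature: each function symbol has one fixed arity.
ARITY = {"mother": 1, "plus": 2}


def well_formed(t) -> bool:
    """A term is well formed if every function application matches its declared arity."""
    if isinstance(t, (Var, Const)):
        return True
    if isinstance(t, Func):
        return (ARITY.get(t.symbol) == len(t.args)
                and all(well_formed(a) for a in t.args))
    return False


good_term = Func("plus", (Var("x"), Func("mother", (Const("a"),))))
bad_term = Func("mother", (Var("x"), Var("y")))  # wrong number of arguments
```

Formulas could be modelled the same way, with one class per production of the formula grammar.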

Chapter 10: Symbolic Trails and Formal Proofs of Validity, Part 2

Essential Logic, Ronald C. Pine
CHAPTER 10: SYMBOLIC TRAILS AND FORMAL PROOFS OF VALIDITY, PART 2
Introduction
In the previous chapter there were many frustrating signs that something was wrong with our formal proof method that relied on only nine elementary rules of validity. Very simple, intuitive valid arguments could not be shown to be valid. For instance, the following intuitively valid arguments cannot be shown to be valid using only the nine rules.
Somalia and Iran are both foreign policy risks. Therefore, Iran is a foreign policy risk.
S • I / I
Either Obama or McCain was President of the United States in 2009.¹ McCain was not President in 2010. So, Obama was President of the United States in 2010.
(O v C) • ~(O • C), ~C / O
¹ This "or" statement is obviously exclusive, so note the translation.
If the computer networking system works, then Johnson and Kaneshiro will both be connected to the home office. Therefore, if the networking system works, Johnson will be connected to the home office.
N ⊃ (J • K) / N ⊃ J
Either the Start II treaty is ratified or this landmark treaty will not be worth the paper it is written on. Therefore, if the Start II treaty is not ratified, this landmark treaty will not be worth the paper it is written on.
R v ~W / ~R ⊃ ~W
If the light is on, then the light switch must be on. So, if the light switch is not on, then the light is not on.
L ⊃ S / ~S ⊃ ~L
Thus, the nine elementary rules of validity covered in the previous chapter must be only part of a complete system for constructing formal proofs of validity.

Saving the Square of Opposition∗

HISTORY AND PHILOSOPHY OF LOGIC, 2021, https://doi.org/10.1080/01445340.2020.1865782
Saving the Square of Opposition∗
PIETER A. M. SEUREN, Max Planck Institute for Psycholinguistics, Nijmegen, the Netherlands
Received 19 April 2020. Accepted 15 December 2020.
Contrary to received opinion, the Aristotelian Square of Opposition (square) is logically sound, differing from standard modern predicate logic (SMPL) only in that it restricts the universe U of cognitively constructible situations by banning null predicates, making it less unnatural than SMPL. U-restriction strengthens the logic without making it unsound. It also invites a cognitive approach to logic. Humans are endowed with a cognitive predicate logic (CPL), which checks the process of cognitive modelling (world construal) for consistency. The square is considered a first approximation to CPL, with a cognitive set-theoretic semantics. Not being cognitively real, the null set Ø is eliminated from the semantics of CPL. Still rudimentary in Aristotle's On Interpretation (Int), the square was implicitly completed in his Prior Analytics (PrAn), thereby introducing U-restriction. Abelard's reconstruction of the logic of Int is logically and historically correct; the LOCA (Leaking O-Corner Analysis) interpretation of the square, defended by some modern logicians, is logically faulty and historically untenable. Generally, U-restriction, not redefining the universal quantifier, as in Abelard and LOCA, is the correct path to a reconstruction of CPL. Valuation Space modelling is used to compute

Traditional Logic II Text (2Nd Ed

Table of Contents
A Note to the Teacher
Further Study of Simple Syllogisms
Chapter 1: Figure in Syllogisms
Chapter 2: Mood in Syllogisms
Chapter 3: Reducing Syllogisms to the First Figure
Chapter 4: Indirect Reduction of Syllogisms
Arguments in Ordinary Language
Chapter 5: Translating Ordinary Sentences into Logical Statements
Chapter 6: Enthymemes
Hypothetical Syllogisms
Chapter 7: Conditional Syllogisms
Chapter 8: Disjunctive Syllogisms
Chapter 9: Conjunctive Syllogisms
Complex Syllogisms
Chapter 10: Polysyllogisms & Aristotelian Sorites
Chapter 11: Goclenian & Conditional Sorites

Logic and Proof

Logic and Proof. Computer Science Tripos Part IB, Michaelmas Term. Lawrence C Paulson, Computer Laboratory, University of Cambridge, [email protected]. Copyright © 2000 by Lawrence C. Paulson
Contents
1 Introduction and Learning Guide
2 Propositional Logic
3 Proof Systems for Propositional Logic
4 Ordered Binary Decision Diagrams
5 First-order Logic
6 Formal Reasoning in First-Order Logic
7 Clause Methods for Propositional Logic
8 Skolem Functions and Herbrand's Theorem
9 Unification
10 Applications of Unification
11 Modal Logics
12 Tableaux-Based Methods
1 Introduction and Learning Guide
This course gives a brief introduction to logic, including the resolution method of theorem-proving and its relation to the programming language Prolog. Formal logic is used for specifying and verifying computer systems and (sometimes) for representing knowledge in Artificial Intelligence programs. The course should help you with Prolog for AI, and its treatment of logic should be helpful for understanding other theoretical courses. Try to avoid getting bogged down in the details of how the various proof methods work, since you must also acquire an intuitive feel for logical reasoning.
The most suitable course text is this book: Michael Huth and Mark Ryan, Logic in Computer Science: Modelling and Reasoning about Systems (CUP, 2000). It costs £18.36 from Amazon. It covers most aspects of this course with the exception of resolution theorem proving. It includes material that may be useful in Specification and Verification II next year, namely symbolic model checking.

Foundations of Mathematics

Foundations of Mathematics, Fall 2019
Contents
1 Propositional Logic
1.1 The basic definitions
1.2 Disjunctive Normal Form Theorem
1.3 Proofs
1.4 The Soundness Theorem
1.5 The Completeness Theorem
1.6 Completeness, Consistency and Independence
2 Predicate Logic
2.1 The Language of Predicate Logic
2.2 Models and Interpretations
2.3 The Deductive Calculus
2.4 Soundness Theorem for Predicate Logic
3 Models for Predicate Logic
3.1 Models
3.2 The Completeness Theorem for Predicate Logic
3.3 Consequences of the completeness theorem
4 Computability Theory
4.1 Introduction and Examples
4.2 Finite State Automata
4.3 Exercises
4.4 Turing Machines
4.5 Recursive Functions
4.6 Exercises
Chapter 1 Propositional Logic
1.1 The basic definitions
Propositional logic concerns relationships between sentences built up from primitive proposition symbols with logical connectives. The symbols of the language of propositional calculus are
1. Logical connectives: ¬, &, ∨, →, ↔
2. Punctuation symbols: ( , )
3. Propositional variables: A0, A1, A2, ….
A propositional variable is intended to represent a proposition which can either be true or false. Restricted versions, L, of the language of propositional logic can be constructed by specifying a subset of the propositional variables. In this case, let PVar(L) denote the propositional variables of L.
Definition 1.1.1. The collection of sentences, denoted Sent(L), of a propositional language L is defined by recursion.
1. The basis of the set of sentences is the set PVar(L) of propositional variables of L.

Logic and Proof Release 3.18.4

Logic and Proof, Release 3.18.4. Jeremy Avigad, Robert Y. Lewis, and Floris van Doorn. Sep 10, 2021
CONTENTS
1 Introduction
1.1 Mathematical Proof
1.2 Symbolic Logic
1.3 Interactive Theorem Proving
1.4 The Semantic Point of View
1.5 Goals Summarized
1.6 About this Textbook
2 Propositional Logic
2.1 A Puzzle
2.2 A Solution
2.3 Rules of Inference
2.4 The Language of Propositional Logic
2.5 Exercises
3 Natural Deduction for Propositional Logic
3.1 Derivations in Natural Deduction
3.2 Examples
3.3 Forward and Backward Reasoning
3.4 Reasoning by Cases
3.5 Some Logical Identities
3.6 Exercises
4 Propositional Logic in Lean
4.1 Expressions for Propositions and Proofs
4.2 More commands

CHAPTER 8 Hilbert Proof Systems, Formal Proofs, Deduction Theorem

CHAPTER 8 Hilbert Proof Systems, Formal Proofs, Deduction Theorem
The Hilbert proof systems are systems based on a language with implication and contain a Modus Ponens rule as a rule of inference. They are usually called Hilbert style formalizations. We will call them here Hilbert style proof systems, or Hilbert systems, for short. Modus Ponens is probably the oldest of all known rules of inference, as it was already known to the Stoics (3rd century B.C.). It is also considered the most "natural" to our intuitive thinking, and the proof systems containing it as the inference rule play a special role in logic. The Hilbert proof systems put major emphasis on logical axioms, keeping the rules of inference to a minimum, often, in the propositional case, admitting only Modus Ponens as the sole inference rule.
1 Hilbert System H1
Hilbert proof system H1 is a simple proof system based on a language with implication as the only connective, with two axioms (axiom schemas) which characterize the implication, and with Modus Ponens as a sole rule of inference. We define H1 as follows.
H1 = (L_{⇒}, F, {A1, A2}, MP)   (1)
where A1, A2 are axioms of the system and MP is its rule of inference, called Modus Ponens, defined as follows:
A1: (A ⇒ (B ⇒ A));
A2: ((A ⇒ (B ⇒ C)) ⇒ ((A ⇒ B) ⇒ (A ⇒ C)));
MP: from A and (A ⇒ B), infer B;
and A, B, C are any formulas of the propositional language L_{⇒}.
Finding formal proofs in this system requires some ingenuity. Let's construct, as an example, the formal proof of such a simple formula as A ⇒ A.
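The excerpt stops before giving the proof of A ⇒ A, so the following is a sketch of the standard five-step derivation rather than the chapter's own presentation. Formulas are encoded as nested tuples; the helper names `imp`, `axiom1`, `axiom2`, and `modus_ponens` are hypothetical, and `modus_ponens` raises an error if the rule does not apply, so each step is checked as it is built.

```python
def imp(a, b):
    """Build the formula (a => b) as a nested tuple."""
    return ("=>", a, b)


def axiom1(a, b):
    """Instance of A1: (A => (B => A))."""
    return imp(a, imp(b, a))


def axiom2(a, b, c):
    """Instance of A2: ((A => (B => C)) => ((A => B) => (A => C)))."""
    return imp(imp(a, imp(b, c)), imp(imp(a, b), imp(a, c)))


def modus_ponens(premise, implication):
    """From A and (A => B) return B; raise if the shapes do not match."""
    if not (isinstance(implication, tuple)
            and implication[0] == "=>"
            and implication[1] == premise):
        raise ValueError("modus ponens does not apply")
    return implication[2]


A = "A"
# Step 1 (A2 with B := (A => A), C := A):
#   (A => ((A => A) => A)) => ((A => (A => A)) => (A => A))
step1 = axiom2(A, imp(A, A), A)
# Step 2 (A1 with B := (A => A)):  A => ((A => A) => A)
step2 = axiom1(A, imp(A, A))
# Step 3 (MP on steps 2 and 1):  (A => (A => A)) => (A => A)
step3 = modus_ponens(step2, step1)
# Step 4 (A1 with B := A):  A => (A => A)
step4 = axiom1(A, A)
# Step 5 (MP on steps 4 and 3):  A => A
step5 = modus_ponens(step4, step3)
```

The ingenuity lies in choosing the substitution in step 1; once the instance of A2 is picked, the remaining steps are forced.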

List of Rules of Inference

List of rules of inference
This is a list of rules of inference, logical laws that relate to mathematical formulae.
Introduction
Rules of inference are syntactical transform rules which one can use to infer a conclusion from a premise to create an argument. A set of rules can be used to infer any valid conclusion if it is complete, while never inferring an invalid conclusion, if it is sound. A sound and complete set of rules need not include every rule in the following list, as many of the rules are redundant, and can be proven with the other rules. Discharge rules permit inference from a subderivation based on a temporary assumption. Below, the notation φ ⊢ ψ indicates such a subderivation from the temporary assumption φ to ψ.
Rules for classical sentential calculus
Sentential calculus is also known as propositional calculus.
Rules for negations
• Reductio ad absurdum (or Negation Introduction)
• Reductio ad absurdum (related to the law of excluded middle)
• Noncontradiction (or Negation Elimination)
• Double negation elimination
• Double negation introduction
Rules for conditionals
• Deduction theorem (or Conditional Introduction)
• Modus ponens (or Conditional Elimination)
• Modus tollens
Rules for conjunctions
• Adjunction (or Conjunction Introduction)
• Simplification (or Conjunction Elimination)
Rules for disjunctions
• Addition (or Disjunction Introduction)
• Separation of Cases (or Disjunction Elimination)
• Disjunctive syllogism
Rules for biconditionals
• Biconditional Introduction
• Biconditional Elimination
Rules of classical predicate calculus
In the following rules, φ(β/α) is exactly like φ except for having the term β everywhere φ has the free variable α.
• Universal Introduction (or Universal Generalization). Restriction 1: β does not occur in φ.

Symbolic Logic: Grammar, Semantics, Syntax

Symbolic Logic: Grammar, Semantics, Syntax
Logic aims to give a precise method or recipe for determining what follows from a given set of sentences. So we need precise definitions of 'sentence' and 'follows from.'
1. Grammar: What's a sentence?
What are the rules that determine whether a string of symbols is a sentence, and when it is not? These rules are known as the grammar of a particular language. We have actually been operating with two different but very closely related grammars this term: a grammar for propositional logic and a grammar for first-order logic.
The grammar for propositional logic (thus far) is simple:
1. There are an indefinite number of propositional variables, which we have been symbolizing as A, B, … and P, Q, …; each of these is a sentence. ⊥ is also a sentence.
2. If A, B are sentences, then:
• ¬A is a sentence,
• A ∧ B is a sentence, and
• A ∨ B is a sentence.
3. Nothing else is a sentence: anything that is neither a member of 1, nor constructable via successive applications of 2 to the members of 1, is not a sentence.
The grammar for first-order logic (thus far) is more complex. 2 and 3 remain exactly the same as above, but 1 must be replaced by something more complex. First, a list of all predicates (e.g. Tet or SameShape) and proper names (e.g. max, b) of a particular language L must be specified. Then we can define:
1_FOL. A series of symbols s is an atomic sentence if and only if s is an n-place predicate followed by an ordered sequence of n proper names.
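The three clauses of the propositional grammar translate almost directly into a recursive recognizer. The sketch below works on already-parsed trees rather than raw strings, and its encoding is an assumption made for illustration: a propositional variable is a single uppercase letter, ⊥ is the string "⊥", and compound sentences are tuples such as ("¬", A) or ("∧", A, B).

```python
def is_sentence(s) -> bool:
    """Recognize sentences of the propositional grammar, given as nested tuples."""
    # Clause 1: propositional variables and the falsum symbol are sentences.
    if isinstance(s, str):
        return s == "⊥" or (len(s) == 1 and s.isalpha() and s.isupper())
    # Clause 2: negations, conjunctions, and disjunctions of sentences are sentences.
    if isinstance(s, tuple):
        if len(s) == 2 and s[0] == "¬":
            return is_sentence(s[1])
        if len(s) == 3 and s[0] in ("∧", "∨"):
            return is_sentence(s[1]) and is_sentence(s[2])
    # Clause 3: nothing else is a sentence.
    return False


conjunction_ok = is_sentence(("∧", "P", ("¬", "Q")))
falsum_ok = is_sentence("⊥")
# The conditional is not part of the grammar "thus far", so this is rejected.
conditional_rejected = not is_sentence(("→", "P", "Q"))
```

Clause 3 corresponds to the final `return False`: any input not produced by clauses 1 and 2 is rejected.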