The Entscheidungsproblem and Alan Turing



Recommended publications


A Hierarchy of Computably Enumerable Degrees, II: Some Recent Developments and New Directions

A HIERARCHY OF COMPUTABLY ENUMERABLE DEGREES, II: SOME RECENT DEVELOPMENTS AND NEW DIRECTIONS
ROD DOWNEY, NOAM GREENBERG, AND ELLEN HAMMATT

Abstract. A transfinite hierarchy of Turing degrees of c.e. sets has been used to calibrate the dynamics of families of constructions in computability theory, and yields natural definability results. We review the main results of the area, and discuss splittings of c.e. degrees, and finding maximal degrees in upper cones.

1. Vaughan Jones

This paper is part of the Vaughan Jones memorial issue of the New Zealand Journal of Mathematics. Vaughan had a massive influence on world and New Zealand mathematics, both through his work and his personal commitment. Downey first met Vaughan in the early 1990s, around the time he won the Fields Medal. Vaughan had the vision of improving mathematics in New Zealand, and hoped his Fields Medal could be leveraged to this effect. It is fair to say that at the time, maths in New Zealand was not as well-connected internationally as it might have been. It was fortunate that not only was there a lot of press coverage, but at the same time another visionary was operating in New Zealand: Sir Ian Axford. Ian pioneered the Marsden Fund, and a group of mathematicians gathered by Vaughan, Marston Conder, David Gauld, Gaven Martin, and Rod Downey, put forth a proposal for summer meetings to the Marsden Fund. The huge influence of Vaughan saw to it that this was approved, and this group set about bringing in external experts to enrich the NZ scene.

“The Church-Turing “Thesis” As a Special Corollary of Gödel's

“The Church-Turing “Thesis” as a Special Corollary of Gödel’s Completeness Theorem,” in Computability: Turing, Gödel, Church, and Beyond, B. J. Copeland, C. Posy, and O. Shagrir (eds.), MIT Press (Cambridge), 2013, pp. 77-104.
Saul A. Kripke

This is the published version of the book chapter indicated above, which can be obtained from the publisher at https://mitpress.mit.edu/books/computability. It is reproduced here by permission of the publisher who holds the copyright. © The MIT Press

Traditionally, many writers, following Kleene (1952), thought of the Church-Turing thesis as unprovable by its nature but having various strong arguments in its favor, including Turing’s analysis of human computation. More recently, the beauty, power, and obvious fundamental importance of this analysis, what Turing (1936) calls “argument I,” has led some writers to give an almost exclusive emphasis on this argument as the unique justification for the Church-Turing thesis. In this chapter I advocate an alternative justification, essentially presupposed by Turing himself in what he calls “argument II.” The idea is that computation is a special form of mathematical deduction. Assuming the steps of the deduction can be stated in a first-order language, the Church-Turing thesis follows as a special case of Gödel’s completeness theorem (first-order algorithm theorem). I propose this idea as an alternative foundation for the Church-Turing thesis, both for human and machine computation. Clearly the relevant assumptions are justified for computations presently known.

A Proof of Cantor's Theorem

Cantor’s Theorem
Joe Roussos

1 Preliminary ideas

Two sets have the same number of elements (are equinumerous, or have the same cardinality) iff there is a bijection between the two sets.

Mappings: A mapping, or function, is a rule that associates elements of one set with elements of another set. We write this f : X → Y; f is called the function or mapping, the set X is called the domain, and Y is called the codomain. We specify the rule by writing f(x) = y or f : x ↦ y. For example, with X = {1, 2, 3} and Y = {2, 4, 6}, the map f(x) = 2x associates each element x ∈ X with the element of Y that is double it.

A bijection is a mapping that is injective and surjective.

• Injective (one-to-one): A function is injective if each element of the codomain is the image of at most one element of the domain. It never maps more than one element in the domain onto the same element in the codomain. Formally, if f is a function from set X to set Y, then f is injective iff ∀a, b ∈ X, f(a) = f(b) → a = b.
• Surjective (onto): A function is surjective if it maps something onto every element of the codomain. It can map more than one thing onto the same element in the codomain, but it needs to hit everything in the codomain. Formally, if f is a function from set X to set Y, then f is surjective iff ∀y ∈ Y, ∃x ∈ X, f(x) = y.

Figure 1: Injective map.
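
For finite sets, the injective and surjective conditions can be checked by brute force. The following is a minimal sketch, not part of the original notes; the function names `is_injective`, `is_surjective`, and `is_bijective` are illustrative choices, and the example reuses the map f(x) = 2x on X = {1, 2, 3}, Y = {2, 4, 6}.

```python
def is_injective(f, domain):
    """True if no two distinct domain elements share an image."""
    images = [f(x) for x in domain]
    return len(set(images)) == len(images)


def is_surjective(f, domain, codomain):
    """True if every codomain element is the image of some domain element."""
    return set(codomain) <= {f(x) for x in domain}


def is_bijective(f, domain, codomain):
    """A bijection is a map that is both injective and surjective."""
    return is_injective(f, domain) and is_surjective(f, domain, codomain)


def double(x):
    return 2 * x


X = {1, 2, 3}
Y = {2, 4, 6}

print(is_bijective(double, X, Y))              # doubling pairs X with Y exactly
print(is_surjective(double, X, {2, 4, 6, 8}))  # 8 is never hit
print(is_injective(lambda x: x % 2, X))        # 1 and 3 collide
```

Enlarging the codomain to {2, 4, 6, 8} keeps the map injective but breaks surjectivity, which is the distinction the two bullet points draw.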

NP-Completeness (Chapter 8)

CSE 421 Algorithms
NP-Completeness (Chapter 8)

What can we feasibly compute?
Focus so far has been to give good algorithms for specific problems (and general techniques that help do this). Now shifting focus to problems where we think this is impossible. Sadly, there are many…

History

A Brief History of Ideas
• From Classical Greece, if not earlier, "logical thought" held to be a somewhat mystical ability
• Mid 1800's: Boolean Algebra and foundations of mathematical logic created possible "mechanical" underpinnings
• 1900: David Hilbert's famous speech outlines program: mechanize all of mathematics? http://mathworld.wolfram.com/HilbertsProblems.html
• 1930's: Gödel, Church, Turing, et al. prove it's impossible

More History
• 1930/40's: What is (is not) computable
• 1960/70's: What is (is not) feasibly computable
• Goal: a (largely) technology-independent theory of time required by algorithms
• Key modeling assumptions/approximations: asymptotic (Big-O), worst case is revealing; polynomial and exponential time are qualitatively different

Polynomial Time

The class P
Definition: P = the set of (decision) problems solvable by computers in polynomial time, i.e., T(n) = O(n^k) for some fixed k (independent of input). These problems are sometimes called tractable problems.
Examples: sorting, shortest path, MST, connectivity, RNA folding & other dyn. prog., flows & matching; i.e., most of this qtr (exceptions: Change-Making/Stamps, Knapsack, TSP)

Why "Polynomial"?
Point is not that n^2000 is a nice time bound, or that the differences among n and 2n and n^2 are negligible. Rather, simple theoretical tools may not easily capture such differences, whereas exponentials are qualitatively different from polynomials and may be amenable to theoretical analysis.
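
To make the "qualitatively different" claim concrete, here is a small illustrative sketch (not from the slides) that tabulates a polynomial step count n^k against the exponential 2^n. The names `steps_polynomial`, `steps_exponential`, and `growth_table`, and the sample sizes, are assumptions chosen for the example.

```python
def steps_polynomial(n, k=2):
    """Step count of a hypothetical O(n^k) algorithm on input size n."""
    return n ** k


def steps_exponential(n):
    """Step count of a hypothetical O(2^n) algorithm on input size n."""
    return 2 ** n


def growth_table(sizes, k=2):
    """Return (n, n^k, 2^n) for each input size in sizes."""
    return [(n, steps_polynomial(n, k), steps_exponential(n)) for n in sizes]


for n, poly, expo in growth_table([10, 20, 40, 80]):
    print(f"n={n:>3}  n^2={poly:>6}  2^n={expo}")
```

Doubling n multiplies n^2 by a constant factor of 4, whereas it squares 2^n; that difference in kind, rather than any particular constant, is what the slides point to.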

Computability and Incompleteness Fact Sheets

Computability and Incompleteness Fact Sheets

Computability

Definition. A Turing machine is given by:
• A finite set of symbols, s_1, …, s_m (including a "blank" symbol)
• A finite set of states, q_1, …, q_n (including a special "start" state)
• A finite set of instructions, each of the form: If in state q_i scanning symbol s_j, perform act A and go to state q_k, where A is either "move right," "move left," or "write symbol s_l."

The notion of a "computation" of a Turing machine can be described in terms of the data above. From now on, when I write "let f be a function from strings to strings," I mean that there is a finite set of symbols Σ such that f is a function from strings of symbols in Σ to strings of symbols in Σ. I will also adopt the analogous convention for sets.

Definition. Let f be a function from strings to strings. Then f is computable (or recursive) if there is a Turing machine M that works as follows: when M is started with its input head at the beginning of the string x (on an otherwise blank tape), it eventually halts with its head at the beginning of the string f(x).

Definition. Let S be a set of strings. Then S is computable (or decidable, or recursive) if there is a Turing machine M that works as follows: when M is started with its input head at the beginning of the string x, then
• if x is in S, then M eventually halts, with its head on a special "yes" symbol; and
• if x is not in S, then M eventually halts, with its head on a special "no" symbol.
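
The definition above can be sketched as a short simulator. This is an illustrative sketch under stated assumptions, not the fact sheet's own formalism: the excerpt does not say how a machine halts, so the code assumes it halts when no instruction matches the current state and symbol; the blank is written `_`; and the names `run` and `FLIP` are hypothetical. Each instruction maps (state, scanned symbol) to (act, next state), where the act is `"R"`, `"L"`, or `("write", symbol)`.

```python
BLANK = "_"  # assumed representation of the blank symbol


def run(instructions, tape_input, start="q0", max_steps=10_000):
    """Run the machine and return the string starting at the final head position."""
    tape = dict(enumerate(tape_input))  # sparse tape: position -> symbol
    head, state = 0, start
    for _ in range(max_steps):
        symbol = tape.get(head, BLANK)
        if (state, symbol) not in instructions:
            break  # assumed halting rule: no applicable instruction
        act, state = instructions[(state, symbol)]
        if act == "R":
            head += 1
        elif act == "L":
            head -= 1
        else:  # act is ("write", new_symbol)
            tape[head] = act[1]
    else:
        raise RuntimeError("machine did not halt within max_steps")
    # Read the output from the head rightwards up to the first blank.
    out = []
    while tape.get(head, BLANK) != BLANK:
        out.append(tape[head])
        head += 1
    return "".join(out)


# Example machine: flip every bit, then return the head to the start of the output.
FLIP = {
    ("q0", "0"): (("write", "1"), "q1"),
    ("q0", "1"): (("write", "0"), "q1"),
    ("q1", "0"): ("R", "q0"),
    ("q1", "1"): ("R", "q0"),
    ("q0", BLANK): ("L", "q2"),   # reached the end of the input
    ("q2", "0"): ("L", "q2"),
    ("q2", "1"): ("L", "q2"),
    ("q2", BLANK): ("R", "q3"),   # q3 has no instructions, so the machine halts
}

print(run(FLIP, "101"))  # prints 010
```

Because writing and moving are separate acts in this definition, the example needs two states (`q0`, `q1`) per processed cell; the final leftward sweep honours the convention that the machine halts with its head at the beginning of f(x).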

Linear Transformation (Sections 1.8, 1.9) General View: Given an Input, the Transformation Produces an Output

Linear Transformation (Sections 1.8, 1.9)

General view: Given an input, the transformation produces an output. In this sense, a function is also a transformation.

Example. Let A = [4 −3 1 3; 2 0 5 1] (rows separated by a semicolon) and x = (1, 1, 1, 1)^T. Describe matrix-vector multiplication Ax in the language of transformation.

Ax = [4 −3 1 3; 2 0 5 1] (1, 1, 1, 1)^T = (5, 8)^T = b

Vector x is transformed into vector b by left matrix multiplication.

Definition and terminologies. A transformation (or function or mapping) T from R^n to R^m is a rule that assigns to each vector x in R^n a vector T(x) in R^m.
• Notation: T: R^n → R^m
• R^n is the domain of T
• R^m is the codomain of T
• T(x) is the image of vector x
• The set of all images T(x) is the range of T
• When T(x) = Ax, A is an m×n matrix. Range of T = Span{column vectors of A} (HW1.8.7)

See class notes for other examples.

Linear Transformation --- Existence and Uniqueness Questions (Section 1.9)

Definition 1: T: R^n → R^m is onto if each b in R^m is the image of at least one x in R^n.
• i.e. codomain R^m = range of T
• When solving T(x) = b for x (or Ax = b, where A is the standard matrix), there exists at least one solution (Existence question).

Definition 2: T: R^n → R^m is one-to-one if each b in R^m is the image of at most one x in R^n.
• i.e. when solving T(x) = b for x (or Ax = b, where A is the standard matrix), there exists either a unique solution or none at all (Uniqueness question).
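
The view of Ax as a transformation T(x) = Ax can be sketched in a few lines of plain Python. This is an illustration, not part of the original notes: it takes A = [4 −3 1 3; 2 0 5 1] and x = (1, 1, 1, 1) as the example's data (the sign on −3 is an assumption, chosen so that the product equals the stated b = (5, 8)), and the helper names `mat_vec` and `is_linear_on` are hypothetical.

```python
def mat_vec(A, x):
    """Return T(x) = Ax for a matrix given as a list of rows."""
    if any(len(row) != len(x) for row in A):
        raise ValueError("each row of A must have as many entries as x")
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def is_linear_on(A, u, v, c):
    """Spot-check T(u + v) = T(u) + T(v) and T(c u) = c T(u) for T(x) = Ax."""
    u_plus_v = [ui + vi for ui, vi in zip(u, v)]
    additive = mat_vec(A, u_plus_v) == [
        p + q for p, q in zip(mat_vec(A, u), mat_vec(A, v))
    ]
    homogeneous = mat_vec(A, [c * ui for ui in u]) == [
        c * p for p in mat_vec(A, u)
    ]
    return additive and homogeneous


A = [[4, -3, 1, 3],
     [2, 0, 5, 1]]   # a 2x4 matrix, so T maps R^4 to R^2
x = [1, 1, 1, 1]

print(mat_vec(A, x))  # prints [5, 8]
print(is_linear_on(A, [1, 0, 2, -1], [0, 3, 1, 1], 3))
```

Here the domain is R^4 and the codomain is R^2, matching the shape of A; the spot check on two sample vectors illustrates linearity but is of course not a proof of it.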

Edsger Dijkstra: the Man Who Carried Computer Science on His Shoulders

INFERENCE / Vol. 5, No. 3

Edsger Dijkstra: The Man Who Carried Computer Science on His Shoulders
Krzysztof Apt

As it turned out, the train I had taken from Nijmegen to Eindhoven arrived late. To make matters worse, I was then unable to find the right office in the university building. When I eventually arrived for my appointment, I was more than half an hour behind schedule. The professor completely ignored my profuse apologies and proceeded to take a full hour for the meeting. It was the first time I met Edsger Wybe Dijkstra.

At the time of our meeting in 1975, Dijkstra was 45 years old. The most prestigious award in computer science, the ACM Turing Award, had been conferred on him three years earlier. Almost twenty years his junior, I knew very little about the field; I had only learned what a flowchart was a couple of weeks earlier.

Edsger Dijkstra was born in Rotterdam in 1930. He described his father, at one time the president of the Dutch Chemical Society, as “an excellent chemist,” and his mother as “a brilliant mathematician who had no job.” In 1948, Dijkstra achieved remarkable results when he completed secondary school at the famous Erasmiaans Gymnasium in Rotterdam. His school diploma shows that he earned the highest possible grade in no less than six out of thirteen subjects. He then enrolled at the University of Leiden to study physics.

In September 1951, Dijkstra’s father suggested he attend a three-week course on programming in Cambridge. It turned out to be an idea with far-reaching consequences.

The Turing Approach Vs. Lovelace Approach

Connecting the Humanities and the Sciences: Part 2. Two Schools of Thought: The Turing Approach vs. The Lovelace Approach*
Walter Isaacson, The Jefferson Lecture, National Endowment for the Humanities, May 12, 2014

That brings us to another historical figure, not nearly as famous, but perhaps she should be: Ada Byron, the Countess of Lovelace, often credited with being, in the 1840s, the first computer programmer. The only legitimate child of the poet Lord Byron, Ada inherited her father’s romantic spirit, a trait that her mother tried to temper by having her tutored in math, as if it were an antidote to poetic imagination. When Ada, at age five, showed a preference for geography, Lady Byron ordered that the subject be replaced by additional arithmetic lessons, and her governess soon proudly reported, “she adds up sums of five or six rows of figures with accuracy.” Despite these efforts, Ada developed some of her father’s propensities. She had an affair as a young teenager with one of her tutors, and when they were caught and the tutor banished, Ada tried to run away from home to be with him. She was a romantic as well as a rationalist. The resulting combination produced in Ada a love for what she took to calling “poetical science,” which linked her rebellious imagination to an enchantment with numbers. For many people, including her father, the rarefied sensibilities of the Romantic Era clashed with the technological excitement of the Industrial Revolution. Lord Byron was a Luddite. Seriously. In his maiden and only speech to the House of Lords, he defended the followers of Ned Ludd who were rampaging against mechanical weaving machines that were putting artisans out of work.

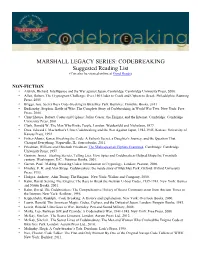

CODEBREAKING Suggested Reading List (Can Also Be Viewed Online at Good Reads)

MARSHALL LEGACY SERIES: CODEBREAKING
Suggested Reading List (Can also be viewed online at Good Reads)

NON-FICTION
• Aldrich, Richard. Intelligence and the War against Japan. Cambridge: Cambridge University Press, 2000.
• Allen, Robert. The Cryptogram Challenge: Over 150 Codes to Crack and Ciphers to Break. Philadelphia: Running Press, 2005.
• Briggs, Asa. Secret Days: Code-breaking in Bletchley Park. Barnsley: Frontline Books, 2011.
• Budiansky, Stephen. Battle of Wits: The Complete Story of Codebreaking in World War Two. New York: Free Press, 2000.
• Churchhouse, Robert. Codes and Ciphers: Julius Caesar, the Enigma, and the Internet. Cambridge: Cambridge University Press, 2001.
• Clark, Ronald W. The Man Who Broke Purple. London: Weidenfeld and Nicolson, 1977.
• Drea, Edward J. MacArthur's Ultra: Codebreaking and the War Against Japan, 1942-1945. Kansas: University of Kansas Press, 1992.
• Fisher-Alaniz, Karen. Breaking the Code: A Father's Secret, a Daughter's Journey, and the Question That Changed Everything. Naperville, IL: Sourcebooks, 2011.
• Friedman, William and Elizebeth Friedman. The Shakespearian Ciphers Examined. Cambridge: Cambridge University Press, 1957.
• Gannon, James. Stealing Secrets, Telling Lies: How Spies and Codebreakers Helped Shape the Twentieth Century. Washington, D.C.: Potomac Books, 2001.
• Garrett, Paul. Making, Breaking Codes: Introduction to Cryptology. London: Pearson, 2000.
• Hinsley, F. H. and Alan Stripp. Codebreakers: The Inside Story of Bletchley Park. Oxford: Oxford University Press, 1993.
• Hodges, Andrew. Alan Turing: The Enigma. New York: Walker and Company, 2000.
• Kahn, David. Seizing the Enigma: The Race to Break the German U-boat Codes, 1939-1943. New York: Barnes and Noble Books, 2001.
• Kahn, David. The Codebreakers: The Comprehensive History of Secret Communication from Ancient Times to the Internet.

Church's Thesis and the Conceptual Analysis of Computability

Church’s Thesis and the Conceptual Analysis of Computability
Michael Rescorla

Abstract: Church’s thesis asserts that a number-theoretic function is intuitively computable if and only if it is recursive. A related thesis asserts that Turing’s work yields a conceptual analysis of the intuitive notion of numerical computability. I endorse Church’s thesis, but I argue against the related thesis. I argue that purported conceptual analyses based upon Turing’s work involve a subtle but persistent circularity. Turing machines manipulate syntactic entities. To specify which number-theoretic function a Turing machine computes, we must correlate these syntactic entities with numbers. I argue that, in providing this correlation, we must demand that the correlation itself be computable. Otherwise, the Turing machine will compute uncomputable functions. But if we presuppose the intuitive notion of a computable relation between syntactic entities and numbers, then our analysis of computability is circular.¹

§1. Turing machines and number-theoretic functions

A Turing machine manipulates syntactic entities: strings consisting of strokes and blanks. I restrict attention to Turing machines that possess two key properties. First, the machine eventually halts when supplied with an input of finitely many adjacent strokes. Second, when the

¹ I am greatly indebted to helpful feedback from two anonymous referees from this journal, as well as from: C. Anthony Anderson, Adam Elga, Kevin Falvey, Warren Goldfarb, Richard Heck, Peter Koellner, Oystein Linnebo, Charles Parsons, Gualtiero Piccinini, and Stewart Shapiro. I received extremely helpful comments when I presented earlier versions of this paper at the UCLA Philosophy of Mathematics Workshop, especially from Joseph Almog, D.

ÉCLAT MATHEMATICS JOURNAL Lady Shri Ram College for Women

ÉCLAT MATHEMATICS JOURNAL
Lady Shri Ram College For Women
Volume X, 2018-2019

HYPATIA
This journal is dedicated to Hypatia, who was a Greek mathematician, astronomer, philosopher and the last great thinker of ancient Alexandria. The Alexandrian scholar authored numerous mathematical treatises, and has been credited with writing commentaries on Diophantus's Arithmetica, on Apollonius's Conics and on Ptolemy's astronomical work. Hypatia is an inspiration, not only as the first famous female mathematician but most importantly as a symbol of learning and science.

PREFACE
Derived from the French language, Éclat means brilliance. Since its inception, this journal has aimed at providing a platform for undergraduate students to showcase their understanding of diverse mathematical concepts that interest them. As the journal completes a decade this year, it continues to be instrumental in providing an opportunity for students to make valuable contributions to academic inquiry. The work contained in this journal is not original but contains the review research of students. Each of the nine papers included in this year’s edition has been carefully written and compiled to stimulate the thought process of its readers. The four categories discussed in the journal (History of Mathematics, Rigour in Mathematics, Interdisciplinary Aspects of Mathematics and Extension of Course Content) give a comprehensive idea about the evolution of the subject over the years. We would like to express our sincere thanks to the Faculty Advisors of the department, who have guided us at every step leading to the publication of this volume, and to all the authors who have contributed their articles for this volume.

5 Propositional Logic: Consistency and Completeness

5 Propositional Logic: Consistency and completeness

Reading: Metalogic Part II, 24, 15, 28-31

Contents
5.1 Soundness
5.2 Consistency
5.3 Completeness
5.3.1 An Axiomatization of Propositional Logic
5.3.2 Kalmar's Proof: Informal Exposition
5.3.3 Kalmar's Proof
5.4 Homework Exercises
5.4.1 Questions
5.4.2 Answers

5.1 Soundness

In this section, we establish the soundness of the system, i.e.,

Theorem 3 (Soundness). Every theorem is a tautology, i.e., if ⊢ A then ⊨ A.

Proof. The proof is by induction on the length of the proof of A. For the basis step, we show that each of the axioms is a tautology. For the induction step, we show that if A and A ⊃ B are tautologies, then B is a tautology.

Case 1 (PS1):

| A | B | B ⊃ A | A ⊃ (B ⊃ A) |
|---|---|-------|-------------|
| T | T | T | T |
| T | F | T | T |
| F | T | F | T |
| F | F | T | T |

Case 2 (PS2), writing X for A ⊃ (B ⊃ C), Y for A ⊃ B, and Z for A ⊃ C:

| A | B | C | B ⊃ C | X | Y | Z | Y ⊃ Z | X ⊃ (Y ⊃ Z) |
|---|---|---|-------|---|---|---|-------|-------------|
| T | T | T | T | T | T | T | T | T |
| T | T | F | F | F | T | F | F | T |
| T | F | T | T | T | F | T | T | T |
| T | F | F | T | T | F | F | T | T |
| F | T | T | T | T | T | T | T | T |
| F | T | F | F | T | T | T | T | T |
| F | F | T | T | T | T | T | T | T |
| F | F | F | T | T | T | T | T | T |

Case 3 (PS3):

| A | B | ∼B | ∼A | ∼B ⊃ ∼A | A ⊃ B | (∼B ⊃ ∼A) ⊃ (A ⊃ B) |
|---|---|----|----|---------|-------|----------------------|
| T | T | F | F | T | T | T |
| T | F | T | F | F | F | T |
| F | T | F | T | T | T | T |
| F | F | T | T | T | T | T |

Case 4 (MP). If A is a tautology, i.e., true for every assignment of truth values to the atomic letters, and if A ⊃ B is a tautology, then there is no assignment which makes A T and B F.
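
The truth-table checks in Cases 1 to 3 can be reproduced mechanically. The following is a minimal sketch rather than anything from the original notes: it treats A, B, C as atomic letters, encodes each axiom schema as a Python function, and enumerates every assignment. The names `implies`, `is_tautology`, `PS1`, `PS2`, `PS3`, and `MP` are illustrative.

```python
from itertools import product


def implies(p, q):
    """Material conditional: p ⊃ q is false only when p is true and q is false."""
    return (not p) or q


def is_tautology(formula, n_vars):
    """True if formula holds under every assignment to its n_vars letters."""
    return all(formula(*vals) for vals in product([True, False], repeat=n_vars))


def PS1(a, b):
    # A ⊃ (B ⊃ A)
    return implies(a, implies(b, a))


def PS2(a, b, c):
    # (A ⊃ (B ⊃ C)) ⊃ ((A ⊃ B) ⊃ (A ⊃ C))
    return implies(implies(a, implies(b, c)),
                   implies(implies(a, b), implies(a, c)))


def PS3(a, b):
    # (∼B ⊃ ∼A) ⊃ (A ⊃ B)
    return implies(implies(not b, not a), implies(a, b))


def MP(a, b):
    # Semantic counterpart of modus ponens: whenever A and A ⊃ B hold, so does B.
    return implies(a and implies(a, b), b)


for name, schema, n in [("PS1", PS1, 2), ("PS2", PS2, 3), ("PS3", PS3, 2), ("MP", MP, 2)]:
    print(name, is_tautology(schema, n))
```

This checks only instances with atomic letters in place of A, B, C. The same tables settle the general case, since the truth value of an axiom instance depends only on the truth values of the subformulas substituted for A, B, and C.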