Common Sense for Concurrency and Strong Paraconsistency Using Unstratified Inference and Reflection


Recommended publications


Dialetheists' Lies About the Liar

PRINCIPIA 22(1): 59–85 (2018). doi: 10.5007/1808-1711.2018v22n1p59. Published by NEL (Epistemology and Logic Research Group), Federal University of Santa Catarina (UFSC), Brazil. Dialetheists' Lies About the Liar. Jonas R. Becker Arenhart, Departamento de Filosofia, Universidade Federal de Santa Catarina, Brazil; Ederson Safra Melo, Departamento de Filosofia, Universidade Federal do Maranhão, Brazil. Abstract. Liar-like paradoxes are typically arguments that, by using very intuitive resources of natural language, end up in contradiction. Consistent solutions to those paradoxes usually have difficulties either because they restrict the expressive power of the language, or else because they fall prey to extended versions of the paradox. Dialetheists, like Graham Priest, propose that we should take the Liar at face value and accept the contradictory conclusion as true. A logical treatment of such contradictions is also put forward, with the Logic of Paradox (LP), which should account for the manifestations of the Liar. In this paper we shall argue that such a formal approach, as advanced by Priest, is unsatisfactory. In order to make contradictions acceptable, Priest has to distinguish between two kinds of contradictions, internal and external, corresponding, respectively, to the conclusions of the simple and of the extended Liar. Given that, we argue that while the natural interpretation of LP was intended to account for true and false sentences, dealing with internal contradictions, it lacks the resources to tame external contradictions. Also, the negation sign of LP is unable to represent internal contradictions adequately, precisely because of its allowance of sentences that may be true and false.

Chapter 2 Introduction to Classical Propositional

Chapter 2: Introduction to Classical Propositional Logic. 1 Motivation and History. The origins of classical propositional logic, or classical propositional calculus as it was, and still often is, called, go back to antiquity and are due to the Stoic school of philosophy (3rd century B.C.), whose most eminent representative was Chrysippus. But the real development of this calculus began only in the mid-19th century and was initiated by the research of the English mathematician G. Boole, who is sometimes regarded as the founder of mathematical logic. The classical propositional calculus was first formulated as a formal axiomatic system by the eminent German logician G. Frege in 1879. The assumptions underlying the formalization of classical propositional calculus are the following. Logical sentences: We deal only with sentences that can always be evaluated as true or false. Such sentences are called logical sentences or propositions. Hence the name propositional logic. A statement: 2 + 2 = 4 is a proposition, as we assume that it is a well-known and agreed-upon truth. A statement: 2 + 2 = 5 is also a proposition (false). A statement: I am pretty is modeled, if needed, as a logical sentence (proposition). We assume that it is false, or true. A statement: 2 + n = 5 is not a proposition; it might be true for some n, for example n = 3, false for other n, for example n = 2, and moreover, we don't know what n is. Sentences of this kind are called propositional functions. We model propositional functions within propositional logic by treating propositional functions as propositions.

Aristotle's Assertoric Syllogistic and Modern Relevance Logic

Aristotle’s assertoric syllogistic and modern relevance logic. Abstract: This paper sets out to evaluate the claim that Aristotle’s Assertoric Syllogistic is a relevance logic or shows significant similarities with it. I prepare the grounds for a meaningful comparison by extracting the notion of relevance employed in the most influential work on modern relevance logic, Anderson and Belnap’s Entailment. This notion is characterized by two conditions imposed on the concept of validity: first, that some meaning content is shared between the premises and the conclusion, and second, that the premises of a proof are actually used to derive the conclusion. Turning to Aristotle’s Prior Analytics, I argue that there is evidence that Aristotle’s Assertoric Syllogistic satisfies both conditions. Moreover, Aristotle at one point explicitly addresses the potential harmfulness of syllogisms with unused premises. Here, I argue that Aristotle’s analysis allows for a rejection of such syllogisms on formal grounds established in the foregoing parts of the Prior Analytics. In a final section I consider the view that Aristotle distinguished between validity on the one hand and syllogistic validity on the other. Following this line of reasoning, Aristotle’s logic might not be a relevance logic, since relevance is part of syllogistic validity and not, as modern relevance logic demands, of general validity. I argue that the reasons to reject this view are more compelling than the reasons to accept it and that we can, cautiously, uphold the result that Aristotle’s logic is a relevance logic. Keywords: Aristotle, Syllogism, Relevance Logic, History of Logic, Logic, Relevance 1.

The Metamathematics of Putnam's Model-Theoretic Arguments

The Metamathematics of Putnam's Model-Theoretic Arguments. Tim Button. Abstract. Putnam famously attempted to use model theory to draw metaphysical conclusions. His Skolemisation argument sought to show metaphysical realists that their favourite theories have countable models. His permutation argument sought to show that they have permuted models. His constructivisation argument sought to show that any empirical evidence is compatible with the Axiom of Constructibility. Here, I examine the metamathematics of all three model-theoretic arguments, and I argue against Bays (2001, 2007) that Putnam is largely immune to metamathematical challenges. Copyright notice. This paper is due to appear in Erkenntnis. This is a pre-print, and may be subject to minor changes. The authoritative version should be obtained from Erkenntnis, once it has been published. Hilary Putnam famously attempted to use model theory to draw metaphysical conclusions. Specifically, he attacked metaphysical realism, a position characterised by the following credo: [T]he world consists of a fixed totality of mind-independent objects. (Putnam 1981, p. 49; cf. 1978, p. 125). Truth involves some sort of correspondence relation between words or thought-signs and external things and sets of things. (1981, p. 49; cf. 1989, p. 214) [W]hat is epistemically most justifiable to believe may nonetheless be false. (1980, p. 473; cf. 1978, p. 125) To sum up these claims, Putnam characterised metaphysical realism as an "externalist perspective" whose "favorite point of view is a God's Eye point of view" (1981, p. 49). Putnam sought to show that this externalist perspective is deeply untenable. To this end, he treated correspondence in terms of model-theoretic satisfaction.

Recent Work in Relevant Logic

Recent Work in Relevant Logic. Mark Jago. Forthcoming in Analysis. Draft of April 2013. 1 Introduction. Relevant logics are a group of logics which attempt to block irrelevant conclusions being drawn from a set of premises. The following inferences are all valid in classical logic, where A and B are any sentences whatsoever: from A, one may infer B → A, B → B and B ∨ ¬B; from ¬A, one may infer A → B; and from A ∧ ¬A, one may infer B. But if A and B are utterly irrelevant to one another, many feel reluctant to call these inferences acceptable. Similarly for the validity of the corresponding material implications, often called ‘paradoxes’ of material implication. Relevant logic can be seen as the attempt to avoid these ‘paradoxes’. Relevant logic has a long history. Key early works include Anderson and Belnap 1962; 1963; 1975, and many important results appear in Routley et al. 1982. Those looking for a short introduction to relevant logics might look at Mares 2012 or Priest 2008. For a more detailed but still accessible introduction, there’s Dunn and Restall 2002; Mares 2004b; Priest 2008 and Read 1988. The aim of this article is to survey some of the most important work in the field in the past ten years, in a way that I hope will be of interest to a philosophical audience. Much of this recent work has been of a formal nature. I will try to outline these technical developments, and convey something of their importance, with the minimum of technical jargon. A good deal of this recent technical work concerns how quantifiers should work in relevant logic.
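The classical validity of the inferences listed in this excerpt can be checked mechanically with a two-variable truth table. What follows is a minimal Python sketch and not something taken from Jago's article; the helper names `implies` and `classically_valid` and the ASCII labels are illustrative assumptions.

```python
from itertools import product

def implies(x, y):
    # Material implication: false only when x is true and y is false.
    return (not x) or y

def classically_valid(premise, conclusion):
    # Valid iff no valuation of (A, B) makes the premise true
    # and the conclusion false.
    return all(
        conclusion(a, b)
        for a, b in product([False, True], repeat=2)
        if premise(a, b)
    )

# Each entry pairs a premise with a conclusion, both as functions of (A, B).
inferences = {
    "A |- B -> A":  (lambda a, b: a,           lambda a, b: implies(b, a)),
    "A |- B -> B":  (lambda a, b: a,           lambda a, b: implies(b, b)),
    "A |- B v ~B":  (lambda a, b: a,           lambda a, b: b or not b),
    "~A |- A -> B": (lambda a, b: not a,       lambda a, b: implies(a, b)),
    "A & ~A |- B":  (lambda a, b: a and not a, lambda a, b: b),
}

results = {name: classically_valid(p, c) for name, (p, c) in inferences.items()}
for name, ok in results.items():
    print(f"{name}: {'valid' if ok else 'invalid'}")
```

Under this check every listed inference comes out valid. The last one is valid only vacuously, because no valuation makes A ∧ ¬A true, and B plays no role in the premise at all. That kind of irrelevance is what the excerpt says relevant logics try to block.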

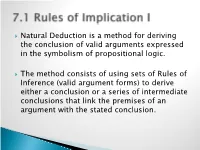

7.1 Rules of Implication I

Natural Deduction is a method for deriving the conclusion of valid arguments expressed in the symbolism of propositional logic. The method consists of using sets of Rules of Inference (valid argument forms) to derive either a conclusion or a series of intermediate conclusions that link the premises of an argument with the stated conclusion.
The First Four Rules of Inference:
◦ Modus Ponens (MP): p ⊃ q, p ∴ q
◦ Modus Tollens (MT): p ⊃ q, ~q ∴ ~p
◦ Pure Hypothetical Syllogism (HS): p ⊃ q, q ⊃ r ∴ p ⊃ r
◦ Disjunctive Syllogism (DS): p v q, ~p ∴ q
Common strategies for constructing a proof involving the first four rules:
◦ Always begin by attempting to find the conclusion in the premises. If the conclusion is not present in its entirety in the premises, look at the main operator of the conclusion. This will provide a clue as to how the conclusion should be derived.
◦ If the conclusion contains a letter that appears in the consequent of a conditional statement in the premises, consider obtaining that letter via modus ponens.
◦ If the conclusion contains a negated letter and that letter appears in the antecedent of a conditional statement in the premises, consider obtaining the negated letter via modus tollens.
◦ If the conclusion is a conditional statement, consider obtaining it via pure hypothetical syllogism.
◦ If the conclusion contains a letter that appears in a disjunctive statement in the premises, consider obtaining that letter via disjunctive syllogism.
Four Additional Rules of Inference:
◦ Constructive Dilemma (CD): (p ⊃ q) • (r ⊃ s), p v r ∴ q v s
◦ Simplification (Simp): p • q ∴ p
◦ Conjunction (Conj): p, q ∴ p • q
◦ Addition (Add): p ∴ p v q
Common Misapplications
Common strategies involving the additional rules of inference:
◦ If the conclusion contains a letter that appears in a conjunctive statement in the premises, consider obtaining that letter via simplification.
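Each of the eight rules named in this excerpt is a valid argument form, which can be confirmed by brute force over all truth assignments to p, q, r and s. The sketch below is illustrative only: the premise and conclusion patterns are the standard textbook forms of these rules, and the names `imp`, `RULES` and `is_valid` are assumptions introduced here. The final check, affirming the consequent, does not appear in the excerpt and is added only as a contrasting invalid form.

```python
from itertools import product

def imp(x, y):
    # Material conditional (the horseshoe): false only for true antecedent, false consequent.
    return (not x) or y

# name -> (list of premises, conclusion); every function takes (p, q, r, s).
RULES = {
    "MP":   ([lambda p, q, r, s: imp(p, q), lambda p, q, r, s: p],
             lambda p, q, r, s: q),
    "MT":   ([lambda p, q, r, s: imp(p, q), lambda p, q, r, s: not q],
             lambda p, q, r, s: not p),
    "HS":   ([lambda p, q, r, s: imp(p, q), lambda p, q, r, s: imp(q, r)],
             lambda p, q, r, s: imp(p, r)),
    "DS":   ([lambda p, q, r, s: p or q, lambda p, q, r, s: not p],
             lambda p, q, r, s: q),
    "CD":   ([lambda p, q, r, s: imp(p, q) and imp(r, s), lambda p, q, r, s: p or r],
             lambda p, q, r, s: q or s),
    "Simp": ([lambda p, q, r, s: p and q],
             lambda p, q, r, s: p),
    "Conj": ([lambda p, q, r, s: p, lambda p, q, r, s: q],
             lambda p, q, r, s: p and q),
    "Add":  ([lambda p, q, r, s: p],
             lambda p, q, r, s: p or q),
}

def is_valid(premises, conclusion):
    # An argument form is valid iff no assignment makes all premises
    # true while the conclusion is false.
    for vals in product([False, True], repeat=4):
        if all(f(*vals) for f in premises) and not conclusion(*vals):
            return False
    return True

rule_results = {name: is_valid(prem, concl) for name, (prem, concl) in RULES.items()}

# Contrast case (not from the excerpt): affirming the consequent, p ⊃ q, q ∴ p.
affirming_consequent = is_valid(
    [lambda p, q, r, s: imp(p, q), lambda p, q, r, s: q],
    lambda p, q, r, s: p,
)

for name, ok in rule_results.items():
    print(f"{name}: {'valid' if ok else 'invalid'}")
print(f"Affirming the consequent: {'valid' if affirming_consequent else 'invalid'}")
```

A truth-table check only confirms that each form is valid. It does not replace the proof strategies in the excerpt, which concern how to chain the rules to reach a stated conclusion.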

Two Sources of Explosion

Two sources of explosion. Eric Kao, Computer Science Department, Stanford University, Stanford, CA 94305, United States of America. Abstract. In pursuit of enhancing the deductive power of Direct Logic while avoiding explosiveness, Hewitt has proposed including the law of excluded middle and proof by self-refutation. In this paper, I show that the inclusion of either one of these inference patterns causes paraconsistent logics such as Hewitt's Direct Logic and Besnard and Hunter's quasi-classical logic to become explosive. 1 Introduction. A central goal of a paraconsistent logic is to avoid explosiveness: the inference of any arbitrary sentence β from an inconsistent premise set {p, ¬p} (ex falso quodlibet). Hewitt's [2] Direct Logic and Besnard and Hunter's quasi-classical logic (QC) [1, 5, 4] both seek to preserve the deductive power of classical logic "as much as possible" while still avoiding explosiveness. Their work fits into the ongoing research program of identifying some "reasonable" and "maximal" subsets of classically valid rules and axioms that do not lead to explosiveness. To this end, it is natural to consider which classically sound deductive rules and axioms one can introduce into a paraconsistent logic without causing explosiveness. Hewitt [3] proposed including the law of excluded middle and the proof by self-refutation rule (a very special case of proof by contradiction) but did not show whether the resulting logic would be explosive. In this paper, I show that for quasi-classical logic and its variant, the addition of either the law of excluded middle or the proof by self-refutation rule in fact leads to explosiveness.
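For orientation, the textbook classical route to explosion can be written as a four-step derivation. This is the standard argument that chains addition with disjunctive syllogism. It is not Kao's proof, which the excerpt does not reproduce; the step numbering and justifications are supplied here for illustration.

```latex
\begin{align*}
1.\;& p              && \text{premise} \\
2.\;& \neg p         && \text{premise} \\
3.\;& p \lor \beta   && \text{from 1, by addition} \\
4.\;& \beta          && \text{from 2 and 3, by disjunctive syllogism}
\end{align*}
```

Since β is arbitrary, a logic that accepts both rules and lets them be chained freely is explosive. A paraconsistent logic therefore has to restrict at least one of the two rules or the way they compose.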

Edsger W. Dijkstra: a Commemoration

Edsger W. Dijkstra: a Commemoration. Krzysztof R. Apt (CWI, Amsterdam, The Netherlands, and MIMUW, University of Warsaw, Poland) and Tony Hoare (Department of Computer Science and Technology, University of Cambridge, and Microsoft Research Ltd, Cambridge, UK), editors. arXiv:2104.03392v1 [cs.GL], 7 Apr 2021. Abstract. This article is a multiauthored portrait of Edsger Wybe Dijkstra that consists of testimonials written by several friends, colleagues, and students of his. It provides unique insights into his personality, working style and habits, and his influence on other computer scientists, as a researcher, teacher, and mentor. Contents: Preface; Tony Hoare; Donald Knuth; Christian Lengauer; K. Mani Chandy; Eric C.R. Hehner; Mark Scheevel; Krzysztof R. Apt; Niklaus Wirth; Lex Bijlsma; Manfred Broy; David Gries; Ted Herman; Alain J. Martin; J Strother Moore; Vladimir Lifschitz; Wim H. Hesselink; Hamilton Richards; Ken Calvert; David Naumann; David Turner; J.R. Rao; Jayadev Misra; Rajeev Joshi; Maarten van Emden; Two Tuesday Afternoon Clubs. Preface. Edsger Dijkstra was perhaps the best known, and certainly the most discussed, computer scientist of the seventies and eighties. We both knew Dijkstra (though each of us in different ways) and we both were aware that his influence on computer science was not limited to his pioneering software projects and research articles. He interacted with his colleagues by way of numerous discussions, extensive letter correspondence, and hundreds of so-called EWD reports that he used to send to a select group of researchers.

Theorem Proving in Classical Logic

MEng Individual Project, Imperial College London, Department of Computing. Theorem Proving in Classical Logic. Author: David Davies. Supervisor: Dr. Steffen van Bakel. Second Marker: Dr. Nicolas Wu. June 16, 2021. Abstract. It is well known that functional programming and logic are deeply intertwined. This has led to many systems capable of expressing both propositional and first order logic, that also operate as well-typed programs. What currently ties popular theorem provers together is their basis in intuitionistic logic, where one cannot prove the law of the excluded middle, ‘A ∨ ¬A’, which states that any proposition is either true or false. In classical logic this notion is provable, and the corresponding programs turn out to be those with control operators. In this report, we explore and expand upon the research about calculi that correspond with classical logic, and the problems that occur for those relating to first order logic. To see how these calculi behave in practice, we develop and implement functional languages for propositional and first order logic, expressing classical calculi in the setting of a theorem prover, much like Agda and Coq. In the first order language, users are able to define inductive data and record types; importantly, they are able to write computable programs that have a correspondence with classical propositions. Acknowledgements. I would like to thank Steffen van Bakel, my supervisor, for his support throughout this project and helping find a topic of study based on my interests, for which I am incredibly grateful. His insight and advice have been invaluable. I would also like to thank my second marker, Nicolas Wu, for introducing me to the world of dependent types, and suggesting useful resources that have aided me greatly during this report.

Starting the Dismantling of Classical Mathematics

Australasian Journal of Logic. Starting the Dismantling of Classical Mathematics. Ross T. Brady, La Trobe University, Melbourne, Australia. Dedicated to Richard Routley/Sylvan, on the occasion of the 20th anniversary of his untimely death. 1 Introduction. Richard Sylvan (né Routley) has been the greatest influence on my career in logic. We met at the University of New England in 1966, when I was a Master's student and he was one of my lecturers in the M.A. course in Formal Logic. He was an inspirational leader, who thought his own thoughts and was not afraid to speak his mind. I hold him in the highest regard. He was very critical of the standard Anglo-American way of doing logic, the so-called classical logic, which can be seen in everything he wrote. One of his many critical comments was: "Gödel's (First) Theorem would not be provable using a decent logic". This contribution, written to honour him and his works, will examine this point among some others. Hilbert referred to non-constructive set theory based on classical logic as "Cantor's paradise". In this historical setting, the constructive logic and mathematics concerned was that of intuitionism. (The Preface of Mendelson [2010] refers to this.) We wish to start the process of dismantling this classical paradise, and more generally classical mathematics. Our starting point will be the various diagonal-style arguments, where we examine whether the Law of Excluded Middle (LEM) is implicitly used in carrying them out. This will include the proof of Gödel's First Theorem, and also the proof of the undecidability of Turing's Halting Problem.

Relevant and Substructural Logics

Relevant and Substructural Logics. Greg Restall, Philosophy Department, Macquarie University. June 23, 2001. http://www.phil.mq.edu.au/staff/grestall/ Abstract: This is a history of relevant and substructural logics, written for the Handbook of the History and Philosophy of Logic, edited by Dov Gabbay and John Woods. 1 Introduction. Logics tend to be viewed in one of two ways: with an eye to proofs, or with an eye to models. Relevant and substructural logics are no different: you can focus on notions of proof, inference rules and structural features of deduction in these logics, or you can focus on interpretations of the language in other structures. This essay is structured around the bifurcation between proofs and models: The first section discusses Proof Theory of relevant and substructural logics, and the second covers the Model Theory of these logics. This order is a natural one for a history of relevant and substructural logics, because much of the initial work, especially in the Anderson–Belnap tradition of relevant logics, started by developing proof theory. The model theory of relevant logic came some time later. As we will see, Dunn's algebraic models [76, 77], Urquhart's operational semantics [267, 268] and Routley and Meyer's relational semantics [239, 240, 241] arrived decades after the initial burst of activity from Alan Anderson and Nuel Belnap. The same goes for work on the Lambek calculus: although inspired by a very particular application in linguistic typing, it was developed first proof-theoretically, and only later did model theory come to the fore.

Saving the Square of Opposition

History and Philosophy of Logic, 2021. https://doi.org/10.1080/01445340.2020.1865782 Saving the Square of Opposition. Pieter A. M. Seuren, Max Planck Institute for Psycholinguistics, Nijmegen, the Netherlands. Received 19 April 2020. Accepted 15 December 2020. Contrary to received opinion, the Aristotelian Square of Opposition (square) is logically sound, differing from standard modern predicate logic (SMPL) only in that it restricts the universe U of cognitively constructible situations by banning null predicates, making it less unnatural than SMPL. U-restriction strengthens the logic without making it unsound. It also invites a cognitive approach to logic. Humans are endowed with a cognitive predicate logic (CPL), which checks the process of cognitive modelling (world construal) for consistency. The square is considered a first approximation to CPL, with a cognitive set-theoretic semantics. Not being cognitively real, the null set Ø is eliminated from the semantics of CPL. Still rudimentary in Aristotle’s On Interpretation (Int), the square was implicitly completed in his Prior Analytics (PrAn), thereby introducing U-restriction. Abelard’s reconstruction of the logic of Int is logically and historically correct; the LOCA (Leaking O-Corner Analysis) interpretation of the square, defended by some modern logicians, is logically faulty and historically untenable. Generally, U-restriction, not redefining the universal quantifier, as in Abelard and LOCA, is the correct path to a reconstruction of CPL. Valuation Space modelling is used to compute