Typhoon Track Prediction Using Satellite Images in a Generative Adversarial Network
Mario Rüttgers1, Sangseung Lee1, and Donghyun You1,*

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Typhoon Neoguri Disaster Risk Reduction Situation Report1 DRR Sitrep 2014‐001 ‐ Updated July 8, 2014, 10:00 CET

Summary Report. Ongoing typhoon situation: The storm had lost strength early Tuesday July 8, going from the equivalent of a Category 5 hurricane to a Category 3 on the Saffir‐Simpson Hurricane Wind Scale, which means devastating damage is expected to occur, with major damage to well‐built framed homes, snapped or uprooted trees and power outages. It is approaching Okinawa, Japan, and is moving northwest towards South Korea and the Philippines, bringing strong winds, flooding rainfall and inundating storm surge. Typhoon Neoguri is a once‐in‐a‐decade storm and Japanese authorities have extended their highest storm alert to Okinawa's main island. The Global Assessment Report (GAR) 2013 ranked Japan as first among countries in the world for both annual and maximum potential losses due to cyclones. It is calculated that Japan loses on average up to $45.9 Billion due to cyclonic winds every year and that its probable maximum loss is $547 Billion.2 What are the most devastating cyclones to hit Okinawa in recent memory? There have been 12 damaging cyclones to hit Okinawa since 1945. With sustained winds of 81.6 knots (151 kph), Typhoon “Winnie” caused damage of $5.8 million in August 1997. Typhoon "Bart", which hit Okinawa in October 1999 with sustained winds of 126 knots (233 kph), caused damage of $5.7 million. The most damaging cyclone to hit Japan was Super Typhoon Nida (reaching a peak intensity of 260 kph), which struck Japan in 2004, killing 287, injuring 1,483, affecting 329,556 people, and causing damage amounting to $15 Billion.

Chapter 7. Building a Safe and Comfortable Society

Chapter 7: Building a Safe and Comfortable Society. Section 1: Realizing a Universal Society. 1 Realizing Accessibility through a Universal Design Concept. The “Act on Promotion of Smooth Transportation, etc. of Elderly Persons, Disabled Persons, etc.” embodies the universal design concept of “freedom and convenience for anywhere and anyone”, making it mandatory to comply with “Accessibility Standards” when newly establishing various facilities (passenger facilities, various vehicles, roads, off-street parking facilities, city parks, buildings, etc.), mandatory best effort for existing facilities as well as defining a development target for the end of FY2020 under the “Basic Policy on Accessibility” to promote accessibility. Also, in accordance with the local accessibility plan created by municipalities, focused and integrated promotion of accessibility is carried out in priority development district; to increase “caring for accessibility”, by deepening the national public’s understanding and seek cooperation for the promotion of accessibility, “accessibility workshops” are hosted in which you learn to assist as well as virtually experience being elderly, disabled, etc.; these efforts serve to accelerate accessibility measures (sustained development in stages). (1) Accessibility of Public Transportation. [Figure II-7-1-1: Current Accessibility of Public Transportation (as of March 31, 2014)] In accordance with the “Act on Promotion of Smooth Transportation, etc. of Elderly Persons, Disabled …

Summary of 2014 NW Pacific Typhoon Season and Verification of Authors’ Seasonal Forecasts

Issued: 27th January 2015 by Dr Adam Lea and Professor Mark Saunders, Dept. of Space and Climate Physics, UCL (University College London), UK. Summary: The 2014 NW Pacific typhoon season was characterised by activity slightly below the long-term (1965-2013) norm. The TSR forecasts over-predicted activity but mostly to within the forecast error. The Tropical Storm Risk (TSR) consortium presents a validation of their seasonal forecasts for the NW Pacific basin ACE index, numbers of intense typhoons, numbers of typhoons and numbers of tropical storms in 2014. These forecasts were issued on the 6th May, 3rd July and the 5th August 2014. The 2014 NW Pacific typhoon season ran from 1st January to 31st December.
Features of the 2014 NW Pacific Season
• Featured 23 tropical storms, 12 typhoons, 8 intense typhoons and a total ACE index of 273. These numbers were respectively 12%, 25%, 0% and 7% below their corresponding long-term norms. Seven out of the last eight years have now had a NW Pacific ACE index below the 1965-2013 climate norm value of 295.
• The peak months of August and September were unusually quiet, with only one typhoon forming within the basin (Genevieve formed in the NE Pacific and crossed into the NW Pacific basin as a hurricane). Since 1965 no NW Pacific typhoon season has seen fewer than two typhoons develop within the NW Pacific basin during August and September. This lack of activity in 2014 was in part caused by an unusually strong and persistent suppressing phase of the Madden-Julian Oscillation.

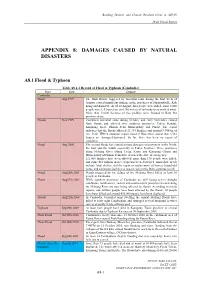

Appendix 8: Damages Caused by Natural Disasters

Building Disaster and Climate Resilient Cities in ASEAN, Draft Final Report. APPENDIX 8: DAMAGES CAUSED BY NATURAL DISASTERS. A8.1 Flood & Typhoon

Table A8.1.1 Record of Flood & Typhoon (Cambodia)

| Place | Date | Damage |
|---|---|---|
| Cambodia | Flood, Aug 1999 | The flash floods, triggered by torrential rains during the first week of August, caused significant damage in the provinces of Sihanoukville, Koh Kong and Kam Pot. As of 10 August, four people were killed, some 8,000 people were left homeless, and 200 meters of railroads were washed away. More than 12,000 hectares of rice paddies were flooded in Kam Pot province alone. |
| | Floods, Nov 1999 | Continued torrential rains during October and early November caused flash floods and affected five southern provinces: Takeo, Kandal, Kampong Speu, Phnom Penh Municipality and Pursat. The report indicates that the floods affected 21,334 families and around 9,900 ha of rice field. IFRC's situation report dated 9 November stated that 3,561 houses are damaged/destroyed. So far, there has been no report of casualties. |
| | Flood, Aug 2000 | The second flood has caused serious damage to provinces in the North, the East and the South, especially in Takeo Province. Three provinces along Mekong River (Stung Treng, Kratie and Kompong Cham) and Municipality of Phnom Penh have declared a state of emergency. 121,000 families have been affected, more than 170 people were killed, and some $10 million in rice crops has been destroyed. Immediate needs include food, shelter, and the repair or replacement of homes, household items, and sanitation facilities as water levels in the Delta continue to fall. |

Report on UN ESCAP / WMO Typhoon Committee Members Disaster Management System

UNITED NATIONS Economic and Social Commission for Asia and the Pacific, January 2009. Report on UN ESCAP/WMO Typhoon Committee Members Disaster Management System. By National Institute for Disaster Prevention (NIDP). January 2009, 154 pages. Authors: Dr. Waonho Yi, Dr. Tae Sung Cheong, Mr. Kyeonghyeok Jin, Ms. Genevieve C. Miller. WMO/TD-No. 1476. World Meteorological Organization, 2009. ISBN 978-89-90564-89-4 93530. The right of publication in print, electronic and any other form and in any language is reserved by WMO. Short extracts from WMO publications may be reproduced without authorization, provided that the complete source is clearly indicated. Editorial correspondence and requests to publish, reproduce or translate this publication in part or in whole should be addressed to: Chairperson, Publications Board, World Meteorological Organization (WMO), 7 bis, avenue de la Paix, P.O. Box No. 2300, CH-1211 Geneva 2, Switzerland. Tel.: +41 (0) 22 730 84 03; Fax: +41 (0) 22 730 80 40; E-mail: [email protected]. NOTE: The designations employed in WMO publications and the presentation of material in this publication do not imply the expression of any opinion whatsoever on the part of the Secretariat of WMO concerning the legal status of any country, territory, city or area, or of its authorities, or concerning the delimitation of its frontiers or boundaries.

Minnesota WeatherTalk Newsletter for Friday, January 3, 2014

To: MPR's Morning Edition
From: Mark Seeley, Univ. of Minnesota, Dept of Soil, Water, and Climate
Subject: Minnesota WeatherTalk Newsletter for Friday, January 3, 2014
HEADLINES
- December 2013 climate was near historic for northern communities
- Cold start to 2014
- Weekly Weather potpourri
- MPR listener questions
- Almanac for January 3rd
- Past weather
- Outlook
Topic: December 2013 near historic for far north
In assessing the climate for December 2013 it should be said that from the standpoint of cold temperatures the month was quite historic for many northern Minnesota communities, especially due to the Arctic cold that prevailed over the last few days of the month. Minnesota reported the coldest temperature in the 48 contiguous states thirteen times during the month, the highest frequency among all 48 states. Many northern observers saw overnight temperatures drop below -30 degrees F on several occasions. The mean monthly temperature for December from several communities ranked among the coldest Decembers ever. A sample listing includes:
-4.1 F at International Falls, 2nd coldest all-time
4.6 F at Duluth, 8th coldest all-time
0.1 F at Crookston, 3rd coldest all-time
-3.1 F at Roseau, 3rd coldest all-time
0.3 F at Park Rapids, 3rd coldest all-time
-4.4 F at Embarrass, 2nd coldest all-time
-4.1 F at Baudette, coldest all-time
-3.7 F at Warroad, coldest all-time
-2.9 F at Babbitt, coldest all-time
-2.8 F at Gunflint Lake, coldest all-time
In addition, some communities reported an exceptionally snowy month of December.

Economic Analysis of Disaster Management Investment Effectiveness in Korea

Sustainability (Article). Bo-Young Heo 1 and Won-Ho Heo 2,*. 1 National Disaster Management Research Institute, Ulsan 44538, Korea; [email protected]. 2 School of Civil and Environmental Engineering, Yonsei University, 50 Yonsei-ro Seodaemun-gu, Seoul 03722, Korea. * Correspondence: [email protected]; Tel.: +82-52-928-8112. Received: 12 March 2019; Accepted: 21 May 2019; Published: 28 May 2019. Abstract: Governments have been investing in extensive operations to minimize economic losses and casualties from natural disasters such as floods and storms. A suitable verification process is required to guarantee maximum effectiveness and efficiency of investments while ensuring sustained funding. Active investment can be expected by verifying the effectiveness of disaster prevention spending. However, the results of the budget invested in disaster-safety-related projects are not immediate but evident only over a period of time. Additionally, because of the nature of public projects, their effects should be verified in terms of the state or society overall, not from an individualistic perspective. In this study, an economic analysis of the short- and long-term effects of investment in a disaster-safety-related project was performed: the damage reduction before and after project implementation was analyzed to evaluate the short-term effects, and a cost–benefit analysis was conducted to assess the long-term effects. The results show that disaster prevention projects reduce damages over both the short and long term. Therefore, investing in preventive projects to cope with disasters effectively is important to maximize the return on investment.

The Change in Rainfall from Tropical Cyclones Due to Orographic Effect of the Sierra Madre Mountain Range in Luzon, Philippines

Philippine Journal of Science 145 (4): 313-326, December 2016. ISSN 0031 - 7683. Date Received: ?? Feb 20??. Bernard Alan B. Racoma1,2*, Carlos Primo C. David1, Irene A. Crisologo1, and Gerry Bagtasa3. 1National Institute of Geological Sciences, College of Science, University of the Philippines, Diliman, Quezon City, Philippines. 2Nationwide Operational Assessment of Hazards, University of the Philippines, Diliman, Quezon City, Philippines. 3Institute of Environmental Science and Meteorology, College of Science, University of the Philippines, Diliman, Quezon City, Philippines. This paper discusses the Sierra Madre Mountain Range of the Philippines and its associated influence on the intensity and distribution of rainfall during tropical cyclones. Based on Weather Research and Forecasting model simulations, a shift in rainfall was observed in different portions of the country, due to the reduction of the topography of the mountain. Besides increasing the rainfall along the mountain range, a shift in precipitation was observed during Tropical Storm Ondoy, Typhoon Labuyo, and Tropical Storm Mario. It was also observed that the presence of the Sierra Madre Mountain Range slows down the movement of tropical cyclones, thereby allowing more time for precipitation to form over the country. Wind profiles also suggest that the windward and leeward sides of mountain ranges during tropical cyclones change depending on the storm path. It has been suggested that in predicting the distribution of rainfall, the direction of movement of a tropical cyclone as well as its adjacent areas be taken into great consideration.

3rd Quarter 2014

Climate Impacts and Outlook: Hawaii and U.S. Pacific Islands Region, 3rd Quarter 2014.
Significant Events and Impacts for 2nd Quarter 2014: The region is still under an El Niño Watch. Periods of heavy rain fell in Hawaii, raising streams and flooding lowlands. Above normal rainfall fell over much of Guam, Palau, the Federated States of Micronesia, and the Marshall Islands. Guam was affected by three tropical storms and record rains in July. Sea-levels continued to fall across Guam, the Federated States of Micronesia, and the Republic of the Marshall Islands – supportive of the ongoing transition to El Niño state. Heavy rains fell in late July in American Samoa. Shading indicates each Island’s Exclusive Economic Zone (EEZ).
Regional Climate Overview for 2nd Quarter 2014:
[Figure: Sea-Surface Temperature Anomaly Map, valid July 30, 2014. Source: http://www.cpc.ncep.noaa.gov]
[Figure: July 2014 precipitation anomaly. Source: http://iridl.ldeo.columbia.edu/]
[Figure: Sea-Surface Height Anomaly, valid Aug 1, 2014. Source: http://cpc.ncep.noaa.gov/]
The region remains under an El Niño Watch and weather conditions were more in-line with El Niño during the quarter (e.g., increasing sea-surface temperatures, falling sea level, and wet conditions across Micronesia). However, as of August 4th, the Niño 3.4 region anomaly was -0.1°C, which corresponds to ENSO neutral conditions. Sea-surface temperatures were generally near normal, with the warmest anomalies exceeding 1°C near CNMI. The monthly mean sea level in the 2nd quarter continued to show falling levels in most of the USAPI stations; however, Guam, Pago Pago, and Honolulu remain slightly above normal.

Philippines: 106 Dead* China

Creation date: 20 Feb 2015
Philippines, China: Typhoon RAMMASUN, Flood and landslide
Country: Philippines, China
District: Philippines: Northern and central parts of the country; China: Southern part
Duration: 15 Jul 2014-
Philippines: 106 dead*, 5 missing*. China: 62 dead*, 21 missing*.
Outline: Typhoon Rammasun, which made landfall on the eastern part of the Philippines on 15 Jul, moved across Luzon Island and made final landfall in southern China. Rammasun was the most powerful typhoon to hit southern China in 40 years. 106 people in the Philippines and 62 in China were killed.
*Philippines: Reported on 16 Sep 2014. *China: Reported on 27 Jul 2014.
Photo 1: A man gathering bamboos that drifted to the bridge due to the Typhoon Rammasun, in Batangas in the southwest of Manila (17 Jul 2014). Source: http://www.afpbb.com/articles/-/3020815
Copyright © 1956 Infrastructure Development Institute – Japan. All Rights Reserved. The reproduction or republication of this material is strictly prohibited without the prior written permission of IDI. 一般社団法人 国際建設技術協会 Infrastructure Development Institute – Japan
Photo 2: Residents heading to an evacuation shelter in the strong wind and heavy rain caused by the typhoon in Manila (16 Jul 2014). Source: http://www.afpbb.com/articles/-/3020815?pid=14054270
Photo 3: A resident climbs on a bridge destroyed during the onslaught of Typhoon Rammasun (locally named Glenda) in Batangas city south of Manila, July 17, 2014. Source: http://www.reuters.com/article/2014/07/17/us-philippines-typhoon-idUSKBN0FM01320140717
Photo 4: A person taking photos of storm-swept sea in Hainan province (18 Jul 2014). Source: http://www.afpbb.com/articles/-/3021050?pid=14066126

Initializing the WRF Model with Tropical Cyclone Real-Time Reports Using the Ensemble

Initializing the WRF Model with Tropical Cyclone Real-Time Reports using the Ensemble Kalman Filter Algorithm. Tien Duc Du(1), Thanh Ngo-Duc(2), and Chanh Kieu(3)*. (1)National Center for Hydro-Meteorological Forecasting, 8 Phao Dai Lang, Hanoi, Vietnam. (2)Department of Space and Aeronautics, University of Science and Technology of Hanoi, Vietnam. (3)Department of Earth and Atmospheric Sciences, Indiana University, Bloomington IN 47405, USA. Revised: 18 April 2017. Submitted to Pure and Applied Geophysical Science. Abbreviated title: Tropical Cyclone Ensemble Forecast. Keywords: Tropical cyclones, ensemble Kalman filter, the WRF model, tropical cyclone vital, ensemble forecasting. *Corresponding author: Chanh Kieu, Atmospheric Program, GY428A Geological Building, Department of Earth and Atmospheric Sciences, Indiana University, Bloomington, IN 47405. Tel: 812-856-5704. Email: [email protected]. Abstract: This study presents an approach to assimilate tropical cyclone (TC) real-time reports and the University of Wisconsin-Cooperative Institute for Meteorological Satellite Studies (CIMSS) Atmospheric Motion Vectors (AMV) data into the Weather Research and Forecasting (WRF) model for TC forecast applications. Unlike current methods in which TC real-time reports are used to either generate a bogus vortex or spin-up a model initial vortex, the proposed approach ingests the TC real-time reports through blending a dynamically consistent synthetic vortex structure with the CIMSS-AMV data. The blended dataset is then assimilated into the WRF initial condition, using the local ensemble transform Kalman filter (LETKF) algorithm. Retrospective experiments for a number of TC cases in the north Western Pacific basin during 2013-2014 demonstrate that this approach could effectively both increase the TC circulation and enhance the large-scale environment that the TCs are embedded in.

Center for Disaster Management and Risk Reduction Technology

CEDIM Forensic Disaster Analysis Group (FDA). TYPHOON 09W "RAMMASUN" – Short Summary. 18 July 2014 – Report No. 1 – 12:00 GMT - Update: 30 July 2014. Authors: Bernhard Mühr, James Daniell, Tina Bessel, Susan Brink, Michael Kunz

SUMMARY

| Official Disaster Name | Date | Landfall UTC | Local | Duration (PHL) |
|---|---|---|---|---|
| Rammasun (Glenda, Philippines) | 15/16-07 | 07 UTC | +8 | 12 hours |
| Rammasun (China, Vietnam) | 18/19/20-07 | 06:30 UTC | +8 | 12 hours |

Preferred Hazard Information:

| Path | Speed | Definition (Saffir-Simpson Scale) | Width (km) | Gust (Peak) | Landfall | Sustained |
|---|---|---|---|---|---|---|
| WNW (Philippines) | 20 kph | 1st: Category 3 (15/7), Category 1 (16/7) | Max: 150 | 125 kt, 232 kph | 15.07., 15 LT | 150 kph near center |
| NW (China, Vietnam) | 25 kph | Category 4 (18/7), max. intensity 06 UTC | Storm warning area: 220 km | 165 kt, 306 kph (JTWC) | | 135 kt, 250 kph (JTWC) |

Location Information: Provinces/ Economic Country ISO Regions Highest Impact Exposure HDI (2012) Urbanity Pop. affected Samar, Masbate, Philippines PH Luzon, Mindoro Not applicable 0.654 55% Ca. 2 million Hainan (including capital city Haikou, Hainan southern Guangdong Hanoi Area >10 million, CN Guangdong (Leizhou Peninsula), $321.1 billion Haikou 3.8 million China and and Guangxi south-eastern $563 billion 40.5 million people Vietnam VN Yunnan Guangxi USD PPP 0.617 potentially affected

Vulnerability and Exposure Metrics (Population, Infrastructure, Economic)
• 40.5 million people potentially affected in China (23 million) and Vietnam (18 million)
• The total exposed value is $321.1 billion USD, or approx. $563 billion USD PPP (Purchasing Power Parity)
In northern Vietnam, much less economic exposure (approx. …