Optimizing eCryptfs for Better Performance and Security

Recommended publications

PV204: Disk Encryption Lab

PV204: Disk encryption lab May 12, 2016, Milan Broz <[email protected]> Introduction Encryption can provide confidentiality and authenticity of user data. It can be implemented on several different layers, including application, file system or storage device. Examples of application-level encryption are PGP or ZIP compression with a password. Encryption of files (inside the filesystem or through an independent layer like Linux eCryptfs) provides a more generic solution. Yet some parts (like filesystem metadata) are still unencrypted. However, this solution provides encrypted data with a private key per user. (Every user can have their own directory encrypted by their own key.) Encryption of the low-level storage (disk) is called Full Disk Encryption (FDE). It is completely transparent to the user (there is no need to choose what to encrypt; the whole disk is encrypted). The encrypted disk behaves the same as a disk without encryption. The major disadvantage is that everyone who knows the password can read the whole disk. Often we combine FDE with another encryption layer. The primary use of FDE is to provide data confidentiality in power-down mode (a stolen laptop does not leak user data). Once the disk is unlocked, the main encryption key remains in the system, usually directly in system RAM. Exercise II will show how easy it is to get this key from a memory image of the system. Another disadvantage of FDE is that it usually cannot guarantee integrity of data. Encryption is fully transparent and length-preserving: the ciphertext and plaintext devices are the same size. There is no space to store any integrity information. This allows attacks by direct modification of ciphertext.
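The remark about length-preserving encryption can be made concrete with a small sketch. It is an illustration only, not how dm-crypt or any FDE tool works: the 512-byte sector size, the key, and the helper names `tag_sector` and `verify_sector` are assumptions chosen for the example. It shows that an integrity tag (here HMAC-SHA256) costs 32 extra bytes per sector, which a ciphertext device of the same size as the plaintext device has no room for.

```python
import hashlib
import hmac

SECTOR_SIZE = 512   # assumed sector size for this illustration
TAG_SIZE = 32       # HMAC-SHA256 output length in bytes


def tag_sector(key: bytes, sector_no: int, data: bytes) -> bytes:
    """Return an HMAC-SHA256 tag binding the data to its sector number."""
    msg = sector_no.to_bytes(8, "little") + data
    return hmac.new(key, msg, hashlib.sha256).digest()


def verify_sector(key: bytes, sector_no: int, data: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(tag_sector(key, sector_no, data), tag)


key = b"\x01" * 32               # hypothetical integrity key
sector = bytes(SECTOR_SIZE)      # stands in for one ciphertext sector
tag = tag_sector(key, 0, sector)

# Flip one bit, as an attacker with write access to the disk could.
tampered = bytes([sector[0] ^ 0x01]) + sector[1:]

# Sector plus tag no longer fits into a single sector of the same size.
stored_size = len(sector) + len(tag)
```

With a tag stored somewhere, `verify_sector(key, 0, tampered, tag)` rejects the modified sector; without the extra bytes there is nothing to check against, which is the limitation the lab text describes.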

McAfee Foundstone FSL Update

2016-AUG-18 FSL version 7.5.841 MCAFEE FOUNDSTONE FSL UPDATE To better protect your environment McAfee has created this FSL check update for the Foundstone Product Suite. The following is a detailed summary of the new and updated checks included with this release. NEW CHECKS 20369 - Splunk Enterprise Multiple Vulnerabilities (SP-CAAAPQM) Category: General Vulnerability Assessment -> NonIntrusive -> Web Server Risk Level: High CVE: CVE-2013-0211, CVE-2015-2304, CVE-2016-1541, CVE-2016-2105, CVE-2016-2106, CVE-2016-2107, CVE-2016-2108, CVE-2016-2109, CVE-2016-2176 Description: Multiple vulnerabilities are present in some versions of Splunk Enterprise. Observation: Splunk Enterprise is an operational intelligence solution. Multiple vulnerabilities are present in some versions of Splunk Enterprise. The flaws lie in multiple components. Successful exploitation by a remote attacker could lead to disclosure of sensitive information, denial of service, or arbitrary code execution. 20428 - (HT206899) Apple iCloud Multiple Vulnerabilities Prior To 5.2.1 Category: Windows Host Assessment -> Miscellaneous (CATEGORY REQUIRES CREDENTIALS) Risk Level: High CVE: CVE-2016-1684, CVE-2016-1836, CVE-2016-4447, CVE-2016-4448, CVE-2016-4449, CVE-2016-4483, CVE-2016-4607, CVE-2016-4608, CVE-2016-4609, CVE-2016-4610, CVE-2016-4612, CVE-2016-4614, CVE-2016-4615, CVE-2016-4616, CVE-2016-4619 Description: Multiple vulnerabilities are present in some versions of Apple iCloud. Observation: Apple iCloud is a manager for Apple's cloud-based storage service. Multiple vulnerabilities are present in some versions of Apple iCloud. The flaws lie in several components. Successful exploitation could allow an attacker to retrieve sensitive data, cause a denial of service condition or have other unspecified impact on the target system.

ANDROID PRIVACY THROUGH ENCRYPTION by DANIEL

ANDROID PRIVACY THROUGH ENCRYPTION by DANIEL DEFREEZ. A THESIS presented to the Department of Computer Science in partial fulfillment of the requirements for the degree of Master of Science in Mathematics and Computer Science. Ashland, Oregon, May 2012. APPROVAL PAGE “Android Privacy Through Encryption,” a thesis prepared by Daniel DeFreez in partial fulfillment of the requirements for the Master of Science in Mathematics and Computer Science. This project has been approved and accepted by: Dr. Lynn Ackler, Chair of the Examining Committee; Pete Nordquist, Committee Member; Hart Wilson, Committee Member. © 2012 Daniel DeFreez. ABSTRACT OF THESIS: ANDROID PRIVACY THROUGH ENCRYPTION by Daniel DeFreez. This thesis explores the field of Android forensics in relation to a person’s right to privacy. As the field of mobile forensics becomes increasingly sophisticated, it is clear that bypassing common privacy measures, such as disk encryption, will become routine. A new keying method for eCryptfs is proposed that could significantly mitigate memory attacks against encrypted file systems. It is shown how eCryptfs could be modified to implement this keying method on an Android device. ACKNOWLEDGMENTS I would like to thank Dr. Lynn Ackler for introducing me to the vast world of computer security and forensics, cultivating a healthy paranoia, and for being a truly excellent teacher. Dr. Dan Harvey, Pete Nordquist, and Hart Wilson provided helpful feedback during the preparation of this thesis, for which I thank them. I am deeply indebted to my friends and colleagues Brandon Kester, Andrew Krug, Adam Mashinchi, Jeff McJunkin, and Stephen Perkins, for their enthusiastic interest in the forensics and security fields, insightful comments, love of free software, and encouraging words.

Ubuntu Server Guide Basic Installation Preparing to Install

Ubuntu Server Guide Welcome to the Ubuntu Server Guide! This site includes information on using Ubuntu Server for the latest LTS release, Ubuntu 20.04 LTS (Focal Fossa). For an offline version as well as versions for previous releases see below. Improving the Documentation If you find any errors or have suggestions for improvements to pages, please use the link at the bottom of each topic titled: “Help improve this document in the forum.” This link will take you to the Server Discourse forum for the specific page you are viewing. There you can share your comments or let us know about bugs with any page. PDFs and Previous Releases Below are links to the previous Ubuntu Server release server guides as well as an offline copy of the current version of this site: Ubuntu 20.04 LTS (Focal Fossa): PDF Ubuntu 18.04 LTS (Bionic Beaver): Web and PDF Ubuntu 16.04 LTS (Xenial Xerus): Web and PDF Support There are a couple of different ways that the Ubuntu Server edition is supported: commercial support and community support. The main commercial support (and development funding) is available from Canonical, Ltd. They supply reasonably-priced support contracts on a per-desktop or per-server basis. For more information see the Ubuntu Advantage page. Community support is also provided by dedicated individuals and companies that wish to make Ubuntu the best distribution possible. Support is provided through multiple mailing lists, IRC channels, forums, blogs, wikis, etc. The large amount of information available can be overwhelming, but a good search engine query can usually provide an answer to your questions.

z/VSE Security Overview and Update Ingo Franzki

z/VSE Live Virtual Class 2013 z/VSE Security Overview and Update Ingo Franzki http://www.ibm.com/zVSE http://twitter.com/IBMzVSE ©2013 IBM Corporation z/VSE Live Virtual Class 2013 Trademarks The following are trademarks of the International Business Machines Corporation in the United States, other countries, or both. Not all common law marks used by IBM are listed on this page. Failure of a mark to appear does not mean that IBM does not use the mark nor does it mean that the product is not actively marketed or is not significant within its relevant market. Those trademarks followed by ®are registered trademarks of IBM in the United States; all others are trademarks or common law marks of IBM in the United States. For a complete list of IBM Trademarks, see www.ibm.com/legal/copytrade.shtml: *, AS/400®, e business(logo)®, DBE, ESCO, eServer, FICON, IBM®, IBM (logo)®,iSeries®, MVS, OS/390®, pSeries®, RS/6000®, S/30, VM/ESA®, VSE/ESA, WebSphere®, xSeries®, z/OS®, zSeries®, z/VM®, System i, System i5, System p, System p5, System x, System z, System z9®, BladeCenter® The following are trademarks or registered trademarks of other companies. Adobe, the Adobe logo, PostScript, and the PostScript logo are either registered trademarks or trademarks of Adobe Systems Incorporated in the United States, and/or other countries. Cell Broadband Engine is a trademark of Sony Computer Entertainment, Inc. in the United States, other countries, or both and is used under license therefrom. Java and all Java-based trademarks are trademarks of Sun Microsystems, Inc. -

Automated Control of Distributed Systems

Summer Research Fellowship Programme-2015, Indian Academy of Sciences, Bangalore. PROJECT REPORT: AUTOMATED CONTROL OF DISTRIBUTED SYSTEMS, UNDER THE GUIDANCE OF Dr. B.M MEHTRE, Associate Professor, Head, Center for Information Assurance and Management (CIAM), Institute for Development and Research in Banking Technology (IDRBT), Hyderabad - 500 057. Submitted by: S. NIVEADHITHA, II Year, B Tech Computer Science Engineering, SRM University, Kattankulathur, Chennai. SRF- ENGS7327 (2015), Indian Academy of Sciences, Bangalore. CERTIFICATE This is to certify that Ms S Niveadhitha, Student, Second year B Tech Computer Science Engineering, SRM University, Kattankulathur, Chennai has undertaken the Summer Research Fellowship Programme (2015) conducted by Indian Academy of Sciences, Bangalore at IDRBT, Hyderabad from May 25, 2015 to July 20, 2015. She was assigned the project “Automated Control of Distributed Systems” under my guidance. I wish her all the best for all her future endeavours. Dr. B.M MEHTRE, Associate Professor, Head, Center for Information Assurance and Management (CIAM), Institute for Development and Research in Banking Technology (IDRBT), Hyderabad - 500 057. ACKNOWLEDGMENT I express my deep sense of gratitude to my Guide Dr. B. M. Mehtre, Associate Professor, Head, CIAM, IDRBT, Hyderabad - 500 057 for giving me a great opportunity to do this project in CIAM, IDRBT and providing all the support. I am thankful to Prof. Dr. B.L.Deekshatulu, Adjunct Professor, IDRBT for his guidance and valuable feedback. I am grateful to Mr. Hiran V Nath, Miss Shashi Sachan and colleagues of CIAM, IDRBT who constantly encouraged me in my project work and supported me by providing all the necessary information. I am indebted to Indian Academy of Sciences, Bangalore, Director, E & T SRM University, and Head, CSE, SRM University, Kattankulathur, Chennai for giving me this golden opportunity to undertake the Summer Research Fellowship Programme at IDRBT.

Best Practices to Secure Your z/VSE System and Data Using New Security and Crypto Features

IBM z Systems – z/VSE – VM Workshop 2016 Best Practices to secure your z/VSE system and data using new security and crypto features Ingo Franzki © 2016 IBM Corporation IBM z Systems – z/VSE – VM Workshop 2016 Trademarks The following are trademarks of the International Business Machines Corporation in the United States, other countries, or both. Not all common law marks used by IBM are listed on this page. Failure of a mark to appear does not mean that IBM does not use the mark nor does it mean that the product is not actively marketed or is not significant within its relevant market. Those trademarks followed by ® are registered trademarks of IBM in the United States; all others are trademarks or common law marks of IBM in the United States. For a complete list of IBM Trademarks, see www.ibm.com/legal/copytrade.shtml: *, AS/400®, e business(logo)®, DBE, ESCO, eServer, FICON, IBM®, IBM (logo)®, iSeries®, MVS, OS/390®, pSeries®, RS/6000®, S/30, VM/ESA®, VSE/ESA, WebSphere®, xSeries®, z/OS®, zSeries®, z/VM®, System i, System i5, System p, System p5, System x, System z, System z9®, BladeCenter® The following are trademarks or registered trademarks of other companies. Adobe, the Adobe logo, PostScript, and the PostScript logo are either registered trademarks or trademarks of Adobe Systems Incorporated in the United States, and/or other countries. Cell Broadband Engine is a trademark of Sony Computer Entertainment, Inc. in the United States, other countries, or both and is used under license therefrom. Java and all Java-based trademarks are trademarks of Sun Microsystems, Inc. -

Introduction to TrueCrypt

Introduction to TrueCrypt

WELCOME: 11 January 2012, SLUUG - St. Louis Unix Users Group, http://www.sluug.org/. A Very Basic Tutorial and Demonstration by Stan Reichardt [email protected]

DEFINITIONS: Encryption, Secrecy, Privacy, Paranoia, Human Rights, Self-determination. See http://www.markus-gattol.name/ws/dm-crypt_luks.html#sec1

WHO: Who uses TrueCrypt? Who here has NOT used TrueCrypt? Who here has used TrueCrypt?

WHO ELSE: Used by businesses, military forces, government agencies, suspects (possibly bad people), freedom fighters (against bad governments), and everyday people (that want privacy or security).

WHO WATCHES: Who watches the watchmen? http://en.wikipedia.org/wiki/Quis_custodiet_ipsos_custodes%3F

WHAT: What is it? GENERAL: TrueCrypt is powerful encryption software for your personal data. It works by creating a virtual hard drive within a file and mounting it, so your computer treats it as a real hard drive. You can choose to encrypt an entire hard drive, certain folders, or removable media such as a USB flash drive. Encryption is automatic, real-time and transparent, so all the hard work is handled for you. It also provides two levels of plausible deniability, and supports various encryption algorithms depending on your needs, including AES-256, Serpent, and Twofish.

WHAT IT DOES: What can it do? The capabilities of TrueCrypt (taken from Users Guide, Introduction on page 6): TrueCrypt is a software system for establishing and maintaining an on-the-fly-encrypted volume (data storage device).

WHEN FILE ENCRYPTION HELPS PASSWORD CRACKING PHDays V

WHEN FILE ENCRYPTION HELPS PASSWORD CRACKING, PHDays V, Sylvain Pelissier, Roman Korkikian, May 26, 2015

IN BRIEF: Password hashing; Password cracking; eCryptfs presentation; eCryptfs salting problem; Correction; Questions

PASSWORD HASHING

PASSWORD CRACKING: From the hash, recover the password value.

BRUTEFORCE: 1. Get the hash file /etc/shadow. 2. For all possible passwords, compute H(password) until a match is found in the file. 3. Output the password.

PRE-COMPUTED DICTIONARY ATTACK: 1. Create a database of all (password, H(password)) pairs. 2. To crack a given password hash, check if it is in the database. 3. Output the corresponding password.

RAINBOW TABLE ATTACK: Trade-off between computation and memory.

SALTING: Compute H(salt || password) instead of H(password) and store both salt and hash. This makes dictionary and rainbow table attacks much more difficult, but not bruteforce attacks. Salting is used in most modern systems, e.g. Linux uses 5000 iterations of SHA-512 with a randomly generated salt.

PASSWORD CRACKING: Well studied problem. Several optimized tools: John the Ripper (www.openwall.com/john), hashcat (hashcat.net/oclhashcat). Password Hashing Competition (PHC) to select new password hashing algorithms (password-hashing.net). Hashrunner contest.

ECRYPTFS: File encryption software (ecryptfs.org). Included in the Linux kernel. Used for user home folder encryption by Ubuntu.

ECRYPTFS UTILS: 1. During creation of the encrypted home folder, a passphrase (16 bytes by default) is randomly generated. 2. The passphrase is used for file encryption (AES by default). 3. The passphrase is stored encrypted in the file /home/.ecryptfs/$USER/.ecryptfs/wrapped-passphrase 4.
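The salting idea in the slides can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the scheme used by Linux or by eCryptfs: it swaps in PBKDF2-HMAC-SHA512 from the standard library as the salted, iterated hash, and the function names `hash_password` and `verify_password`, the 16-byte salt, and the default of 5000 iterations are choices made for the example.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple


def hash_password(password: str, salt: Optional[bytes] = None,
                  iterations: int = 5000) -> Tuple[bytes, bytes]:
    """Return (salt, digest) using PBKDF2-HMAC-SHA512 (an assumed stand-in)."""
    if salt is None:
        salt = os.urandom(16)  # fresh random salt for every stored password
    digest = hashlib.pbkdf2_hmac("sha512", password.encode("utf-8"),
                                 salt, iterations)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes,
                    iterations: int = 5000) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt, iterations)
    return hmac.compare_digest(digest, expected)


# Two users with the same password get different stored hashes, so a
# pre-computed table of H(password) values cannot be reused against them.
salt_a, hash_a = hash_password("correct horse")
salt_b, hash_b = hash_password("correct horse")
```

Because the salt is stored next to the hash, verification stays cheap for the legitimate system, while an attacker has to redo the work for each salt; a per-guess bruteforce against a single hash is slowed only by the iteration count.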

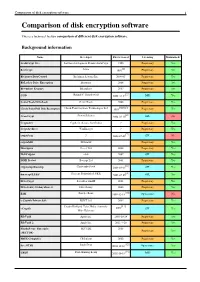

Comparison of Disk Encryption Software

Comparison of disk encryption software 1 Comparison of disk encryption software This is a technical feature comparison of different disk encryption software. Background information Name Developer First released Licensing Maintained? ArchiCrypt Live Softwaredevelopment Remus ArchiCrypt 1998 Proprietary Yes [1] BestCrypt Jetico 1993 Proprietary Yes BitArmor DataControl BitArmor Systems Inc. 2008-05 Proprietary Yes BitLocker Drive Encryption Microsoft 2006 Proprietary Yes Bloombase Keyparc Bloombase 2007 Proprietary Yes [2] CGD Roland C. Dowdeswell 2002-10-04 BSD Yes CenterTools DriveLock CenterTools 2008 Proprietary Yes [3][4][5] Check Point Full Disk Encryption Check Point Software Technologies Ltd 1999 Proprietary Yes [6] CrossCrypt Steven Scherrer 2004-02-10 GPL No Cryptainer Cypherix (Secure-Soft India) ? Proprietary Yes CryptArchiver WinEncrypt ? Proprietary Yes [7] cryptoloop ? 2003-07-02 GPL No cryptoMill SEAhawk Proprietary Yes Discryptor Cosect Ltd. 2008 Proprietary Yes DiskCryptor ntldr 2007 GPL Yes DISK Protect Becrypt Ltd 2001 Proprietary Yes [8] cryptsetup/dmsetup Christophe Saout 2004-03-11 GPL Yes [9] dm-crypt/LUKS Clemens Fruhwirth (LUKS) 2005-02-05 GPL Yes DriveCrypt SecurStar GmbH 2001 Proprietary Yes DriveSentry GoAnywhere 2 DriveSentry 2008 Proprietary Yes [10] E4M Paul Le Roux 1998-12-18 Open source No e-Capsule Private Safe EISST Ltd. 2005 Proprietary Yes Dustin Kirkland, Tyler Hicks, (formerly [11] eCryptfs 2005 GPL Yes Mike Halcrow) FileVault Apple Inc. 2003-10-24 Proprietary Yes FileVault 2 Apple Inc. 2011-7-20 Proprietary -

Trusted Computing and Linux

Trusted Computing and Linux. Kylene Hall, IBM, [email protected]; Tom Lendacky, IBM, [email protected]; Emily Ratliff, IBM, [email protected]; Kent Yoder, IBM, [email protected]

Abstract: While Trusted Computing and Linux® may seem antithetical on the surface, Linux users can benefit from the security features, including system integrity and key confidentiality, provided by Trusted Computing. The purpose of this paper is to discuss the work that has been done to enable Linux users to make use of their Trusted Platform Module (TPM) in a non-evil manner. The paper describes the individual software components that are required to enable the use of the TPM, including the TPM device driver and TrouSerS, the Trusted Software Stack, and TPM management. Key concerns with Trusted Computing are highlighted along with what the Trusted Computing Group has done and what individual TPM owners can do to mitigate these concerns.

1 Introduction: The Trusted Computing Group (TCG) released the first set of hardware and software specifications shortly after the creation of that group in 2003.1 This year, a short two years later, 20 million computers will be sold containing a Trusted Platform Module (TPM) [Mohamed], which will largely go unused. Despite the controversy surrounding abuses potentially enabled by the TPM, Linux has the opportunity to build controls into the enablement of the Trusted Computing technology to help the end user control the TPM and take advantage of security gains that can be made by exercising the TPM properly. This paper will cover the pieces needed for a Linux user to begin to make use of the TPM. This paper is organized into sections covering the goals of Trusted Computing, a brief introduction to Trusted Computing, the components

eCryptfs: An Enterprise-Class Encrypted Filesystem for Linux

eCryptfs: An Enterprise-class Encrypted Filesystem for Linux. Michael Austin Halcrow, International Business Machines, [email protected]

Abstract: eCryptfs is a cryptographic filesystem for Linux that stacks on top of existing filesystems. It provides functionality similar to that of GnuPG, except the process of encrypting and decrypting the data is done transparently from the perspective of the application. eCryptfs leverages the recently introduced Linux kernel keyring service, the kernel cryptographic API, the Linux Pluggable Authentication Modules (PAM) framework, OpenSSL/GPGME, the Trusted Platform Module (TPM), and the GnuPG keyring in order to make the process of key and authentication token management seamless to the end user.

1 Enterprise Requirements: Any cryptographic application is hard to implement correctly and hard to effectively deploy. [...] the integrity of the data in jeopardy of compromise in the event of unauthorized access to the media on which the data is stored.

While users and administrators take great pains to configure access control mechanisms, including measures such as user account and privilege separation, Mandatory Access Control[13], and biometric identification, they often fail to fully consider the circumstances where none of these technologies can have any effect (for example, when the media itself is separated from the control of its host environment). In these cases, access control must be enforced via cryptography.

When a business process incorporates a cryptographic solution, it must take several issues into account. How will this affect incremental backups? What sort of mitigation is in place to address key loss? What sort of education is required on the part of the employees? What should the policies be? Who should decide them, and how are they expressed? How disruptive or costly will this technology be? What