Security Analysis of Android OS

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Systematization of Vulnerability Discovery Knowledge: Review

Systematization of Vulnerability Discovery Knowledge Review Protocol Nuthan Munaiah and Andrew Meneely Department of Software Engineering Rochester Institute of Technology Rochester, NY 14623 {nm6061,axmvse}@rit.edu February 12, 2019 1 Introduction As more aspects of our daily lives depend on technology, the software that supports this technology must be secure. We, as users, almost subconsciously assume the software we use to always be available to serve our requests while preserving the confidentiality and integrity of our information. Unfortunately, incidents involving catastrophic software vulnerabilities such as Heartbleed (in OpenSSL), Stagefright (in Android), and EternalBlue (in Windows) have made abundantly clear that software, like other engineered creations, is prone to mistakes. Over the years, Software Engineering, as a discipline, has recognized the potential for engineers to make mistakes and has incorporated processes to prevent such mistakes from becoming exploitable vulnerabilities. Developers leverage a plethora of processes, techniques, and tools such as threat modeling, static and dynamic analyses, unit/integration/fuzz/penetration testing, and code reviews to engineer secure software. These practices, while effective at identifying vulnerabilities in software, are limited in their ability to describe the engineering failures that may have led to the introduction of vulnerabilities. Fortunately, as researchers propose empirically-validated metrics to characterize historical vulnerabilities, the factors that may have led to the introduction of vulnerabilities emerge. Developers must be made aware of these factors to help them proactively consider security implications of the code that they contribute. In other words, we want developers to think like an attacker (i.e. inculcate an attacker mindset) to proactively discover vulnerabilities. -

Internet Security Threat Report, Volume 21, April 2016

Internet Security Threat Report, Volume 21, April 2016. Table of contents: Introduction; Executive Summary; BIG NUMBERS; MOBILE DEVICES & THE INTERNET OF THINGS; Smartphones and Mobile Devices; One Phone Per Person; Cross-Over Threats; Android Attacks Become More Stealthy; How Malicious Video Messages Could Lead to Stagefright and Stagefright 2.0; Android Users under Fire with Phishing and Ransomware; Apple iOS Users Now More at Risk than […]; Tech Support Scams Go Nuclear, Spreading Ransomware; Malvertising; Cybersecurity Challenges For Website Owners; Put Your Money Where Your Mouse Is; Websites Are Still Vulnerable to Attacks Leading to Malware and Data Breaches; Moving to Stronger Authentication; Accelerating to Always-On Encryption; Reinforced Reassurance; Websites Need to Become Harder to Attack; SSL/TLS and The Industry’s Response; The Evolution of Encryption; Strength in Numbers; Infographic: A New Zero-Day Vulnerability Discovered Every Week in 2015; Spear Phishing; Active Attack Groups in 2015; Infographic: Attackers Target Both Large and Small Businesses; Profiting from High-Level Corporate Attacks and the Butterfly Effect; Cybersecurity, Cybersabotage, and Coping with Black Swan Events; Cybersabotage and the Threat of “Hybrid Warfare”; Small Business and the Dirty Linen Attack; Industrial Control Systems Vulnerable to Attacks; Obscurity is No Defense

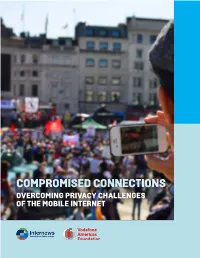

Compromised Connections

COMPROMISED CONNECTIONS: OVERCOMING PRIVACY CHALLENGES OF THE MOBILE INTERNET. The Universal Declaration of Human Rights, the International Covenant on Civil and Political Rights, and many other international and regional treaties recognize privacy as a fundamental human right. Privacy underpins key values such as freedom of expression, freedom of association, and freedom of speech, and it is one of the most important, nuanced and complex fundamental rights of contemporary age. For those of us who care deeply about privacy, safety and security, not only for ourselves but also for our development partners and their missions, we need to think of mobile phones as primary computers rather than just calling devices. We need to keep in mind that, as the storage, functionality, and capability of mobiles increase, so do the risks to users. Can we address these hidden costs to our digital connections? Fortunately, yes! We recommend: • Adopting device, data, network and application safety measures. A WORLD OF INFORMATION IN YOUR MOBILE PHONE: As mobile phones have transformed from clunky handheld calling devices to nifty touch-screen smartphones loaded with apps and supported by cloud access, the networks these phones rely on have become ubiquitous, ferrying vast amounts of data across invisible spectrums and reaching the most remote corners of the world. From a technical point-of-view, today’s phones are actually more like compact mobile computers. They are packed with digital intelligence and capable of processing many of the tasks previously confined

Mind the Gap: Dissecting the Android Patch Gap | Ben Schlabs

Mind the Gap – Dissecting the Android patch gap. Ben Schlabs <[email protected]>. Allow us to take you on two intertwined journeys. This talk in a nutshell. Research journey: § Wanted to understand how fully-maintained Android phones can be exploited § Found surprisingly large patch gaps for many Android vendors – some of these are already being closed § Also found Android exploitation to be unexpectedly difficult. Engineering journey: § Wanted to check thousands of firmwares for the presence of hundreds of patches § Developed and scaled a rather unique analysis method § Created an app for your own analysis. Android patching is a known-hard problem. Patching is hard to start with: § Computer OS vendors regularly issue patches § Users “only” have to confirm the installation of these patches § Still, enterprises consider regular patching among the most effortful security tasks. Patch ecosystem labels: OS vendor (Microsoft, Apple, Linux distro), OS patches, endpoints & servers. The nature of Android makes patching so much more difficult: § “The mobile ecosystem’s diversity […] contributes to security update complexity and inconsistency.” – FTC report, March 2018 [1] § Patches are handed down a long chain of typically four parties before reaching the user § Only some devices get patched (2016: 17% [2]). Patch ecosystem labels: OS vendor, chipset vendor, phone vendor, telco, Android phones. We focus our research on these “fully patched” phones. Our research question –

Automating Patching of Vulnerable Open-Source Software Versions in Application Binaries

Automating Patching of Vulnerable Open-Source Software Versions in Application Binaries. Ruian Duan†, Ashish Bijlani†, Yang Ji†, Omar Alrawi†, Yiyuan Xiong*, Moses Ike†, Brendan Saltaformaggio† and Wenke Lee† († Georgia Institute of Technology, * Peking University). fruian, ashish.bijlani, yang.ji, alrawi, [email protected], [email protected] [email protected], [email protected]. Abstract: Mobile application developers rely heavily on open-source software (OSS) to offload common functionalities such as the implementation of protocols and media format playback. Over the past years, several vulnerabilities have been found in popular open-source libraries like OpenSSL and FFmpeg. Mobile applications that include such libraries inherit these flaws, which make them vulnerable. Fortunately, the open-source community is responsive and patches are made available within days. However, mobile application developers are often left unaware of these flaws. The App Security Improvement Program (ASIP) is a commendable effort by Google to notify application developers of these flaws, but recent work has shown that many developers do not act on this information. Introduction (excerpt): […] while ensuring backward compatibility, and test for unintended side-effects. For the Android platform, Google has initiated the App Security Improvement Program (ASIP) [21] to notify developers of vulnerable third-party libraries in use. Unfortunately, many developers, as OSSPolice [15] and LibScout [4] show, do not update or patch their application, which leaves end-users exposed. Android developers mainly use Java and C/C++ [1] libraries. While Derr et al. [14] show that vulnerable Java libraries can be fixed by library-level update, their C/C++ counterparts, which contain many more documented security bugs in the National Vulnerability

VULNERABLE by DESIGN: MITIGATING DESIGN FLAWS in HARDWARE and SOFTWARE Konoth, R.K

VU Research Portal. VULNERABLE BY DESIGN: MITIGATING DESIGN FLAWS IN HARDWARE AND SOFTWARE. Konoth, R.K. 2020. Document version: Publisher's PDF, also known as Version of record. Citation for published version (APA): Konoth, R. K. (2020). VULNERABLE BY DESIGN: MITIGATING DESIGN FLAWS IN HARDWARE AND SOFTWARE. Ph.D. thesis, Radhesh Krishnan Konoth, Vrije Universiteit Amsterdam, 2020, Faculty of Science. The research reported in this dissertation was conducted at the Faculty of Science, at the Department of Computer Science, of the Vrije Universiteit Amsterdam. This work was supported by the MALPAY consortium, consisting of the Dutch national police, ING, ABN AMRO, Rabobank, Fox-IT, and TNO.

Malware Detection Techiniques and Tools for Android Mobile – a Brief Review

International Journal of Latest Research in Engineering and Technology (IJLRET), ISSN: 2454-5031, www.ijlret.com, Volume 03, Issue 03, March 2017, pp. 102-105. Malware Detection Techiniques and Tools for Android Mobile – A Brief Review. Dr. Bhaskar V. Patil (Bharati Vidyapeeth University, Yashwantrao Mohite Institute of Management, Karad [M.S.], India) and Dr. Rahul J. Jadhav (Bharati Vidyapeeth University, Yashwantrao Mohite Institute of Management, Karad [M.S.], India). Abstract: Android has the biggest market share among all smartphone operating systems. The number of devices running the Android operating system has been on the rise. By the end of 2012, it will account for nearly half of the world's smartphone market. Along with its growth, the importance of security has also risen. Security is one of the main concerns for smartphone users today. As the power and features of smartphones increase, so does their vulnerability to attacks by viruses and the like. Android is perhaps a more secure operating system than any other smartphone operating system today. However, Android places very few restrictions on developers, which increases the security risk for end users. Antivirus apps remain one of the most popular types of applications on Android. Some people either like having that extra security or just want to be extra cautious, and there is nothing wrong with that. In this list, we'll check out the best antivirus and anti-malware apps on Android. The efficacy of these applications has not been empirically established. This paper analyzes some of the security tools written for the Android platform to gauge their effectiveness at mitigating spyware and malware.

Toward Data-Driven Discovery of Software Vulnerabilities

Rochester Institute of Technology, RIT Scholar Works, Theses, 4-2020. Toward Data-Driven Discovery of Software Vulnerabilities. Nuthan Munaiah, [email protected]. Follow this and additional works at: https://scholarworks.rit.edu/theses. Recommended Citation: Munaiah, Nuthan, "Toward Data-Driven Discovery of Software Vulnerabilities" (2020). Thesis. Rochester Institute of Technology. Accessed from This Dissertation is brought to you for free and open access by RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected]. A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Computing and Information Sciences, B. Thomas Golisano College of Computing and Information Sciences, Rochester Institute of Technology, Rochester, New York, April 2020. Committee Approval: We, the undersigned committee members, certify that we have advised and/or supervised the candidate on the work described in this dissertation. We further certify that we have reviewed the dissertation manuscript and approve it in partial fulfillment of the requirements of the degree of Doctor of Philosophy in Computing and Information Sciences. Dr. Andrew Meneely, Dissertation Advisor; Dr. Naveen Sharma, Dissertation Committee Member; Dr. Ernest Fokoué, Dissertation Committee Member; Dr. Pradeep K. Murukannaiah, Dissertation Committee Member; Dr. Sharon Mason, Dissertation Defense Chairperson. Certified by: Dr. Pencheng Shi, Ph.D. Program Director, Computing and Information Sciences. © 2020 Nuthan Munaiah. All rights reserved.

Aasys Newsletter August 2015

Issue #8, Volume #16, August 2015. Solutions. Team iOS Or Team Droid? For years, Apple and Google have waged war against each other with their iOS versus Android feud. Many claimed they can even tell a lot about a person based on what cell phone they carried. Truth is, whether you’re on Team iOS or Team Droid, you’re still open to vulnerabilities. Apple uses the iOS operating system for their mobile devices. […] ability to create a wide range of add-on functionalities that extend beyond the offerings of Apple’s iOS. What researchers have discovered is that both operating systems have had their fair share of run-ins with hacker attempts. Recently, Stagefright allowed hackers to take complete control of any Android phone or tablet. And last year, there were 127 flaws discovered in the iOS platform, one of which allowed hackers to install fake apps like Facebook and Skype onto iOS devices. Bottom line is: whether you use an iPhone 6 or a Samsung Galaxy, safety has to be the main concern. Sidebar: Are there subjects that you would like to have covered in future newsletters? We are always looking for topics of interest and we welcome all suggestions! To submit a topic, subscribe, or unsubscribe to our distribution list, please send an email to [email protected]. 11301 N. US Highway 301, Suite 106, Thonotosassa, FL 33592, (800) 799-8699, www.aasysgroup.com. AaSys Group will be closed on Monday, September 7th for the Labor Day holiday. Inside this issue: Team iOS or Team Droid?; Work Smarter Not Harder-3 Tricks to Maximize Your Out-

38 Toward Engineering a Secure Android Ecosystem: a Survey of Existing Techniques

Toward Engineering a Secure Android Ecosystem: A Survey of Existing Techniques. MENG XU, CHENGYU SONG, YANG JI, MING-WEI SHIH, KANGJIE LU, CONG ZHENG, RUIAN DUAN, YEONGJIN JANG, BYOUNGYOUNG LEE, CHENXIONG QIAN, SANGHO LEE, and TAESOO KIM, Georgia Institute of Technology. The openness and extensibility of Android have made it a popular platform for mobile devices and a strong candidate to drive the Internet-of-Things. Unfortunately, these properties also leave Android vulnerable, attracting attacks for profit or fun. To mitigate these threats, numerous issue-specific solutions have been proposed. With the increasing number and complexity of security problems and solutions, we believe this is the right moment to step back and systematically re-evaluate the Android security architecture and security practices in the ecosystem. We organize the most recent security research on the Android platform into two categories: the software stack and the ecosystem. For each category, we provide a comprehensive narrative of the problem space, highlight the limitations of the proposed solutions, and identify open problems for future research. Based on our collection of knowledge, we envision a blueprint for engineering a secure, next-generation Android ecosystem. CCS Concepts: Security and privacy → Mobile platform security; Malware and its mitigation; Social aspects of security and privacy. Additional Key Words and Phrases: Android, mobile malware, survey, ecosystem. ACM Reference Format: Meng Xu, Chengyu Song, Yang Ji, Ming-Wei Shih, Kangjie Lu, Cong Zheng, Ruian Duan, Yeongjin Jang, Byoungyoung Lee, Chenxiong Qian, Sangho Lee, and Taesoo Kim. 2016. Toward engineering a secure android ecosystem: A survey of existing techniques. ACM Comput.

Chapter 12 CATEGORIZING MOBILE DEVICE MALWARE BASED ON

Chapter 12. CATEGORIZING MOBILE DEVICE MALWARE BASED ON SYSTEM SIDE-EFFECTS. Zachary Grimmett, Jason Staggs and Sujeet Shenoi. Abstract: Malware targeting mobile devices is an ever increasing threat. The most insidious type of malware resides entirely in volatile memory and does not leave a trail of persistent artifacts. Such malware requires novel detection and capture methods in order to be reliably identified, analyzed and mitigated. This chapter proposes malware categorization and detection techniques based on measurable system side-effects observed in an exploited mobile device. Using the Stagefright family of exploits as a case study, common system side-effects produced as a result of attempted exploitation are identified. These system side-effects are leveraged to trigger volatile memory (i.e., RAM) collection by memory acquisition tools (e.g., LiME) to enable analysis of the malware. Keywords: Mobile malware, memory-resident, categorization, system side-effects. 1. Introduction. Critical vulnerabilities that affect large families of mobile devices make it imperative to develop new techniques for securing these devices against increasingly sophisticated attacks as well as for conducting forensic investigations. Investigating attacks on mobile devices requires the capture and analysis of evidence pertaining to attacks. However, the most insidious malware resides entirely in memory and does not create persistent artifacts. Memory-resident malware that removes itself from a mobile device after performing its malicious activities can evade capture and analysis by malware investigators, even after its presence has been detected by a user. Live memory acquisition is the only way to recover memory-resident malware from exploited devices.

Everything You Code Can and Will Be Re-Used Against

Summer School on real-world crypto and privacy, Šibenik (Croatia), June 11–15, 2018. Acknowledgement. Collaborators: Luca Davi, Essen-Duisburg University, GE; Ferdinand Brasser, Tommaso Frassetto, Christopher Liebchen, TU Darmstadt, GE; N. Asokan, Aalto, FI; Fabian Monrose, Kevin Snow, UNC Chapel Hill, US; Hovav Shacham, UCSD, US; Per Larsen, Steven Crane, Andrei Homescu, Gene Tsudik, Michael Franz, UCI, US; Thorsten Holz, Bochum University, GE; Felix Shuster, Microsoft Research, UK; Yier Jin, Dean Sullivan, Orlando Arias, UCF, US; Patrick Kroebel, Matthias Schunter, Intel Labs; Georg Koppen, Tor Project. Motivation. Three Decades of Runtime Attacks: Morris Worm (1988); Code Injection (AlephOne, 1996); return-into-libc (Solar Designer, 1997); Borrowed Code Chunk Exploitation (Krahmer, 2005); Return-oriented programming (Shacham, CCS 2007); … Continuing Arms Race. Recent Attacks. Attacks on Tor Browser [2013]: FBI Admits It Controlled Tor Servers Behind Mass Malware Attack. Stagefright [Drake, BlackHat 2015]: These issues in Stagefright code critically expose 95% of Android devices, an estimated 950 million devices (diagram labels: MMS, Adversary). Cisco Router Exploit [2018]: Million CISCO ASA Firewalls potentially vulnerable to attacks (XML parsing vuln.). The Million Dollar Dissident [2016]: Government targeted human rights