Examining the Content Filtering Practices of Social Media Giants

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Expert Says Brexit Campaign Used Data Mined from Facebook 27 March 2018, by Danica Kirka

The computer expert who alleges a trove of Facebook data was improperly used to help [...] persuade voters. "It was everywhere." Among the companies that had access to the data was AggregateIQ, a Canadian political consultant that did work for Vote Leave, the official campaign backing Britain's withdrawal from the EU, Wylie said. Wylie described Cambridge Analytica as just one arm of a global company, SCL Group, that gets most of its income from military contracts but is also a political gun-for-hire, often in countries where democratic institutions are weak. He suggested the company combines computer algorithms and dirty tricks to help candidates win regardless of the cost. The 28-year-old Canadian with a swath of pink hair says he helped set up Cambridge Analytica in 2013. He left the next year. Wylie has previously alleged that Cambridge Analytica used personal data improperly collected from Facebook users to help Trump's 2016 presidential campaign. Cambridge Analytica says none of the Facebook data was used in its work on the Trump campaign. It denies any wrongdoing.

Photo caption: Whistleblower Christopher Wylie, who alleges that the campaign for Britain to leave the EU cheated in the referendum in 2016, speaking at a lawyer's office to the media in London, Monday, March 26, 2018. Chris Wylie's claims center around the official Vote Leave campaign and its links to a group called BeLeave, which it helped fund. The links allegedly allowed the campaign to bypass spending rules. (AP Photo/Alastair Grant)

1605026 NY Spotlight Memo

MEMORANDUM

TO: Interested Parties
FROM: Alixandria Lapp, Executive Director, House Majority PAC
DATE: May 26, 2016
RE: Congressional Democrats Poised for Pick-Ups Across the Empire State

With just over a month until New York’s June 28 congressional primaries, and just under six months from the November general election, Democrats are poised for significant pick-ups in congressional districts across the Empire State. This year Democrats are overwhelmingly on offense in New York, with at least six Republican-held seats that could be flipped this November. Multiple Republican incumbents and challengers are finding their already-precarious political prospects diminishing even further as they struggle with a damaging party brand and a toxic presidential ticket-mate, and increasingly prove themselves out of touch with their own districts.

Bottom line: With New York’s congressional Republicans increasingly vulnerable heading into the fall, Democrats are overwhelmingly on offense and well-positioned to win key districts across the state in 2016.

New York Republicans Tied to Toxic Brand

As in any presidential year, down-ballot races will be heavily shaped by the top of the ticket. For Republicans, particularly in New York, that’s bad news. Even before the GOP presidential race took shape, New York’s congressional Republicans faced significant structural political challenges. In six competitive Republican-held districts, President Obama either won or came within 1% of winning in 2008 and 2012. Now with Donald Trump as their presidential ticket-mate, down-ballot prospects for New York Republicans are far worse. Earlier this month, a poll by Morning Consult found that nearly half of all Americans would “be less likely to support candidates for public office if they say they back Donald Trump.” And despite Donald Trump’s big win in New York’s presidential primary, there’s no indication that it will translate to success in November.

DOJ Inspector General Michael Horowitz Was Handpicked by the “Espionage Machine Part…

Americans for Innovation, Saturday, December 16, 2017

DOJ INSPECTOR GENERAL MICHAEL HOROWITZ WAS HANDPICKED BY THE “ESPIONAGE MACHINE PARTY” RUN BY SOROS & ROGUE SPIES FOR AN UNELECTED CORPORATE COMBINE

INCREDIBLE BACKSTORY: HOROWITZ HAS BEEN GROOMED BY GEORGE SOROS VIA DNC BARNEY FRANK, THE CLINTONS AND JAMES CHANDLER SINCE HARVARD LAW SCHOOL

HOROWITZ & CLINTON ROAMED THE PLANET USING SPEECHES TO ORGANIZE THE “ESPIONAGE MACHINE PARTY” TAKEOVER OF THE USA

HE COACHED LEGAL & CORPORATE CRONIES HOW TO SKIRT U.S. SENTENCING & ETHICS LAWS

CONTRIBUTING WRITERS | OPINION | AMERICANS FOR INNOVATION | DEC. 17, 2017 UPDATED DEC. 20, 2017

Sidebar: DEEP STATE Member SHADOW GOVERNMENT POSTER: Harvard | Yale | Stanford Sycophants. Updated Dec. 12, 2017. COMBINED TIMELINE OF THE HIJACKING OF THE INTERNET: PAY-to-PLAY NEW WORLD ORDER. This timeline shows how insiders sell access & manipulate politicians, police, intelligence, judges and media to keep their secrets. Clintons, Obamas, Summers were paid in cash for outlandish speaking fees and Foundation donations. Sycophant judges, politicians, academics, bureaucrats and media were fed tips to mutual funds tied to insider stocks like Facebook. Risk of public exposure, blackmail, pedophilia, “snuff parties” (ritual child sexual abuse and murder) and Satanism have ensured silence among pay-to-play beneficiaries. The U.S. Patent Office is their toy box from which to steal new ideas.

District 16 District 142 Brandon Creighton Harold Dutton Room EXT E1.412 Room CAP 3N.5 P.O

Elected Officials in District E

Texas House

District 16: Brandon Creighton. Capitol office: Room EXT E1.412, P.O. Box 2910, Austin, TX 78768; (512) 463-0726; (512) 463-8428 Fax. District office: 326 ½ N. Main St., Suite 110, Conroe, TX 77301; (936) 539-0028; (936) 539-0068 Fax.

District 142: Harold Dutton. Capitol office: Room CAP 3N.5, P.O. Box 2910, Austin, TX 78768; (512) 463-0510; (512) 463-8333 Fax. District office: 8799 N. Loop East, Suite 305, Houston, TX 77029; (713) 692-9192; (713) 692-6791 Fax.

District 127: Joe Crabb. Capitol office: Room 1W.5, Capitol Building, Austin, TX 78701; (512) 463-0520; (512) 463-5896 Fax. District office: 1110 Kingwood Drive, #200, Kingwood, TX 77339; (281) 359-1270; (281) 359-1272 Fax.

District 143: Ana Hernandez. Capitol office: Room E1.220, Capitol Extension, Austin, TX 78701; (512) 463-0614. District office: 1233 Mercury Drive, Houston, TX 77029; (713) 675-8596; (713) 675-8599 Fax.

District 144: Ken Legler. Capitol office: Room E2.304, Capitol Extension, Austin, TX 78701; (512) 463-0460; (512) 463-0763 Fax. District office: 1109 Fairmont Parkway, Pasadena, 77504; (281) 487-8818; (713) 944-1084.

District 129: John Davis. Capitol office: Room 4S.4, Capitol Building, Austin, TX 78701; (512) 463-0734; (512) 479-6955 Fax. District office: 1350 NASA Pkwy, #212, Houston, TX 77058; (281) 333-1350; (281) 335-9101 Fax.

District 145: Carol Alvarado. Capitol office: Room EXT E2.820, P.O. Box 2910, Austin, TX 78768; (512) 463-0732; (512) 463-4781 Fax. District office: 8145 Park Place, Suite 100, Houston, TX 77017; (713) 649-6563; (713) 649-6454 Fax.

District 141: Senfronia Thompson. Capitol office: Room CAP 3S.06, P.O. Box 2910, Austin, TX 78768; (512) 463-0720; (512) 463-6306 Fax. District office: 10527 Homestead Road, Houston, TX; (713) 633-3390.

Elected Officials in District E

Texas Senate

District 147 2205 Clinton Dr.

M&A @ Facebook: Strategy, Themes and Drivers

A Work Project, presented as part of the requirements for the Award of a Master Degree in Finance from NOVA – School of Business and Economics

M&A @ FACEBOOK: STRATEGY, THEMES AND DRIVERS

TOMÁS BRANCO GONÇALVES, STUDENT NUMBER 3200

A Project carried out on the Masters in Finance Program, under the supervision of: Professor Pedro Carvalho

January 2018

Abstract

Most deals are motivated by the recognition of a strategic threat or opportunity in the firm’s competitive arena. These deals seek to improve the firm’s competitive position or even obtain resources and new capabilities that are vital to future prosperity, and improve the firm’s agility. The purpose of this work project is to make an analysis on Facebook’s acquisitions’ strategy going through the key acquisitions in the company’s history. More than understanding the economics of its most relevant acquisitions, the main research is aimed at understanding the strategic view and key drivers behind them, and trying to set a pattern through hypotheses testing, always bearing in mind the following question: Why does Facebook acquire emerging companies instead of replicating their key success factors?

Keywords: Facebook; Acquisitions; Strategy; M&A Drivers

“The biggest risk is not taking any risk... In a world that is changing really quickly, the only strategy that is guaranteed to fail is not taking risks.” Mark Zuckerberg, founder and CEO of Facebook

Literature Review

M&A activity has had peaks throughout the course of history and different key industry-related drivers triggered that same activity (Sudarsanam, 2003). Historically, the appearance of the first mergers and acquisitions coincides with the existence of the first companies and, since then, in the US market, there have been five major waves of M&A activity (as summarized by T.J.A.

In the Court of Chancery of the State of Delaware Karen Sbriglio, Firemen’s Retirement System of St

EFiled: Aug 06 2021 03:34PM EDT, Transaction ID 66784692, Case No. 2018-0307-JRS

IN THE COURT OF CHANCERY OF THE STATE OF DELAWARE

KAREN SBRIGLIO, FIREMEN’S RETIREMENT SYSTEM OF ST. LOUIS, CALIFORNIA STATE TEACHERS’ RETIREMENT SYSTEM, CONSTRUCTION AND GENERAL BUILDING LABORERS’ LOCAL NO. 79 GENERAL FUND, CITY OF BIRMINGHAM RETIREMENT AND RELIEF SYSTEM, and LIDIA LEVY, derivatively on behalf of Nominal Defendant FACEBOOK, INC., Plaintiffs,

v.

MARK ZUCKERBERG, SHERYL SANDBERG, PEGGY ALFORD, MARC ANDREESSEN, KENNETH CHENAULT, PETER THIEL, JEFFREY ZIENTS, ERSKINE BOWLES, SUSAN DESMOND-HELLMANN, REED HASTINGS, JAN KOUM, KONSTANTINOS PAPAMILTIADIS, DAVID FISCHER, MICHAEL SCHROEPFER, and DAVID WEHNER, Defendants,

-and-

FACEBOOK, INC., Nominal Defendant.

C.A. No. 2018-0307-JRS

PUBLIC INSPECTION VERSION FILED AUGUST 6, 2021

SECOND AMENDED VERIFIED STOCKHOLDER DERIVATIVE COMPLAINT

TABLE OF CONTENTS

I. SUMMARY OF THE ACTION
II. JURISDICTION AND VENUE
III. PARTIES
A. Plaintiffs
B. Director Defendants
C. Officer Defendants

Like It or Not, Trump Has a Point: FISA Reform and the Appearance of Partisanship in Intelligence Investigations by Stewart Baker

To protect the country from existential threats, its intelligence capabilities need to be extraordinary -- and extraordinarily intrusive. The more intrusive they are, the greater the risk they will be abused. So, since the attacks of 9/11, debate over civil liberties has focused almost exclusively on the threat that intelligence abuses pose to individuals and disfavored minorities. That made sense when our intelligence agencies were focused on terrorism carried out by small groups of individuals. But a look around the world shows that intelligence agencies can be abused in ways that are even more dangerous, not just to individuals but to democracy. Using security agencies to surveil and suppress political opponents is always a temptation for those in power. It is a temptation from which the United States has not escaped. The FBI’s director for life, J. Edgar Hoover, famously maintained his power by building files on numerous Washington politicians and putting his wiretapping and investigative capabilities at the service of Presidents pursuing political vendettas. That risk is making a comeback. The United States used the full force of its intelligence agencies to hold terrorism at bay for twenty years, but we paid too little attention to geopolitical adversaries who are now chipping away at our strengths in ways we did not expect. As American intelligence reshapes itself to deal with new challenges from Russia and China, it is no longer hunting small groups of terrorists in distant deserts.

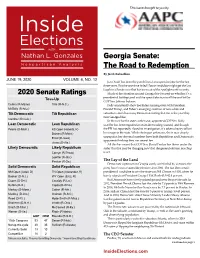

June 19, 2020 Volume 4, No

JUNE 19, 2020 VOLUME 4, NO. 12

Georgia Senate: The Road to Redemption

By Jacob Rubashkin

Jon Ossoff has been the punchline of an expensive joke for the last three years. But the one-time failed House candidate might get the last laugh in a Senate race that has been out of the spotlight until recently. Much of the attention around Georgia has focused on whether it’s a presidential battleground and the special election to fill the seat left by GOP Sen. Johnny Isakson. Polls consistently show Joe Biden running even with President Donald Trump, and Biden’s emerging coalition of non-white and suburban voters has many Democrats feeling that this is the year they turn Georgia blue. In the race for the state’s other seat, appointed-GOP Sen. Kelly Loeffler has been engulfed in an insider trading scandal, and though the FBI has reportedly closed its investigation, it’s taken a heavy toll on her image in the state. While she began unknown, she is now deeply unpopular; her abysmal numbers have both Republican and Democratic opponents thinking they can unseat her. All this has meant that GOP Sen. David Perdue has flown under the radar. But that may be changing now that the general election matchup is set.

[Sidebar table: 2020 Senate Ratings. Categories: Toss-Up, Tilt Democratic, Tilt Republican, Lean Democratic, Lean Republican, Likely Democratic, Likely Republican. Seats listed: Collins (R-Maine), Tillis (R-N.C.), McSally (R-Ariz.), Gardner (R-Colo.), Peters (D-Mich.), KS Open (Roberts, R), Daines (R-Mont.), Ernst (R-Iowa), Jones (D-Ala.), Cornyn (R-Texas).]

Investigation Into the Use of Data Analytics in Political Campaigns

Information Commissioner’s Office

Investigation into the use of data analytics in political campaigns

Investigation update, 11 July 2018

Contents

Executive summary
1. Introduction
2. The investigation
3. Regulatory enforcement action and criminal offences
3.1 Failure to properly comply with the Data Protection Principles;
3.2 Failure to properly comply with the Privacy and Electronic Communications Regulations (PECR);
3.3 Section 55 offences under the Data Protection Act 1998
4. Interim update
4.1 Political parties
4.2 Social media platforms

Sheila Jackson-Lee Statement

The University of Houston provided the following statement from Elwyn C. Lee, Jackson Lee's husband.

"In response to your inquiry, the University of Houston has received the following Congressional earmarks, either sponsored or co-sponsored by Rep. Sheila Jackson Lee:

FY 2011
Bill: Defense. Project: Carbon Composite Thin Films for Power Generation and Energy Storage. Amount: $1 million. Sponsor: Sheila Jackson Lee

FY 2010
Bill: Energy and Water. Project: National Wind Energy Center. Amount: $2 million. Sponsor: Kay Bailey Hutchison, Al Green, Gene Green, Lee
Bill: Labor-HHS. Project: Teacher Training and Professional Development. Amount: $400,000. Sponsor: Lee

FY 2009
Bill: Energy and Water. Project: National Wind Energy Center. Amount: $2,378,750. Sponsor: Al Green, Gene Green, Lee
Bill: Energy and Water. Project: Center for Clean Fuels and Power Generation. Amount: $475,750. Sponsor: Lee and Ted Poe

As best I can recall, I came to UH in January 1978 to teach law. In fall 1989 I took an interim assignment to revive the African American Studies Program. I did that continuously through fall 1990, which was for 3 semesters and a summer. In 1991 I was appointed interim Vice President for Student Affairs and, later that year, Vice President for Student Affairs. In 1998 the Vice Chancellor’s title was added. In March 2011 I physically moved into my current office as Vice President for Community Relations & Institutional Access. At no time in any of these positions have I been involved, directly or indirectly, in securing Congressional earmarks for the University of Houston, nor have my duties and responsibilities in any way involved securing Congressional earmarks.

Presidential Fatigue Hits Gen Z Users, Shows Oath's News Data

By [Oath News Team] -- TBC

Source: Oath News Analytics - Longitudinal Trends across Generation X, Millennial, and Generation Z

The common assumption is that anyone engaged in current events today is focused on U.S. politics, but the data shows that it actually depends on your generation. Gen Z is focused on a diverse array of issues and is largely isolated from the obsessive focus on national politics seen in other generations. Since they are the next generation to come online as voters (beginning in 2018 for the oldest Gen Zers), should we be concerned for the next election? Oath News data on longitudinal trends across Gen X, Millennials and Gen Z show that while the November 8th presidential election drew people from all age groups on presidential topics, trends for Gen X and Millennials are also similar. Gen Z has the most diverse interest over time and was the only group with significant discussion in international politics and society-related stories. The US elections, Brexit, France’s landmark presidential election, and the unfortunate terrorist attacks over the past year prompted us to dig deeper into our news platform, to see how these events may have impacted our communities. This analysis was conducted through a review of over 100 million comments, of which 3 million were analyzed to define the top 10 trending US-hosted articles per month ranked by total comments. Included in this analysis were comments generated within the flagship mobile app Newsroom iOS and Android, and across our biggest news platforms Yahoo.com and Yahoo News.

"Make America Great Again Rally" in Cedar Rapids, Iowa June 21, 2017

Administration of Donald J. Trump, 2017 Remarks at a "Make America Great Again Rally" in Cedar Rapids, Iowa June 21, 2017 Audience members. U.S.A.! U.S.A.! U.S.A.! The President. Thank you, everybody. It is great to be back in the incredible, beautiful, great State of Iowa, home of the greatest wrestlers in the world, including our friend, Dan Gable. Some of the great, great wrestlers of the world, right? We love those wrestlers. It's always terrific to be able to leave that Washington swamp and spend time with the truly hard-working people. We call them American patriots, amazing people. I want to also extend our congratulations this evening to Karen Handel of Georgia. And we can't forget Ralph Norman in South Carolina. He called me, and I called him. He said, you know, last night I felt like the forgotten man. But he won, and he won really beautifully, even though most people—a lot of people didn't show up because they thought he was going to win by so much. It's always dangerous to have those big leads. But he won very easily, and he is a terrific guy. And I'll tell you what, Karen is going to be really incredible. She is going to be joining some wonderful people and doing some wonderful work, including major, major tax cuts and health care and lots of things. Going to be reducing crime, and we're securing that Second Amendment. I told you about that. And that looks like it's in good shape with Judge Gorsuch.