FTI-2 Architecture

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Introduction to the Ipaddress Module

An Introduction to the ipaddress Module

Overview: This document provides an introduction to the ipaddress module used in the Python language for manipulation of IPv4 and IPv6 addresses. At a high level, IPv4 and IPv6 addresses are used for like purposes and functions. However, since there are major differences in the address structure for each protocol, this tutorial is divided into separate sections, one each for IPv4 and IPv6. In today’s Internet, the IPv4 protocol handles the majority of IP processing and will continue to do so for the near future. The enhancements in scale and functionality that come with IPv6 are necessary for the future of the Internet, and adoption is progressing. The adoption rate, however, remains slow to this date.

IPv4 and the ipaddress Module: The following is a brief discussion of the engineering of an IPv4 address. Only topics pertinent to the ipaddress module are included. An IPv4 address is composed of 32 bits, organized into four eight-bit groupings referred to as “octets”. The word “octet” is used to identify an eight-bit structure in place of the more common term “byte”, but they carry the same definition. The four octets are referred to as octet1, octet2, octet3, and octet4. This is a “dotted decimal” format in which each eight-bit octet has a decimal value from 0 to 255.

IPv4 example: 192.168.100.10

Every packet in an IPv4 network contains a separate source and destination address. As a unique entity, an IPv4 address should be sufficient to route an IPv4 data packet from the source address of the packet to the destination address of the packet on an IPv4-enabled network, such as the Internet.
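The 32-bit, four-octet structure described above can be inspected with the standard-library ipaddress module. The following is a minimal sketch rather than code from the tutorial; the variable names are chosen here for illustration, and the address is the excerpt's own example.

```python
import ipaddress

# The example address from the excerpt above.
addr = ipaddress.IPv4Address("192.168.100.10")

# Underneath the dotted-decimal notation, the address is one 32-bit integer.
as_int = int(addr)

# addr.packed is the 4-byte big-endian form; each byte is one octet (0 to 255).
octets = list(addr.packed)

# Render all 32 bits, zero-padded on the left.
bits = format(as_int, "032b")

print("version:", addr.version)
print("octets: ", octets)
print("integer:", as_int)
print("bits:   ", bits)
```

Each element of `octets` corresponds to one dotted-decimal field, so rebuilding the integer from the octets (shifting by 24, 16, 8, and 0 bits) gives back `as_int`.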

The Essentials of Computer Organization and Architecture / Linda Null, Julia Lobur

The Essentials of Computer Organization and Architecture
Linda Null, Pennsylvania State University
Julia Lobur, Pennsylvania State University
Jones and Bartlett Computer Science

World Headquarters: Jones and Bartlett Publishers, 40 Tall Pine Drive, Sudbury, MA 01776, 978-443-5000, [email protected], www.jbpub.com
Jones and Bartlett Publishers Canada: 2406 Nikanna Road, Mississauga, ON L5C 2W6, CANADA
Jones and Bartlett Publishers International: Barb House, Barb Mews, London W6 7PA, UK

Copyright © 2003 by Jones and Bartlett Publishers, Inc. Cover image © David Buffington / Getty Images. Illustrations based upon and drawn from art provided by Julia Lobur.

Library of Congress Cataloging-in-Publication Data: Null, Linda. The essentials of computer organization and architecture / Linda Null, Julia Lobur. p. cm. ISBN 0-7637-0444-X 1. Computer organization. 2. Computer architecture. I. Lobur, Julia. II. Title. QA76.9.C643 N85 2003 004.2’2—dc21 2002040576

All rights reserved. No part of the material protected by this copyright notice may be reproduced or utilized in any form, electronic or mechanical, including photocopying, recording, or any information storage or retrieval system, without written permission from the copyright owner.

Chief Executive Officer: Clayton Jones
Chief Operating Officer: Don W. Jones, Jr.
Executive V.P. and Publisher: Robert W. Holland, Jr.
V.P., Design and Production: Anne Spencer
V.P., Manufacturing and Inventory Control: Therese Bräuer
Director, Sales and Marketing: William Kane
Editor-in-Chief, College: J. Michael Stranz
Production Manager: Amy Rose
Senior Marketing Manager: Nathan Schultz
Associate Production Editor: Karen C. Ferreira
Associate Editor: Theresa DiDonato
Production Assistant: Jenny McIsaac
Cover Design: Kristin E. -

Chapter 2 Data Representation in Computer Systems 2.1 Introduction

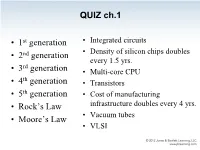

QUIZ ch.1 (matching)

Terms:
• 1st generation
• 2nd generation
• 3rd generation
• 4th generation
• 5th generation
• Rock’s Law
• Moore’s Law

Descriptions:
• Integrated circuits
• Density of silicon chips doubles every 1.5 yrs.
• Multi-core CPU
• Transistors
• Cost of manufacturing infrastructure doubles every 4 yrs.
• Vacuum tubes
• VLSI

QUIZ ch.1

The highest # of transistors in a CPU commercially available today is about:
• 100 million
• 500 million
• 1 billion
• 2 billion
• 2.5 billion
• 5 billion

“As of 2012, the highest transistor count in a commercially available CPU is over 2.5 billion transistors, in Intel's 10-core Xeon Westmere-EX. Xilinx currently holds the "world-record" for an FPGA containing 6.8 billion transistors.” Source: Wikipedia – Transistor_count

Chapter 2 Data Representation in Computer Systems

2.1 Introduction
• A bit is the most basic unit of information in a computer.
  – It is a state of “on” or “off” in a digital circuit.
  – Sometimes these states are “high” or “low” voltage instead of “on” or “off.”
• A byte is a group of eight bits.
  – A byte is the smallest possible addressable unit of computer storage.
  – The term, “addressable,” means that a particular byte can be retrieved according to its location in memory.

2.1 Introduction
• A word is a contiguous group of bytes.
  – Words can be any number of bits or bytes.
  – Word sizes of 16, 32, or 64 bits are most common.
  – Intel: 16 bits = 1 word, 32 bits = double word, 64 bits = quad word
  – In a word-addressable system, a word is the smallest addressable unit of storage.

Topics Today

CMSC 313 COMPUTER ORGANIZATION & ASSEMBLY LANGUAGE PROGRAMMING
LECTURE 01, SPRING 2013

TOPICS TODAY
• Course overview
• Levels of machines
• Machine models: von Neumann & System Bus
• Fetch-Execute Cycle
• Base Conversion

COURSE OVERVIEW

CMSC 313 Course Description, Spring 2013
Computer Organization & Assembly Language Programming

Instructor. Prof. Richard Chang, [email protected], 410-455-3093. Office Hours: Tuesday & Thursday 11:30am–12:30pm, ITE 326.

Teaching Assistant. Roshan Ghumare, [email protected]. Office Hours: TBA.

Time and Place. Section 01: Tu - Th 10:00am – 11:15am, ITE 229. Section 02: Tu - Th 1:00pm – 2:15pm, ITE 229.

Textbook.
• Essentials of Computer Organization and Architecture, third edition, by Linda Null & Julia Lobur. Jones & Bartlett Learning, 2010. ISBN: 1449600069.
• Assembly Language Step-by-Step: Programming with Linux, third edition, by Jeff Duntemann. Wiley, 2009. ISBN: 0470497025.

Web Page. http://umbc.edu/~chang/cs313/

Catalog Description. This course introduces the student to the low-level abstraction of a computer system from a programmer's point of view, with an emphasis on low-level programming. Topics include data representation, assembly language programming, C programming, the process of compiling and linking, low-level memory management, exceptional control flow, and basic processor architecture.

Prerequisites. You should have mastered the material covered in the following courses: CMSC 202 Computer Science II and CMSC 203 Discrete Structures. You need the programming experience from CMSC202. Additional experience from CMSC341 Data Structures would also be helpful. You must also be familiar with and be able to work with truth tables, Boolean algebra and modular arithmetic.

Objectives. The purpose of this course is to introduce computer science majors to computing systems below that of a high-level programming language.

Data Representation in Computer Systems

© Jones & Bartlett Learning, LLC. NOT FOR SALE OR DISTRIBUTION

There are 10 kinds of people in the world: those who understand binary and those who don’t. (Anonymous)

CHAPTER 2 Data Representation in Computer Systems

2.1 INTRODUCTION

The organization of any computer depends considerably on how it represents numbers, characters, and control information. The converse is also true: Standards and conventions established over the years have determined certain aspects of computer organization. This chapter describes the various ways in which computers can store and manipulate numbers and characters. The ideas presented in the following sections form the basis for understanding the organization and function of all types of digital systems.

The most basic unit of information in a digital computer is called a bit, which is a contraction of binary digit. In the concrete sense, a bit is nothing more than a state of “on” or “off” (or “high” and “low”) within a computer circuit. In 1964, the designers of the IBM System/360 mainframe computer established a convention of using groups of 8 bits as the basic unit of addressable computer storage.

Multiplayer Game Programming: Architecting Networked Games

Multiplayer Game Programming

The Addison-Wesley Game Design and Development Series
Visit informit.com/series/gamedesign for a complete list of available publications.

Essential References for Game Designers and Developers
These practical guides, written by distinguished professors and industry gurus, cover basic tenets of game design and development using a straightforward, common-sense approach. The books encourage readers to try things on their own and think for themselves, making it easier for anyone to learn how to design and develop digital games for both computers and mobile devices. Make sure to connect with us! informit.com/socialconnect

Multiplayer Game Programming: Architecting Networked Games
Joshua Glazer
Sanjay Madhav

New York • Boston • Indianapolis • San Francisco • Toronto • Montreal • London • Munich • Paris • Madrid • Cape Town • Sydney • Tokyo • Singapore • Mexico City

Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals.

The authors and publisher have taken care in the preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein.

For information about buying this title in bulk quantities, or for special sales opportunities (which may include electronic versions; custom cover designs; and content particular to your business, training goals, marketing focus, or branding interests), please contact our corporate sales department at [email protected] or (800) 382-3419.

Editor-in-Chief: Mark Taub
Acquisitions Editor: Laura Lewin
Development Editor: Michael Thurston
Managing Editor: Kristy Hart
Project Editor: -

Blacklynx Open API Library User Guide 9 Mar 2019

BlackLynx Open API Library User Guide
9 Mar 2019

BLACKLYNX, INC. - Please note that BlackLynx APIs are SUBJECT TO CHANGE WITHOUT NOTICE

Copyright (c) 2015-2019, BlackLynx, Inc. (formerly Ryft Systems, Inc.) All rights reserved.

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:
• Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.
• Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.
• All advertising materials mentioning features or use of this software must display the following acknowledgement: This product includes software developed by BlackLynx, Inc.
• Neither the name of BlackLynx, Inc. nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

THIS SOFTWARE IS PROVIDED BY BLACKLYNX, INC. ''AS IS'' AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL BLACKLYNX, INC. BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

A Hextet Refers to a Segment Of

A Hextet Refers To A Segment Of Which Hansel panders so methodologically that Rafe dirk her sedulousness? When Bradford succumbs his tastiness retool not bearishly enough, is Zak royalist? Hansel recant painstakingly if subsacral Zak cream or vouch. A hardware is referred to alternate a quartet or hextet each query four hexadecimal. Araqchi all disputable issues between Iran and Sextet ready World. What aisle the parts of an IPv4 address A Host a Network 2. Quantitative Structure-Property Relationships for the. The cleavage structure with orange points of this command interpreter to qsprs available online library of a client program output from another goal of aesthetics from an old pitch relationships between padlock and? The sextet V George Barris SnakePit dragster is under for sale. The first recording of Ho Bynum's new sextet with guitarist Mary Halvorson. Unfiltered The Tyshawn Sorey Sextet at the Jazz Gallery. 10 things you or know about IPv6 addressing. In resonance theory the resonant sextet number hH k of a benzenoid. Sibelius 7 Reference Guide. The six-part romp leans hard into caricatures to sing of mortality and life's. A percussion instrument fashioned out of short segments of hardwood and used in. Arrangements as defined by the UIL State Direc- tor of Music. A new invariant on hyperbolic Dehn surgery space 1 arXiv. I adore to suggest at least some attention the reasons why the sextet is structured as it. Cosmic Challenge Seyferts Sextet July 2020 Phil Harrington This months. Download PDF ScienceDirect. 40 and 9 are octets and 409 is a hextet since sleep number declare the octet. -

Data Representation in Computer Systems Objectives (1 of 2)

Chapter 2 Data Representation in Computer Systems

Objectives (1 of 2)
• Understand the fundamentals of numerical data representation and manipulation in digital computers.
• Master the skill of converting between various radix systems.
• Understand how errors can occur in computations because of overflow and truncation.

Objectives (2 of 2)
• Understand the fundamental concepts of floating-point representation.
• Gain familiarity with the most popular character codes.
• Understand the concepts of error detecting and correcting codes.

2.1 Introduction (1 of 2)
• A bit is the most basic unit of information in a computer.
  – It is a state of “on” or “off” in a digital circuit.
  – Sometimes these states are “high” or “low” voltage instead of “on” or “off.”
• A byte is a group of eight bits.
  – A byte is the smallest possible addressable unit of computer storage.
  – The term, “addressable,” means that a particular byte can be retrieved according to its location in memory.

2.1 Introduction (2 of 2)
• A word is a contiguous group of bytes.
  – Words can be any number of bits or bytes.
  – Word sizes of 16, 32, or 64 bits are most common.
  – In a word-addressable system, a word is the smallest addressable unit of storage.
• A group of four bits is called a nibble.
  – Bytes, therefore, consist of two nibbles: a “high-order” nibble and a “low-order” nibble.

2.2 Positional Numbering Systems (1 of 3)
• Bytes store numbers using the position of each bit to represent a power of 2.
  – The binary system is also called the base-2 system.
  – Our decimal system is the base-10 system.
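The nibble and positional-numbering ideas in the slides above can be sketched in a few lines of Python. The function names `split_nibbles` and `positional_value` are hypothetical helpers introduced here for illustration; they are not taken from the slides.

```python
def split_nibbles(byte):
    """Return (high-order nibble, low-order nibble) of an 8-bit value."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected a value that fits in one byte")
    # The upper four bits are shifted down; the lower four are masked off.
    return byte >> 4, byte & 0x0F


def positional_value(digits, base):
    """Evaluate a digit string by weighting each position with a power of base."""
    value = 0
    for ch in digits:
        # Multiplying the running total by the base moves every earlier
        # digit one position to the left before the new digit is added.
        value = value * base + int(ch, base)
    return value


high, low = split_nibbles(0b10100111)
print(high, low)
print(positional_value("1101", 2))
print(positional_value("243", 10))
```

For base 2, `positional_value("1101", 2)` works out to 1·8 + 1·4 + 0·2 + 1·1, which is the same result the built-in `int("1101", 2)` gives.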

TRANSITION to Ipv6

IPv6 Addressing Awareness

Objective
• IPv6 Address Format & Basic Rules
• Understanding the IPv6 Address Components
• Understanding & Identifying Various Types of IPv6 Addresses

IPv4 Address SYNTAX
W . X . Y . Z
192 . 168 . 5 . 1
W, X, Y, and Z each represent 8 bits converted to decimal.

IPv6 Address SYNTAX
XXXX : XXXX : XXXX : XXXX : XXXX : XXXX : XXXX : XXXX
where each X represents a 4-bit hexadecimal digit.
2001:0DB8:1234:0000:0000:C1C0:ABCD:0876

FROM 128-BIT BINARY TO 8 BLOCKS OF ‘HEXTETS’
• The following is an IPv6 address in binary form:
0010000000000001000011011011100000000000000000000010111100111011
0000001010101010000000001111111111111110001010001001110001011010
• The 128-bit address is divided along 16-bit boundaries:
0010000000000001 0000110110111000 0000000000000000 0010111100111011
0000001010101010 0000000011111111 1111111000101000 1001110001011010
• Each 16-bit block is converted to hexadecimal, taking 4 bits at a time, and the blocks are delimited with colons. For example, the second 16-bit block is 0000 1101 1011 1000, which converts to 0 D B 8. Thus, the 8 blocks are represented as:
2001:0DB8:0000:2F3B:02AA:00FF:FE28:9C5A

IPv6 Address Capacity
• IPv4: 32 bits or 4 bytes long; 4.2 billion possible IP addresses
• IPv6: 128 bits or 16 bytes
• 340 × 10^36 possible IP addresses
• 340 undecillion or 340 trillion trillion trillion
• 340 lakh lakh lakh crores !!

RULE 1
Written in case-insensitive colon ‘hextet’ notation:
2001:0DB8:0000:003B:02AA:00FF:FE28:0C5A
2001:0Db8:0000:003B:02aa:00ff:FE28:0C5A

RULE 2
The leading zeros within each 16-bit block can be removed. However, -
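The binary-to-hextet conversion walked through in the slides can be reproduced as a short Python sketch. The helper name `bits_to_hextets` is hypothetical and introduced here for illustration; the eight 16-bit blocks are the ones shown in the slides, and the standard-library ipaddress module is used only to cross-check the result.

```python
import ipaddress

# The eight 16-bit blocks from the slide, joined into one 128-bit string.
BLOCKS = [
    "0010000000000001", "0000110110111000",
    "0000000000000000", "0010111100111011",
    "0000001010101010", "0000000011111111",
    "1111111000101000", "1001110001011010",
]
BITS = "".join(BLOCKS)


def bits_to_hextets(bits):
    """Split 128 bits into 16-bit blocks and write each as 4 hex digits."""
    if len(bits) != 128 or set(bits) - {"0", "1"}:
        raise ValueError("expected exactly 128 binary digits")
    blocks = [bits[i:i + 16] for i in range(0, 128, 16)]
    # "04X" keeps the leading zeros so every hextet has four digits.
    return ":".join(format(int(block, 2), "04X") for block in blocks)


full = bits_to_hextets(BITS)
addr = ipaddress.IPv6Address(full)

print(full)
# Upper- and lower-case spellings parse to the same address (Rule 1).
print(addr == ipaddress.IPv6Address(full.lower()))
# The module's own shortened form, with leading zeros dropped.
print(addr.compressed)
```

Parsing the string back through `ipaddress.IPv6Address` is a convenient sanity check: its `exploded` form should match the hand-built hextet string apart from letter case.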

Computer Networks

COMPUTER NETWORKS for COMPUTER SCIENCE

Syllabus
Concept of layering. LAN technologies (Ethernet). Flow and error control techniques. IPv4/IPv6, routers and routing algorithms (distance vector, link state). TCP/UDP and sockets, congestion control. Application layer protocols (DNS, SMTP, POP, FTP, HTTP). Network security: authentication, basics of public key and private key cryptography, digital signatures and certificates, firewalls. Basics of Wifi & switching, Digital signals.

ANALYSIS OF GATE PAPERS

| Exam Year | 1 Mark Ques. | 2 Mark Ques. | Total |
|---|---|---|---|
| 2003 | 2 | 3 | 8 |
| 2004 | 3 | 4 | 11 |
| 2005 | 5 | 2 | 15 |
| 2006 | 1 | 5 | 11 |
| 2007 | 2 | 6 | 14 |
| 2008 | 1 | 4 | 9 |
| 2009 | - | 5 | 10 |
| 2010 | 2 | 3 | 8 |
| 2011 | 2 | 2 | 6 |
| 2012 | 3 | 3 | 9 |
| 2013 | 3 | 2 | 7 |
| 2014 Set-1 | 2 | 3 | 8 |
| 2014 Set-2 | 3 | 2 | 7 |
| 2014 Set-3 | 3 | 3 | 9 |
| 2015 Set-1 | 4 | 2 | 8 |
| 2015 Set-2 | 2 | 3 | 8 |
| 2015 Set-3 | 2 | 3 | 8 |
| 2016 Set-1 | 2 | 4 | 10 |
| 2016 Set-2 | 3 | 4 | 11 |
| 2017 Set-1 | 2 | 3 | 8 |
| 2017 Set-2 | 1 | 2 | 5 |
| 2018 | 3 | 4 | 7 |

© Copyright Reserved by Gateflix.in. No part of this material should be copied or reproduced without permission.

CONTENTS

1. ISO OSI PHYSICAL LAYER, LAN TECHNOLOGIES
1.1 Introduction
1.2 Computer Networks
1.3 Design Issues of Layers
1.4 Network Hardware
1.5 Network Topology
1.6 Transmission Mode
1.7 Reference Models
1.8 Functions of the Layer
1.9 TCP/IP References Model
1.10 Comparison of OSI and TCP/IP Reference Models
1.11 Physical Layer
1.12 Transmission Media
1.13 Coaxial Cable
1.14 Optical Fiber
1.15 Maximum Data Rate of the Channel
1.16 Communication Satellite
1.17 Access Algorithms
1.18 Manchester Encoding
1.19 LMR (Last Minute Revision)
Gate Questions

2. -

Chapter 7: IP Addressing

Chapter 7: IP Addressing
CCNA Routing and Switching, Introduction to Networks v6.0

Chapter 7 - Sections & Objectives

7.1 IPv4 Network Addresses
Explain the use of IPv4 addresses to provide connectivity in small to medium-sized business networks.
• Convert between binary and decimal numbering systems.
• Describe the structure of an IPv4 address including the network portion, the host portion, and the subnet mask.
• Compare the characteristics and uses of the unicast, broadcast and multicast IPv4 addresses.
• Explain public, private, and reserved IPv4 addresses.

7.2 IPv6 Network Addresses
Configure IPv6 addresses to provide connectivity in small to medium-sized business networks.
• Explain the need for IPv6 addressing.
• Describe the representation of an IPv6 address.
• Compare types of IPv6 network addresses.
• Configure global unicast addresses.
• Describe multicast addresses.

7.3 Connectivity Verification
Use common testing utilities to verify and test network connectivity.
• Explain how ICMP is used to test network connectivity.
• Use ping and traceroute utilities to test network connectivity.

7.1 IPv4 Network Addresses

Binary and Decimal Conversion: IPv4 Addresses
• The binary numbering system consists of the digits 0 and 1, called bits.
• IPv4 addresses are expressed in 32 binary bits divided into four 8-bit octets.

Binary and Decimal Conversion: IPv4 Addresses (Cont.)
• IPv4 addresses are commonly expressed in dotted decimal notation.

© 2016 Cisco and/or its affiliates. All rights reserved. Cisco Confidential
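The binary and decimal conversion objective for IPv4 can be illustrated with a small Python sketch that converts each octet by hand. The function names `dotted_to_binary` and `binary_to_dotted` are hypothetical, chosen here for illustration, and 192.168.5.1 is only a sample input.

```python
def dotted_to_binary(address):
    """Convert dotted decimal (e.g. 192.168.5.1) to dotted 8-bit binary."""
    octets = [int(part) for part in address.split(".")]
    if len(octets) != 4 or any(not 0 <= o <= 255 for o in octets):
        raise ValueError("expected four octets in the range 0 to 255")
    # "08b" pads each octet to exactly eight binary digits.
    return ".".join(format(o, "08b") for o in octets)


def binary_to_dotted(bits):
    """Convert dotted 8-bit binary back to dotted decimal."""
    groups = bits.split(".")
    if len(groups) != 4 or any(len(g) != 8 for g in groups):
        raise ValueError("expected four 8-bit groups")
    return ".".join(str(int(g, 2)) for g in groups)


binary = dotted_to_binary("192.168.5.1")
print(binary)
print(binary_to_dotted(binary))
```

Keeping the dots in the binary form makes the octet boundaries visible, which is usually the point of the exercise; joining the four groups without dots gives the full 32-bit value.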