Data Representation in Computer Systems

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Introduction to the Ipaddress Module

Introduction to the ipaddress Module An Introduction to the ipaddress Module Overview: This document provides an introduction to the ipaddress module used in the Python language for manipulation of IPv4 and IPv6 addresses. At a high level, IPv4 and IPv6 addresses are used for like purposes and functions. However, since there are major differences in the address structure for each protocol, this tutorial has separated into separate sections, one each for IPv4 and IPv6. In today’s Internet, the IPv4 protocol controls the majority of IP processing and will remain so for the near future. The enhancements in scale and functionality that come with IPv6 are necessary for the future of the Internet and adoption is progressing. The adoption rate, however, remains slow to this date. IPv4 and the ipaddress Module: The following is a brief discussion of the engineering of an IPv4 address. Only topics pertinent to the ipaddress module are included. A IPv4 address is composed of 32 bits, organized into four eight bit groupings referred to as “octets”. The word “octet” is used to identify an eight-bit structure in place of the more common term “byte”, but they carry the same definition. The four octets are referred to as octet1, octet2, octet3, and octet4. This is a “dotted decimal” format where each eight-bit octet can have a decimal value based on eight bits from zero to 255. IPv4 example: 192.168.100.10 Every packet in an IPv4 network contains a separate source and destination address. As a unique entity, a IPv4 address should be sufficient to route an IPv4 data packet from the source address of the packet to the destination address of the packet on a IPv4 enabled network, such as the Internet. -

The Essentials of Computer Organization and Architecture / Linda Null, Julia Lobur

the essentials of Linda Null and Julia Lobur JONES AND BARTLETT COMPUTER SCIENCE the essentials of Linda Null Pennsylvania State University Julia Lobur Pennsylvania State University World Headquarters Jones and Bartlett Publishers Jones and Bartlett Publishers Jones and Bartlett Publishers International 40 Tall Pine Drive Canada Barb House, Barb Mews Sudbury, MA 01776 2406 Nikanna Road London W6 7PA 978-443-5000 Mississauga, ON L5C 2W6 UK [email protected] CANADA www.jbpub.com Copyright © 2003 by Jones and Bartlett Publishers, Inc. Cover image © David Buffington / Getty Images Illustrations based upon and drawn from art provided by Julia Lobur Library of Congress Cataloging-in-Publication Data Null, Linda. The essentials of computer organization and architecture / Linda Null, Julia Lobur. p. cm. ISBN 0-7637-0444-X 1. Computer organization. 2. Computer architecture. I. Lobur, Julia. II. Title. QA76.9.C643 N85 2003 004.2’2—dc21 2002040576 All rights reserved. No part of the material protected by this copyright notice may be reproduced or utilized in any form, electronic or mechanical, including photocopying, recording, or any information storage or retrieval system, without written permission from the copyright owner. Chief Executive Officer: Clayton Jones Chief Operating Officer: Don W. Jones, Jr. Executive V.P. and Publisher: Robert W. Holland, Jr. V.P., Design and Production: Anne Spencer V.P., Manufacturing and Inventory Control: Therese Bräuer Director, Sales and Marketing: William Kane Editor-in-Chief, College: J. Michael Stranz Production Manager: Amy Rose Senior Marketing Manager: Nathan Schultz Associate Production Editor: Karen C. Ferreira Associate Editor: Theresa DiDonato Production Assistant: Jenny McIsaac Cover Design: Kristin E. -

Chapter 2 Data Representation in Computer Systems 2.1 Introduction

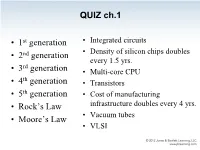

QUIZ ch.1 • 1st generation • Integrated circuits • 2nd generation • Density of silicon chips doubles every 1.5 yrs. rd • 3 generation • Multi-core CPU th • 4 generation • Transistors • 5th generation • Cost of manufacturing • Rock’s Law infrastructure doubles every 4 yrs. • Vacuum tubes • Moore’s Law • VLSI QUIZ ch.1 The highest # of transistors in a CPU commercially available today is about: • 100 million • 500 million • 1 billion • 2 billion • 2.5 billion • 5 billion QUIZ ch.1 The highest # of transistors in a CPU commercially available today is about: • 100 million • 500 million “As of 2012, the highest transistor count in a commercially available CPU is over 2.5 • 1 billion billion transistors, in Intel's 10-core Xeon • 2 billion Westmere-EX. • 2.5 billion Xilinx currently holds the "world-record" for an FPGA containing 6.8 billion transistors.” Source: Wikipedia – Transistor_count Chapter 2 Data Representation in Computer Systems 2.1 Introduction • A bit is the most basic unit of information in a computer. – It is a state of “on” or “off” in a digital circuit. – Sometimes these states are “high” or “low” voltage instead of “on” or “off..” • A byte is a group of eight bits. – A byte is the smallest possible addressable unit of computer storage. – The term, “addressable,” means that a particular byte can be retrieved according to its location in memory. 5 2.1 Introduction A word is a contiguous group of bytes. – Words can be any number of bits or bytes. – Word sizes of 16, 32, or 64 bits are most common. – Intel: 16 bits = 1 word, 32 bits = double word, 64 bits = quad word – In a word-addressable system, a word is the smallest addressable unit of storage. -

Exploring Unit Fractions

Page 36 Exploring Unit Fractions Alan Christison [email protected] INTRODUCTION In their article “The Beauty of Cyclic Numbers”, Duncan Samson and Peter Breetzke (2014) explored, among other things, unit fractions and their decimal expansions6. They showed that for a unit fraction to terminate, its denominator must be expressible in the form 2푛. 5푚 where 푛 and 푚 are non-negative integers, both not simultaneously equal to zero. In this article I take the above observation as a starting point and explore it further. FURTHER OBSERVATIONS 1 For any unit fraction of the form where 푛 and 푚 are non-negative integers, both not 2푛.5푚 simultaneously equal to zero, the number of digits in the terminating decimal will be equal to the larger 1 1 of 푛 or 푚. By way of example, has 4 terminating decimals (0,0625), has 5 terminating decimals 24 55 1 (0,00032), and has 6 terminating decimals (0,000125). The reason for this can readily be explained by 26.53 1 converting the denominators into a power of 10. Consider for example which has six terminating 26.53 decimals. In order to convert the denominator into a power of 10 we need to multiply both numerator and denominator by 53. Thus: 1 1 53 53 53 125 = × = = = = 0,000125 26. 53 26. 53 53 26. 56 106 1000000 1 More generally, consider a unit fraction of the form where 푛 > 푚. Multiplying both the numerator 2푛.5푚 and denominator by 5푛−푚 gives: 1 1 5푛−푚 5푛−푚 5푛−푚 = × = = 2푛. 5푚 2푛. -

0.999… = 1 an Infinitesimal Explanation Bryan Dawson

0 1 2 0.9999999999999999 0.999… = 1 An Infinitesimal Explanation Bryan Dawson know the proofs, but I still don’t What exactly does that mean? Just as real num- believe it.” Those words were uttered bers have decimal expansions, with one digit for each to me by a very good undergraduate integer power of 10, so do hyperreal numbers. But the mathematics major regarding hyperreals contain “infinite integers,” so there are digits This fact is possibly the most-argued- representing not just (the 237th digit past “Iabout result of arithmetic, one that can evoke great the decimal point) and (the 12,598th digit), passion. But why? but also (the Yth digit past the decimal point), According to Robert Ely [2] (see also Tall and where is a negative infinite hyperreal integer. Vinner [4]), the answer for some students lies in their We have four 0s followed by a 1 in intuition about the infinitely small: While they may the fifth decimal place, and also where understand that the difference between and 1 is represents zeros, followed by a 1 in the Yth less than any positive real number, they still perceive a decimal place. (Since we’ll see later that not all infinite nonzero but infinitely small difference—an infinitesimal hyperreal integers are equal, a more precise, but also difference—between the two. And it’s not just uglier, notation would be students; most professional mathematicians have not or formally studied infinitesimals and their larger setting, the hyperreal numbers, and as a result sometimes Confused? Perhaps a little background information wonder . -

Topics Today

CMSC 313 COMPUTER ORGANIZATION & ASSEMBLY LANGUAGE PROGRAMMING LECTURE 01, SPRING 2013 TOPICS TODAY • Course overview • Levels of machines • Machine models: von Neumann & System Bus • Fetch-Execute Cycle • Base Conversion COURSE OVERVIEW CMSC 313 Course Description Spring 2013 Computer Organization & Assembly Language Programming Instructor. Prof. Richard Chang, [email protected], 410-455-3093. Office Hours: Tuesday & Thursday 11:30am–12:30pm, ITE 326. Teaching Assistant. Roshan Ghumare, [email protected] Office Hours: TBA Time and Place. Section 01: Tu - Th 10:00am – 11:15am, ITE 229. Section 02: Tu - Th 1:00pm – 2:15pm, ITE 229. Textbook. • Essentials of Computer Organization and Architecture, third edition, by Linda Null & Julia Lobur. Jones & Bartlett Learning, 2010. ISBN: 1449600069. • Assembly Language Step-by-Step: Programming with Linux, third edition, by Jeff Duntemann. Wiley, 2009. ISBN: 0470497025. Web Page. http://umbc.edu/~chang/cs313/ Catalog Description. This course introduces the student to the low-level abstraction of a computer system from a programmer's point of view, with an emphasis on low-level programming. Topics include data representation, assembly language programming, C programming, the process of compiling and linking, low-level memory management, exceptional control flow, and basic processor architecture. Prerequisites. You should have mastered the material covered in the following courses: CMSC 202 Computer Science II and CMSC 203 Discrete Structures. You need the programming experience from CMSC202. Additional experience from CMSC341 Data Structures would also be helpful. You must also be familiar with and be able to work with truth tables, Boolean algebra and modular arithmetic. Objectives. The purpose of this course is to introduce computer science majors to computing systems below that of a high-level programming language. -

A Theorem on Repeating Decimals

University of Nebraska - Lincoln DigitalCommons@University of Nebraska - Lincoln Faculty Publications, Department of Mathematics Mathematics, Department of 6-1967 A THEOREM ON REPEATING DECIMALS William G. Leavitt University of Nebraska - Lincoln Follow this and additional works at: https://digitalcommons.unl.edu/mathfacpub Part of the Mathematics Commons Leavitt, William G., "A THEOREM ON REPEATING DECIMALS" (1967). Faculty Publications, Department of Mathematics. 48. https://digitalcommons.unl.edu/mathfacpub/48 This Article is brought to you for free and open access by the Mathematics, Department of at DigitalCommons@University of Nebraska - Lincoln. It has been accepted for inclusion in Faculty Publications, Department of Mathematics by an authorized administrator of DigitalCommons@University of Nebraska - Lincoln. The American Mathematical Monthly, Vol. 74, No. 6 (Jun. - Jul., 1967), pp. 669-673. Copyright 1967 Mathematical Association of America 19671 A THEOREM ON REPEATING DECIMALS A THEOREM ON REPEATING DECIMALS W. G. LEAVITT, University of Nebraska It is well known that a real number is rational if and only if its decimal ex- pansion is a repeating decimal. For example, 2/7 =.285714285714 . Many students also know that if n/m is a rational number reduced to lowest terms (that is, n and m relatively prime), then the number of repeated digits (we call this the length of period) depends only on m. Thus all fractions with denominator 7 have length of period 6. A sharp-eyed student may also notice that when the period (that is, the repeating digits) for 2/7 is split into its two half-periods 285 and 714, then the sum 285+714=999 is a string of nines. -

Repeating Decimals Warm up Problems. A. Find the Decimal Expressions for the Fractions 1 7 , 2 7 , 3 7 , 4 7 , 5 7 , 6 7 . What

Repeating Decimals Warm up problems. a. Find the decimal expressions for the fractions 1 2 3 4 5 6 ; ; ; ; ; : 7 7 7 7 7 7 What interesting things do you notice? b. Repeat the problem for the fractions 1 2 12 ; ;:::; : 13 13 13 What is interesting about these answers? Every fraction has a decimal representation. These representations either terminate (e.g. 3 8 = 0:375) or or they do not terminate but are repeating (e.g. 3 = 0:428571428571 ::: = 0:428571; ) 7 where the bar over the six block set of digits indicates that that block repeats indefinitely. 1 1. Perform the by hand, long divisions to calculate the decimal representations for 13 2 and 13 . Use these examples to help explain why these fractions have repeating decimals. 2. With these examples can you predict the \interesting things" that you observed in 1 warm up problem b.? Look at the long division for 7 . Does this example confirm your reasoning? 1 3. It turns out that 41 = 0:02439: Write out the long division that shows this and then find (without dividing) the four other fractions whose repeating part has these five digits in the same cyclic order. 4. As it turns out, if you divide 197 by 26, you get a quotient of 7 and a remainder of 15. How can you use this information to find the result when 2 · 197 = 394 is divided by 26? When 5 · 197 = 985 is divided by 26? 5. Suppose that when you divide N by D, the quotient is Q and the remainder is R. -

Irrational Numbers and Rounding Off*

OpenStax-CNX module: m31341 1 Irrational Numbers and Rounding Off* Rory Adams Free High School Science Texts Project Mark Horner Heather Williams This work is produced by OpenStax-CNX and licensed under the Creative Commons Attribution License 3.0 1 Introduction You have seen that repeating decimals may take a lot of paper and ink to write out. Not only is that impossible, but writing numbers out to many decimal places or a high accuracy is very inconvenient and rarely gives practical answers. For this reason we often estimate the number to a certain number of decimal places or to a given number of signicant gures, which is even better. 2 Irrational Numbers Irrational numbers are numbers that cannot be written as a fraction with the numerator and denominator as integers. This means that any number that is not a terminating decimal number or a repeating decimal number is irrational. Examples of irrational numbers are: p p p 2; 3; 3 4; π; p (1) 1+ 5 2 ≈ 1; 618 033 989 tip: When irrational numbers are written in decimal form, they go on forever and there is no repeated pattern of digits. If you are asked to identify whether a number is rational or irrational, rst write the number in decimal form. If the number is terminated then it is rational. If it goes on forever, then look for a repeated pattern of digits. If there is no repeated pattern, then the number is irrational. When you write irrational numbers in decimal form, you may (if you have a lot of time and paper!) continue writing them for many, many decimal places. -

Data Representation in Computer Systems

© Jones & Bartlett Learning, LLC © Jones & Bartlett Learning, LLC NOT FOR SALE OR DISTRIBUTION NOT FOR SALE OR DISTRIBUTION There are 10 kinds of people in the world—those who understand binary and those who don’t. © Jones & Bartlett Learning, LLC © Jones—Anonymous & Bartlett Learning, LLC NOT FOR SALE OR DISTRIBUTION NOT FOR SALE OR DISTRIBUTION © Jones & Bartlett Learning, LLC © Jones & Bartlett Learning, LLC NOT FOR SALE OR DISTRIBUTION NOT FOR SALE OR DISTRIBUTION CHAPTER Data Representation © Jones & Bartlett Learning, LLC © Jones & Bartlett Learning, LLC NOT FOR SALE 2OR DISTRIBUTIONin ComputerNOT FOR Systems SALE OR DISTRIBUTION © Jones & Bartlett Learning, LLC © Jones & Bartlett Learning, LLC NOT FOR SALE OR DISTRIBUTION NOT FOR SALE OR DISTRIBUTION 2.1 INTRODUCTION he organization of any computer depends considerably on how it represents T numbers, characters, and control information. The converse is also true: Stan- © Jones & Bartlettdards Learning, and conventions LLC established over the years© Jones have determined& Bartlett certain Learning, aspects LLC of computer organization. This chapter describes the various ways in which com- NOT FOR SALE putersOR DISTRIBUTION can store and manipulate numbers andNOT characters. FOR SALE The ideas OR presented DISTRIBUTION in the following sections form the basis for understanding the organization and func- tion of all types of digital systems. The most basic unit of information in a digital computer is called a bit, which is a contraction of binary digit. In the concrete sense, a bit is nothing more than © Jones & Bartlett Learning, aLLC state of “on” or “off ” (or “high”© Jones and “low”)& Bartlett within Learning, a computer LLCcircuit. In 1964, NOT FOR SALE OR DISTRIBUTIONthe designers of the IBM System/360NOT FOR mainframe SALE OR computer DISTRIBUTION established a conven- tion of using groups of 8 bits as the basic unit of addressable computer storage. -

142857, and More Numbers Like It

142857, and more numbers like it John Kerl January 4, 2012 Abstract These are brief jottings to myself on vacation-spare-time observations about trans- posable numbers. Namely, 1/7 in base 10 is 0.142857, 2/7 is 0.285714, 3/7 is 0.428571, and so on. That’s neat — can we find more such? What happens when we use denom- inators other than 7, or bases other than 10? The results presented here are generally ancient and not essentially original in their particulars. The current exposition takes a particular narrative and data-driven approach; also, elementary group-theoretic proofs preferred when possible. As much I’d like to keep the presentation here as elementary as possible, it makes the presentation far shorter to assume some first-few-weeks-of-the-semester group theory Since my purpose here is to quickly jot down some observations, I won’t develop those concepts in this note. This saves many pages, at the cost of some accessibility, and with accompanying unevenness of tone. Contents 1 The number 142857, and some notation 3 2 Questions 4 2.1 Are certain constructions possible? . .. 4 2.2 Whatperiodscanexist? ............................. 5 2.3 Whencanfullperiodsexist?........................... 5 2.4 Howdodigitsetscorrespondtonumerators? . .... 5 2.5 What’s the relationship between add order and shift order? . ...... 5 2.6 Why are half-period shifts special? . 6 1 3 Findings 6 3.1 Relationship between expansions and integers . ... 6 3.2 Periodisindependentofnumerator . 6 3.3 Are certain constructions possible? . .. 7 3.4 Whatperiodscanexist? ............................. 7 3.5 Whencanfullperiodsexist?........................... 8 3.6 Howdodigitsetscorrespondtonumerators? . .... 8 3.7 What’s the relationship between add order and shift order? . -

Renormalization Group Calculation of Dynamic Exponent in the Models E and F with Hydrodynamic Fluctuations M

Vol. 131 (2017) ACTA PHYSICA POLONICA A No. 4 Proceedings of the 16th Czech and Slovak Conference on Magnetism, Košice, Slovakia, June 13–17, 2016 Renormalization Group Calculation of Dynamic Exponent in the Models E and F with Hydrodynamic Fluctuations M. Dančoa;b;∗, M. Hnatiča;c;d, M.V. Komarovae, T. Lučivjanskýc;d and M.Yu. Nalimove aInstitute of Experimental Physics SAS, Watsonova 47, 040 01 Košice, Slovakia bBLTP, Joint Institute for Nuclear Research, Dubna, Russia cInstitute of Physics, Faculty of Sciences, P.J. Safarik University, Park Angelinum 9, 041 54 Košice, Slovakia d‘Peoples’ Friendship University of Russia (RUDN University) 6 Miklukho-Maklaya St, Moscow, 117198, Russian Federation eDepartment of Theoretical Physics, St. Petersburg University, Ulyanovskaya 1, St. Petersburg, Petrodvorets, 198504 Russia The renormalization group method is applied in order to analyze models E and F of critical dynamics in the presence of velocity fluctuations generated by the stochastic Navier–Stokes equation. Results are given to the one-loop approximation for the anomalous dimension γλ and fixed-points’ structure. The dynamic exponent z is calculated in the turbulent regime and stability of the fixed points for the standard model E is discussed. DOI: 10.12693/APhysPolA.131.651 PACS/topics: 64.60.ae, 64.60.Ht, 67.25.dg, 47.27.Jv 1. Introduction measurable index α [4]. The index α has been also de- The liquid-vapor critical point, λ transition in three- termined in the framework of the renormalization group dimensional superfluid helium 4He belong to famous ex- (RG) approach using a resummation procedure [5] up to amples of continuous phase transitions.