An Approach to Automatic Music Band Member Detection Based on Supervised Learning

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Mongrel Media Presents

Mongrel Media Presents. Official Selection: Toronto International Film Festival. Official Selection: Sundance Film Festival. (97 mins, USA, 2009) Distribution: 1028 Queen Street West, Toronto, Ontario, Canada, M6J 1H6. Tel: 416-516-9775 Fax: 416-516-0651 E-mail: [email protected] Publicity: Bonne Smith, Star PR. Tel: 416-488-4436 Fax: 416-488-8438 E-mail: [email protected] www.mongrelmedia.com High res stills may be downloaded from http://www.mongrelmedia.com/press.html Short Synopsis: Rarely can a film penetrate the glamorous surface of rock legends. It Might Get Loud tells the personal stories, in their own words, of three generations of electric guitar virtuosos: The Edge (U2), Jimmy Page (Led Zeppelin), and Jack White (The White Stripes). It reveals how each developed his unique sound and style of playing favorite instruments, guitars both found and invented. Concentrating on the artist’s musical rebellion, traveling with him to influential locations, provoking rare discussion as to how and why he writes and plays, this film lets you witness intimate moments and hear new music from each artist. The movie revolves around a day when Jimmy Page, Jack White, and The Edge first met and sat down together to share their stories, teach and play. Long Synopsis: Who hasn't wanted to be a rock star, join a band or play electric guitar? Music resonates, moves and inspires us. Strummed through the fingers of The Edge, Jimmy Page and Jack White, somehow it does more. Such is the premise of It Might Get Loud, a new documentary conceived by producer Thomas Tull.

The Raconteurs

DNA concerti is proud to present, for the first time in Italy, THE RACONTEURS. 9 July 2008, Ferrara @ Piazza Castello, 25 euro + booking fee. The Raconteurs are coming to Italy for the first time! The supergroup, formed by Jack White of the White Stripes, singer-songwriter Brendan Benson, and Jack Lawrence and Patrick Keeler, respectively bassist and drummer of the Greenhornes, climbed charts around the world with its previous album Broken Boy Soldiers and will be in Italy to present its new masterpiece, Consolers Of The Lonely. The new album contains 14 tracks, including the first single Salute Your Solution. It was recorded entirely at Blackbird Studio in Nashville, where all the band members have lived since 2005, and was released everywhere and in all formats on 25 March on Third Man Records/XL Recordings. The album was announced only one week before its release! The aim, the band stated, was "to get the album to fans, radio and journalists at exactly the same moment, so that no one could have priority over anyone else regarding the availability, reception or perception of the record." In short, the goal was to guarantee the most direct possible approach to the music, with no filter and no privileged guide. 14 tracks of pure, direct and exciting rock form the backbone of Consolers Of The Lonely. It is a classic album that never lapses into banality, in which Benson's power-pop, White's hard blues and Lawrence and Keeler's garage rock blend perfectly into a style that is hard to define other than with the word ROCK.

Austin City Limits Showcases Supergroup the Raconteurs & Introduces 2020 GRAMMY “Best New Artist” Nominee Black Pumas

Austin City Limits Showcases Supergroup The Raconteurs & Introduces 2020 GRAMMY “Best New Artist” Nominee Black Pumas. New Episode Premieres January 11 on PBS. Austin, TX, January 9, 2020: Austin City Limits (ACL) presents a Season 45 highlight, the return of powerhouse rockers The Raconteurs, the supergroup featuring Jack White, Brendan Benson, Patrick Keeler and Jack Lawrence making their first appearance in over a decade in a must-see new installment. The hour also introduces a 2020 GRAMMY® “Best New Artist” nominee, Austin breakout duo Black Pumas. The broadcast premieres Saturday, January 11 at 8pm CT/9pm ET on PBS. The episode will be available to music fans everywhere to stream online the next day beginning Sunday, January 12 at 10am ET at pbs.org/austincitylimits. Providing viewers a front-row seat to the best in live performance for a remarkable 45 years, the program airs weekly on PBS stations nationwide (check local listings for times) and full episodes are made available online for a limited time at pbs.org/austincitylimits immediately following the initial broadcast. Viewers can visit acltv.com for news regarding future tapings, episode schedules and select live stream updates. The show's official hashtag is #acltv. The series recently announced the first taping of Season 46, featuring UK country-soul phenom Yola on February 4, 2020. The Raconteurs return with a full-tilt romp featuring killer gems from the acclaimed HELP US STRANGER, their third studio LP and first album in more than a decade. Featuring both Jack White and Brendan Benson as lead singers/guitarists AND songwriters, with an ace rhythm section of Jack Lawrence (bass) and Patrick Keeler (drums), The Raconteurs deliver a love letter to classic rock in a performance for the ages.

The Posies and Brendan Benson at the Bottom Lounge: Live Review and Photo Gallery Posted in Audio File Blog by Gavin Paul on Nov 8, 2010 at 3:07pm

The Posies and Brendan Benson at The Bottom Lounge: Live review and photo gallery. Posted in Audio File blog by Gavin Paul on Nov 8, 2010 at 3:07pm. Categories: Audio File blog. Keywords: Dana Loftus, Ken Stringfellow, Music/Clubs Gallery, Posies, The Raconteurs. Photos: Dana Loftus. Early ’90s power-pop nation all but invaded the Bottom Lounge Saturday night despite the fact that co-headliners the Posies were pulling heavily from their seventh LP, Blood/Candy (Rykodisc), in the set list, an album released in this decade. The Posies were cocksure with fists in the air attacking every chord crunch like the sludge of grunge was fixing to swallow their career whole again. Frontman Ken Stringfellow energetically chased the specter of Seattle hype from his opening deadpan cryptic one liner from the Posies debut album, Failure, with his eyes on the back of the wall.

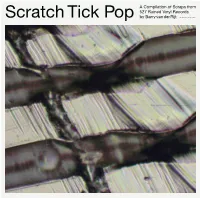

Scratch Tick Pop: A Compilation of Scraps from 527 Ruined Vinyl

Scratch Tick Pop: A Compilation of Scraps from 527 Ruined Vinyl Records, by Barry van der Rijt. The Eriskay Connection. Dedicated to the memory of Vincent Brinkhoff. For Daniël and Mara. To Leonie. Concept by Barry van der Rijt. Sleeve design by Rob van Hoesel. Compiled and produced by Barry van der Rijt from 2016 until 2017. Pressing and production by Record Industry. I would like to thank everyone who has lent me their ruined vinyl records. Additionally, I would like to thank Peter Dekens, Jos Janssen, Jenny Martens, Mels van Zutphen and Anouk & Rinus from Record Industry. Published by The Eriskay Connection. 2017. www.eriskayconnection.com TEC052 / 978–94–92051–33–2. www.barryvanderrijt.com For the best experience please use headphones. 32. Pop found in the track (You’re My) Soul 65. Tick found in the track Cafe from 99. Scratch found in the track Mother 134. Tick found in the track Super Stupid 167. Pop found in the track Help! from 200. Pop found in the track Saturday Night 231. Pop found in the track Rocket U.S.A. 298. Scratch found in the track Flash from 332. Tick found in the track Ben Jij Ook 364.

AND YOU WILL KNOW US Mistakes & Regrets .1-2-5 What

... AND YOU WILL KNOW US Mistakes & Regrets .1-2-5 What You Don't Know 10 COMMANDMENTS Not True 1989 100 FLOWERS The Long Arm Of The Social Sciences 100 FLOWERS The Long Arm Of The Social Sciences 100 WATT SMILE Miss You 15 MINUTES Last Chance For You 16 HORSEPOWER 16 Horsepower A&M 540 436 2 CD D 1995 16 HORSEPOWER Sackcloth'n'Ashes PREMAX' TAPESLEEVES PTS 0198 MC A 1996 16 HORSEPOWER Low Estate PREMAX' TAPESLEEVES PTS 0198 MC A 1997 16 HORSEPOWER Clogger 16 HORSEPOWER Cinder Alley 4 PROMILLE Alte Schule 45 GRAVE Insurance From God 45 GRAVE School's Out 4-SKINS One Law For Them 64 SPIDERS Bulemic Saturday 198? 64 SPIDERS There Ain't 198? 99 TALES Baby Out Of Jail A GLOBAL THREAT Cut Ups A GUY CALLED GERALD Humanity A GUY CALLED GERALD Humanity (Funkstörung Remix) A SUBTLE PLAGUE First Street Blues 1997 A SUBTLE PLAGUE Might As Well Get Juiced A SUBTLE PLAGUE Hey Cop A-10 Massive At The Blind School AC 001 12" I 1987 A-10 Bad Karma 1988 AARDVARKS You're My Loving Way AARON NEVILLE Tell It Like It Is ABBA Ring Ring/Rock'n Roll Band POLYDOR 2041 422 7" D 1973 ABBA Waterloo (Swedish version)/Watch Out POLYDOR 2040 117 7" A 1974 ABDELLI Adarghal (The Blind In Spirit) A-BONES Music Minus Five NORTON ED-233 12" US 1993 A-BONES You Can't Beat It ABRISS WEST Kapitalismus tötet ABSOLUTE GREY No Man's Land 1986 ABSOLUTE GREY Broken Promise STRANGE WAYS WAY 101 CD D 1995 ABSOLUTE ZEROS Don't Cry ABSTÜRZENDE BRIEFTAUBEN Das Kriegen Wir schon Hin AU-WEIA SYSTEM 001 12" D 1986 ABSTÜRZENDE BRIEFTAUBEN We Break Together BUCKEL 002 12" D 1987 ABSTÜRZENDE

The Compact, Issue 2, 24th of October, 2005

Technological University Dublin, ARROW@TU Dublin. DIT Student Union, Dublin Institute of Technology, 2005. The Compact, Issue 2, 24th of October, 2005. DIT: Students' Union. Follow this and additional works at: https://arrow.tudublin.ie/ditsu Recommended Citation: Dublin Institute of Technology Students' Union, The Compact, Issue 2, October 24, 2005. Dublin, DIT, 2005. This Other is brought to you for free and open access by the Dublin Institute of Technology at ARROW@TU Dublin. It has been accepted for inclusion in DIT Student Union by an authorized administrator of ARROW@TU Dublin. For more information, please contact [email protected], [email protected]. This work is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 4.0 License. News | Reviews | The Compact. Sponsored by Irish Independent. Contents: 4 Canteen Re-Opens; 5 Vice-President Academic & Student Affairs; 6 Mature Students; 7 Students Kicked Out; 8 Cocaine Story; 10 City Channel; 12 Fashion; 14 CD / DVD Reviews; 16 Musicals; 18 Brendan Benson; 19 Video Analysis; 20 A Tribute to Shane Brennan; 21 Sports Forecast & Cupla Focal; 22 Columnists. Editorial. Welcome to the Halloween edition of The Compact and I hope you all like what we have on display for you this time around. It has been a busy couple of weeks which has included the infamous FRAG trip to Leitrim, the Freshers' Fair in Aungier St., and the 70's Ball on Harcourt St. just last week. Check out The Compact's Private Eye and see all the reviews and photos of what really happened at those events.

The Raconteurs

KONZERTBÜRO PRESS RELEASE OF 19.03.2019. Konzertbüro Schoneberg GmbH, Amtsgericht Münster · HRB 3232, VAT ID number: DE126111533, Managing Director: Till Schoneberg, www.schoneberg.de, schonebergkonzerte. The Raconteurs: back in Germany after ten years for two exclusive concerts. At last, after more than ten years, Jack White and Brendan Benson (both vocals, keyboard and guitar) as well as Jack Lawrence (bass) and Patrick Keeler (drums) are returning: The Raconteurs are celebrating the 10th anniversary of their Grammy-nominated album "Consolers Of The Lonely" (March 2008 | Warner Bros. Records). Alongside a reissue, they are also bringing new music: "Now That You’re Gone", featuring Brendan Benson on lead vocals, and "Sunday Driver", sung by Jack White, are the two brand-new singles (December 2018 | Third Man Records) from the rock supergroup, offering a first taste of their new studio album. The accompanying videos impress and deliver a hefty dose of rock 'n' roll. The new album, their third, is due out this spring, but it is already clear that it will be superb again: Rolling Stone calls the new songs "the finest guitar craftsmanship" and describes them as follows: "The first track builds with electrifying guitars into a vital rock song, while the second strikes a more stately and reflective mood." We are full of eager anticipation for the concerts in May! Rolling Stone presents: The Raconteurs Tour 2019. 28.05.19 Cologne, E-Werk; 30.05.19 Berlin, Verti Music Hall. Tickets for the tour are available online via www.schoneberg.de and at selected CTS/Eventim advance-sale outlets. Press material: information, images and press texts at www.schoneberg.de. Press contact: Sophia Weber, [email protected] | +49 (0) 89-1250956-12. Berlin: Waldstraße 14 · Frankfurt: Berger Straße 296 · Köln: Gottesweg 165 · München: Zenettistraße 34 · Münster: Rudolf-von-Langen-Str.

Blackberry Smoke Publisher 04 Oonagh | Left Boy Aktiv Musik Marketing GmbH & Co

FREE | FEBRUARY 2014, Issue 5. BLACKBERRY SMOKE, OONAGH, ANDREAS KÜMMERT, LEFT BOY, ELIZA DOOLITTLE, ENNIO MORRICONE, JENNIFER ROSTOCK, MALIA & BORIS BLANK, NILS LANDGREN, MICHAEL WOLLNY. CONTENTS: 03 BLACKBERRY SMOKE; 04 OONAGH | LEFT BOY; 05 ANDREAS KÜMMERT | ELIZA DOOLITTLE; 06 JENNIFER ROSTOCK | ENNIO MORRICONE; 07 MALIA & BORIS BLANK; 08 NILS LANDGREN | MICHAEL WOLLNY; 09 TORUN ERIKSEN | VARIOUS/OST | SOLANDER | YOU ME AT SIX; 10 MARTERIA | JUDITH HOLOFERNES | FLO MEGA; 11 MARIEMARIE | MIKE & THE MECHANICS | SCHANDMAUL; 14 ANNETT LOUISAN | PETER MAFFAY | JON FLEMMING OLSEN; 15 MAXIMO PARK | BROKEN BELLS | CAGE THE ELEPHANT; 16 NEW RELEASES; 22 JAZZ; RECORD SHOPS; 24 ON TOUR. THEODORE, PAUL & GABRIEL: The three young Parisian women are unexpectedly reviving American folk rock; their lightheartedness and innate savoir-faire clearly predestine them for it. EDITION: IMPRINT. PUBLISHER: AKTIV MUSIK MARKETING GMBH & CO. KG, Steintorweg 8, 20099 Hamburg, VAT ID: DE 187995651. PERSONALLY LIABLE PARTNER: AKTIV MUSIK MARKETING VERWALTUNGS GMBH & CO. KG, Steintorweg 8, 20099 Hamburg. REGISTERED OFFICE: Hamburg, HR B 100122. MANAGING DIRECTOR: Jörg Hottas. TEL: 040/468 99 28-0, FAX: 040/468 99 28-15, E-MAIL: [email protected]. EDITORIAL AND ADVERTISING MANAGEMENT: Daniel Ahrweiler (responsible for content). CONTRIBUTORS TO THIS ISSUE: Helmut Blecher (hb), Jessica Franke (jf), Dagmar Leischow (dl), Nadine Lischick (nli), Ilka Mamero, Patrick Niemeier (nie), Henning Richter (hr). PHOTOGRAPHERS FOR THIS ISSUE: Earache (1 Blackberry Smoke), David McClister (3 Blackberry Smoke), Universal Music (4 Oonagh, 15 Cage The Elephant), Warner Music (4 Left Boy, 5 Eliza Doolittle), Shane McCauley (6 Jennifer Rostock), Mali Lazell (7 Malia + Boris Blank), Steven Haberland (8 Nils Landgren), Jörg Steinmetz (8 Michael Wollny), Paul Ripke (10 Marteria), Four Music (10 Judith Holofernes), Jens Koch (10 Flo Mega), Ben Wolf (11 MarieMarie), Eric Weiss (11 Schandmaul), Marie Isabel Mora (14 Annett Louisan), Andreas Ortner (14 Peter Maffay), Beba Franziska Lindthorst (14 Jon Flemming Olsen), Steve Gullick (15

Medlin Uaf 0006N 10126.Pdf

A familiar & favorite terror. Item Type: Thesis. Authors: Medlin, Zackary. Download date: 02/10/2021 11:12:47. Link to Item: http://hdl.handle.net/11122/4485 A FAMILIAR & FAVORITE TERROR. A THESIS Presented to the Faculty of the University of Alaska Fairbanks in Partial Fulfillment of the Requirements for the Degree of MASTER OF FINE ARTS. By Zackary Medlin, B.A. Fairbanks, Alaska, December 2013. Abstract: The collection A Familiar & Favorite Terror explores love and violence, how the two are entangled and how that entanglement is constitutive of a self. It wants to show how love is a form of violence to the self, demanding a fracture. These poems view love, and not just romantic love, as a breaking of the self, both in its binding and its severing. With love there is always a hole, or a not quite whole. That’s where these poems want to dig (but not dig up) and sift through the ways we fill this void. And while this collection is decidedly personal, tracing its lineage through books such as John Berryman’s Dream Songs and Robert Lowell’s Life Studies, it is not confessional: there is rarely guilt or shame associated with the speaker. Instead, the self in these poems, and the poems themselves, are unapologetically postmodern; if Berryman and Lowell are ancestors to these poems, then their immediate family would be contemporary poets like Bob Hicok, Tony Hoagland, Dean Young, and Matthew Zapruder. These poems build their foundation on the unstable, seismically active terrain of pop-culture and the mutable, multiple self that peoples that land.

Type Your Headline Here

JACK WHITE AND ALICIA KEYS TEAM FOR QUANTUM OF SOLACE THEME SONG. “Another Way To Die” Will Be First Duet In Bond History. Michael G. Wilson and Barbara Broccoli, producers of the highly anticipated 22nd James Bond adventure “Quantum of Solace,” announced today that multi-Grammy Award-winning and platinum selling recording artists Jack White of the rock band The White Stripes, and Alicia Keys, have recorded the theme song for the film, which will be released worldwide this November. Their song, written and produced by Jack White, and titled “Another Way to Die,” will be the first duet in Bond soundtrack history. In addition to writing the song, Jack White is also featured as the drummer on this track. The soundtrack to QUANTUM OF SOLACE will be released by J Records on October 28, 2008. Daniel Craig reprises his role as Ian Fleming’s James Bond 007 in QUANTUM OF SOLACE, the Metro-Goldwyn-Mayer Pictures/Columbia Pictures release of EON Productions’ 22nd adventure in the longest-running film franchise in motion picture history. The film is directed by Marc Forster. The screenplay is by Neal Purvis & Robert Wade as well as Paul Haggis and the film’s score will be composed by David Arnold. Wilson and Broccoli said: "We are delighted and pleased to have two such exciting artists as Jack and Alicia, who were inspired by our film to join together their extraordinary talents in creating a unique sound for QUANTUM OF SOLACE." One of the most enigmatic figures in music, Jack White has built a reputation as something of a modern American renaissance man.

The History of Rock Music - the Nineties

The History of Rock Music: 1995-2001. Drum'n'bass, trip-hop, glitch music. (Copyright © 2009 Piero Scaruffi) Depression (These are excerpts from my book "A History of Rock and Dance Music"). Desperate songwriters, 1996-99. The dejected tone of so many young songwriters seemed out of context in the late 1990s, when the economy was booming, wars were receding and the world was one huge party. They seemed to reflect a lack of confidence in society, in politics and, ultimately, in life itself. First there was the musician who could claim the title of founder of this school in the old days of the new wave. Mike Gira (2) basically continued the atmospheric work of latter-period Swans. His tortured soul engaged in a form of lugubrious and apocalyptic folk, which constituted, at the same time, a form of cathartic and purgatorial ritual. After his solo album Drainland (1995), which was still, de facto, a Swans album, assisted by Jarboe and Bill Rieflin, Gira split the late Swans sound in two: Body Lovers impersonated the ambient/atmospheric element, while Angels Of Light focused on the orchestral pop element. On the one hand, Gira crafted the sinister and baroque layered instrumental music of Body Lovers' Number One Of Three (1998) and the subliminal musique concrete of Body Haters (1998).