Engineering Faster Sorters for Small Sets of Items

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


An Evolutionary Approach for Sorting Algorithms

ORIENTAL JOURNAL OF COMPUTER SCIENCE & TECHNOLOGY, ISSN: 0974-6471, December 2014, Vol. 7, No. (3), Pgs. 369-376. An International Open Free Access, Peer Reviewed Research Journal. Published By: Oriental Scientific Publishing Co., India. www.computerscijournal.org Root to Fruit (2): An Evolutionary Approach for Sorting Algorithms. PRAMOD KADAM AND SACHIN KADAM, BVDU, IMED, Pune, India. (Received: November 10, 2014; Accepted: December 20, 2014) Abstract: This paper continues the earlier thought of evolutionary study of sorting problem and sorting algorithms (Root to Fruit (1): An Evolutionary Study of Sorting Problem) [1] and concludes with the chronological list of early pioneers of sorting problem or algorithms. Later in the study a graphical method has been used to present an evolution of sorting problem and sorting algorithm on the time line. Key words: Evolutionary study of sorting, History of sorting, Early Sorting algorithms, list of inventors for sorting. Introduction: In spite of plentiful literature and research in sorting algorithmic domain there is mess found in documentation as far as credential concern [2]. Perhaps this problem found due to lack of coordination and unavailability of common platform or knowledge base in the same domain. Evolutionary study of sorting algorithm or sorting problem is foundation of futuristic knowledge base for sorting problem domain [1]. [...] name and their contribution may skipped from the study. Therefore readers have all the rights to extend this study with the valid proofs. Ultimately our objective behind this research is very much clear, that to provide strength to the evolutionary study of sorting algorithms and shift towards a good knowledge base to preserve work of our forebear for upcoming generation. Otherwise coming generation could receive hardly information about sorting problems and syllabi may restrict with some

Sorting Algorithms in the C++ Programming Language (Algoritmi za sortiranje u programskom jeziku C++), Final Thesis

UNIVERSITY OF RIJEKA, FACULTY OF HUMANITIES AND SOCIAL SCIENCES IN RIJEKA, DEPARTMENT OF POLYTECHNICS. Sorting Algorithms in the C++ Programming Language. Final thesis. Thesis mentor: doc. dr. sc. Marko Maliković. Student: Alen Jakus. Rijeka, 2016. UNIVERSITY OF RIJEKA, Faculty of Humanities and Social Sciences, Department of Polytechnics, Rijeka, Sveučilišna avenija 4. Committee for Final and Graduate Examinations. Rijeka, 7 April 2016. FINAL THESIS ASSIGNMENT (undergraduate university study programme in polytechnics). Candidate: Alen Jakus. Assignment: Sorting algorithms in the C++ programming language. The solution must cover the following: 1. Give an overview of sorting algorithms. 2. Describe the selected sorting algorithms. 3. Illustrate the operation of the selected sorting algorithms with diagrams. 4. Describe the basic features of the C++ programming language. 5. Describe in detail the data types, derived data forms, statements and other elements of C++ used in the solutions to the selected problems. 6. Describe the solutions obtained from the written programs. 7. Include the complete code in C++. The final thesis must comply with the Regulations on the Graduate Thesis and the Instructions for Preparing the Final Thesis of the university undergraduate programme. Assignment handed to the candidate: 7 April 2016. Thesis submission deadline: ____________________ Thesis submission date: ____________________ Assignment set by: Doc. dr. sc. Marko Maliković. Thesis title: Sorting algorithms in the C++ programming language. Brief description of the assignment: Give an overview of sorting algorithms. Describe the selected sorting algorithms.

Parallel Sorting Algorithms: Topic Overview

Design of Parallel Algorithms: Parallel Sorting Algorithms. Topic Overview: issues in sorting on parallel computers; sorting networks; bubble sort and its variants; quicksort; bucket and sample sort; other sorting algorithms. Sorting: Overview. Sorting is one of the most commonly used and well-studied kernels. Sorting can be comparison-based or noncomparison-based. The fundamental operation of comparison-based sorting is compare-exchange. The lower bound on any comparison-based sort of n numbers is Θ(n log n). We focus here on comparison-based sorting algorithms. Sorting: Basics. What is a parallel sorted sequence? Where are the input and output lists stored? We assume that the input and output lists are distributed. The sorted list is partitioned with the property that each partitioned list is sorted and each element in processor Pi's list is less than that in Pj's list if i < j. Sorting: Parallel Compare Exchange Operation. A parallel compare-exchange operation: processes Pi and Pj send their elements to each other. Process Pi keeps min{ai, aj}, and Pj keeps max{ai, aj}. Sorting: Basics. What is the parallel counterpart to a sequential comparator? If each processor has one element, the compare-exchange operation stores the smaller element at the processor with the smaller id. This can be done in ts + tw time. If we have more than one element per processor, we call this operation a compare-split. Assume each of two processors has n/p elements. After the compare-split operation, the smaller n/p elements are at processor Pi and the larger n/p elements at Pj, where i < j.
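The compare-split operation described in this excerpt can be sketched sequentially. This is only an illustration under stated assumptions: the function name `compare_split` is hypothetical, the two blocks are assumed to be already sorted and of equal length, and the message exchange between processors Pi and Pj is replaced by ordinary function arguments.

```python
from heapq import merge


def compare_split(low_block, high_block):
    """Return (new_low, new_high) for two sorted blocks of equal length.

    new_low holds the smaller half of all elements (kept by Pi),
    new_high holds the larger half (kept by Pj), and both stay sorted.
    """
    if len(low_block) != len(high_block):
        raise ValueError("blocks must have the same length")
    # Merging two sorted blocks yields one sorted sequence of twice the length.
    merged = list(merge(low_block, high_block))
    half = len(low_block)
    return merged[:half], merged[half:]


# Hypothetical blocks held by Pi and Pj before the exchange.
p_i, p_j = compare_split([1, 6, 8, 11], [2, 7, 9, 10])
print(p_i, p_j)
```

With one element per block this reduces to the plain compare-exchange, where Pi keeps the minimum and Pj keeps the maximum.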

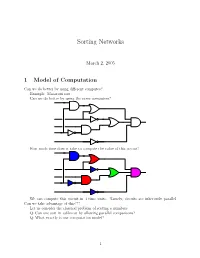

Sorting Networks

Sorting Networks, March 2, 2005. 1 Model of Computation. Can we do better by using different computers? Example: Macaroni sort. Can we do better by using the same computers? How much time does it take to compute the value of this circuit? We can compute this circuit in 4 time units. Namely, circuits are inherently parallel. Can we take advantage of this? Let us consider the classical problem of sorting n numbers. Q: Can one sort in sublinear time by allowing parallel comparisons? Q: What exactly is our computation model? 1.1 Computing with a circuit. We are going to design a circuit, where the inputs are the numbers, and we compare two numbers using a comparator gate: [figure: comparator gate] For our drawings, we will draw such a gate as follows: [figure: comparator drawn between two wires] So, circuits would just be horizontal lines, with vertical segments (i.e., gates) between them. A complete sorting network looks like: [figure: sorting network] The inputs come on the wires on the left, and are output on the wires on the right. The largest number is output on the bottom line. The surprising thing is that one can generate circuits from a sorting algorithm. In fact, consider the following circuit: [figure: circuit] Q: What does this circuit do? A: This is the inner loop of insertion sort. Repeating this inner loop, we get the following sorting network: [figure: insertion sort network] Alternative way of drawing it: [figure: alternative drawing] Q: How much time does it take for this circuit to sort the n numbers? Running time = how many time clocks we have to wait till the result stabilizes. In this case: [figure: network annotated with time steps 1 through 9] In general, we get: Lemma 1.1 Insertion sort requires 2n − 1 time units to sort n numbers.
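The idea of generating a circuit from insertion sort can be sketched as a list of comparator gates. The names `insertion_sort_network` and `run_network` are hypothetical, wires are assumed to be numbered from 0 at the top to n − 1 at the bottom, and the gates are applied one after another here, so the sketch says nothing about the parallel running time discussed in the lemma.

```python
def insertion_sort_network(n):
    """List comparator gates (upper wire, lower wire) in insertion-sort order."""
    comparators = []
    for last in range(1, n):
        # Inner loop of insertion sort: the value on wire `last`
        # moves up past larger values, one adjacent gate at a time.
        for lower in range(last, 0, -1):
            comparators.append((lower - 1, lower))
    return comparators


def run_network(comparators, values):
    """Apply each gate in turn; a gate puts the larger value on the lower wire."""
    wires = list(values)
    for upper, lower in comparators:
        if wires[upper] > wires[lower]:
            wires[upper], wires[lower] = wires[lower], wires[upper]
    return wires


network = insertion_sort_network(5)
print(len(network))                           # number of gates for 5 wires
print(run_network(network, [5, 1, 4, 2, 3]))  # largest value ends on the bottom wire
```

The gate list is fixed before any input is seen, which is what distinguishes a sorting network from an ordinary comparison sort.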

Foundations of Differentially Oblivious Algorithms

Foundations of Differentially Oblivious Algorithms. T-H. Hubert Chan, Kai-Min Chung, Bruce Maggs, Elaine Shi. August 5, 2020. Abstract: It is well-known that a program's memory access pattern can leak information about its input. To thwart such leakage, most existing works adopt the technique of oblivious RAM (ORAM) simulation. Such an obliviousness notion has stimulated much debate. Although ORAM techniques have significantly improved over the past few years, the concrete overheads are arguably still undesirable for real-world systems; part of this overhead is in fact inherent due to a well-known logarithmic ORAM lower bound by Goldreich and Ostrovsky. To make matters worse, when the program's runtime or output length depend on secret inputs, it may be necessary to perform worst-case padding to achieve full obliviousness and thus incur possibly super-linear overheads. Inspired by the elegant notion of differential privacy, we initiate the study of a new notion of access pattern privacy, which we call “(ε, δ)-differential obliviousness”. We separate the notion of (ε, δ)-differential obliviousness from classical obliviousness by considering several fundamental algorithmic abstractions including sorting small-length keys, merging two sorted lists, and range query data structures (akin to binary search trees). We show that by adopting differential obliviousness with reasonable choices of ε and δ, not only can one circumvent several impossibilities pertaining to full obliviousness, one can also, in several cases, obtain meaningful privacy with little overhead relative to the non-private baselines (i.e., having privacy “almost for free”). On the other hand, we show that for very demanding choices of ε and δ, the same lower bounds for oblivious algorithms would be preserved for (ε, δ)-differential obliviousness.

Sorting Algorithm

Sorting algorithm. In computer science, a sorting algorithm is an algorithm that puts elements of a list in a certain order. The most-used orders are numerical order and lexicographical order. Efficient sorting is important for optimizing the use of other algorithms (such as search and merge algorithms) that require sorted lists to work correctly; it is also often useful for canonicalizing data and for producing human-readable output. More formally, the output must satisfy two conditions: 1. The output is in nondecreasing order (each element is no smaller than the previous element according to the desired total order); 2. The output is a permutation, or reordering, of the input. Since the dawn of computing, the sorting problem has attracted a great deal of research, perhaps due to the complexity of solving it efficiently despite its simple, familiar statement. For example, bubble sort was analyzed as early as 1956.[1] Although many consider it a solved problem, useful new sorting algorithms are still being invented (for example, library sort was first published in 2004). Sorting algorithms are prevalent in introductory computer science classes, where the abundance of algorithms for the problem provides a gentle introduction to a variety of core algorithm concepts, such as big O notation, divide and conquer algorithms, data structures, randomized algorithms, best, worst and average case analysis, time-space tradeoffs, and lower bounds. Classification: Sorting algorithms used in computer science are often classified by: • Computational complexity (worst, average and best behaviour) of element comparisons in terms of the size of the list (n). For typical sorting algorithms good behavior is O(n log n) and bad behavior is O(n²).
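The two conditions on the output of a sorting algorithm can be checked directly. The sketch below is one possible way to do it; the function name `is_valid_sort` is hypothetical, and the elements are assumed to be hashable and comparable with `<=`.

```python
from collections import Counter


def is_valid_sort(original, result):
    """Check both output conditions for a claimed sort of `original`."""
    # Condition 1: each element is no smaller than the one before it.
    nondecreasing = all(a <= b for a, b in zip(result, result[1:]))
    # Condition 2: same elements with the same multiplicities as the input.
    permutation = Counter(original) == Counter(result)
    return nondecreasing and permutation


print(is_valid_sort([3, 1, 2], [1, 2, 3]))  # ordered and a permutation
print(is_valid_sort([3, 1, 2], [1, 2, 2]))  # ordered, but not a permutation
print(is_valid_sort([3, 1, 2], [1, 3, 2]))  # a permutation, but not ordered
```

Checking the second condition matters in practice: a routine that overwrites elements can produce ordered output that is still wrong.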

Visvesvaraya Technological University a Project Report

VISVESVARAYA TECHNOLOGICAL UNIVERSITY, “Jnana Sangama”, Belagavi – 590 018. A PROJECT REPORT ON “PREDICTIVE SCHEDULING OF SORTING ALGORITHMS”. Submitted in partial fulfillment for the award of the degree of BACHELOR OF ENGINEERING IN COMPUTER SCIENCE AND ENGINEERING by RANJIT KUMAR SHA (1NH13CS092), SANDIP SHAH (1NH13CS101), SAURABH RAI (1NH13CS104), GAURAV KUMAR (1NH13CS718). Under the guidance of Ms. Sridevi (Senior Assistant Professor, Dept. of CSE, NHCE). DEPARTMENT OF COMPUTER SCIENCE AND ENGINEERING, NEW HORIZON COLLEGE OF ENGINEERING (ISO-9001:2000 certified, Accredited by NAAC ‘A’, Permanently affiliated to VTU), Outer Ring Road, Panathur Post, Near Marathalli, Bangalore – 560103. CERTIFICATE: Certified that the project work entitled “PREDICTIVE SCHEDULING OF SORTING ALGORITHMS” carried out by RANJIT KUMAR SHA (1NH13CS092), SANDIP SHAH (1NH13CS101), SAURABH RAI (1NH13CS104) and GAURAV KUMAR (1NH13CS718), bonafide students of NEW HORIZON COLLEGE OF ENGINEERING, in partial fulfillment for the award of Bachelor of Engineering in Computer Science and Engineering of the Visvesvaraya Technological University, Belagavi during the year 2016-2017. It is certified that all corrections/suggestions indicated for Internal Assessment have been incorporated in the report deposited in the department library. The project report has been approved as it satisfies the academic requirements in respect of Project work prescribed for the Degree. Name & Signature of Guide (Ms. Sridevi), Name & Signature of HOD (Dr. Prashanth C.S.R.), Signature of Principal (Dr. Manjunatha). External Viva: Name of Examiner, Signature with date. 1.

I. Sorting Networks Thomas Sauerwald

I. Sorting Networks. Thomas Sauerwald, Easter 2015. Outline: Outline of this Course; Introduction to Sorting Networks; Batcher’s Sorting Network; Counting Networks. Outline of this Course: Closely follow the book and use the same numbering of theorems/lemmas etc. (Tentative) List of Topics: Algorithms (I, II), Complexity Theory, Advanced Algorithms. I. Sorting Networks (Sorting, Counting, Load Balancing) II. Matrix Multiplication (Serial and Parallel) III. Linear Programming (Formulating, Applying and Solving) IV. Approximation Algorithms: Covering Problems V. Approximation Algorithms via Exact Algorithms VI. Approximation Algorithms: Travelling Salesman Problem VII. Approximation Algorithms: Randomisation and Rounding VIII. Approximation Algorithms: MAX-CUT Problem

An 11-Step Sorting Network for 18 Elements

An 11-Step Sorting Network for 18 Elements. Sherenaz W. Al-Haj Baddar, Kenneth E. Batcher, Kent State University, Department of Computer Science, Kent, Ohio 44240. Abstract: Sorting Networks are cost-effective multistage interconnection networks with sorting capabilities. These networks theoretically consume Θ(N log N) comparisons. However, the fastest implementable sorting networks built so far consume Θ(N log² N) comparisons, and generally use the Merge-sorting strategy to sort the input. An 18-element network using the Merge-sorting strategy needs at least 12 steps; here we show a network that sorts 18 elements in only 11 steps. Index Terms: Sorting Networks, Partial Ordering, 0/1 cases. I. INTRODUCTION: Parallel processors are fast and powerful computing systems that have been developed to help undertake computationally challenging problems. Deploying parallelism for solving a given problem implies splitting the problem into subtasks. [...] output set must be a permutation of the input set {I1, I2, …, IN}. Moreover, for every two elements of the output set Oj and Ok, Oj must be less than or equal to Ok whenever j ≤ k. Sorting networks are constructed using stages (steps) of basic cells called Comparator Exchange (CE) modules. A CE is a 2-element sorting circuit. It accepts two inputs via two input lines, compares them and outputs the larger element on its high output line, whereas the smaller is output on its low output line. It is assumed that two comparators with disjoint inputs can operate in parallel. A typical CE is depicted in Figure 1 [2]. Figure 1. A comparator exchange module [2]. An optimal N-element sorting network requires Θ(N log N)
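The notions of comparator exchange modules, steps, and 0/1 cases can be illustrated on a much smaller network than the 18-element one. The sketch below assumes that "0/1 cases" refers to the zero-one principle (a comparator network that sorts every input of zeros and ones sorts every input). It uses a well-known 3-step network for 4 elements, not the network from the paper, and the names `compare_exchange`, `sorts_all_binary_inputs` and `FOUR_WIRE_STEPS` are hypothetical.

```python
from itertools import product


def compare_exchange(wires, low, high):
    """CE module: the smaller value goes to line `low`, the larger to line `high`."""
    if wires[low] > wires[high]:
        wires[low], wires[high] = wires[high], wires[low]


def sorts_all_binary_inputs(steps, n):
    """Return True if the network sorts every 0/1 input of length n."""
    for bits in product((0, 1), repeat=n):
        wires = list(bits)
        for step in steps:
            # Comparators inside one step touch disjoint lines,
            # so they could operate in parallel.
            for low, high in step:
                compare_exchange(wires, low, high)
        if wires != sorted(bits):
            return False
    return True


# A standard 4-element network: 5 comparators arranged in 3 steps.
FOUR_WIRE_STEPS = [[(0, 1), (2, 3)], [(0, 2), (1, 3)], [(1, 2)]]

print(sorts_all_binary_inputs(FOUR_WIRE_STEPS, 4))      # full network
print(sorts_all_binary_inputs(FOUR_WIRE_STEPS[:2], 4))  # last step removed
```

Checking only the 2^N binary inputs instead of all N! orderings is what makes exhaustive verification feasible for small N.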

How to Sort out Your Life in O(N) Time

How to sort out your life in O(n) time. Karel Čížek, @kaja47, funkcionalne.cz I said, "Kiss me, you're beautiful - These are truly the last days" Godspeed You! Black Emperor, The Dead Flag Blues Everyone, deep in their hearts, is waiting for the end of the world to come. Haruki Murakami, 1Q84 ... Free lunch 1965 – 2022 Cramming More Components onto Integrated Circuits http://www.cs.utexas.edu/~fussell/courses/cs352h/papers/moore.pdf He pays his staff in junk. William S. Burroughs, Naked Lunch Sorting? quicksort and chill HS 1964 QS 1959 MS 1945 RS 1887 quicksort, mergesort, heapsort, radix sort, multi-way merge sort, samplesort, insertion sort, selection sort, library sort, counting sort, bucketsort, bitonic merge sort, Batcher odd-even sort, odd–even transposition sort, radix quick sort, radix merge sort*, burst sort binary search tree, B-tree, R-tree, VP tree, trie, log-structured merge tree, skip list, YOLO tree* vs. hashing Robin Hood hashing https://cs.uwaterloo.ca/research/tr/1986/CS-86-14.pdf xs.sorted.take(k) (take (sort xs) k) qsort(lotOfIntegers) It may be the wrong decision, but fuck it, it's mine. (Mark Z. Danielewski, House of Leaves) I tell you, my man, this is the American Dream in action! We’d be fools not to ride this strange torpedo all the way out to the end. (HST, FALILV) Linear time sorting? I owe the discovery of Uqbar to the conjunction of a mirror and an Encyclopedia. (Jorge Luis Borges, Tlön, Uqbar, Orbis Tertius) Sorting out graph processing https://github.com/frankmcsherry/blog/blob/master/posts/2015-08-15.md Radix Sort Revisited http://www.codercorner.com/RadixSortRevisited.htm Sketchy radix sort https://github.com/kaja47/sketches (thinking|drinking|WTF)* I know they accuse me of arrogance, and perhaps misanthropy, and perhaps of madness.

Evaluation of Sorting Algorithms, Mathematical and Empirical Analysis of Sorting Algorithms

International Journal of Scientific & Engineering Research, Volume 8, Issue 5, May-2017, ISSN 2229-5518. Evaluation of Sorting Algorithms, Mathematical and Empirical Analysis of Sorting Algorithms. Sapram Choudaiah, P Chandu Chowdary, M Kavitha. ABSTRACT: Sorting is an important data structure in many real life applications. A number of sorting algorithms are in existence till date. This paper continues the earlier thought of evolutionary study of sorting problem and sorting algorithms concluded with the chronological list of early pioneers of sorting problem or algorithms. Later in the study a graphical method has been used to present an evolution of sorting problem and sorting algorithm on the time line. An extensive analysis has been done compared with the traditional mathematical methods of Bubble Sort, Selection Sort, Insertion Sort, Merge Sort, Quick Sort. Observations have been obtained on comparing with the existing approaches of All Sorts. An “Empirical Analysis” consists of rigorous complexity analysis by various sorting algorithms, in which comparison and real swapping of all the variables are calculated. All algorithms were tested on random data of various ranges from small to large. It is an attempt to compare the performance of various sorting algorithms, with the aim of comparing their speed when sorting integer inputs. The empirical data obtained by using the program reveals that Quick sort algorithm is fastest and Bubble sort is slowest. Keywords: Bubble Sort, Insertion Sort, Quick Sort, Merge Sort, Selection Sort, Heap Sort, CPU Time. Introduction: In spite of plentiful literature and research in sorting algorithmic domain there is mess found in documentation as far as credential concern [2]. [...] more dimension to student for thinking [4]. Whereas, this thinking become a mark of respect to all our

Gsoc 2018 Project Proposal

GSoC 2018 Project Proposal. Description: Implement the project idea sorting algorithms benchmark and implementation (2018). Applicant Information: Name: Kefan Yang. Country of Residence: Canada. University: Simon Fraser University. Year of Study: Third year. Major: Computing Science. Self Introduction: I am Kefan Yang, a third-year computing science student from Simon Fraser University, Canada. I have rich experience as a full-stack web developer, so I am familiar with different kinds of databases, such as PostgreSQL, MySQL and MongoDB. I have a much better understanding of database systems than just how to use them. In the database course I took at the university, I implemented a simple SQL database. It supports basic SQL statements like select, insert, delete and update, and uses a B+ tree to index the records by default. The size of each node in the B+ tree is set to the disk block size to maximize the performance of disk operations. Also, several kinds of merging algorithms are used to perform a cross table query. More details about this database project can be found here. Additionally, I have a very solid foundation in basic algorithms and data structures. I’ve participated in the division 1 contest of the 2017 ACM-ICPC Pacific Northwest Regionals as a representative of Simon Fraser University, which clearly shows my talents in understanding and applying different kinds of algorithms. I believe the contest experience will be a big help for this project. Benefits to the PostgreSQL Community: The sorting routine is an important part of many modules in PostgreSQL. Currently, PostgreSQL is using the median-of-three quicksort introduced by Bentley and McIlroy in 1993 [1], which is somewhat outdated.
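For readers unfamiliar with the term, median-of-three pivot selection can be sketched as follows. This is a generic textbook-style illustration, not PostgreSQL's code and not the full Bentley and McIlroy routine, which includes further refinements; the function names and the choice of a Lomuto-style partition are assumptions made for brevity.

```python
def median_of_three(items, lo, hi):
    """Return the index (lo, middle, or hi) holding the median of those three values."""
    mid = (lo + hi) // 2
    # Order the three candidate indices by the values they point at.
    candidates = sorted((lo, mid, hi), key=lambda i: items[i])
    return candidates[1]


def quicksort(items, lo=0, hi=None):
    """Sort `items` in place using a median-of-three pivot."""
    if hi is None:
        hi = len(items) - 1
    if lo >= hi:
        return
    # Move the chosen pivot to the end, then partition around it.
    p = median_of_three(items, lo, hi)
    items[p], items[hi] = items[hi], items[p]
    pivot = items[hi]
    store = lo
    for i in range(lo, hi):
        if items[i] < pivot:
            items[i], items[store] = items[store], items[i]
            store += 1
    # Put the pivot into its final position between the two parts.
    items[store], items[hi] = items[hi], items[store]
    quicksort(items, lo, store - 1)
    quicksort(items, store + 1, hi)


data = [9, 3, 7, 1, 8, 2, 5]
quicksort(data)
print(data)
```

Taking the median of the first, middle and last elements avoids the worst case on already sorted input that a fixed first-element or last-element pivot would hit.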