Visvesvaraya Technological University a Project Report

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Coursenotes 4 Non-Adaptive Sorting Batcher's Algorithm

4. Non-Adaptive Sorting: Batcher’s Algorithm. 4.1 Introduction to Batcher’s Algorithm. Sorting has many important applications in daily life and, in particular, in computer science. Within computer science several sorting algorithms exist, such as bubble sort, shell sort, comb sort, heap sort, bucket sort, and merge sort. We have encountered these before in section 2 of the course notes. Many different sorting algorithms exist and are implemented differently in each programming language. These sorting algorithms are relevant because they are used in a wide range of computer programs, from the computer solitaire you play to the online checking account you own. Indeed, without sorting algorithms, many of the services we enjoy today would simply not be available. Batcher’s algorithm is a way to sort individual disorganized elements called “keys” into some desired order using a set number of comparisons. For our purposes the keys will be numbers, and the desired order will be from greatest to least or vice versa (whichever you prefer). Batcher’s algorithm is non-adaptive in that it uses a fixed sequence of comparisons to sort the unsorted keys. In other words, the sequence of comparisons does not change during the sorting. Unlike other methods of sorting where you need to remember comparisons (tournament sort), Batcher’s algorithm is useful because it requires less thinking. Non-adaptive sorting is easy because the outcomes of comparisons made at one point of the process do not affect which comparisons will be made in the future.
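The non-adaptive property can be illustrated with a minimal sketch. The excerpt does not give the comparison schedule, so the code below assumes the commonly published iterative formulation of Batcher's odd-even merge sort for n a power of two. The names `batcher_comparators` and `apply_network` are illustrative and do not come from the course notes.

```python
def batcher_comparators(n):
    """Yield the fixed comparator pairs (i, j), i < j, for n keys.

    Assumes n is a power of two. The pairs depend only on n,
    never on the keys themselves, which is what "non-adaptive" means.
    """
    p = 1
    while p < n:
        k = p
        while k >= 1:
            for j in range(k % p, n - k, 2 * k):
                for i in range(min(k, n - j - k)):
                    # Only compare keys that lie in the same block of size 2p.
                    if (i + j) // (2 * p) == (i + j + k) // (2 * p):
                        yield (i + j, i + j + k)
            k //= 2
        p *= 2


def apply_network(keys, comparators):
    """Run every comparator in order; swap when a pair is out of order."""
    a = list(keys)
    for i, j in comparators:
        if a[i] > a[j]:
            a[i], a[j] = a[j], a[i]
    return a


schedule = list(batcher_comparators(8))  # computed once, before seeing any keys
print(apply_network([5, 8, 2, 4, 3, 6, 1, 7], schedule))
```

The same `schedule` list can be reused for any input of eight keys, since no comparison outcome changes which comparisons follow.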

1 Lower Bound on Runtime of Comparison Sorts

CS 161 Lecture 7 - Sorting in Linear Time. Jessica Su (some parts copied from CLRS). 1 Lower bound on runtime of comparison sorts. So far we've looked at several sorting algorithms: insertion sort, merge sort, heapsort, and quicksort. Merge sort and heapsort run in worst-case O(n log n) time, and quicksort runs in expected O(n log n) time. One wonders if there's something special about O(n log n) that causes no sorting algorithm to surpass it. As a matter of fact, there is! We can prove that any comparison-based sorting algorithm must run in at least Ω(n log n) time. By comparison-based, I mean that the algorithm makes all its decisions based on how the elements of the input array compare to each other, and not on their actual values. One example of a comparison-based sorting algorithm is insertion sort. Recall that insertion sort builds a sorted array by looking at the next element and comparing it with each of the elements in the sorted array in order to determine its proper position. Another example is merge sort. When we merge two arrays, we compare the first elements of each array and place the smaller of the two in the sorted array, and then we make other comparisons to populate the rest of the array. In fact, all of the sorting algorithms we've seen so far are comparison sorts. But today we will cover counting sort, which relies on the values of elements in the array, and does not base all its decisions on comparisons.
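To make the contrast with comparison sorts concrete, here is a minimal sketch of counting sort. It assumes the keys are non-negative integers no larger than a known `max_value`, and it is a simplified keys-only variant rather than the stable record-sorting version; the function name and parameters are illustrative, not taken from the lecture.

```python
def counting_sort(values, max_value):
    """Sort non-negative integers in O(n + max_value) time.

    No two elements are ever compared with each other: each value
    is used directly as an index into the counts array.
    """
    counts = [0] * (max_value + 1)
    for v in values:
        counts[v] += 1            # tally how often each value occurs
    result = []
    for value, count in enumerate(counts):
        result.extend([value] * count)  # emit each value count times
    return result


print(counting_sort([3, 0, 2, 3, 1, 0], max_value=3))
```

Because the running time depends on `max_value` as well as on the input length, the approach only pays off when the range of values is not much larger than the number of elements.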

Sorting Review (CSE 680, Prof. Roger Crawfis)

Introduction to Algorithms: Quicksort. CSE 680, Prof. Roger Crawfis. Sorting Review. Insertion Sort: T(n) = Θ(n²), in-place. Merge Sort: T(n) = Θ(n lg(n)), not in-place. Selection Sort (from homework): T(n) = Θ(n²), in-place. Heap Sort: T(n) = Θ(n lg(n)), in-place. Seems pretty good. Can we do better? Comparison Sorting: Assumptions. 1. No knowledge of the keys or numbers we are sorting on. 2. Each key supports a comparison interface or operator. 3. Sorting entire records, as opposed to numbers, is an implementation detail. 4. Each key is unique (just for convenience). Comparison Sorting. Given a set of n values, there can be n! permutations of these values. So if we look at the behavior of the sorting algorithm over all possible n! inputs we can determine the worst-case complexity of the algorithm. Decision Tree Model. A decision tree is a full binary tree. A full binary tree (sometimes proper binary tree or 2-tree) is a tree in which every node other than the leaves has two children. Each internal node represents a comparison. Ignore control, movement, and all other operations; just see the comparisons. Each leaf represents one possible result (a permutation of the elements in sorted order). [Figure: decision tree for three elements, with root comparison 1:2, internal comparisons 2:3 and 1:3, branches labeled ≤ and >, and leaves <1,2,3>, <1,3,2>, <3,1,2>, <2,1,3>, <2,3,1>, <3,2,1>]
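The counting argument behind the decision tree model can be checked numerically. A binary tree of height h has at most 2^h leaves, and the tree needs at least n! leaves, so the worst-case number of comparisons is at least ⌈log2(n!)⌉. The sketch below computes that bound; the function name is illustrative and not part of the slides.

```python
from math import factorial


def min_worst_case_comparisons(n):
    """Return ceil(log2(n!)), the decision-tree lower bound for n keys.

    For a positive integer x, (x - 1).bit_length() equals ceil(log2(x)),
    which avoids floating-point rounding for large factorials.
    """
    return (factorial(n) - 1).bit_length()


for n in (3, 4, 5, 10):
    print(n, min_worst_case_comparisons(n))
```

For n = 3 the bound is 3, which matches the height of the six-leaf decision tree for three elements.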

An Evolutionary Approach for Sorting Algorithms

Oriental Journal of Computer Science & Technology. ISSN: 0974-6471. December 2014, Vol. 7, No. (3): Pgs. 369-376. An International Open Free Access, Peer Reviewed Research Journal. Published by: Oriental Scientific Publishing Co., India. www.computerscijournal.org. Root to Fruit (2): An Evolutionary Approach for Sorting Algorithms. Pramod Kadam and Sachin Kadam, BVDU, IMED, Pune, India. (Received: November 10, 2014; Accepted: December 20, 2014) Abstract: This paper continues the earlier thought of evolutionary study of the sorting problem and sorting algorithms (Root to Fruit (1): An Evolutionary Study of Sorting Problem) [1] and concludes with a chronological list of the early pioneers of the sorting problem and sorting algorithms. Later in the study, a graphical method is used to present the evolution of the sorting problem and sorting algorithms on a timeline. Key words: Evolutionary study of sorting, History of sorting, Early sorting algorithms, List of inventors for sorting. Introduction: In spite of plentiful literature and research in the sorting algorithm domain, the documentation is messy as far as credit is concerned [2]. Perhaps this problem arises from a lack of coordination and the unavailability of a common platform or knowledge base in the domain. Evolutionary study of sorting algorithms or the sorting problem is the foundation of a future knowledge base for the sorting problem domain [1]. [...] names and their contributions may have been skipped from the study. Therefore readers have every right to extend this study with valid proofs. Ultimately, our objective behind this research is clear: to strengthen the evolutionary study of sorting algorithms and to move towards a good knowledge base that preserves the work of our forebears for upcoming generations. Otherwise coming generations could receive hardly any information about sorting problems, and syllabi may be restricted to some

Improving the Performance of Bubble Sort Using a Modified Diminishing Increment Sorting

Scientific Research and Essay Vol. 4 (8), pp. 740-744, August, 2009. Available online at http://www.academicjournals.org/SRE ISSN 1992-2248 © 2009 Academic Journals. Full Length Research Paper. Improving the performance of bubble sort using a modified diminishing increment sorting. Oyelami Olufemi Moses, Department of Computer and Information Sciences, Covenant University, P. M. B. 1023, Ota, Ogun State, Nigeria. E-mail: [email protected] or [email protected]. Tel.: +234-8055344658. Accepted 17 February, 2009. Sorting involves rearranging information into either ascending or descending order. There are many sorting algorithms, among which is Bubble Sort. Bubble Sort is not known to be a very good sorting algorithm because it is beset with redundant comparisons. However, efforts have been made to improve the performance of the algorithm. With Bidirectional Bubble Sort, the average number of comparisons is slightly reduced, and Batcher’s Sort, which is similar to Shellsort, performs significantly better than Bidirectional Bubble Sort by carrying out comparisons in a novel way so that no propagation of exchanges is necessary. Bitonic Sort was also presented by Batcher, and the strong point of this sorting procedure is that it is very suitable for a hard-wired implementation using a sorting network. This paper presents a meta algorithm called Oyelami’s Sort that combines the technique of Bidirectional Bubble Sort with a modified diminishing increment sorting. The results from the implementation of the algorithm, compared with Batcher’s Odd-Even Sort and Batcher’s Bitonic Sort, showed that the algorithm performed better than the two in the worst case scenario. The implication is that the algorithm is faster.

Vertex Ordering Problems in Directed Graph Streams∗

Vertex Ordering Problems in Directed Graph Streams. Amit Chakrabarti, Prantar Ghosh, Andrew McGregor, Sofya Vorotnikova. Abstract: We consider directed graph algorithms in a streaming setting, focusing on problems concerning orderings of the vertices. This includes such fundamental problems as topological sorting and acyclicity testing. We also study the related problems of finding a minimum feedback arc set (edges whose removal yields an acyclic graph), and finding a sink vertex. We are interested in both adversarially-ordered and randomly-ordered streams. For arbitrary input graphs with edges ordered adversarially, we show that most of these problems have high space complexity, precluding sublinear-space solutions. Some lower bounds also apply when the stream is randomly ordered: e.g., in our most technical result we show that testing acyclicity in the p-pass random-order model requires roughly n^(1+1/p) space. [...] constant-pass algorithms for directed reachability [12]. This is rather unfortunate given that many of the massive graphs often mentioned in the context of motivating work on graph streaming are directed, e.g., hyperlinks, citations, and Twitter "follows" all correspond to directed edges. In this paper we consider the complexity of a variety of fundamental problems related to vertex ordering in directed graphs. For example, one basic problem that motivated much of this work is as follows: given a stream consisting of edges of an acyclic graph in an arbitrary order, how much memory is required to return a topological ordering of the graph? In the offline setting, this can be computed in O(m + n) time using

Data Structures & Algorithms

DATA STRUCTURES & ALGORITHMS Tutorial 6 Questions SORTING ALGORITHMS Required Questions Question 1. Many operations can be performed faster on sorted than on unsorted data. For which of the following operations is this the case? a. checking whether one word is an anagram of another word, e.g., plum and lump b. finding the minimum value c. computing an average of values d. finding the middle value (the median) e. finding the value that appears most frequently in the data Question 2. In which case is each of the following sorting algorithms fastest/slowest, and what is the complexity in that case? Explain. a. insertion sort b. selection sort c. bubble sort d. quick sort Question 3. Consider the sequence of integers S = {5, 8, 2, 4, 3, 6, 1, 7}. For each of the following sorting algorithms, indicate the sequence S after executing each step of the algorithm as it sorts this sequence: a. insertion sort b. selection sort c. heap sort d. bubble sort e. merge sort Question 4. Consider the sequence of integers T = {1, 9, 2, 6, 4, 8, 0, 7}. Indicate the sequence T after executing each step of the Cocktail sort algorithm (see Appendix) as it sorts this sequence. Advanced Questions Question 5. A variant of the bubble sorting algorithm is the so-called odd-even transposition sort. Like bubble sort, this algorithm makes a total of n-1 passes through the array. Each pass consists of two phases: The first phase compares array[i] with array[i+1] and swaps them if necessary for all the odd values of i.
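A minimal sketch of odd-even transposition sort follows. The excerpt is cut off before the second phase is described, so the code assumes the usual definition, in which the second phase performs the same compare-and-swap for all even values of i. Indices are 0-based here, and the function name is illustrative.

```python
def odd_even_transposition_sort(values):
    """Sort a list with n - 1 passes of an odd phase and an even phase."""
    a = list(values)
    n = len(a)
    for _ in range(n - 1):
        # Phase 1 uses odd i (1, 3, 5, ...); phase 2 (assumed) uses even i.
        for start in (1, 0):
            for i in range(start, n - 1, 2):
                if a[i] > a[i + 1]:
                    a[i], a[i + 1] = a[i + 1], a[i]
    return a


# The sequence T from Question 4, used here only as sample input.
print(odd_even_transposition_sort([1, 9, 2, 6, 4, 8, 0, 7]))
```

Within a phase the compared pairs do not overlap, which is why this variant is often used as an example of a sort whose phases could run in parallel.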

Parallel Sorting Algorithms: Topic Overview

Design of Parallel Algorithms: Parallel Sorting Algorithms. Topic Overview: Issues in Sorting on Parallel Computers; Sorting Networks; Bubble Sort and its Variants; Quicksort; Bucket and Sample Sort; Other Sorting Algorithms. Sorting: Overview. Sorting is one of the most commonly used and well-studied kernels. Sorting can be comparison-based or noncomparison-based. The fundamental operation of comparison-based sorting is compare-exchange. The lower bound on any comparison-based sort of n numbers is Ω(n log n). We focus here on comparison-based sorting algorithms. Sorting: Basics. What is a parallel sorted sequence? Where are the input and output lists stored? We assume that the input and output lists are distributed. The sorted list is partitioned with the property that each partitioned list is sorted and each element in processor Pi's list is less than that in Pj's list if i < j. Sorting: Parallel Compare Exchange Operation. A parallel compare-exchange operation: processes Pi and Pj send their elements to each other. Process Pi keeps min{ai, aj}, and Pj keeps max{ai, aj}. Sorting: Basics. What is the parallel counterpart to a sequential comparator? If each processor has one element, the compare-exchange operation stores the smaller element at the processor with the smaller id. This can be done in ts + tw time. If we have more than one element per processor, we call this operation a compare-split. Assume each of the two processors has n/p elements. After the compare-split operation, the smaller n/p elements are at processor Pi and the larger n/p elements at Pj, where i < j.
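The compare-split step can be sketched sequentially. The code below assumes each processor's block is already sorted and models the exchange as a simple merge of two lists; real message passing between Pi and Pj is not simulated, and the function name is illustrative.

```python
from heapq import merge


def compare_split(block_i, block_j):
    """Return (smaller half for Pi, larger half for Pj), with i < j.

    Both inputs are assumed to be sorted. The merged sequence is cut so
    that Pi keeps as many elements as it started with.
    """
    merged = list(merge(block_i, block_j))  # linear-time merge of sorted lists
    keep = len(block_i)
    return merged[:keep], merged[keep:]


low, high = compare_split([1, 4, 7], [2, 3, 9])
print(low, high)
```

With one element per block this reduces to the compare-exchange operation, where Pi keeps the minimum and Pj keeps the maximum.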

Algorithms (Decrease-And-Conquer)

Algorithms (Decrease-and-Conquer). Pramod Ganapathi, Department of Computer Science, State University of New York at Stony Brook. August 22, 2021. Contents. Concepts: 1. Decrease by constant (topological sorting). 2. Decrease by constant factor (lighter ball, Josephus problem). 3. Variable size decrease (selection problem). Problems: Stooge sort. Contributors: Ayush Sharma. Decrease-and-conquer: Problem(n) is decreased (Step 1) to Subproblem(n′), which is conquered (Step 2) to give a Subsolution, which is combined (Step 3) into the Solution. Types of decrease-and-conquer: Decrease by constant: n′ = n − c for some constant c. Decrease by constant factor: n′ = n/c for some constant c. Variable size decrease: n′ = n − c for some variable c. Decrease by constant: the size of the instance is reduced by the same constant in each iteration of the algorithm. Decrease by 1 is common. Examples: array sum, array search, find maximum/minimum element, integer product, exponentiation, topological sorting. Decrease by constant factor: the size of the instance is reduced by the same constant factor in each iteration of the algorithm. Decrease by factor 2 is common. Examples: binary search, search/insert/delete in a balanced search tree, fake coin problem, Josephus problem. Variable size decrease: the size of the instance is reduced by a variable amount in each iteration of the algorithm. Examples: selection problem, quicksort, search/insert/delete in a binary search tree, interpolation search. Decrease by Constant: Topological sorting. Problem: a topological sorting of the vertices of a directed acyclic graph is an ordering of the vertices v1, v2, ..., vn such that if there is an edge directed towards vertex vj from vertex vi, then vi comes before vj.
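One way to read topological sorting as decrease by one is source removal: repeatedly output a vertex with no incoming edges and delete it from the graph. The sketch below uses this approach, commonly known as Kahn's algorithm; the slides may present a different method, and the vertex numbering 0..n-1 and the function name are assumptions made for illustration.

```python
from collections import deque


def topological_order(num_vertices, edges):
    """Return a topological order of vertices 0..num_vertices-1.

    edges is a list of (u, v) pairs meaning u must come before v.
    Raises ValueError if the graph contains a cycle.
    """
    successors = [[] for _ in range(num_vertices)]
    in_degree = [0] * num_vertices
    for u, v in edges:
        successors[u].append(v)
        in_degree[v] += 1

    ready = deque(v for v in range(num_vertices) if in_degree[v] == 0)
    order = []
    while ready:
        u = ready.popleft()          # remove one source: the instance shrinks by 1
        order.append(u)
        for v in successors[u]:
            in_degree[v] -= 1
            if in_degree[v] == 0:
                ready.append(v)

    if len(order) != num_vertices:
        raise ValueError("graph has a cycle; no topological order exists")
    return order


print(topological_order(4, [(0, 1), (0, 2), (1, 3), (2, 3)]))
```

Each vertex and each edge is handled once, so the running time is linear in the number of vertices plus edges.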

Topological Sort (An Application of DFS)

Topological Sort (an application of DFS) CSC263 Tutorial 9 Topological sort • We have a set of tasks and a set of dependencies (precedence constraints) of the form “task A must be done before task B” • Topological sort: An ordering of the tasks that conforms with the given dependencies • Goal: Find a topological sort of the tasks or decide that there is no such ordering Examples • Scheduling: When scheduling task graphs in distributed systems, usually we first need to sort the tasks topologically and then assign them to resources (the most efficient scheduling is an NP-complete problem) • Or during compilation to order modules/libraries Examples • Resolving dependencies: apt-get uses topological sorting to obtain the admissible sequence in which a set of Debian packages can be installed/removed Topological sort more formally • Suppose that in a directed graph G = (V, E) vertices V represent tasks, and each edge (u, v) ∊ E means that task u must be done before task v • What is an ordering of vertices 1, ..., |V| such that for every edge (u, v), u appears before v in the ordering? • Such an ordering is called a topological sort of G • Note: there can be multiple topological sorts of G Topological sort more formally • Is it possible to execute all the tasks in G in an order that respects all the precedence requirements given by the graph edges? • The answer is "yes" if and only if the directed graph G has no cycle! (otherwise we have a deadlock) • Such a G is called a Directed Acyclic Graph, or just a DAG Algorithm for -

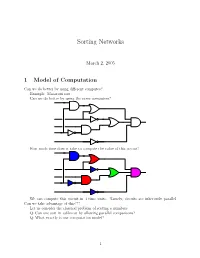

Sorting Networks

Sorting Networks. March 2, 2005. 1 Model of Computation. Can we do better by using different computers? Example: Macaroni sort. Can we do better by using the same computers? How much time does it take to compute the value of this circuit? We can compute this circuit in 4 time units. Namely, circuits are inherently parallel. Can we take advantage of this? Let us consider the classical problem of sorting n numbers. Q: Can one sort in sublinear time by allowing parallel comparisons? Q: What exactly is our computation model? 1.1 Computing with a circuit. We are going to design a circuit, where the inputs are the numbers, and we compare two numbers using a comparator gate. [Figure: comparator gate] For our drawings, we will draw such a gate as follows: [Figure: simplified drawing of a comparator gate] So, circuits would just be horizontal lines, with vertical segments (i.e., gates) between them. A complete sorting network looks like: [Figure: complete sorting network] The inputs come on the wires on the left, and are output on the wires on the right. The largest number is output on the bottom line. The surprising thing is that one can generate circuits from a sorting algorithm. In fact, consider the following circuit: [Figure: circuit] Q: What does this circuit do? A: This is the inner loop of insertion sort. Repeating this inner loop, we get the following sorting network: [Figure: insertion sort network, with an alternative way of drawing it] Q: How much time does it take for this circuit to sort the n numbers? Running time = how many clock ticks we have to wait till the result stabilizes. In this case: [Figure: the network annotated with time steps 1 through 9] In general, we get: Lemma 1.1 Insertion sort requires 2n − 1 time units to sort n numbers.
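The idea of generating a circuit from a sorting algorithm can be sketched in a few lines. The code below lists the comparator gates obtained by unrolling insertion sort's inner loop for every wire and then runs values through them sequentially. Wires are numbered from 0 at the top, the function names are illustrative, and the sketch does not model the parallel timing discussed in the lemma.

```python
def insertion_sort_network(n):
    """Return comparator gates (upper_wire, lower_wire) for n wires."""
    gates = []
    for i in range(1, n):
        # Inner loop of insertion sort, unrolled into a fixed chain of gates.
        for j in range(i, 0, -1):
            gates.append((j - 1, j))
    return gates


def run_network(values, gates):
    """Push values through the gates; each gate sends the larger value down."""
    wires = list(values)
    for upper, lower in gates:
        if wires[upper] > wires[lower]:
            wires[upper], wires[lower] = wires[lower], wires[upper]
    return wires


gates = insertion_sort_network(5)
print(len(gates), run_network([4, 1, 5, 2, 3], gates))
```

The gate list depends only on n, so the same network sorts every input of that size, and the largest value always ends on the bottom wire.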

Investigating the Effect of Implementation Languages and Large Problem Sizes on the Tractability and Efficiency of Sorting Algorithms

International Journal of Engineering Research and Technology. ISSN 0974-3154, Volume 12, Number 2 (2019), pp. 196-203 © International Research Publication House. http://www.irphouse.com Investigating the Effect of Implementation Languages and Large Problem Sizes on the Tractability and Efficiency of Sorting Algorithms. Temitayo Matthew Fagbola and Surendra Colin Thakur, Department of Information Technology, Durban University of Technology, Durban 4000, South Africa. ORCID: 0000-0001-6631-1002 (Temitayo Fagbola). Abstract: Sorting is a data structure operation involving a re-arrangement of an unordered set of elements with witnessed real life applications for load balancing and energy conservation in distributed, grid and cloud computing environments. However, the rearrangement procedure often used by sorting algorithms differs and significantly impacts on their computational efficiencies and tractability for varying problem sizes. Currently, which combination of sorting algorithm and implementation language is highly tractable and efficient for solving large sized-problems remains an open challenge. In this [...] [1],[2]. Theoretically, effective sorting of data allows for an efficient and simple searching process to take place. This is particularly important as most merge and search algorithms strictly depend on the correctness and efficiency of sorting algorithms [4]. It is important to note that the rearrangement procedure of each sorting algorithm differs and directly impacts on the execution time and complexity of such algorithm for varying problem sizes and types emanating from real-world situations [5]. For instance, the operational sorting procedures of Merge Sort, Quick Sort and Heap Sort follow a divide-and-conquer approach characterized by key comparison, recursion and binary heap key reordering requirements, respectively [9].