Whitepaper NVIDIA GeForce GTX 980

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Efficient Supersampling Antialiasing for High-Performance Architectures

Efficient Supersampling Antialiasing for High-Performance Architectures. TR91-023, April 1991. Steven Molnar, The University of North Carolina at Chapel Hill, Department of Computer Science, CB#3175, Sitterson Hall, Chapel Hill, NC 27599-3175. This work was supported by DARPA/ISTO Order No. 6090, NSF Grant No. DCI-8601152 and IBM. UNC is an Equal Opportunity/Affirmative Action Institution.
Abstract: Techniques are presented for increasing the efficiency of supersampling antialiasing in high-performance graphics architectures. The traditional approach is to sample each pixel with multiple, regularly spaced or jittered samples, and to blend the sample values into a final value using a weighted average [FUCH85][DEER88][MAMM89][HAEB90]. This paper describes a new type of antialiasing kernel that is optimized for the constraints of hardware systems and produces higher quality images with fewer sample points than traditional methods. The central idea is to compute a Poisson-disk distribution of sample points for a small region of the screen (typically pixel-sized, or the size of a few pixels). Sample points are then assigned to pixels so that the density of sample points (rather than weights) for each pixel approximates a Gaussian (or other) reconstruction filter as closely as possible. The result is a supersampling kernel that implements importance sampling with Poisson-disk-distributed samples. The method incurs no additional run-time expense over standard weighted-average supersampling methods, supports successive refinement, and can be implemented on any high-performance system that point samples accurately and has sufficient frame-buffer storage for two color buffers.
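The abstract's first step, computing a Poisson-disk distribution of sample points over a small screen region, can be sketched as follows. This is a minimal illustration that assumes simple dart throwing over a unit square; the report may generate its distribution differently, and the function name `poisson_disk_samples` and the parameters `min_dist` and `max_attempts` are hypothetical. The later step of assigning samples to pixels so that their density follows a Gaussian filter is not shown.

```python
import math
import random


def poisson_disk_samples(min_dist, max_attempts=2000, seed=0):
    """Dart throwing (an assumed, illustrative method): accept a random point
    in the unit square only if it lies at least min_dist away from every
    point accepted so far. Stop after max_attempts consecutive rejections."""
    rng = random.Random(seed)
    points = []
    failures = 0
    while failures < max_attempts:
        candidate = (rng.random(), rng.random())
        if all(math.dist(candidate, p) >= min_dist for p in points):
            points.append(candidate)
            failures = 0  # reset the rejection counter after each success
        else:
            failures += 1
    return points


samples = poisson_disk_samples(min_dist=0.2)
print(len(samples), "sample points")
```

Dart throwing is easy to verify but slow for dense patterns; it is used here only because the minimum-distance property is visible directly in the acceptance test.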

NVIDIA OpenGL in 2012 Mark Kilgard

NVIDIA OpenGL in 2012
Mark Kilgard
• Principal System Software Engineer
  – OpenGL driver and API evolution
  – Cg (“C for graphics”) shading language
  – GPU-accelerated path rendering
• OpenGL Utility Toolkit (GLUT) implementer
• Author of OpenGL for the X Window System
• Co-author of Cg Tutorial
Outline
• OpenGL’s importance to NVIDIA
• OpenGL API improvements & new features
  – OpenGL 4.2
  – Direct3D interoperability
  – GPU-accelerated path rendering
  – Kepler Improvements
    • Bindless Textures
• Linux improvements & new features
• Cg 3.1 update
NVIDIA’s OpenGL Leverage: Cg, GeForce, Parallel Nsight, Tegra, Quadro, OptiX
Example of Hybrid Rendering with OptiX: OpenGL (Rasterization), OptiX (Ray tracing)
Parallel Nsight Provides OpenGL Profiling
• Configure Application Trace Settings
• Magnified trace options shows specific OpenGL (and Cg) tracing options
• Trace of mix of OpenGL and CUDA shows glFinish & OpenGL draw calls
Only Cross Platform 3D API
OpenGL 3D Graphics API
• cross-platform
• most functional
• peak performance
• open standard
• inter-operable
• well specified & documented
• 20 years of compatibility
OpenGL Spawns Closely Related Standards
Congratulations: WebGL officially approved, February 2012. “The web is now 3D enabled”
OpenGL 4 – DirectX 11 Superset (Buffer and Event Interop)
• Interop with a complete compute solution
  – OpenGL is for graphics
  – CUDA / OpenCL is for compute
• Shaders can be saved to and loaded from binary

Msalvi Temporal Supersampling.Pdf

We know for certain that the last sample, shaded in the current frame, is valid. We cannot say whether the color of the remaining 3 samples would be the same if computed in the current frame, due to motion, (dis)occlusion, change in lighting, etc. Re-using stale or invalid samples results in quite interesting image artifacts. In this case the fairy model is moving from the left to the right side of the screen, and if we re-use every past sample we will observe a characteristic ghosting artifact. There are many possible strategies to reject samples. Most involve checking whether a plurality of pre- and post-shading attributes from past frames is consistent with information acquired in the current frame. In practice it is really hard to come up with a robust strategy that works in the general case. For these reasons “old” approaches have seen limited success and applicability. More recent temporal supersampling methods are based on the general idea of re-using resolved color (i.e. the color associated with a “small” image region after applying a reconstruction filter), while older methods re-use individual samples. What might appear to be a small difference is actually extremely important and makes it possible to build much more robust temporal techniques. First of all, re-using resolved color makes it possible to throw away most of the past information and keep only the last frame. This helps reduce the cost of temporal methods. Since we no longer have access to individual samples, the pixel color is computed by continuous integration using an exponential moving average (EMA).
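The exponential moving average mentioned at the end of these notes can be sketched as a per-pixel blend between the resolved color kept from the last frame and the color shaded in the current frame. This is a minimal illustration, not the presentation's implementation: the names `ema_update` and `accumulate` and the blend factor `alpha` are assumptions, and reprojection and history rejection are omitted.

```python
def ema_update(history, current, alpha=0.1):
    """Blend the previous resolved color with the current frame's color.
    alpha is an assumed blend factor: higher values favor the current frame."""
    return tuple((1.0 - alpha) * h + alpha * c for h, c in zip(history, current))


def accumulate(frames, alpha=0.1):
    """Fold a sequence of per-frame RGB colors into one resolved color,
    keeping only the running result instead of every past sample."""
    history = frames[0]
    for current in frames[1:]:
        history = ema_update(history, current, alpha)
    return history


# A pixel that was black and turns white: the resolved color moves
# toward white by a fraction alpha each frame.
print(ema_update((0.0, 0.0, 0.0), (1.0, 1.0, 1.0), alpha=0.25))
```

Only one history value per pixel is stored, which matches the point in the notes that resolved-color methods need to keep just the last frame.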

Discontinuity Edge Overdraw Pedro V

Discontinuity Edge Overdraw
Pedro V. Sander (Harvard University, http://cs.harvard.edu/~pvs), Hugues Hoppe (Microsoft Research, http://research.microsoft.com/~hoppe), John Snyder (Microsoft Research, [email protected]), Steven J. Gortler (Harvard University, http://cs.harvard.edu/~sjg)
Abstract: Aliasing is an important problem when rendering triangle meshes. Efficient antialiasing techniques such as mipmapping greatly improve the filtering of textures defined over a mesh. A major component of the remaining aliasing occurs along discontinuity edges such as silhouettes, creases, and material boundaries. Framebuffer supersampling is a simple remedy, but 2×2 supersampling leaves behind significant temporal artifacts, while greater supersampling demands even more fill-rate and memory. We present an alternative that focuses effort on discontinuity edges by overdrawing such edges as antialiased lines. Although the idea is simple, several subtleties arise. Visible silhouette edges must be detected efficiently. Discontinuity edges need consistent orientations. They must be blended as they approach the silhouette to avoid popping. Unfortunately, edge blending results in blurriness. Our technique balances these two competing objectives of temporal smoothness and spatial sharpness. Finally, the best results are obtained when discontinuity edges are sorted by depth. Our approach proves surprisingly effective at reducing temporal artifacts commonly referred to as "crawling jaggies", with little added cost.
Figure 1: Reducing aliasing artifacts using edge overdraw. (a) aliased original (b) overdrawn edges (c) final result
With current graphics hardware, a simple technique for reducing aliasing is to supersample and filter the output image. On current displays (desktop screens of ~1K×1K resolution), 2×2 supersampling reduces but does not eliminate aliasing. Of course, a finer

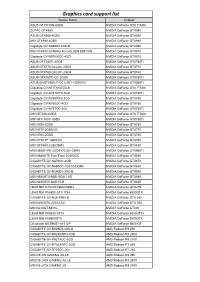

Graphics Card Support List

Graphics card support list

| Device Name | Chipset |
| --- | --- |
| ASUS GTXTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| ZOTAC GTX980 | NVIDIA GeForce GTX980 |
| ASUS GTX980-4GD5 | NVIDIA GeForce GTX980 |
| MSI GTX980-4GD5 | NVIDIA GeForce GTX980 |
| Gigabyte GV-N980D5-4GD-B | NVIDIA GeForce GTX980 |
| MSI GTX970 GAMING 4G GOLDEN EDITION | NVIDIA GeForce GTX970 |
| Gigabyte GV-N970IXOC-4GD | NVIDIA GeForce GTX970 |
| ASUS GTX780TI-3GD5 | NVIDIA GeForce GTX780Ti |
| ASUS GTX770-DC2OC-2GD5 | NVIDIA GeForce GTX770 |
| ASUS GTX760-DC2OC-2GD5 | NVIDIA GeForce GTX760 |
| ASUS GTX750TI-OC-2GD5 | NVIDIA GeForce GTX750Ti |
| ASUS ENGTX560-Ti-DCII/2D1-1GD5/1G | NVIDIA GeForce GTX560Ti |
| Gigabyte GV-NTITAN-6GD-B | NVIDIA GeForce GTX TITAN |
| Gigabyte GV-N78TWF3-3GD | NVIDIA GeForce GTX780Ti |
| Gigabyte GV-N780WF3-3GD | NVIDIA GeForce GTX780 |
| Gigabyte GV-N760OC-4GD | NVIDIA GeForce GTX760 |
| Gigabyte GV-N75TOC-2GI | NVIDIA GeForce GTX750Ti |
| MSI NTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| MSI GTX 780Ti 3GD5 | NVIDIA GeForce GTX780Ti |
| MSI N780-3GD5 | NVIDIA GeForce GTX780 |
| MSI N770-2GD5/OC | NVIDIA GeForce GTX770 |
| MSI N760-2GD5 | NVIDIA GeForce GTX760 |
| MSI N750 TF 1GD5/OC | NVIDIA GeForce GTX750 |
| MSI GTX680-2GB/DDR5 | NVIDIA GeForce GTX680 |
| MSI N660Ti-PE-2GD5-OC/2G-DDR5 | NVIDIA GeForce GTX660Ti |
| MSI N680GTX Twin Frozr 2GD5/OC | NVIDIA GeForce GTX680 |
| GIGABYTE GV-N670OC-2GD | NVIDIA GeForce GTX670 |
| GIGABYTE GV-N650OC-1GI/1G-DDR5 | NVIDIA GeForce GTX650 |
| GIGABYTE GV-N590D5-3GD-B | NVIDIA GeForce GTX590 |
| MSI N580GTX-M2D15D5/1.5G | NVIDIA GeForce GTX580 |
| MSI N465GTX-M2D1G-B | NVIDIA GeForce GTX465 |
| LEADTEK GTX275/896M-DDR3 | NVIDIA GeForce GTX275 |
| LEADTEK PX8800 GTX TDH | NVIDIA GeForce 8800GTX |
| GIGABYTE GV-N26-896H-B | |

NVIDIA Quadro RTX for V-Ray Next

NVIDIA QUADRO RTX V-RAY NEXT GPU
Image courtesy of © Dabarti Studio, rendered with V-Ray GPU
Quadro RTX Accelerates V-Ray Next GPU Rendering
V-Ray Next GPU taps into the power of NVIDIA® Quadro® RTX™ to speed up production rendering with dedicated RT Cores for ray tracing and Tensor Cores for AI-accelerated denoising.¹ With up to 18X faster rendering than CPU-based solutions and enhanced performance with NVIDIA NVLink™, V-Ray Next GPU with RTX support provides incredible performance improvements for your rendering workloads.
Solutions for V-Ray Next GPU
NVIDIA Quadro® provides a wide range of RTX-enabled solutions for desktop, mobile, server-based rendering, and virtual workstations with NVIDIA Quadro Virtual Data Center Workstation (Quadro vDWS) software.² With up to 96 gigabytes (GB) of GPU memory available,³ Quadro RTX provides the power you need for the largest professional graphics and rendering workloads.
“Accelerating artist productivity is always our top priority, so we’re quick to take advantage of the latest ray-tracing hardware breakthroughs. By supporting NVIDIA RTX™ in V-Ray GPU, we’re bringing our customers an exciting new boost in their GPU production rendering speeds.” – Phillip Miller, Vice President, Product Management, Chaos Group
[Figure: Benchmark: V-Ray Next GPU Rendering Performance Increase on Quadro RTX GPUs. Bars: Quadro RTX 6000 x2, Quadro RTX 6000, Quadro RTX 4000. Axis: Relative Performance.]
Desktop performance. Tests run on 1x Xeon Gold 6154 3 GHz (3.7 GHz Turbo), 64 GB DDR4 RAM, Win10 x64, Driver version 441.28. Performance results may vary depending on the scene.
NVIDIA Quadro professional graphics solutions are verified and recommended for the most demanding projects by Chaos Group.

NVIDIA Launches Tegra X1 Mobile Super Chip

NVIDIA Launches Tegra X1 Mobile Super Chip Maxwell GPU Architecture Delivers First Teraflops Mobile Processor, Powering Deep Learning and Computer Vision Applications NVIDIA today unveiled Tegra® X1, its next-generation mobile super chip with over one teraflops of processing power – delivering capabilities that open the door to unprecedented graphics and sophisticated deep learning and computer vision applications. Tegra X1 is built on the same NVIDIA Maxwell™ GPU architecture rolled out only months ago for the world's top-performing gaming graphics card, the GeForce® GTX 980. The 256-core Tegra X1 provides twice the performance of its predecessor, the Tegra K1, which is based on the previous-generation Kepler™ architecture and debuted at last year's Consumer Electronics Show. Tegra processors are built for embedded products, mobile devices, autonomous machines and automotive applications. Tegra X1 will begin appearing in the first half of the year. It will be featured in the newly announced NVIDIA DRIVE™ car computers. DRIVE PX is an auto-pilot computing platform that can process video from up to 12 onboard cameras to run capabilities providing Surround-Vision, for a seamless 360-degree view around the car, and Auto-Valet, for true self-parking. DRIVE CX is a complete cockpit platform designed to power the advanced graphics required across the increasing number of screens used for digital clusters, infotainment, head-up displays, virtual mirrors and rear-seat entertainment. "We see a future of autonomous cars, robots and drones that see and learn, with seeming intelligence that is hard to imagine," said Jen-Hsun Huang, CEO and co-founder, NVIDIA. -

Programming Graphics Hardware Overview of the Tutorial: Afternoon

Tutorial 5: Programming Graphics Hardware
Randy Fernando, Mark Harris, Matthias Wloka, Cyril Zeller
Overview of the Tutorial: Morning
8:30 Introduction to the Hardware Graphics Pipeline (Cyril Zeller)
9:30 Controlling the GPU from the CPU: the 3D API (Cyril Zeller)
10:15 Break
10:45 Programming the GPU: High-level Shading Languages (Randy Fernando)
12:00 Lunch
Overview of the Tutorial: Afternoon
12:00 Lunch
14:00 Optimizing the Graphics Pipeline (Matthias Wloka)
14:45 Advanced Rendering Techniques (Matthias Wloka)
15:45 Break
16:15 General-Purpose Computation Using Graphics Hardware (Mark Harris)
17:30 End
Introduction to the Hardware Graphics Pipeline
Cyril Zeller
Overview
Concepts:
Real-time rendering
Hardware graphics pipeline
Evolution of the PC hardware graphics pipeline:
1995-1998: Texture mapping and z-buffer
1998: Multitexturing
1999-2000: Transform and lighting
2001: Programmable vertex shader
2002-2003: Programmable pixel shader
2004: Shader model 3.0 and 64-bit color support
PC graphics software architecture
Performance numbers
Real-Time Rendering
Graphics hardware enables real-time rendering. Real-time means a display rate of more than 10 images per second.
3D Scene = Collection of 3D primitives (triangles, lines, points)
Image = Array of pixels
Hardware Graphics Pipeline

GPU-Based Deep Learning Inference

Whitepaper
GPU-Based Deep Learning Inference: A Performance and Power Analysis
November 2015
Contents
Abstract
Introduction
Inference versus Training
GPUs Excel at Neural Network Inference
  Inference Optimizations in Caffe and cuDNN 4
  Experimental Setup and Testing Methodology
  Inference on Small and Large GPUs
Conclusion
References
Abstract
Deep learning methods are revolutionizing various areas of machine perception. On a

NVIDIA CUDA Getting Started Guide for Linux

NVIDIA CUDA GETTING STARTED GUIDE FOR LINUX
DU-05347-001_v7.0 | March 2015
Installation and Verification on Linux Systems
TABLE OF CONTENTS
Chapter 1. Introduction
1.1. System Requirements
1.1.1. x86 32-bit Support
1.2. About This Document
Chapter 2. Pre-installation Actions
2.1. Verify You Have a CUDA-Capable GPU
2.2. Verify You Have a Supported Version of Linux
2.3. Verify the System Has gcc Installed
2.4. Choose an Installation Method
2.5. Download the NVIDIA CUDA Toolkit
2.6. Handle Conflicting Installation Methods
Chapter 3. Package Manager Installation
3.1. Overview

CUDA C Programming Guide

CUDA C PROGRAMMING GUIDE
PG-02829-001_v10.0 | October 2018
Design Guide
CHANGES FROM VERSION 9.0
‣ Documented restriction that operator-overloads cannot be __global__ functions in Operator Function.
‣ Removed guidance to break 8-byte shuffles into two 4-byte instructions. 8-byte shuffle variants are provided since CUDA 9.0. See Warp Shuffle Functions.
‣ Passing __restrict__ references to __global__ functions is now supported. Updated comment in __global__ functions and function templates.
‣ Documented CUDA_ENABLE_CRC_CHECK in CUDA Environment Variables.
‣ Warp matrix functions now support matrix products with m=32, n=8, k=16 and m=8, n=32, k=16 in addition to m=n=k=16.
TABLE OF CONTENTS
Chapter 1. Introduction
1.1. From Graphics Processing to General Purpose Parallel Computing
1.2. CUDA®: A General-Purpose Parallel Computing Platform and Programming Model
1.3. A Scalable Programming Model
1.4. Document Structure
Chapter 2. Programming Model
2.1. Kernels
2.2. Thread Hierarchy

NVIDIA Ampere GA102 GPU Architecture Whitepaper

NVIDIA AMPERE GA102 GPU ARCHITECTURE
Second-Generation RTX
Updated with NVIDIA RTX A6000 and NVIDIA A40 Information
V2.0
Table of Contents
Introduction
GA102 Key Features
2x FP32 Processing
Second-Generation RT Core
Third-Generation Tensor Cores
GDDR6X and GDDR6 Memory
Third-Generation NVLink®
PCIe Gen 4
Ampere GPU Architecture In-Depth
GPC, TPC, and SM High-Level Architecture
ROP Optimizations
GA10x SM Architecture
2x FP32 Throughput
Larger and Faster Unified Shared Memory and L1 Data Cache
Performance Per Watt
Second-Generation Ray Tracing Engine in GA10x GPUs
Ampere Architecture RTX Processors in Action
GA10x GPU Hardware Acceleration for Ray-Traced Motion Blur
Third-Generation Tensor Cores in GA10x GPUs
Comparison of Turing vs GA10x GPU Tensor Cores
NVIDIA Ampere Architecture Tensor Cores Support New DL Data Types
Fine-Grained Structured Sparsity
NVIDIA DLSS 8K
GDDR6X Memory
RTX IO
Introducing NVIDIA RTX IO
How NVIDIA RTX IO Works
Display and Video Engine
DisplayPort 1.4a with DSC 1.2a
HDMI 2.1 with DSC 1.2a
Fifth Generation NVDEC - Hardware-Accelerated Video Decoding
AV1 Hardware Decode
Seventh Generation NVENC - Hardware-Accelerated Video Encoding
Conclusion
Appendix A - Additional GeForce GA10x GPU Specifications
GeForce RTX 3090
GeForce RTX 3070
Appendix B - New Memory Error Detection and Replay (EDR) Technology
Appendix C - RTX A6000 GPU Performance
List of Figures
Figure 1.