Read My Lips Product Guide Free Subscriptions Read My Lips Conferences/Events

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The Uses of Animation 1

ANIMATION

Animation is the process of creating the illusion of motion and change by rapidly displaying a sequence of static images that differ minimally from each other. The illusion, as in motion pictures in general, is thought to rely on the phi phenomenon. Animators are artists who specialize in the creation of animation. Animation can be recorded on analogue media, such as a flip book, motion picture film, or video tape, or on digital media, including animated GIF, Flash animation, and digital video. To display animation, a digital camera, computer, or projector is used, along with newer technologies as they are produced. Animation creation methods include the traditional method and those involving stop-motion animation of two- and three-dimensional objects, paper cutouts, puppets, and clay figures. Images are displayed in rapid succession, usually at 24, 25, 30, or 60 frames per second.

THE MOST COMMON USES OF ANIMATION

Cartoons

The most common use of animation, and perhaps its origin, is cartoons. Cartoons appear all the time on television and in the cinema and can be used for entertainment, advertising, presentations, and many more applications that are limited only by the imagination of the designer. The most important factors in making cartoons on a computer are reusability and flexibility. The system that actually performs the animation must allow all the actions to be repeated easily, without much fuss on the part of the animator.
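The frame rates quoted in the excerpt translate directly into how long each still image is shown and how many images a clip needs. The following is a minimal sketch of that arithmetic; the function names `frame_interval_ms` and `frames_needed` are illustrative assumptions and do not come from the source text.

```python
from fractions import Fraction


def frame_interval_ms(fps: int) -> Fraction:
    """Return how long one still image stays on screen, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be a positive integer")
    return Fraction(1000, fps)


def frames_needed(duration_s: int, fps: int) -> int:
    """Return how many still images a clip of duration_s seconds requires."""
    if duration_s < 0:
        raise ValueError("duration_s must not be negative")
    return duration_s * fps


if __name__ == "__main__":
    # The four frame rates named in the excerpt.
    for fps in (24, 25, 30, 60):
        interval = float(frame_interval_ms(fps))
        print(f"{fps} fps: {interval:.2f} ms per frame, "
              f"{frames_needed(10, fps)} frames for a 10 s clip")
```

Exact fractions are used for the interval because 1000/24 and 1000/30 are not whole numbers of milliseconds; a real player would likely round or accumulate timestamps instead.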

Columbia Photographic Images and Photorealistic Computer Graphics Dataset

Tian-Tsong Ng, Shih-Fu Chang, Jessie Hsu, Martin Pepeljugoski*
fttng,sfchang,[email protected], [email protected]
Department of Electrical Engineering, Columbia University
ADVENT Technical Report #205-2004-5, Feb 2005

Abstract

Passive-blind image authentication is a new area of research. A suitable dataset for experimentation and comparison of new techniques is important for the progress of this new research area. In response to the need for a new dataset, the Columbia Photographic Images and Photorealistic Computer Graphics Dataset is made open to the passive-blind image authentication research community. The dataset is composed of four component image sets, i.e., the Photorealistic Computer Graphics Set, the Personal Photographic Image Set, the Google Image Set, and the Recaptured Computer Graphics Set. This dataset, available from http://www.ee.columbia.edu/trustfoto, is intended for those who work on the photographic images versus photorealistic computer graphics classification problem, which is a subproblem of passive-blind image authentication research. In this report, we describe the design and the implementation of the dataset. The report will also serve as a user guide for the dataset.

1 Introduction

Digital watermarking [1] has been an active area of research for the past decade. Various fragile [2, 3, 4, 5] or semi-fragile watermarking algorithms [6, 7, 8, 9] have been proposed for image content authentication and the detection of image tampering. In addition, authentication signature [10, 11, 12, 13] has also been proposed as an alternative image authentication technique.

* This work was done when Martin spent his summer in our research group.

HP and Autodesk Create Stunning Digital Media and Entertainment with HP Workstations

HP and Autodesk
Create stunning digital media and entertainment with HP Workstations.

Does your workstation meet your digital media challenges?

It’s no secret that the media and entertainment industry is constantly evolving, and the push to deliver better content faster is an everyday challenge. To meet those demands, technology matters, a lot. You need innovative, high-performing, reliable hardware and software tools tuned to your applications so your team can create captivating content, meet tight production schedules, and stay on budget. HP offers an expansive portfolio of integrated workstation hardware and software solutions designed to maximize the creative capabilities of Autodesk® software. Together, HP and Autodesk help you create stunning digital media.

The HP Difference

Performance: Advanced compute and visualization power help speed your work, beat deadlines, and meet expectations. At the heart of HP Z Workstations are the new Intel® processors with advanced processor performance technologies and NVIDIA Quadro professional graphics cards with the NVIDIA CUDA parallel processing architecture, delivering real-time previewing and editing of native, high-resolution footage, including multiple layers of 4K video. Intel® Turbo Boost¹ is designed to enhance the base operating frequency of processor cores, providing more processing speed for single and multi-threaded applications. The HP Z Workstation cooling design enhances this performance.

Reliability: HP product testing includes application performance, graphics and comprehensive ISV certification for maximum productivity. All HP Workstations come with a limited 3-year parts, 3-year labor and 3-year onsite service (3/3/3) standard warranty that is extendable up to 5 years.² You can be confident in your HP and Autodesk solution.

Terrain Modeling for Visualization Use (Maaston mallintaminen visualisointikäyttöön)

TERRAIN MODELING FOR VISUALIZATION USE
LAHDEN AMMATTIKORKEAKOULU (Lahti University of Applied Sciences)
Faculty of Technology
Degree Programme in Media Technology
Technical Visualization specialization
Bachelor's thesis
Spring 2012
Ilona Moilanen

Lahti University of Applied Sciences, Degree Programme in Media Technology
MOILANEN, ILONA: Terrain modeling for visualization use
Bachelor's thesis in the Technical Visualization specialization, 29 pages
Spring 2012

ABSTRACT

Terrain models are widely used in the game and film industries and in architectural visualization. The use of modeled 3D terrain has grown as computers have become more powerful. This thesis reviews which terrain modeling programs are available and examines some of them in more detail, including the strengths and weaknesses of the selected programs. The programs examined in detail are Terragen and 3ds Max. For 3ds Max, the thesis covers creating terrain with a height map and the Displace modifier, as well as importing terrain from Google Earth into Autodesk products such as 3ds Max with the help of Google Sketchup. Finally, the strengths and weaknesses of the programs are reviewed. In the case study, a terrain is modeled in Terragen and in 3ds Max using a height map, and the two are compared to see which produces the better model. The terrain was modeled with the selected programs, and the available terrain modeling programs were reviewed. The conclusion was that, to achieve a photorealistic result, Terragen

Ánoq of the Sun Detailed CV as a Graphics Artist

Ánoq of the Sun
Detailed CV as a Graphics Artist
Ánoq of the Sun, Hardcore Processing*
January 31, 2010

Online Link for this Detailed CV

This document is available online in 2 file formats:
• http://www.anoq.net/music/cv/anoqcvgraphicsartist.pdf
• http://www.anoq.net/music/cv/anoqcvgraphicsartist.ps

All My CVs and an Overview

All my CVs (as a computer scientist, musician and graphics artist) and an overview can be found at:
• http://www.hardcoreprocessing.com/home/anoq/cv/anoqcv.html

Contents
Overview Employment, Education and Skills
Project List

* © 2010 Ánoq of the Sun

Graphics Related Employment and Education

| Company | My Role | Dates | Duration (1 day = 7.5 hrs) |
|---|---|---|---|
| Hardcore Processing (I founded this company December 1998), www.hardcoreprocessing.com | Graphics Artist, Pre-print, Marketing | 1998-now | (see project list) |
| Ánoq Music (I founded this record label December 2007), www.anoq.net/music/label | Graphics Artist, Pre-print, Marketing | 2007-now | (see project list) |
| Casper Thorsøe Video Production, www.ctvp.com | 3D Graphics Artist (Partly System Administrator) | 1997-1998 | 1 year |
| Visionik, www.visionik.dk | Worked Partly as a Graphics Artist | 1997 | 5 months (not full-time graphics!) |

List of Graphics Related Skills (Updated on 2010-01-31)
(Years Are Not Full-time Durations, But Years with Active Use)

Key for "Level": 1: Expert, 2: Lots of Routine, 3: Routine, 4: Good Knowledge, 5: Some Knowledge

Graphics Software and Equipment:

| Skill Name / Group | Doing What | Level (1-5) | Latest Use | Years with Active Use |
|---|---|---|---|---|
| Alias\|Wavefront PowerAnimator | Modelling, Animation | 2 | 1998 | 1 |
| Alias\|Wavefront Maya | Modelling, Animation | 2 | 1998 | 1 |
| LightWave 3D | Modelling, Animation | 2 | 1997 | 4 |
| SoftImage 3D | Modelling, Animation | 5 | 1998 | 0.2 |
| 3D Studio MAX | Modelling, Animation | 5 | 1997 | 0.5 |

Blue Moon Rendering Tools (a.k.a.

Jean Claude Nouchy :: Curriculum Vitae – 2020 (Experienced Houdini Teacher, VFX TD/Lead, VFX/On-Set Supervisor) :: Page 1 Date

Jean Claude Nouchy :: Curriculum vitae – 2020 (Experienced Houdini Teacher, VFX TD/Lead, VFX/on-set supervisor) :: Page 1

date of birth: April 12th, 19/0
citizenship: Italian
current residence: London, United Kingdom
languages: Fluent: Italian, English, French. Basic: Spanish.

professional Experience:

2019/Present Jellyfish Pictures LTD – London – CFX/FX/Crowds Supervisor
Dreamworks: How to Train your Dragon, Homecoming – Lead FX (Ammy Award Nominated)
<undisclosed> <in progress> - CFX/FX/Crowds Supervisor

20*</present – Visual Cortex Lab LTD – London – Founder
Visual Cortex Lab focuses on the creation of Visual Effects for movies and commercials and the advanced training for professionals and school students of the (SideFX) Houdini 3D software.
With 20 years of teaching experience, previously Softimage, I have been actively involved in Teaching Houdini in the following schools and Universities, and VFX boutiques in the last several years, including:
AccaEdi – Milan – Italy (2019)
ViFX School – Thiene – Italy (201A-201B)
Event Horizon School – Turin – Italy (201B-201B)
LaSalle University – Barcelona – Spain (201/-201B)
MyKey Studios – Milano Italy (2013-2010)
Framestore – London – United Kingdom
Coffee and TV – London – United Kingdom
GameSys – London – United Kingdom
Rebellion Games – Oxford – United Kingdom
SKY Sport – Isleworth – United Kingdom

2019 – Creat VFX – London – Senior Simulation FX TD
OMEGA: FX TD, 3A0 projected project including grain, liquids, volumes simulations.

201B/2019

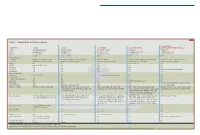

Table 1. Visualization Software Features

| Feature | Alias ImageStudio | StudioTools 11 | Autodesk VIZ 2005 | form.Z 4.5 | Piranesi 3 |
|---|---|---|---|---|---|
| Company | Alias | Alias | Autodesk | auto•des•sys | Informatix Software International |
| E-mail | [email protected] | [email protected] | Use website form | [email protected] | [email protected] |
| Web site | www.alias.com | www.alias.com | www.autodesk.com | www.formz.com | www.informatix.co.uk |
| Price | $3,999 | Starts at $7,500 | $1,995 | $1,495 | $750 |
| Operating systems: supported | Windows XP/2000 Professional | Windows XP/2000 Professional, SGI IRIX | Windows 2000/XP | Windows 98/NT/XP/ME/2000, Macintosh 9/X | Windows 98 and later, Macintosh OS X |
| Operating systems: reviewed | Windows XP Professional SP1 | Windows XP Professional SP1 | Windows XP Professional SP1 | Windows XP Professional SP1 | Windows XP Professional SP1 |
| Modeling | None | Yes | Yes | Solid and Surface | N/A |
| NURBS | Imports NURBS models | Yes | Yes | Yes | N/A |
| Refraction | Yes | Yes | Yes | Yes | N/A |
| Reflection | Yes | Yes | Yes | Yes | By painting with a generated texture |
| Anti-aliasing | Yes | Yes | Yes | Yes | N/A |
| Rendering methods: radiosity | Yes, Final Gather | No | Yes, and global illumination/caustics | Yes | N/A* |
| Rendering methods: ray-tracing | Yes | Yes | Yes | Yes | N/A* |
| Rendering methods: shade/render (Gouraud) | Yes | Yes | Yes | Yes | N/A* |
| Animations | No† | Yes | Yes | Walkthrough, Quicktime VR | N/A |
| Panoramas | Yes | Yes | Yes | Yes | Can paint cubic panoramas and create .MOV files |
| Base file formats | AIS | Alias StudioTools .wire format | MAX | FMZ | EPX, EPP (panoramas) |
| Import file formats | StudioTools (.WIRE), IGES, Maya | IGES, STEP, DXF, PTC Granite, CATIA V4/V5, UGS, VDAFS, VDAIS, JAMA-IS, DES, OBJ, EPS, AI, Inventor, ASCII | 3DS, AI, XML, DEM, DWG, DXF, .FBX, IGES, LS, .STL, VWRL, Inventor (installed) | 3DGF, 3DMF, 3DS, Art*lantis, BMP, DWG, DXF, EPS, FACT, HPGL, IGES, AI, JPEG, LightScape, Lightwave, TIF, MetaFile | EPX, EPP§; Vedute: converts DXF, 3DS; for plans and elevations; JPG, PNG, TIF, raster formats |

Graphics, 3D Modeling, CAD and Visual Computing 6/19/17, 9:52 AM

Computer Graphics World, August 2003

Part 7: Movie Retrospective
Celebrating our 25th year with digital visual effects and scenes from animated features
By Barbara Robertson

Although Looker (1981) was the first film with shaded 3D computer graphics, Tron (1982) was the first with 15 minutes of computer animation and lots of shaded graphics. Some believe Tron's box office failure slowed the adoption of digital effects, but now most films include computer graphics. Indeed, last year, of the top 10 box office films, eight had hundreds of digital effects shots or were created entirely with 3D computer graphics. During the past two decades, CG inventors have been inspired by filmmaking to create exciting new tools. In so doing, they have inspired filmmakers to expand the art of filmmaking, as you can see on the following pages. What's next? CG tools move downstream. Synergy gets energy.

(The date at the beginning of each caption refers to the issue of Computer Graphics World in which the image and credit appeared. The film's release date follows the caption.)

July 1982: For this one-minute "Genesis effect" during Star Trek II: The Wrath of Khan, a dead planet comes to life thanks to the first cinematic use of fractals, particle effects, and 32-bit RGBA paint software from Lucasfilm's Pixar group.

Computing: Free Software and Social Commitment

Universidad de Córdoba
Escuela Politécnica Superior
Degree in Computer Science: Computing
Free Software and Social Commitment: 3D Graphics with Blender
Authors:
• Alejandro Manuel Olivares Luque
• Josué Francisco Grácia Rodríguez
May 2014

Table of Contents

1. Introduction
1.1. What is Blender?
1.2. History of Blender
1.2.1. Versions and revisions
1.3. License
1.4. Other software
1.4.1. Autodesk 3ds Max
1.4.2. Maya
1.4.3. MAXON
1.4.4. Softimage
1.4.5. NewTek
2. Installation
2.1. Installation on Windows
2.1.1. Download
2.1.2. Version
2.1.3. Locate
2.1.4. Installation
2.2. Installation on Linux
2.2.1. Quick installation
2.2.2. Detailed installation
2.3. Installation on Mac
2.3.1. Download
2.3.2. Locate
2.3.3. Mount
2.3.4. Install
3. Interface
3.1. Window system
3.1.1. Default interface and scenes
3.2. Window types
3.3. Workspaces
3.3.1. Screen configuration
3.4. Menus
3.5. Panels
3.6. Buttons and controls
3.6.1. Operation buttons
3.6.2. Toggle buttons
3.6.3. Radio buttons
3.6.4. Numeric buttons
3.6.5. Units

Guide to Graphics Software Tools

Jim X. Chen
With contributions by Chunyang Chen, Nanyang Yu, Yanlin Luo, Yanling Liu and Zhigeng Pan
Guide to Graphics Software Tools
Second edition
Springer

Contents

Preface

Chapter 1 Objects and Models
1.1 Graphics Models and Libraries
1.2 OpenGL Programming
    Understanding Example 1.1
1.3 Frame Buffer, Scan-conversion, and Clipping
    Scan-converting Lines
    Scan-converting Circles and Other Curves
    Scan-converting Triangles and Polygons
    Scan-converting Characters
    Clipping
1.4 Attributes and Antialiasing
    Area Sampling
    Antialiasing a Line with Weighted Area Sampling
1.5 Double-buffering for Animation
1.6 Review Questions
1.7 Programming Assignments

Chapter 2 Transformation and Viewing
2.1 Geometric Transformation
2.2 2D Transformation
    2D Translation
    2D Rotation
    2D Scaling
    Composition of 2D Transformations
2.3 3D Transformation and Hidden-surface Removal
    3D Translation, Rotation, and Scaling
    Transformation in OpenGL
    Hidden-surface Removal
    Collision Detection
    3D Models: Cone, Cylinder, and Sphere
    Composition of 3D Transformations
2.4 Viewing
    2D Viewing
    3D Viewing
    3D Clipping Against a Cube
    Clipping Against an Arbitrary Plane
    An Example of Viewing in OpenGL
2.5 Review Questions
2.6 Programming Assignments

Chapter 3 Color and Lighting
3.1 Color
    RGB Mode and Index Mode
    Eye Characteristics and

Appendix: Graphics Software Tools

Appendix: Graphics Software Tools

Appendix Objectives:
• Provide a comprehensive list of graphics software tools.
• Categorize graphics tools according to their applications.

Many tools come with multiple functions. We put a primary category name behind a tool name in the alphabetic index, and put a tool name into multiple categories in the categorized index according to its functions.

A.1 Graphics Tools Listed by Categories

We have no intention of rating any of the tools. Many tools in the same category are not necessarily of the same quality or at the same capacity level. For example, a software tool may be just a simple function of another powerful package, but it may be free.

Low-level Graphics Libraries
1. DirectX/Direct3D
2. GKS-3D
3. Mesa
4. Microsystem 3D Graphic Tools
5. OpenGL
6. OpenGL For Java (GL4Java; Maps OpenGL and GLU APIs to Java)
7. PHIGS
8. QuickDraw3D
9. XGL

Visualization Tools
1. 3D Grapher (Illustrates and solves mathematical equations in 2D and 3D)
2. 3D Studio VIZ (Architectural and industrial designs and concepts)
3. 3DField (Elevation data visualization)
4. 3DVIEWNIX (Image, volume, soft tissue display, kinematic analysis)
5. Amira (Medicine, biology, chemistry, physics, or engineering data)
6. Analyze (MRI, CT, PET, and SPECT)
7. AVS (Comprehensive suite of data visualization and analysis)
8. Blueberry (Virtual landscape and terrain from real map data)
9. Dice (Data organization, runtime visualization, and graphical user interface tools)
10. Enliten (Views, analyzes, and manipulates complex visualization scenarios)
11.

Darren Blencowe | 3D Generalist W: M: +44 (0)7971 558 041 CV E: [email protected]

Darren Blencowe | 3D Generalist
W: www.darrenblencowe.com M: +44 (0)7971 558 041 E: [email protected]
CV

INTRODUCTION | Bio:

Softimage 3D Generalist/Animator with a high level of Motion Graphics and VFX competency. A digital creative who brings over 27 years of direct creative experience to a wide range of digital content projects across film, broadcast, interactive and new media channels.

corporate identities, promos, commercials, brands, sponsorships, music videos, applications, digital signage, video games, designs, products, interactive

Project involvement has been solo and in teams of up to thirty, with the ability to lead small creative teams while staying budget- and schedule-conscious and planning accordingly. Integral at various stages of CGI development for diverse media platform projects, gaining thorough knowledge of many CGI pipelines and workflows while performing various creative and managerial roles.

independents, agencies, corporates, multi-nationals, broadcasters, publishers, marketing, web, video games, entertainment, education, communications

SKILLS | software:

3D: Softimage: Advanced; Softimage ICE: Intermediate; Maya: Basic; 3DMax: Basic; Houdini: Basic; Lightwave: Basic; 3DTool: Intermediate; Mudbox: Basic; Sculptris: Basic; Unity: Basic; ZBrush: Basic; Substance Painter: Intermediate
2D: AutoCAD: Advanced; Adobe CS6: Advanced; Edraw: Advanced; BouJou: Intermediate
RENDERING: Mental Ray: Advanced; Arnold: Intermediate; Red Shift: Intermediate; Royal Render: Intermediate
OS: MS Windows: Advanced; Mac OS X: Basic; Linux: Basic
PRODUCTION: MS Office: Advanced; SmartSheet: Advanced

Freelance & Contract | OCT 1998 to PRESENT | Highlighted achievements:

JOB ROLES: Pr: Producer, Cr: Creative Director, Sv: Supervisor, Co: Concept, De: Design, Sb: Storyboarding, Pv: Pre-Viz, Mo: Modelling, Tx: Texturing, Ri: Rigging, Li: Lighting, An: Animating, Si: Simulation, Re: Rendering, Cm: Compositing, In: Interactive, Mc: Multi-Channel

2014 – 9Yards Dive into Dosing ‘4K Video Wall’: Responsible as lead Creative and generalist on a 3 min 4K character-led story infographic for international symposia.