CS425 Computer Systems Architecture

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Fill Your Boots: Enhanced Embedded Bootloader Exploits Via Fault Injection and Binary Analysis

IACR Transactions on Cryptographic Hardware and Embedded Systems, ISSN 2569-2925, Vol. 2021, No. 1, pp. 56–81. DOI: 10.46586/tches.v2021.i1.56-81

Fill your Boots: Enhanced Embedded Bootloader Exploits via Fault Injection and Binary Analysis

Jan Van den Herrewegen (1), David Oswald (1), Flavio D. Garcia (1) and Qais Temeiza (2)
(1) School of Computer Science, University of Birmingham, UK, {jxv572,d.f.oswald,f.garcia}@cs.bham.ac.uk
(2) Independent Researcher, [email protected]

Abstract. The bootloader of an embedded microcontroller is responsible for guarding the device’s internal (flash) memory, enforcing read/write protection mechanisms. Fault injection techniques such as voltage or clock glitching have been proven successful in bypassing such protection for specific microcontrollers, but this often requires expensive equipment and/or exhaustive search of the fault parameters. When multiple glitches are required (e.g., when countermeasures are in place) this search becomes of exponential complexity and thus infeasible. Another challenge which makes embedded bootloaders notoriously hard to analyse is their lack of debugging capabilities. This paper proposes a grey-box approach that leverages binary analysis and advanced software exploitation techniques combined with voltage glitching to develop a powerful attack methodology against embedded bootloaders. We showcase our techniques with three real-world microcontrollers as case studies: 1) we combine static and on-chip dynamic analysis to enable a Return-Oriented Programming exploit on the bootloader of the NXP LPC microcontrollers; 2) we leverage on-chip dynamic analysis on the bootloader of the popular STM8 microcontrollers to constrain the glitch parameter search, achieving the first fully-documented multi-glitch attack on a real-world target; 3) we apply symbolic execution to precisely aim voltage glitches at target instructions based on the execution path in the bootloader of the Renesas 78K0 automotive microcontroller. …

Computer Organization and Architecture Designing for Performance Ninth Edition

COMPUTER ORGANIZATION AND ARCHITECTURE: DESIGNING FOR PERFORMANCE, NINTH EDITION
William Stallings

Boston Columbus Indianapolis New York San Francisco Upper Saddle River Amsterdam Cape Town Dubai London Madrid Milan Munich Paris Montréal Toronto Delhi Mexico City São Paulo Sydney Hong Kong Seoul Singapore Taipei Tokyo

Editorial Director: Marcia Horton; Executive Editor: Tracy Dunkelberger; Associate Editor: Carole Snyder; Director of Marketing: Patrice Jones; Marketing Manager: Yez Alayan; Marketing Coordinator: Kathryn Ferranti; Marketing Assistant: Emma Snider; Director of Production: Vince O’Brien; Managing Editor: Jeff Holcomb; Production Project Manager: Kayla Smith-Tarbox; Production Editor: Pat Brown; Manufacturing Buyer: Pat Brown; Creative Director: Jayne Conte

Designer: Bruce Kenselaar; Manager, Visual Research: Karen Sanatar; Manager, Rights and Permissions: Mike Joyce; Text Permission Coordinator: Jen Roach; Cover Art: Charles Bowman/Robert Harding; Lead Media Project Manager: Daniel Sandin; Full-Service Project Management: Shiny Rajesh/Integra Software Services Pvt. Ltd.; Composition: Integra Software Services Pvt. Ltd.; Printer/Binder: Edward Brothers; Cover Printer: Lehigh-Phoenix Color/Hagerstown; Text Font: Times Ten-Roman

Credits: Figure 2.14: reprinted with permission from The Computer Language Company, Inc. Figure 17.10: Buyya, Rajkumar, High-Performance Cluster Computing: Architectures and Systems, Vol I, 1st edition, ©1999. Reprinted and electronically reproduced by permission of Pearson Education, Inc., Upper Saddle River, New Jersey. Figure 17.11: reprinted with permission from Ethernet Alliance. Credits and acknowledgments borrowed from other sources and reproduced, with permission, in this textbook appear on the appropriate page within text.

Copyright © 2013, 2010, 2006 by Pearson Education, Inc., publishing as Prentice Hall. All rights reserved. Manufactured in the United States of America. …

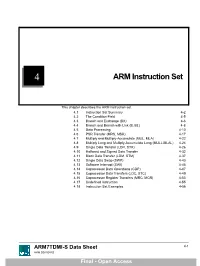

ARM Instruction Set

4 ARM Instruction Set

This chapter describes the ARM instruction set. (ARM7TDMI-S Data Sheet, ARM DDI 0084D, Final - Open Access)

4.1 Instruction Set Summary
4.2 The Condition Field
4.3 Branch and Exchange (BX)
4.4 Branch and Branch with Link (B, BL)
4.5 Data Processing
4.6 PSR Transfer (MRS, MSR)
4.7 Multiply and Multiply-Accumulate (MUL, MLA)
4.8 Multiply Long and Multiply-Accumulate Long (MULL, MLAL)
4.9 Single Data Transfer (LDR, STR)
4.10 Halfword and Signed Data Transfer
4.11 Block Data Transfer (LDM, STM)
4.12 Single Data Swap (SWP)
4.13 Software Interrupt (SWI)
4.14 Coprocessor Data Operations (CDP)
4.15 Coprocessor Data Transfers (LDC, STC)
4.16 Coprocessor Register Transfers (MRC, MCR)
4.17 Undefined Instruction
4.18 Instruction Set Examples

4.1 Instruction Set Summary

4.1.1 Format summary

The ARM instruction set formats are shown below. Fields are listed from bit 31 down to bit 0.

Data Processing / PSR Transfer: Cond | 0 0 | I | Opcode | S | Rn | Rd | Operand 2
Multiply: Cond | 0 0 0 0 0 0 | A | S | Rd | Rn | Rs | 1 0 0 1 | Rm
Multiply Long: Cond | 0 0 0 0 1 | U | A | S | RdHi | RdLo | Rn | 1 0 0 1 | Rm
Single Data Swap: Cond | 0 0 0 1 0 | B | 0 0 | Rn | Rd | 0 0 0 0 | 1 0 0 1 | Rm
Branch and Exchange: Cond | 0 0 0 1 0 0 1 0 | 1 1 1 1 1 1 1 1 1 1 1 1 | 0 0 0 1 | Rn
Halfword Data Transfer, register offset: Cond | 0 0 0 | P | U | 0 | W | L | Rn | Rd | 0 0 0 0 | 1 | S | H | 1 | Rm
Halfword Data Transfer, immediate offset: Cond | 0 0 0 | P | U | 1 | W | L | Rn | Rd | Offset | 1 | S | H | 1 | Offset
Cond | 0 …
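As a rough illustration of the Data Processing format listed in the summary above (Cond, I, Opcode, S, Rn, Rd, Operand 2), the sketch below unpacks a 32-bit word into those fields. The function name `decode_data_processing` and the dictionary keys are hypothetical, and the bit positions are an assumption based on the standard ARM data-processing layout (Cond in bits 31-28, I in bit 25, Opcode in bits 24-21, S in bit 20, Rn in bits 19-16, Rd in bits 15-12, Operand 2 in bits 11-0); the excerpt itself does not spell them out.

```python
def decode_data_processing(word: int) -> dict[str, int]:
    """Split a 32-bit ARM data-processing instruction word into its fields.

    Bit positions are assumed from the standard ARM layout; this sketch does
    not check that bits 27-26 are 00 or distinguish the other formats.
    """
    if not 0 <= word <= 0xFFFFFFFF:
        raise ValueError("expected a 32-bit instruction word")
    return {
        "cond": (word >> 28) & 0xF,      # condition field, bits 31-28
        "i": (word >> 25) & 0x1,         # immediate-operand flag, bit 25
        "opcode": (word >> 21) & 0xF,    # operation code, bits 24-21
        "s": (word >> 20) & 0x1,         # set-condition-codes flag, bit 20
        "rn": (word >> 16) & 0xF,        # first operand register, bits 19-16
        "rd": (word >> 12) & 0xF,        # destination register, bits 15-12
        "operand2": word & 0xFFF,        # second operand, bits 11-0
    }


# 0xE0810002 is the usual encoding of ADD r0, r1, r2 with the "always" condition.
fields = decode_data_processing(0xE0810002)
```

For this word, `fields["cond"]` is `0xE`, `fields["opcode"]` is `0b0100` (ADD), `fields["rn"]` is 1 and `fields["rd"]` is 0.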

3.2 the CORDIC Algorithm

UC San Diego Electronic Theses and Dissertations

Title: Improved VLSI architecture for attitude determination computations
Permalink: https://escholarship.org/uc/item/5jf926fv
Author: Arrigo, Jeanette Fay Freauf
Publication Date: 2006
Peer reviewed | Thesis/dissertation

eScholarship.org, powered by the California Digital Library, University of California

UNIVERSITY OF CALIFORNIA, SAN DIEGO

Improved VLSI Architecture for Attitude Determination Computations

A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Electrical and Computer Engineering (Electronic Circuits and Systems) by Jeanette Fay Freauf Arrigo

Committee in charge: Professor Paul M. Chau, Chair; Professor C.K. Cheng; Professor Sujit Dey; Professor Lawrence Larson; Professor Alan Schneider

2006

Copyright Jeanette Fay Freauf Arrigo, 2006. All rights reserved.

DEDICATION

This thesis is dedicated to my husband Dale Arrigo for his encouragement, support and model of perseverance, and to my father Eugene Freauf for his patience during my pursuit. In memory of my mother Fay Freauf and grandmother Fay Linton Thoreson, incredible mentors and great advocates of the quest for knowledge.

TABLE OF CONTENTS

Signature Page
Dedication
Table of Contents
…

Consider an Instruction Cycle Consisting of Fetch, Operators Fetch (Immediate/Direct/Indirect), Execute and Interrupt Cycles

Module-2, Unit-3 Instruction Execution

Question 1: Consider an instruction cycle consisting of fetch, operand fetch (immediate/direct/indirect), execute and interrupt cycles. Explain the purpose of these four cycles.

Solution 1: The life of an instruction passes through four phases: (i) fetch, (ii) decode and operand fetch, (iii) execute and (iv) interrupt. The purposes of these phases are as follows.

1. Fetch

Under the stored-program concept, instructions reside in memory along with data. So the first phase is the “fetch”, which begins with retrieving the address stored in the Program Counter (PC). The address stored in the PC refers to the memory location holding the instruction to be executed next. That address is placed on the address bus and the memory is set to read mode. The contents of the corresponding memory location (i.e., the instruction) are transferred via the data bus to a special register called the Instruction Register (IR), which holds the instruction to be executed. The PC is then incremented to point to the address from which the next instruction is to be fetched. So the fetch phase consists of four steps:

a) MAR <= PC (the address of the next instruction is copied from the program counter into the MAR)
b) MBR <= (MEMORY) (the contents of the addressed location arrive on the data bus and are copied into the MBR)
c) PC <= PC + 1 (the PC is incremented by the instruction length)
d) IR <= MBR (the instruction is transferred from the MBR to the IR, freeing the MBR for future data fetches)

2. …
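The four fetch steps a) to d) above can be sketched as a tiny register-level simulation. This is only an illustration under simplifying assumptions: memory is modelled as a Python list with one instruction per cell (so the instruction length is 1), and the class name `CPU` and the sample memory contents are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CPU:
    """Minimal register model for the fetch phase (assumed word-addressed memory)."""
    memory: list[int]
    pc: int = 0    # Program Counter
    mar: int = 0   # Memory Address Register
    mbr: int = 0   # Memory Buffer Register
    ir: int = 0    # Instruction Register

    def fetch(self) -> int:
        self.mar = self.pc                # a) MAR <= PC
        self.mbr = self.memory[self.mar]  # b) MBR <= (MEMORY)
        self.pc += 1                      # c) PC <= PC + 1 (instruction length assumed to be 1)
        self.ir = self.mbr                # d) IR <= MBR
        return self.ir


# Arbitrary sample "instructions"; the values carry no meaning here.
cpu = CPU(memory=[0x1A2B, 0x3C4D, 0x0000])
first = cpu.fetch()
second = cpu.fetch()
```

After the two calls, `cpu.pc` is 2, `cpu.mar` is 1 and `cpu.ir` holds the second word, which mirrors how the PC always runs one step ahead of the instruction currently in the IR.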

Akukwe Michael Lotachukwu 18/Sci01/014 Computer Science

AKUKWE MICHAEL LOTACHUKWU 18/SCI01/014 COMPUTER SCIENCE

The purpose of every computer is some form of data processing. The CPU supports data processing by performing the functions of fetch, decode and execute on programmed instructions. Taken together, these functions are frequently referred to as the instruction cycle. In addition to the instruction cycle functions, the CPU performs fetch and write functions on data.

When a program runs on a computer, instructions are stored in computer memory until they're executed. The CPU uses a program counter to fetch the next instruction from memory, where it's stored as binary machine code (assembly code is its human-readable form). The CPU decodes the instruction into the control signals needed to carry it out. Once this is done, the CPU does what the instruction tells it to: performing an operation, fetching or storing data, or adjusting the program counter to jump to a different instruction.

The types of operations that typically can be performed by the CPU include simple math functions like addition, subtraction, multiplication and division. The CPU can also perform comparisons between data objects to determine if they're equal. All the amazing things that computers can do are performed with these and a few other basic operations. After an instruction is executed, the next instruction is fetched and the cycle continues.

While performing the execute function of the instruction cycle, the CPU may be asked to execute an instruction that requires data. For example, executing an arithmetic function requires the numbers that will be used for the calculation. To deliver the necessary data, there are instructions to fetch data from memory and write data that has been processed back to memory. …

Parallel Computing

Lecture 1: Computer Organization

Outline
• Overview of parallel computing
• Overview of computer organization
  – Intel 8086 architecture
• Implicit parallelism
• von Neumann bottleneck
• Cache memory
  – Writing cache-friendly code

Why parallel computing
• Solving an $n \times n$ linear system $Ax = b$ by using Gaussian elimination takes $\approx \frac{1}{3}n^3$ flops.
• On a Core i7 975 @ 4.0 GHz, which is capable of about 60-70 Gigaflops:

| n | flops | time |
|---|---|---|
| 1000 | 3.3×10^8 | 0.006 seconds |
| 1000000 | 3.3×10^17 | 57.9 days |

What is parallel computing?
• Serial computing
• Parallel computing
https://computing.llnl.gov/tutorials/parallel_comp

Milestones in Computer Architecture
• Analytical Engine (mechanical device), 1833
  – Forerunner of the modern digital computer, Charles Babbage (1792-1871) at the University of Cambridge
• Electronic Numerical Integrator and Computer (ENIAC), 1946
  – Presper Eckert and John Mauchly at the University of Pennsylvania
  – The first completely electronic, operational, general-purpose analytical calculator. 30 tons, 72 square meters, 200 kW.
  – Read in 120 cards per minute; addition took 200 µs, division took 6 ms.
• IAS machine, 1952
  – John von Neumann at Princeton’s Institute for Advanced Study (IAS)
  – Programs could be represented in digital form in the computer memory, along with data. Arithmetic could be implemented using binary numbers.
  – Most current machines use this design
• The transistor was invented at Bell Labs in 1948 by J. Bardeen, W. Brattain and W. Shockley.
• PDP-1, 1960, DEC
  – First minicomputer (transistorized computer)
• PDP-8, 1965, DEC
  – A single bus …
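The arithmetic behind the "Why parallel computing" slide above can be checked with a short calculation. This is a sketch under stated assumptions: it uses the roughly n³/3 flop count that matches the slide's table, and it brackets the machine's sustained rate at 60 and 70 Gigaflops (the slide gives only that range, not an exact figure). The function names are hypothetical.

```python
SECONDS_PER_DAY = 86_400


def gaussian_elimination_flops(n: int) -> float:
    """Approximate flop count for solving an n x n system (n^3 / 3, as in the slide)."""
    return n ** 3 / 3


def runtime_seconds(n: int, flops_per_second: float) -> float:
    """Estimated wall time at a given sustained floating-point rate."""
    return gaussian_elimination_flops(n) / flops_per_second


# Assumed bounds on the sustained rate, taken from the slide's "60-70 Gigaflops".
SLOW_RATE, FAST_RATE = 60e9, 70e9

small_flops = gaussian_elimination_flops(1_000)
small_time = runtime_seconds(1_000, SLOW_RATE)
large_days_fast = runtime_seconds(1_000_000, FAST_RATE) / SECONDS_PER_DAY
large_days_slow = runtime_seconds(1_000_000, SLOW_RATE) / SECONDS_PER_DAY
```

With these assumptions the n = 1000 case costs about 3.3×10^8 flops and a few milliseconds, while n = 1000000 lands between roughly 55 and 64 days, which brackets the 57.9 days quoted on the slide.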

Instruction Cycle

INSTRUCTION CYCLE
SUBJECT: COMPUTER ORGANIZATION & ARCHITECTURE
SAVIYA VARGHESE, Dept of BCA, 2020-21

A computer instruction is a group of bits that instructs the computer to perform a particular task. Each instruction cycle is subdivided into different parts. This lesson explains the phases of the instruction cycle in detail.

A computer instruction is a binary code that specifies a sequence of micro-operations for the computer. Instruction codes together with data are stored in memory. The computer reads each instruction from memory and places it in a control register. The control unit then interprets the binary code of the instruction and proceeds to execute it by issuing a sequence of micro-operations.

The instruction cycle (also known as the fetch–decode–execute cycle, or simply the fetch-execute cycle) is the cycle that the central processing unit (CPU) follows from boot-up until the computer has shut down in order to process instructions.

• A program consisting of a sequence of instructions is executed in the computer by going through a cycle for each instruction.
• Each instruction cycle is subdivided into sub-cycles or phases. They are:
  • Fetch an instruction from memory
  • Decode the instruction
  • Read the effective address from memory if the instruction has an indirect address
  • Execute the instruction

This cycle repeats indefinitely unless a HALT instruction is encountered.

The basic computer has three instruction code formats. Each format has 16 bits. The operation code (opcode) part of the instruction contains three bits, and the meaning of the remaining 13 bits depends on the operation code encountered. A memory-reference instruction uses 12 bits to specify an address and one bit to specify the addressing mode I. …
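The 16-bit memory-reference format described above (one mode bit I, a 3-bit opcode and a 12-bit address) can be unpacked with a few shifts and masks. The excerpt does not say where each field sits in the word, so the layout below is an assumption: I in bit 15, the opcode in bits 14-12 and the address in bits 11-0. The function name `decode_memory_reference` is hypothetical.

```python
def decode_memory_reference(word: int) -> tuple[int, int, int]:
    """Return (indirect_bit, opcode, address) for a 16-bit instruction word.

    Assumed layout: bit 15 = addressing mode I, bits 14-12 = opcode,
    bits 11-0 = address.
    """
    if not 0 <= word <= 0xFFFF:
        raise ValueError("expected a 16-bit instruction word")
    indirect = (word >> 15) & 0x1    # 1 means the address field is indirect
    opcode = (word >> 12) & 0b111    # three-bit operation code
    address = word & 0x0FFF          # twelve-bit address
    return indirect, opcode, address


# 0xA123 = 1010 0001 0010 0011: I = 1, opcode = 010, address = 0x123
decoded = decode_memory_reference(0xA123)
```

When the returned mode bit is 1, the control unit would need the extra "read effective address" phase from the list above before executing the instruction.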

VLIW Architectures Lisa Wu, Krste Asanovic

CS252 Spring 2017 Graduate Computer Architecture
Lecture 10: VLIW Architectures
Lisa Wu, Krste Asanovic
http://inst.eecs.berkeley.edu/~cs252/sp17
(Slide footer: CS252, Fall 2015, Lecture 10 © Krste Asanovic, 2015)

Last Time in Lecture 9
Vector supercomputers
§ Vector register versus vector memory
§ Scaling performance with lanes
§ Stripmining
§ Chaining
§ Masking
§ Scatter/Gather

Sequential ISA Bottleneck
[Figure: sequential source code (a = foo(b); for (i=0, i< …) → superscalar compiler (find independent operations, schedule operations) → sequential machine code → superscalar processor (check instruction dependencies, schedule execution)]

VLIW: Very Long Instruction Word
Instruction slots: Int Op 1 | Int Op 2 | Mem Op 1 | Mem Op 2 | FP Op 1 | FP Op 2
Two Integer Units, Single Cycle Latency
Two Load/Store Units, Three Cycle Latency
Two Floating-Point Units, Four Cycle Latency
§ Multiple operations packed into one instruction
§ Each operation slot is for a fixed function
§ Constant operation latencies are specified
§ Architecture requires guarantee of:
  - Parallelism within an instruction => no cross-operation RAW check
  - No data use before data ready => no data interlocks

Early VLIW Machines
§ FPS AP120B (1976)
  - scientific attached array processor
  - first commercial wide instruction machine
  - hand-coded vector math libraries using software pipelining and loop unrolling
§ Multiflow Trace (1987)
  - commercialization of ideas from Fisher’s Yale group including “trace scheduling”
  - available …

18-741 Advanced Computer Architecture Lecture 1: Intro And

18-447 Computer Architecture
Lecture 6: Multi-cycle Microarchitectures
Prof. Onur Mutlu, Carnegie Mellon University, Spring 2013, 1/28/2013

Reminder: Homeworks
• Homework 1
  – Due today, midnight
  – Turn in via AFS (hand-in directories)
• Homework 2
  – Will be assigned later today. Stay tuned…
  – ISA concepts, ISA vs. microarchitecture, microcoded machines

Reminder: Lab Assignment 1
• Due this Friday (Feb 1), at the end of Friday lab
• A functional C-level simulator for a subset of the MIPS ISA
• Study the MIPS ISA Tutorial
• TAs will continue to cover this in Lab Sessions this week

Lookahead: Lab Assignment 2
• Lab Assignment 1.5
  – Verilog practice
  – Not to be turned in
• Lab Assignment 2
  – Due Feb 15
  – Single-cycle MIPS implementation in Verilog
• All labs are individual assignments
• No collaboration; please respect the honor code

Lookahead: Extra Credit for Lab Assignment 2
• Complete your normal (single-cycle) implementation first, and get it checked off in lab.
• Then, implement the MIPS core using a microcoded approach similar to what we will discuss in class.
• We are not specifying any particular details of the microcode format or the microarchitecture; you can be creative.
• For the extra credit, the microcoded implementation should execute the same programs that your ordinary implementation does, and you should demo it by the normal lab deadline.

Readings for Today
• P&P, Revised Appendix C
  – Microarchitecture of the LC-3b
  – Appendix A (LC-3b ISA) will be useful in following this
• P&H, Appendix D
  – Mapping Control to Hardware
• Optional
  – Maurice Wilkes, “The Best Way to Design an Automatic Calculating Machine,” Manchester Univ. …

Introduction to Cpu

microprocessors and microcontrollers - sadri

INTRODUCTION TO CPU
Mohammad Sadegh Sadri
Session 2, Microprocessor Course, Isfahan University of Technology, Sep., Oct., 2010

Agenda
• Review of the first session
• A tour of silicon world!
• Basic definition of CPU
• Von Neumann Architecture
  • Example: Basic ARM7 Architecture
  • A brief detailed explanation of ARM7 Architecture
• Harvard Architecture
  • Example: TMS320C25 DSP

Agenda (2)
• History of CPUs
  • 4004
  • TMS1000
  • 8080
  • Z80
  • Am2901
  • 8051
  • PIC16

Von Neumann Architecture
• Same Memory
  • Program
  • Data
• Single Bus

Sample: ARM7T CPU

Harvard Architecture
• Separate memories for program and data

TMS320C25 DSP

Silicon Market

| Rank 2009 | Rank 2008 | Company | Country of origin | Revenue (million $ USD) | 2009/2008 changes | Market share |
|---|---|---|---|---|---|---|
| 1 | 1 | Intel Corporation | USA | 32 410 | -4.0% | 14.1% |
| 2 | 2 | Samsung Electronics | South Korea | 17 496 | +3.5% | 7.6% |
| 3 | 3 | Toshiba Semiconductors | Japan | 10 319 | -6.9% | 4.5% |
| 4 | 4 | Texas Instruments | USA | 9 617 | -12.6% | 4.2% |
| 5 | 5 | STMicroelectronics | France/Italy | 8 510 | -17.6% | 3.7% |
| 6 | 8 | Qualcomm | USA | 6 409 | -1.1% | 2.8% |
| 7 | 9 | Hynix | South Korea | 6 246 | +3.7% | 2.7% |
| 8 | 12 | AMD | USA | 5 207 | -4.6% | 2.3% |
| 9 | 6 | Renesas Technology | Japan | 5 153 | -26.6% | 2.2% |
| 10 | 7 | Sony | Japan | 4 468 | -35.7% | 1.9% |

…

Fpga-Based Digital Phase-Locked Loop Analysis and Implementation

FPGA-BASED DIGITAL PHASE-LOCKED LOOP ANALYSIS AND IMPLEMENTATION

BY DAN HU

THESIS

Submitted in partial fulfillment of the requirements for the degree of Master of Science in Electrical and Computer Engineering in the Graduate College of the University of Illinois at Urbana-Champaign, 2011

Urbana, Illinois

Advisers: Professor Steven J. Franke; Visiting Lecturer Christopher D. Schmitz

Abstract

The thesis presents a digital PLL project that will be used as an ECE 463 lab module and serve as a platform for future communication research projects. Field Programmable Gate Array (FPGA) technology is used for all digital signal processing tasks. A Direct Digital Synthesizer (DDS) is used to synthesize analog output, the frequency of which is controlled digitally by the FPGA. This system is implemented in a way that makes it educational and suitable for a lab module. Unlike purely digital PLL, this project involves several analog circuits soldered on PCBs, which will help the students visualize the signal flow in the PLL and get some exposure to mixed-signal systems.

Contents

1 Introduction
2 Theory
  2.1 PLL theory
  2.2 Phase detector
  2.3 DDS theory
  2.4 Figures
3 Implementation and Analysis
  3.1 FPGA
  3.2 Other hardware
  3.3 Modeling of actual system
  3.4 Figures
4 Experiment and Characterization
  4.1 Constant parameters in experiment setup
  4.2 Response to step stimulus
  4.3 Response to sinusoidal stimulus
  4.4 Processing lag
  4.5 Lock range
  4.6 Figures
5 Conclusion …