Recreational Fishing Technical Report CARIBBEAN REGIONAL

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Isopoda: Cymothoidae) in the Brazilian Coastal Waters with Aspects of Host-Parasite Interaction*

NOTE. BRAZILIAN JOURNAL OF OCEANOGRAPHY, 58(special issue IICBBM):73-77, 2010. NEW HOST RECORD FOR Livoneca redmanni (LEACH, 1818) (ISOPODA: CYMOTHOIDAE) IN THE BRAZILIAN COASTAL WATERS WITH ASPECTS OF HOST-PARASITE INTERACTION* Eudriano Florêncio dos Santos Costa 1,** and Sathyabama Chellappa 1,** Universidade Federal do Rio Grande do Norte, Centro de Biociência - Programa de Pós-Graduação em Bioecologia Aquática (Praia de Mãe Luiza, s/n, 59014-100 Natal, RN, Brasil) **Corresponding authors: [email protected]; [email protected]

Cymothoids are among the largest parasites of fishes. These are the isopods commonly seen in numerous families and species of fishes of commercial importance, in tropical and subtropical waters, attached on the body surface, in the mouth or on the gills of their hosts (BRUSCA, 1981; BUNKLEY-WILLIAMS; WILLIAMS, 1998; LESTER; ROUBAL, 2005). Some cymothoids form pouches in the lateral musculature of few freshwater and marine fishes; are highly host and site specific (BRUSCA, 1981; BUNKLEY-WILLIAMS; WILLIAMS, 1998). They are protandrous hermaphrodites which are unable to leave their hosts after becoming females.

The fish Chloroscombrus chrysurus (Linnaeus, 1766) (Osteichthyes: Carangidae), commonly known as Atlantic bumper, has a wide distribution range along the shallow Brazilian tropical waters, mainly in bays and estuarine areas. In the western Atlantic there are records of this species from Massachusetts, USA, to Northern Argentina. This species is very common in Brazilian Southeast region where they reach a total body length of 300 mm (MENEZES; FIGUEIREDO, 1980). The length at first spawning for C. chrysurus has been registered at 95 mm of total length in Northeast region (CUNHA et -

© Iccat, 2007

A5 By-catch Species APPENDIX 5: BY-CATCH SPECIES A.5 By-catch species By-catch is the unintentional/incidental capture of non-target species during fishing operations. Different types of fisheries have different types and levels of by-catch, depending on the gear used, the time, area and depth fished, etc. Article IV of the Convention states: "the Commission shall be responsible for the study of the population of tuna and tuna-like fishes (the Scombriformes with the exception of Trichiuridae and Gempylidae and the genus Scomber) and such other species of fishes exploited in tuna fishing in the Convention area as are not under investigation by another international fishery organization". The following is a list of by-catch species recorded as being ever caught by any major tuna fishery in the Atlantic/Mediterranean. Note that the lists are qualitative and are not indicative of quantity or mortality. Thus, the presence of a species in the lists does not imply that it is caught in significant quantities, or that individuals that are caught necessarily die. Skates and rays Scientific names Common name Code LL GILL PS BB HARP TRAP OTHER Dasyatis centroura Roughtail stingray RDC X Dasyatis violacea Pelagic stingray PLS X X X X Manta birostris Manta ray RMB X X X Mobula hypostoma RMH X Mobula lucasana X Mobula mobular Devil ray RMM X X X X X Myliobatis aquila Common eagle ray MYL X X Pteuromylaeus bovinus Bull ray MPO X X Raja fullonica Shagreen ray RJF X Raja straeleni Spotted skate RFL X Rhinoptera spp Cownose ray X Torpedo nobiliana Torpedo -

The Serra Spanish Mackerel Fishery (Scomberomorus Brasiliensis

ISSN 1519-6984 (Print) ISSN 1678-4375 (Online) THE INTERNATIONAL JOURNAL ON NEOTROPICAL BIOLOGY THE INTERNATIONAL JOURNAL ON GLOBAL BIODIVERSITY AND ENVIRONMENT Original Article The Serra Spanish mackerel fishery (Scomberomorus brasiliensis – Teleostei) in Southern Brazil: the growing landings of a high trophic level resource A pesca da cavala, Scomberomorus brasiliensis (Teleostei), no sul do Brasil: o aumento nos desembarques de um recurso de alto nível trófico P. T. C. Chavesa* and P. O. Birnfelda aUniversidade Federal do Paraná – UFPR, Zoology Department, Curitiba, Parana, Brasil Abstract In fisheries, the phenomenon known as fishing down food webs is supposed to be a consequence of overfishing, which would be reflected in a reduction in the trophic level of landings. In such scenarios, the resilience of carnivorous, top predator species is particularly affected, making these resources the first to be depleted. The Serra Spanish mackerel, Scomberomorus brasiliensis, exemplifies a predator resource historically targeted by artisanal fisheries on the Brazilian coast. The present work analyzes landings in three periods within a 50-year timescale on the Parana coast, Southern Brazil, aiming to evaluate whether historical production has supposedly declined. Simultaneously, the diet was analyzed to confirm carnivorous habits and evaluate the trophic level in this region. Surprisingly, the results show that from the 1970’s to 2019 Serra Spanish mackerel production grew relatively to other resources, as well as in individual values. The trophic level was calculated as 4.238, similar to other Scomberomorus species, consisting of a case where landings increase over time, despite the high trophic level and large body size of the resource. -

SMALL TUNAS SMT-1. Generalities the Species Under The

2019 SCRS REPORT 9.12 SMT – SMALL TUNAS SMT-1. Generalities The species under the Small Tunas Species Group include the following tuna and tuna-like species: – BLF Blackfin tuna (Thunnus atlanticus) – BLT Bullet tuna (Auxis rochei) – BON Atlantic bonito (Sarda sarda) – BOP Plain bonito (Orcynopsis unicolor) – BRS Serra Spanish mackerel (Scomberomorus brasiliensis) – CER Cero (Scomberomorus regalis) – FRI Frigate tuna (Auxis thazard) – KGM King mackerel (Scomberomorus cavalla) – LTA Little tunny (Euthynnus alletteratus) – MAW West African Spanish mackerel (Scomberomorus tritor) – SSM Atlantic Spanish mackerel (Scomberomorus maculatus) – WAH Wahoo (Acanthocybium solandri) – DOL Dolphinfish (Coryphaena hippurus) Knowledge on the biology and fishery of small tunas is very fragmented. Furthermore, the quality of the knowledge varies according to the species concerned. This is due in large part to the fact that these species are often perceived to have little economic importance compared to other tunas and tuna-like species, and owing to the difficulties in conducting sampling of the landings from artisanal fisheries, which constitute a high proportion of the fisheries exploiting small tuna resources. The large industrial fleets often discard small tuna catches at sea or sell them on local markets mixed with other by-catches, especially in Africa. The amount caught is rarely reported in logbooks; however observer programs from purse seine fleets have recently provided estimates of catches of small tunas. Small tuna species can reach high levels of catches and values in some years and have a very high relevance from a social and economic point of view, because they are important for many coastal communities in all areas and a main source of food. -

Intrinsic Vulnerability in the Global Fish Catch

The following appendix accompanies the article Intrinsic vulnerability in the global fish catch William W. L. Cheung1,*, Reg Watson1, Telmo Morato1,2, Tony J. Pitcher1, Daniel Pauly1 1Fisheries Centre, The University of British Columbia, Aquatic Ecosystems Research Laboratory (AERL), 2202 Main Mall, Vancouver, British Columbia V6T 1Z4, Canada 2Departamento de Oceanografia e Pescas, Universidade dos Açores, 9901-862 Horta, Portugal *Email: [email protected] Marine Ecology Progress Series 333:1–12 (2007) Appendix 1. Intrinsic vulnerability index of fish taxa represented in the global catch, based on the Sea Around Us database (www.seaaroundus.org) Taxonomic Intrinsic level Taxon Common name vulnerability Family Pristidae Sawfishes 88 Squatinidae Angel sharks 80 Anarhichadidae Wolffishes 78 Carcharhinidae Requiem sharks 77 Sphyrnidae Hammerhead, bonnethead, scoophead shark 77 Macrouridae Grenadiers or rattails 75 Rajidae Skates 72 Alepocephalidae Slickheads 71 Lophiidae Goosefishes 70 Torpedinidae Electric rays 68 Belonidae Needlefishes 67 Emmelichthyidae Rovers 66 Nototheniidae Cod icefishes 65 Ophidiidae Cusk-eels 65 Trachichthyidae Slimeheads 64 Channichthyidae Crocodile icefishes 63 Myliobatidae Eagle and manta rays 63 Squalidae Dogfish sharks 62 Congridae Conger and garden eels 60 Serranidae Sea basses: groupers and fairy basslets 60 Exocoetidae Flyingfishes 59 Malacanthidae Tilefishes 58 Scorpaenidae Scorpionfishes or rockfishes 58 Polynemidae Threadfins 56 Triakidae Houndsharks 56 Istiophoridae Billfishes 55 Petromyzontidae -

© Iccat, 2007

A2.2 ICCAT Species Codes. APPENDIX 2.2: SPECIES CODES. Codes used to identify the ICCAT tuna and tuna-like species as well as by-catch species. ICCAT MANUAL, 1st Edition (January 2010).

Tuna and tuna-like species

| Numerical | Alphabetical | Scientific Name | English | Français | Español |
|---|---|---|---|---|---|
| 1 | BFT | Thunnus thynnus | Northern bluefin tuna | Thon rouge du Nord | Atún común (Cimarrón) |
| 2 | SBF | Thunnus maccoyii | Southern bluefin tuna | Thon rouge du Sud | Atún del Sur |
| 3 | YFT | Thunnus albacares | Yellowfin tuna | Albacore | Rabil |
| 4 | ALB | Thunnus alalunga | Albacore | Germon | Atún blanco |
| 5 | BET | Thunnus obesus | Bigeye tuna | Thon obèse(=Patudo) | Patudo |
| 6 | BLF | Thunnus atlanticus | Blackfin tuna | Thon à nageoires noires | Atún des aletas negras |
| 7 | LTA | Euthynnus alletteratus | Little tunny(=Atl.black skipjack) | Thonine commune | Bacoreta |
| 8 | SKJ | Katsuwonus pelamis | Skipjack tuna | Listao | Listado |
| 9 | BON | Sarda sarda | Atlantic bonito | Bonite à dos rayé | Bonito del Atlántico |
| 10 | FRI | Auxis thazard | Frigate tuna | Auxide | Melva |
| 11 | BOP | Orcynopsis unicolor | Plain bonito | Palomette | Tasarte |
| 12 | WAH | Acanthocybium solandri | Wahoo | Thazard-bâtard | Peto |
| 13 | SSM | Scomberomorus maculatus | Atlantic Spanish mackerel | Thazard atlantique | Carite atlántico |
| 14 | KGM | Scomberomorus cavalla | King mackerel | Thazard barré | Carite lucio |
| 15 | SAI | Istiophorus albicans | Atlantic sailfish | Voilier de l'Atlantique | Pez vela del Atlántico |
| 16 | BLM | Makaira indica | Black marlin | Makaire noir | Aguja negra |
| 17 | BUM | Makaira nigricans | Atlantic blue marlin | Makaire bleu de l'Atlantique | Aguja azul del Atlántico |
| 18 | WHM | Tetrapturus albidus | Atlantic white marlin | Makaire blanc de l'Atlantique | Aguja blanca del Atlántico |
| 19 | SWO | Xiphias gladius | Swordfish | Espadon | Pez espada |
| 20 | SPF | Tetrapturus pfluegeri | Longbill spearfish | Makaire bécune | Aguja picuda |
| 21 | TUN | Thunnini | Tunas nei | Thonidés nca | Atunes nep |
| 23 | YOU | | Young tunas | Jeunes thonidés | Atunes jóvenes |

24 BIL Istiophoridae Marlins, sailfishes, etc. -

Fao Species Catalogue

FAO Fisheries Synopsis No. 125, Volume 2 FIR/S125 Vol. 2 FAO SPECIES CATALOGUE VOL. 2 SCOMBRIDS OF THE WORLD AN ANNOTATED AND ILLUSTRATED CATALOGUE OF TUNAS, MACKERELS, BONITOS, AND RELATED SPECIES KNOWN TO DATE UNITED NATIONS DEVELOPMENT PROGRAMME FOOD AND AGRICULTURE ORGANIZATION OF THE UNITED NATIONS FAO Fisheries Synopsis No. 125, Volume 2 FIR/S125 Vol. 2 FAO SPECIES CATALOGUE VOL. 2 SCOMBRIDS OF THE WORLD An Annotated and Illustrated Catalogue of Tunas, Mackerels, Bonitos and Related Species Known to Date prepared by Bruce B. Collette and Cornelia E. Nauen NOAA, NMFS Marine Resources Service Systematics Laboratory Fishery Resources and Environment Division National Museum of Natural History FAO Fisheries Department Washington, D.C. 20560, USA 00100 Rome, Italy UNITED NATIONS DEVELOPMENT PROGRAMME FOOD AND AGRICULTURE ORGANIZATION OF THE UNITED NATIONS Rome 1983 The designations employed and the presentation of material in this publication do not imply the expression of any opinion whatsoever on the part of the Food and Agriculture Organization of the United Nations concerning the legal status of any country, territory, city or area or of its authorities, or concerning the delimitation of its frontiers or boundaries. M-42 ISBN 92-5-101381-0 All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying or otherwise, without the prior permission of the copyright owner. Applications for such permission, with a statement of the purpose and extent of the reproduction, should be addressed to the Director, Publications Division, Food and Agriculture Organization of the United Nations, Via delle Terme di Caracalla, 00100 Rome Italy. -

Ovarian Development and Spawning of Serra Spanish Mackerel in Coastal Waters of Northeastern Brazil

Ovarian development and spawning of Serra Spanish mackerel in coastal waters of Northeastern Brazil Chellappa, S.a*, Lima, JTAX.b, Araújo, A.b and Chellappa, NT.a aPrograma de Pós-Graduação em Ecologia, Departamento de Oceanografia e Limnologia, Centro de Biociências, Universidade Federal do Rio Grande do Norte – UFRN, CEP 59072-970, Natal, RN, Brazil bPrograma de Pós-Graduação em Psicobiologia, Departamento de Fisiologia, Centro de Biociências, Universidade Federal do Rio Grande do Norte – UFRN, Av. Salgado Filho, 3000, Lagoa Nova, CEP 59072-970, Natal, RN, Brazil *e-mail: [email protected] Received April 6, 2009 – Accepted May 26, 2009 – Distributed May 31, 2010 (With 5 figures) Abstract The Serra Spanish mackerel, Scomberomorus brasiliensis is an important fishery resource of the Atlantic Ocean and is a major component of artisanal fisheries in northern and northeastern Brazil. Ovarian development and spawning period of S. brasiliensis were investigated using both macroscopic and histological techniques. Mean monthly values of GSI and ovarian maturation indicate that the main spawning period occurs during the rainy season. Keywords: Serra Spanish mackerel, spawning period, ovarian development, histology, GSI. Desenvolvimento ovariano e desova da Serra nas águas costeiras do Nordeste do Brasil Resumo A Serra, Scomberomorus brasiliensis, é uma espécie marinha de valor comercial do oceano Atlântico sendo um maior componente de pesca artesanal no norte e nordeste brasileiros. Desenvolvimento ovariano e época de desova de S. brasiliensis foram investigados utilizando-se técnicas macroscópicas e histológicas. Valores mensais médios de IGS e maturação gonadal indicam que a época de desova ocorre durante o período de chuva. Palavras-chave: Serra, época de desova, desenvolvimento ovariano, histologia, IGS. -

Scombridae.Pdf

1836 Bony Fishes. SCOMBRIDAE: Mackerels and tunas, by B.B. Collette, National Marine Fisheries Service, National Museum of Natural History, Washington D.C., USA. Diagnostic characters: Medium to large-sized (to 3 m) with elongate and fusiform body, moderately compressed in some genera. Snout pointed; adipose eyelid sometimes present (Scomber); premaxillae beak-like, free from nasal bones which are separated by ethmoid bone; mouth moderately large; teeth in jaws strong, moderate, or weak; no true canines; palate and tongue may have teeth. Two dorsal fins; anterior fin usually short and separated from posterior fin; 5 to 10 finlets present behind dorsal and anal fins; caudal fin deeply forked with supporting caudal rays completely covering hypural plate; pectoral fins placed high; pelvic fins moderate or small. At least 2 small keels on each side of caudal peduncle, a larger keel in between in many species. Lateral line simple. Vertebrae 31 to 66. Body either uniformly covered with small to moderate scales (e.g. Scomber, Scomberomorus) or a corselet developed (area behind head and around pectoral fins covered with moderately large, thick scales) and rest of body naked (Auxis, Euthynnus, Katsuwonus), or covered with small scales (Thunnus). Colour: Scomber species are usually bluish or greenish above with a pattern of wavy bands on upper sides and silvery below; Scomberomorus and Acanthocybium are blue-grey above and silvery below with dark vertical bars or spots on sides. Sarda has 5 to 11 stripes on back; Euthynnus has a striped pattern on back and several dark spots between pectoral and pelvic fins; Katsuwonus has 4 to 6 conspicuous longitudinal stripes on belly; Auxis and Thunnus are deep blue-black above; most species of Thunnus have bright yellow finlets with black borders. -

Publications Office

16.7.2021 EN Official Journal of the European Union L 253/51 DECISIONS COMMISSION DELEGATED DECISION (EU) 2021/1167 of 27 April 2021 establishing the multiannual Union programme for the collection and management of biological, environmental, technical and socioeconomic data in the fisheries and aquaculture sectors from 2022 THE EUROPEAN COMMISSION, Having regard to the Treaty on the Functioning of the European Union, Having regard to Regulation (EU) 2017/1004 of the European Parliament and of the Council of 17 May 2017 on the establishment of a Union framework for the collection, management and use of data in the fisheries sector and support for scientific advice regarding the common fisheries policy and repealing Council Regulation (EC) No 199/2008 (1), and in particular the first and second subparagraphs of Article 4(1) thereof, Whereas: (1) Article 25 of Regulation (EU) No 1380/2013 of the European Parliament and of the Council (2) requires the Member States to collect the biological, environmental, technical and socioeconomic data that are necessary for fisheries management. (2) Article 4(1) of Regulation (EU) 2017/1004 requires the Commission to establish a multiannual Union programme for the collection, management and use of data in the fisheries sector (EU MAP). (3) The EU MAP is necessary for Member States to specify and plan their data collection activities in their national work plans and sets out a detailed list of data requirements for the collection and management of biological, environmental and socioeconomic data, lists mandatory surveys at sea and sets thresholds for data collection. The EU MAP for 2020-2021 was adopted by Commission Delegated Decision (EU) 2019/910 (3) and Commission Implementing Decision (EU) 2019/909 (4). -

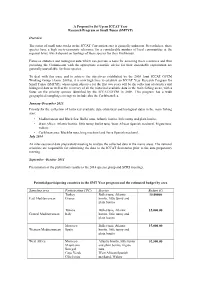

A Proposal to Set up an ICCAT Year Research Program For Small Tunas (SMTYP) Overview the Status of Small Tuna Stocks in the ICCAT

A Proposal to Set Up an ICCAT Year Research Program for Small Tunas (SMTYP). Overview: The status of small tuna stocks in the ICCAT Convention area is generally unknown. Nevertheless, these species have a high socio-economic relevance for a considerable number of local communities at the regional level, which depend on landings of these species for their livelihoods. Fisheries statistics and biological data which can provide a basis for assessing these resources and thus providing the Commission with the appropriate scientific advice for their sustainable exploitation are generally unavailable for these species. To deal with this issue and to achieve the objectives established by the 2008 Joint ICCAT GFCM Working Group (Anon. 2009a), it is now high time to establish an ICCAT Year Research Program for Small Tunas (SMTYP), whose main objective for the first two years will be the collection of statistics and biological data as well as the recovery of all the historical available data in the main fishing areas, with a focus on the priority species identified by the ICCAT/GFCM in 2008. This program has a wide geographical sampling coverage to include also the Caribbean Sea. January-December 2013: Priority for the collection of historical available data (statistical and biological data) in the main fishing areas: • Mediterranean and Black Sea: Bullet tuna, Atlantic bonito, little tunny and plain bonito; • West Africa: Atlantic bonito, little tunny, bullet tuna, West African Spanish mackerel, frigate tuna, wahoo; • Caribbean area: Blackfin tuna, king mackerel and Serra Spanish mackerel. July 2014: An inter-sessional data preparatory meeting to analyse the collected data in the main areas. -

List of Bycatch Species

APPENDIX 5_1: UPDATED BY-CATCH SPECIES Provisional consolidated list of species. It includes the 27 new Alfa-3 codes (shaded yellow) added by FAO to the ASFIS database. Scientific names shaded “green” indicate a choice of one of 2 scientific names available (criteria: adopt ASFIS or most recent knowledge of the small WG)./Liste des espèces consolidée provisoire. Elle inclut les 27 nouveaux codes Alfa-3 (ombrés en jaune) ajoutés par la FAO à la base de données d’ASFIS. Les noms scientifiques ombrés en « vert » indiquent qu’un des deux noms scientifique disponibles a été choisi (critères : adopter ASFIS ou les connaissances les plus récentes du Groupe de travail réduit)./Lista de especies provisional consolidada. Incluye los 27 nuevos códigos de 3 letras (sombreados en amarillo) añadidos por la FAO a la base de datos de ASFIS. Los nombres científicos sombreados en “VERDE” indican que se ha elegido uno de los dos nombres científicos disponibles (criterios: adoptar ASFIS o los conocimientos más recientes del pequeño Grupo de trabajo). NOTES: 1- Species pending from entering in the ICCAT list/ Espèces en attente de figurer dans la liste ICCAT/Especies pendientes de entrar en la lista de ICCAT: Chlamydoselachus africana (African frilled shark); Somniosus antarcticus (Southern sleeper shark) 2- Shaded cells in field "scientific name" indicates differences between ICCAT and FAO / Les cellules ombrées dans la rubrique « nom scientifique » indiquent les différences entre l’ICCAT et la FAO/Cuando en las celdas aparece sombreado el campo “nombre