Vehicle Color Recognition Using Convolutional Neural Network

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

FDA Visual Identity Guidelines September 27, 2016 Introduction: FDA, ITS VISUAL IDENTITY, and THIS STYLE GUIDE

FDA Visual Identity Guidelines September 27, 2016 Introduction: FDA, ITS VISUAL IDENTITY, AND THIS STYLE GUIDE The world in which the U.S. Food and Drug Administration (FDA) operates today is one of growing complexity, new challenges, and increased risks. Thanks to revolutionary advances in science, medicine, and technology, we have enormous opportunities that we can leverage to meet many of these challenges and ultimately benefit the public health. As a public health and regulatory agency that makes its decisions based on the best available science, while maintaining its far-reaching mission to protect and promote the public health, FDA is uniquely prepared and positioned to anticipate and successfully meet these challenges. Intrinsically tied to this is the agency’s crucial ability to provide the public with clear, concise and accurate information on a wide range of important scientific, medical, regulatory, and public health matters. Therefore, the agency embarked on a comprehensive examination of FDA’s communication materials, including an analysis of the FDA’s mission and key audiences, in order to establish a more unified communications program using consistent and more cost-effective pathways for creating and disseminating information in a recognizable format. This has resulted in what you see here today: a standard and uniform Visual Identity system. This new Visual Identity program will improve the effectiveness of the FDA’s communication by making it much easier to identify the FDA, an internationally recognized, trusted, and credible agency, as the source of the information being communicated. The modern and accessible design will be used to inspire how we look, how we speak, and what we say to the people we impact most. -

Copyrighted Material

INDEX A Bertsch, Fred, 16 Caslon Italic, 86 accents, 224 Best, Mark, 87 Caslon Openface, 68 Adobe Bickham Script Pro, 30, 208 Betz, Jennifer, 292 Cassandre, A. M., 87 Adobe Caslon Pro, 40 Bézier curve, 281 Cassidy, Brian, 268, 279 Adobe InDesign software, 116, 128, 130, 163, Bible, 6–7 casual scripts typeface design, 44 168, 173, 175, 182, 188, 190, 195, 218 Bickham Script Pro, 43 cave drawing, type development, 3–4 Adobe Minion Pro, 195 Bilardello, Robin, 122 Caxton, 110 Adobe Systems, 20, 29 Binner Gothic, 92 centered type alignment Adobe Text Composer, 173 Birch, 95 formatting, 114–15, 116 Adobe Wood Type Ornaments, 229 bitmapped (screen) fonts, 28–29 horizontal alignment, 168–69 AIDS awareness, 79 Black, Kathleen, 233 Century, 189 Akuin, Vincent, 157 black letter typeface design, 45 Chan, Derek, 132 Alexander Isley, Inc., 138 Black Sabbath, 96 Chantry, Art, 84, 121, 140, 148 Alfon, 71 Blake, Marty, 90, 92, 95, 140, 204 character, glyph compared, 49 alignment block type project, 62–63 character parts, typeface design, 38–39 fine-tuning, 167–71 Blok Design, 141 character relationships, kerning, spacing formatting, 114–23 Bodoni, 95, 99 considerations, 187–89 alternate characters, refinement, 208 Bodoni, Giambattista, 14, 15 Charlemagne, 206 American Type Founders (ATF), 16 boldface, hierarchy and emphasis technique, China, type development, 5 Amnesty International, 246 143 Cholla typeface family, 122 A N D, 150, 225 boustrophedon, Greek alphabet, 5 circle P (sound recording copyright And Atelier, 139 bowl symbol), 223 angled brackets, -

"B" Wing Renovations

SCC - Jack A. Powers Building “B” Wing Renovation OSE # H59-6148-JM-B SPARTANBURG, SC SPARTANBURG COMMUNITY COLLEGE BID REVIEW 04.12.2021 MPS PROJECT #020041.00 2020 Edition TABLE OF CONTENTS PROJECT NAME: SCC Jack A. Powers Building "B" Wing Renovation PROJECT NUMBER: H59-6148-JM-B SECTION (NUMBER OF PAGES): Table of Contents (2); SE-310, Invitation for Design-Bid-Build Construction Services (1); AIA Document A701 Instructions to Bidders, South Carolina Division of Procurement Services, Office of State Engineer Version (13); Bid Bond (AIA A310 or reference) (1); SE-330, Lump Sum Bid Form (6); AIA Document A101 Standard Form of Agreement between Owner and Contractor, South Carolina Division of Procurement Services, Office of State Engineer Version (9); AIA Document A201 General Conditions of the Contract for Construction, South Carolina Division of Procurement Services, Office of State Engineer Version (49); G702-1992 Application & Certification for Payment - Draft (1); G703-1992 Continuation Sheet - Draft (1) -

General Assembly Presents: Visual Design Hacking Mini-Bootcamp

General Assembly Presents: Visual Design Hacking Mini-Bootcamp Design Principles: Layout Graphic Type Color Layout Graphic Typography Typefaces Everlane Facebook Color Color meaning Swarm Gilt Case studies Pinterest Dorkfood AirBnB Resources Design Principles: Layout Graphic Type Color Layout Contrast, alignment, repetition, proximity Hierarchy - up down, left right, size Pre-made grids help with alignment - e.g. Sketch (good for UI Design) Grid, hierarchy, repetition, contrast, proximity, alignment Graphic Lines - pretty much about lines when grid - lines impact design hugely Shapes too - buttons ... Textures - faded background, blur look Icons Photography and illustration - visual interest for design. Stock photos a lot better - unsplash.com, depth of stock photo, ... Typography Graphic design in itself Font vs typeface - typeface the archetype, font - the kinds, e.g. regular, medium, bold, ... Helvetica Neue - online version of Helvetica Proxima Nova, Open Sans, Avenir, Lato good too Futura, Tahoma, Myriad, Arial a bit boxy but good online type connection - http://www.typeconnection.com/ Typefaces White/black or Grey - contrast Slight grey or off-white may be less stark and easier on the eye fontsquirrel - free fonts fontfox - game to guess typefaces Everlane Example - less copy on mobile - everlane custom font like blair mtv - a sans serif as logo type and a serif as body but works well Facebook Only Helvetica Neue - keep it simple, focus on content not type With font, use same family, weights, opacity ... on site Color Color theory - combining colors that work well together on the color wheel Complementary colors are opposite on the color wheel Analogous colors - close Adobe Kuler https://color.adobe.com/create/color-wheel/ Use RGB when seeing online, emails - CMYK better for print Color meaning look into colors and how perceived in different cultures warm colors, cool colors, red strong, more of an accent color - subconscious effect red - anger, passion, love, .. -

Design & Brand Guidelines

Design & Brand Guidelines VERSION 3.0 | NOVEMBER 2017 We Mean Business Brand Guidelines 2 // 18 Introduction As with many organizations with a worldwide audience, multiple contributors from around the globe provide information for the online and print communications of We Mean Business. This guide serves as a resource for contributors, to ensure that the multiple voices of coalition representatives communicate with visual cohesion. THE DESIGN GUIDELINES The following pages describe the visual elements that represent the We Mean Business brand identity. This includes our name, logo and other elements such as color, type graphics and imagery. Sending a consistent, visual message of who we are is essential to presenting a strong, unified coalition. CONTACT Address: 1201 Connecticut Ave., NW, Suite 300, Washington, DC 20036 Phone & Email: Email 1: [email protected] Email 2: [email protected] Online: Website: www.wemeanbusinesscoalition.org Twitter: www.twitter.com/WMBtweets | #wemeanit LinkedIn: www.linkedin.com/company/wemeanbusiness YouTube: https://www.youtube.com/channel/UCZj1URWtaVRN83-HRZbcIAg WE MEAN BUSINESS Table of Contents SECTION 1 | LOGO MARK AND PLACEMENT SECTION 2 | TYPOGRAPHY AND TEXT HIERARCHY SECTION 3 | COLOR SYSTEM SECTION 4 | IMAGERY & GRAPHICS SECTION 5 | SUMMARY AND CONTACT 01 Logo Mark and placement Our logo is the key building block of our identity, the primary visual element that identifies us. The logo mark is a combination of the green triangle symbol and our company name – they have a fixed relationship that should never be changed in any way. LOGO MARK The We Mean Business logo comprises two elements, the logo symbol and logo typeface. 1) The Logo Symbol The green triangle pointing up suggests -

Final Request for Proposals Design-Build Project Tip I

-- STATE OF NORTH CAROLINA-- DEPARTMENT OF TRANSPORTATION RALEIGH, N.C. FINAL REQUEST FOR PROPOSALS DESIGN-BUILD PROJECT TIP I-5507 / R-0211EC / U-4714AB May 7, 2018 VOID FOR BIDDING DATE AND TIME OF TECHNICAL AND PRICE PROPOSAL SUBMISSION: July 25, 2018 BY 4:00 PM DATE AND TIME OF PRICE PROPOSAL OPENING: August 21, 2018 AT 2:00 PM CONTRACT ID: C203970 WBS ELEMENT NO. 43609.3.2 FEDERAL-AID NO. N/A COUNTY: Mecklenburg ROUTE NO. I-485 (Charlotte Outer Loop) MILES: 16.6 LOCATION: I-485 from I-77 to US 74 (Independence Boulevard); I-485 / Weddington Road Interchange; and I-485 / East John Street – Old Monroe Road Interchange TYPE OF WORK: DESIGN-BUILD AS SPECIFIED IN THE SCOPE OF WORK CONTAINED IN THE REQUEST FOR PROPOSALS NOTICE: ALL PROPOSERS SHALL COMPLY WITH ALL APPLICABLE LAWS REGULATING THE PRACTICE OF GENERAL CONTRACTING AS CONTAINED IN CHAPTER 87 OF THE GENERAL STATUTES OF NORTH CAROLINA WHICH REQUIRES THE PROPOSER TO BE LICENSED BY THE N.C. LICENSING BOARD FOR CONTRACTORS WHEN BIDDING ON ANY NON-FEDERAL AID PROJECT WHERE THE BID IS $30,000 OR MORE, EXCEPT FOR CERTAIN SPECIALTY WORK AS DETERMINED BY THE LICENSING BOARD. PROPOSERS SHALL ALSO COMPLY WITH ALL OTHER APPLICABLE LAWS REGULATING THE PRACTICES OF ELECTRICAL, PLUMBING, HEATING AND AIR CONDITIONING AND REFRIGERATION CONTRACTING AS CONTAINED IN CHAPTER 87 OF THE GENERAL STATUTES OF NORTH CAROLINA. NOTWITHSTANDING THESE LIMITATIONS ON BIDDING, THE PROPOSER WHO IS AWARDED ANY PROJECT SHALL COMPLY WITH CHAPTER 87 OF THE GENERAL STATUTES OF NORTH CAROLINA FOR LICENSING REQUIREMENTS WITHIN 60 CALENDAR DAYS OF BID OPENING, REGARDLESS OF FUNDING SOURCES. -

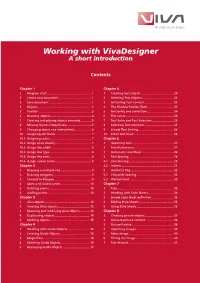

Working with Vivadesigner a Short Introduction

WE PUBLISH THE WORLD Working with VivaDesigner A short introduction Contents Chapter 1: 1 Program start; 2 Create new document; 3 Save document; 4 Objects; 5 Toolbar; 6 Drawing objects; 7 Creating and placing objects precisely; 8 Moving objects interactively; 9 Changing object size interactively; 10 Assigning attributes; 10.1 Assigning colors; 10.2 Assign color density. Chapter 5: 1 Creating Text objects; 2 Selecting Text Objects; 3 Activating Text Content; 4 The Module Palette (Text); 5 Text entry and corrections; 6 The cursor; 7 Text Entry and Text Selection; 8 Selecting Text elements; 9 Simple Text Linking; 10 Break text chain. Chapter 6 -

A Peek Inside the Tent Overview

A Peek Inside the Tent Overview All about you Cost and Resources Overview of The Circus Recommendation Career Services Admissions Requirements Program Curriculum The Tour Our Mission The mission of The Creative Circus is to graduate the best prepared, most avidly sought after creatives in the industry. The 411 2 Year Certificate Program 210+ Students Founded in 1995 Fully Accredited by C.O.E. Bridge Gap Between Education and Industry Location/Environment What Employers Say How We’re Different Learn By Doing Integrated, Collaborative Programs Individual Attention/Class Size Student Competitions 7 Full-Time Dedicated Faculty Members Scheduling/Homework Part-Time Industry Pros The ‘Nice School’ Career Services Placement Statistics Graduates and Completers working in their field of study within six months of graduation: Forums Program 09-10 10-11 11-12 Mentors Art Direction 96% 100% 96% Portfolio Reviews Design 88.57% 100% 90% Copywriting 96.55% 100% 98% Local and National Networking Image 91.67% 100% 88% Interactive Development N/A N/A 100% Interactive Design N/A N/A N/A Average 93.07% 100% 94.53% Creative Circus Portfolio Review Industry Salaries 2013 The Creative Group 2013 Salary Guide INTERACTIVE STARTING SALARIES CREATIVE & PRODUCTION STARTING SALARIES TITLE LOW HIGH TITLE LOW HIGH Information Architect $80,500 $120,750 Copywriter (1 to 3yrs) $40,000 $55,000 User Experience Designer $55,000 $110,000 Copywriter (3 to 5yrs) $56,500 $73,250 Junior Interactive Designer $40,000 $55,000 Copywriter (5+ yrs) $72,750 $102,750 Senior Interactive Designer -

HIS20-04 Decision February 26, 2020 Page 2

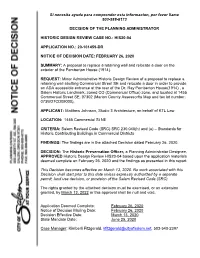

If you need help understanding this information, please call 503-588-6173 DECISION OF THE PLANNING ADMINISTRATOR HISTORIC DESIGN REVIEW CASE NO.: HIS20-04 APPLICATION NO.: 20-101459-DR NOTICE OF DECISION DATE: FEBRUARY 26, 2020 SUMMARY: A proposal to replace a retaining wall and relocate a door on the exterior of the Pemberton House (1914). REQUEST: Minor Administrative Historic Design Review of a proposal to replace a retaining wall abutting Commercial Street SE and relocate a door in order to provide an ADA accessible entrance at the rear of the Dr. Ray Pemberton House (1914), a Salem Historic Landmark, zoned CO (Commercial Office) zone, and located at 1455 Commercial Street SE, 97302 (Marion County Assessor's Map and tax lot number: 073W27CD09000). APPLICANT: Matthew Johnson, Studio 3 Architecture, on behalf of KTL Law LOCATION: 1455 Commercial St NE CRITERIA: Salem Revised Code (SRC) SRC 230.040(h) and (a) – Standards for Historic Contributing Buildings in Commercial Districts FINDINGS: The findings are in the attached Decision dated February 26, 2020. DECISION: The Historic Preservation Officer, a Planning Administrator Designee, APPROVED Historic Design Review HIS20-04 based upon the application materials deemed complete on February 26, 2020 and the findings as presented in this report. This Decision becomes effective on March 13, 2020. No work associated with this Decision shall start prior to this date unless expressly authorized by a separate permit, land use decision, or provision of the Salem Revised Code (SRC). The rights granted by the attached decision must be exercised, or an extension granted, by March 13, 2022 or this approval shall be null and void. -

Project Manual Idaho Transportation Department Coeur D'alene Office

Project Manual Idaho Transportation Department Coeur d’Alene Office/Conference Room Remodel Set No. ______ PROJECT MANUAL Idaho Transportation Department Coeur d’Alene Office/Conference Room Remodel OWNER: Idaho Transportation Department 600 W. Prairie Avenue Coeur d’Alene, ID 83815 ARCHITECT: Architects West, Inc. 210 E. Lakeside Avenue Coeur d'Alene, ID 83814 Ph (208) 667-9402; Fx (208) 667-6103 Structural Engineer: BC Engineers 11917 N. Warren St. Hayden, ID 83835 Ph (208) 772-8424; Fx (208) 772-8278 Mechanical Engineer: JTL Engineering 9116 E. Sprague Ave., # 457 Spokane Valley, WA 99206 Ph (509) 413-7997; Fx (509) 220-8292 Electrical Engineer: Trindera 1875 N. Lakewood Drive, Ste. 201 Coeur d’Alene, ID 83814 Ph (208) 676-8001; Ph (208) 676-0100 AW #1858 February 15, 2019 SECTION 000100 - TABLE OF CONTENTS BOILER PLATE FRONTAL SPECIFICATION DIVISION 1 -- GENERAL REQUIREMENTS 011000 – SUMMARY 012300 – ALTERNATES 012500 – SUBSTITUTION PROCEDURES 012600 – CONTRACT MODIFICATION PROCEDURES 012900 – PAYMENT PROCEDURES 013100 – PROJECT MANAGEMENT AND COORDINATION 013200 – CONSTRUCTION PROGRESS DOCUMENTATION 013300 – SUBMITTAL PROCEDURES 015000 – TEMPORARY FACILITIES AND CONTROLS 016000 – PRODUCT REQUIREMENTS 017300 – EXECUTION 017329 – CUTTING AND PATCHING 017700 – CLOSEOUT PROCEDURES 017823 – OPERATION AND MAINTENANCE DATA 017839 – PROJECT RECORD DOCUMENTS DIVISION 2 – EXISTING CONDITIONS 024119 – SELECTIVE DEMOLITION DIVISION 5 -- METALS 053100 – STEEL DECKING 055000 – METAL FABRICATIONS DIVISION 7 -- THERMAL AND MOISTURE PROTECTION 072100 -

GRAPHIC DESIGN COURSES HTML, CSS, and an Introduction to Javascript and Other Relevant Technologies

COURSES GRAPHIC DESIGN COURSES GDS 011 The History of Modern Design (3.0 Lecture) 3.0 UNITS This introductory survey course focuses on the history, perception and development of design during the Twentieth Century. The students will develop an understanding of the evolution and role of the Modern Movement and how it affects society. The students will also learn about the evaluation criteria of two-dimensional and three dimensional design while examining examples of architecture, industrial, graphic, fashion and interior design. The students will be introduced to influential Twentieth Century design figures and their work. GDS 012 History of Photography (3.0 Lecture) 3.0 UNITS This course surveys the history of photography from its origins to the present. Students examine the practice of photography as an art form and as a form of visual communication in historical, socio-political and cultural contexts. COURSES HTML, CSS, and an introduction to JavaScript and other relevant technologies. A basic understanding of computer system operation is required. GDS 047 Web Animation (2.0 Lecture/1.0 Lab) 3.0 UNITS Advisory: GDS 045 This introductory course focuses on the skills required to create effective web animations using a variety of software applications. Principles of animation, visual communication, user interface design and web optimization are explored. The student develops an understanding of the role of animation on the internet in a series of hands-on exercises. A basic understanding of computer systems is assumed. GDS 049 Wordpress and Content Management System (2.0 Lecture/1.0 Lab) 3.0 UNITS Advisory: GDS 046 In this advanced web design and development class, students use WordPress to build dynamic websites that can be updated easily. Students are also introduced to PHP & MySQL, theme customization, child -

Implementing Interpreters

One-Slide Summary • Object-Oriented Programming encapsulates state and methods together into objects. This hides implementation details (cf. inheritance) while allowing methods to operate on many types of input. • Python is a universal, imperative, object-oriented language. Scheme is a universal, functional language with imperative features. • Building an interpreter is a fundamental idea in computing. Eval and Apply are mutually recursive. • We can write a Python program that is an interpreter for Scheme programs. #2 Outline • Object Lessons • Ali G • Interpreters • Eval • Apply #3 Who was the first object-oriented programmer? #4 Implementing Interpreters By the word operation, we mean any process which alters the mutual relation of two or more things, be this relation of what kind it may. This is the most general definition, and would include all subjects in the universe. Again, it might act upon other things besides number, were objects found whose mutual fundamental relations could be expressed by those of the abstract science of operations, and which should be also susceptible of adaptations to the action of the operating notation and mechanism of the engine... Supposing, for instance, that the fundamental relations of pitched sounds in the science of harmony and of musical composition were susceptible of such expression and adaptations, the engine might compose elaborate and scientific pieces of music of any degree of complexity or extent. Ada, Countess of Lovelace, around 1843 #5 Learn Languages: Expand Minds Languages change the way we think. The more languages you know, the more different ways you have of thinking about (and solving) problems.