Retreat on Core Competencies: Quantitative Reasoning and Assessment in Majors

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Supporting Assessment in Undergraduate Mathematics

Supporting Assessment in Undergraduate Mathematics. This volume is based upon work supported by the National Science Foundation (NSF) under Grant No. DUE-0127694. Any opinions, findings, and conclusions or recommendations expressed are those of the authors and do not necessarily reflect the views of the NSF. Copyright ©2006 by The Mathematical Association of America (Incorporated). Library of Congress Catalog Card Number 2005936440. ISBN 0-88385-820-7. Printed in the United States of America. Current printing (last digit): 10 9 8 7 6 5 4 3 2 1. Editor: Lynn Arthur Steen. Case Studies Editors: Bonnie Gold, Laurie Hopkins, Dick Jardine, William A. Marion. Bernard L. Madison, Project Director; William E. Haver, Workshops Director; Peter Ewell, Project Evaluator; Thomas Rishel, Principal Investigator (2001–02); Michael Pearson, Principal Investigator (2002–05). Published and Distributed by The Mathematical Association of America. Contents. Introduction: Tensions and Tethers: Assessing Learning in Undergraduate Mathematics (Bernard L. Madison, University of Arkansas, Fayetteville); Asking the Right Questions (Lynn Arthur Steen, St. Olaf College); Assessing Assessment: The SAUM Evaluator’s Perspective (Peter Ewell, National Center for Higher Education Management Systems (NCHEMS)). Case Studies: Developmental, Quantitative Literacy, and Precalculus Programs: Assessment of Developmental, Quantitative Literacy, and Precalculus Programs (Bonnie Gold, Monmouth University); Assessing Introductory Calculus and Precalculus Courses (Ronald Harrell & Tamara Lakins, Allegheny College); Mathematics Assessment in the First Two Years (Erica Johnson, Jeffrey Berg, & David Heddens, Arapahoe Community College); Using Assessment to Troubleshoot and Improve Developmental Mathematics (Roy Cavanaugh, Brian Karasek, & Daniel Russow, Arizona Western College); Questions about College Algebra (Tim Warkentin & Mark Whisler, Cloud County Community College).

FOCUS 8 3.Pdf

Volume 8, Number 3. The Newsletter of the Mathematical Association of America. May-June 1988. More Voters and More Votes for MAA Officers Under Approval Voting: Over 4000 MAA members participated in the 1987 Association-wide election, up from about 3000 voters in the elections two years ago. Lida K. Barrett was elected President Elect and Alan C. Tucker was voted in as First Vice-President; Warren Page was elected Second Vice-President. Lida Barrett will become President, succeeding Leonard Gillman, at the end of the MAA annual meeting in January of 1989. See the article below for an analysis of the working of approval voting in this election. MAA Elections Produce Decisive Winners, Steven J. … Mathematics and the Public, Leonard Gillman: Is it possible to enhance the public's appreciation of mathematics and mathematicians? Science writers are helping us by turning out some first-rate news stories; but what about mathematics that is not in the news? Can we get across an idea here, a fact there, and a tiny notion of what mathematics is about and what it is for? Is it reasonable even to try? Yes. Herewith are some thoughts on how to proceed. LOUSY SALESPEOPLE: Let us all become ambassadors of helpful information and good will. The effort will be unglamorous (but inexpensive) and results will come slowly. So far, we are lousy salespeople. Most of us teach and think we are pretty good at it. But when confronted by nonmathematicians, we abandon our profession and become aloof. We brush off questions, rejecting the opportunity

Achieving Quantitative Literacy

Achieving Quantitative Literacy: An Urgent Challenge for Higher Education. Copyright ©2004 by The Mathematical Association of America (Incorporated). Library of Congress Catalog Card Number 2003115950. ISBN 0-88385-816-9. Printed in the United States of America. Current printing (last digit): 10 9 8 7 6 5 4 3 2 1. Lynn Arthur Steen. Published and Distributed by The Mathematical Association of America. National Forum, Quantitative Literacy: Why Numeracy Matters for Schools and Colleges. Steering Committee: Richelle (Rikki) Blair, Professor of Mathematics, Lakeland Community College, Kirtland, Ohio; Peter Ewell, Senior Associate, National Center for Higher Education Management Systems; Daniel L. Goroff, Professor of the Practice of Mathematics, Harvard University; Ronald J. Henry, Provost and Vice President for Academic Affairs, Georgia State University; Deborah Hughes Hallett, Professor of Mathematics, University of Arizona; Jeanne L. Narum, Director of the Independent Colleges Office of Project Kaleidoscope; Richard L. Scheaffer, Professor Emeritus of Statistics, University of Florida; Janis Somerville, Senior Associate, National Association of System Heads. Forum Organizers: SUSAN L. GANTER, Associate Professor of Mathematical Sciences, Clemson University; BERNARD L. MADISON, Visiting Mathematician, Mathematical Association of America; ROBERT ORRILL, Executive Director, National Council on Education and the Disciplines (NCED); LYNN ARTHUR STEEN, Professor of Mathematics, St. Olaf College, Northfield, MN. The MAA Notes Series, started in 1982, addresses a broad range of topics and themes of interest to all who are involved with undergraduate mathematics.

Quantitative Literacy: Why Numeracy Matters for Schools and Colleges, Held at the National Academy of Sciences in Washington, D.C., on December 1–2, 2001

NATIONAL COUNCIL ON EDUCATION AND THE DISCIPLINES The goal of the National Council on Education and the Disciplines (NCED) is to advance a vision that will unify and guide efforts to strengthen K-16 education in the United States. In pursuing this aim, NCED especially focuses on the continuity and quality of learning in the later years of high school and the early years of college. From its home at The Woodrow Wilson National Fellowship Foundation, NCED draws on the energy and expertise of scholars and educators in the disciplines to address the school-college continuum. At the heart of its work is a national reexamination of the core literacies (quantitative, scientific, historical, and communicative) that are essential to the coherent, forward-looking education all students deserve. Copyright © 2003 by The National Council on Education and the Disciplines. All rights reserved. International Standard Book Number: 0-9709547-1-9. Printed in the United States of America. Foreword, ROBERT ORRILL. “Quantitative literacy, in my view, means knowing how to reason and how to think, and it is all but absent from our curricula today.” Gina Kolata (1997) Increasingly, numbers do our thinking for us. They tell us which medication to take, what policy to support, and why one course of action is better than another. These days any proposal put forward without numbers is a nonstarter. Theodore Porter does not exaggerate when he writes: “By now numbers surround us. No important aspect of life is beyond their reach” (Porter, 1997). Numbers, of course, have long been important in the management of life, but they have never been so ubiquitous as they are now.

View the Viking Update

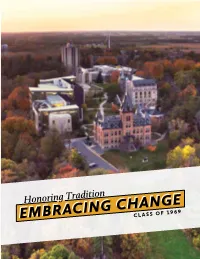

Honoring Tradition, Embracing Change. St. Olaf College Class of 1969 presents The Viking Update in celebration of its 50th Reunion, May 31 – June 2, 2019. Autobiographies and Remembrances of the Class. stolaf.edu, 1520 St. Olaf Avenue, Northfield, MN 55057. Advancement Division 800-776-6523. Student Editors: Joshua Qualls ’19, Kassidy Korbitz ’22, Matthew Borque ’19. Student Designer: Philip Shady ’20. Consulting Editor: David Wee ’61, Professor Emeritus of English. 50th Reunion Staff Members: Ellen Draeger Cattadoris ’07, Cheri Floren, Michael Kratage-Dixon, Brad Hoff ’89. Printing: Park Printing Inc., Minneapolis, MN. Welcome to the Viking Update! Your 50th Reunion committee produced this commemorative yearbook in collaboration with students, faculty members, and staff at St. Olaf College. The Viking Update is the college’s gift to the Class of 1969 in honor of this milestone year. The yearbook is divided into three sections: Section I: Class Lists. In the first section, you will find a complete list of everyone who submitted a bio and photo for the Viking Update. The list is alphabetized by last name while at St. Olaf. It also includes the classmate’s current name so you can find them in the Autobiographies and Photos section, which is alphabetized by current last name. Also included in the class lists section: Our Other Classmates: A list of all living classmates who did not submit a bio and photo for the Viking Update. In Memoriam: A list of deceased classmates, whose bios and photos can be found in the third and final section of the Viking Update. Section II: Autobiographies and Photos. Autobiographies and photos submitted by our classmates are alphabetized by current last name.

Assessment Practices in Undergraduate Mathematics

Assessment Practices in Undergraduate Mathematics. ©1999 by The Mathematical Association of America. ISBN 0-88385-161-X. Library of Congress Catalog Number 99-63047. Printed in the United States of America. Current Printing 10 9 8 7 6 5 4 3 2. MAA NOTES NUMBER 49. Editors: BONNIE GOLD, SANDRA Z. KEITH, WILLIAM A. MARION. Published by The Mathematical Association of America. The MAA Notes Series, started in 1982, addresses a broad range of topics and themes of interest to all who are involved with undergraduate mathematics. The volumes in this series are readable, informative, and useful, and help the mathematical community keep up with developments of importance to mathematics. Editorial Board: Tina Straley, Editor; Nancy Baxter-Hastings; Sylvia T. Bozeman; Donald W. Bushaw; Sheldon P. Gordon; Stephen B. Maurer; Roger B. Nelsen; Mary R. Parker; Barbara E. Reynolds (Sr); Donald G. Saari; Anita E. Solow; Philip D. Straffin. MAA Notes: 11. Keys to Improved Instruction by Teaching Assistants and Part-Time Instructors, Committee on Teaching Assistants and Part-Time Instructors, Bettye Anne Case, Editor. 13. Reshaping College Mathematics, Committee on the Undergraduate Program in Mathematics, Lynn A. Steen, Editor. 14. Mathematical Writing, by Donald E. Knuth, Tracy Larrabee, and Paul M. Roberts. 16. Using Writing to Teach Mathematics, Andrew Sterrett, Editor. 17. Priming the Calculus Pump: Innovations and Resources, Committee on Calculus Reform and the First Two Years, a subcommittee of the Committee on the Undergraduate Program in Mathematics, Thomas W. Tucker, Editor. 18. Models for Undergraduate Research in Mathematics, Lester Senechal, Editor.

St. Olaf College

Emeritus faculty member Lynn Steen dies (St. Olaf College) http://wp.stolaf.edu/blog/emeritus-faculty-member-lynn-steen-dies/ June 23, 2015. St. Olaf College Professor Emeritus of Mathematics Lynn Steen, who spent more than four decades making mathematics accessible to all students and shaping the way teachers approach the discipline, died June 21. Steen was born in Chicago and grew up on Staten Island, New York. In 1965, four years after graduating from Luther College, Steen completed a Ph.D. in mathematics at the Massachusetts Institute of Technology and joined the St. Olaf faculty. Early in his career, Steen focused on teaching and developing research experiences for undergraduates. One result was the widely used reference book Counterexamples in Topology, co-edited with J. Arthur Seebach Jr. and partly authored by St. Olaf students. Another was a gradual change in mathematics at St. Olaf from a narrow discipline for the few to an inviting major of value to any liberal arts graduate. By broadening the major and focusing student work on inquiry and investigation, Steen and his departmental colleagues grew mathematics into one of the top five majors at the college, and one of the nation’s largest undergraduate producers of Ph.D.s in the mathematical sciences. As his teaching led Steen to investigate links between mathematics and other fields, he began writing about new developments in mathematics for audiences of non-mathematicians. Many of his articles appeared in the weekly magazine Science News and in annual supplements to the Encyclopedia Britannica, and he penned a groundbreaking report for the National Research Council on the challenges facing mathematics education in the United States.

Journal of Mathematics Education at Teachers College

Journal of Mathematics Education at Teachers College, Leadership Issue, Spring–Summer 2013, Volume 4. A Century of Leadership in Mathematics and its Teaching. © Copyright 2013 by the Program in Mathematics and Education, Teachers College, Columbia University in the City of New York. TABLE OF CONTENTS. Preface: Mathematics Education Leadership: Examples From the Past, Direction for the Future (Christopher J. Huson). Articles: Leading People: Leadership in Mathematics Education (Jeremy Kilpatrick, University of Georgia); Promoting Leadership in Doctoral Programs in Mathematics Education (Robert Reys, University of Missouri); The Role of Ethnomathematics in Curricular Leadership in Mathematics Education (Ubiratan D’Ambrosio, University of Campinas, and Beatriz Silva D’Ambrosio, Miami University); Distributed Leadership: Key to Improving Primary Students’ Mathematical Knowledge (Matthew R. Larson, Lincoln Public Schools, Nebraska, and Wendy M. Smith, University of Nebraska-Lincoln); Leadership in Undergraduate Mathematics Education: An Example (Joel Cunningham, Sewanee: The University of the South); The Role of the Mathematics Supervisor in K–12 Education (Carole Greenes, Arizona State University); Leadership in Mathematics Education: Roles and Responsibilities (Alfred S. Posamentier, Mercy College); Toward A Coherent Treatment of Negative Numbers (Kurt Kreith and Al Mendle, University of California, Davis); Leadership Through Professional Collaborations (Jessica Pfeil, Sacred Heart University, and Jenna Hirsch, Borough of Manhattan Community College); Leadership From Within Secondary Mathematics Classrooms: Vignettes Along a Teacher-Leader Continuum (Jan A. Yow, University of South Carolina); Strengthening a Country by Building a Strong Public School Teaching Profession (Kazuko Ito West, Waseda University Institute of Teacher Education). Leadership Notes from the Field: A School in Western Kenya (J.

1984 Mathematical Sciences Professional Directory

Notices of the American Mathematical Society, April 1984, Issue 233, Volume 31, Number 3, Pages 241-360. Providence, Rhode Island USA. ISSN 0002-9920. Calendar of AMS Meetings. THIS CALENDAR lists all meetings which have been approved by the Council prior to the date this issue of the Notices was sent to press. The summer and annual meetings are joint meetings of the Mathematical Association of America and the American Mathematical Society. The meeting dates which fall rather far in the future are subject to change; this is particularly true of meetings to which no numbers have yet been assigned. Programs of the meetings will appear in the issues indicated below. First and second announcements of the meetings will have appeared in earlier issues. ABSTRACTS OF PAPERS presented at a meeting of the Society are published in the journal Abstracts of papers presented to the American Mathematical Society in the issue corresponding to that of the Notices which contains the program of the meeting. Abstracts should be submitted on special forms which are available in many departments of mathematics and from the office of the Society in Providence. Abstracts of papers to be presented at the meeting must be received at the headquarters of the Society in Providence, Rhode Island, on or before the deadline given below for the meeting. Note that the deadline for abstracts submitted for consideration for presentation at special sessions is usually three weeks earlier than that specified below. For additional information consult the meeting announcement and the list of organizers of special sessions.

Lynn Steen Obituary

Lynn Steen, 1941–2015. Northfield: Lynn Arthur Steen, emeritus professor of mathematics at St. Olaf College, died of heart failure on June 21, 2015, in Minneapolis. He was born on Jan. 1, 1941, in Chicago to Dietrich George Berthold and Margery Mayer. His father died when he was an infant, and in 1946 his mother married Navy veteran and musician Sigvart J. Steen, who adopted him. After a year in Decorah, Iowa, the family moved to Staten Island, New York, where his father became chair of the music department at Wagner College and his mother, a contralto, sang with the New York City Opera. Steen graduated from Luther College in 1961 with majors in mathematics and physics and a minor in philosophy; there he was also co-editor of the college … general public. He wrote or edited scores of papers, books, and journal articles and was sought after as an expert consultant and lecturer. Colleagues described him not only as a highly effective writer but also a much-appreciated leader in math education at the state, national, and international levels. He served as president of the Mathematical Association of America, chairman of the Council of Scientific Society Presidents, executive director of the Mathematical Sciences Education Board of the National Research Council, and Fellow of the American Association for the Advancement of Science. Steen gave lectures to mathematical societies in more than a dozen countries, including Australia, South Africa, Switzerland, China, Russia and Hungary. He was an assistant leader on the U.S. Delegation on Mathematics Education to China in 1983. One colleague remembered him as "warm-hearted, generous, and kind.

One Hundred Years of L'enseignement Mathématique

One Hundred Years of L'Enseignement Mathématique: Moments of Mathematics Education in the Twentieth Century. Proceedings of the EM–ICMI Symposium, Geneva, 20–22 October 2000, edited by Daniel CORAY, Fulvia FURINGHETTI, Hélène GISPERT, Bernard R. HODGSON and Gert SCHUBRING. L'ENSEIGNEMENT MATHÉMATIQUE, Genève, 2003. © 2003 L'Enseignement Mathématique, Genève. All rights of translation and reproduction reserved for all countries. ISSN: 0425-0818, ISBN: 2-940264-06-6. TABLE OF CONTENTS: List of participants; The Authors; INTRODUCTION: DANIEL CORAY – BERNARD R. HODGSON. L'ENSEIGNEMENT MATHÉMATIQUE: BIRTH AND STAKES: FULVIA FURINGHETTI, Mathematical instruction in an international perspective: the contribution of the journal L'Enseignement Mathématique; GERT SCHUBRING, L'Enseignement Mathématique and the first International Commission (IMUK): the emergence of international communication and cooperation; GILA HANNA, Journals of mathematics education, 1900–2000; REACTION: JEAN-PIERRE BOURGUIGNON; GENERAL DISCUSSION (reported by CHRIS WEEKS). GEOMETRY: RUDOLPH BKOUCHE, La géométrie dans les premières années de la revue L'Enseignement Mathématique; GEOFFREY HOWSON, Geometry: 1950–70; COLETTE LABORDE, Géométrie – Période 2000 et après; REACTION: NICOLAS ROUCHE; GENERAL DISCUSSION (reported by MARTA MENGHINI). ANALYSIS: JEAN-PIERRE KAHANE, L'enseignement du calcul différentiel et intégral au début du vingtième siècle; MAN-KEUNG SIU, Learning and teaching of analysis in the mid twentieth century: a semi-personal observation; LYNN STEEN, Analysis 2000: challenges and opportunities; REACTION: MICHÈLE ARTIGUE; GENERAL DISCUSSION (reported by MARTA MENGHINI).

Acknowledgments

Acknowledgments. The development of the Minnesota K-12 Mathematics Framework is the result of the combined efforts of many persons. Many stakeholders have worked collaboratively toward improving mathematics and science education in Minnesota. We are grateful to the members of the Board of SciMathMN, under the direction of Cyndy Crist and Kristi Rollag Wangstad, for affirming the importance of this work and approving financial support for this effort. Special thanks go to Sharon Stenglein, Mathematics Specialist at the Minnesota Department of Children, Families, and Learning. In her role at MDCFL, Sharon was responsible for facilitating the work to delineate state standards for mathematics when the process of establishing graduation standards began. She has worked continuously since then to guarantee that the teachers of mathematics in Minnesota have had input into those standards and the revision process. Her vision for the Framework and her commitment to its implementation have been a source of inspiration and “quality improvement” in its development. The Framework’s Leadership Team met three years ago to outline the present state of mathematics and science education in Minnesota and set goals that could be met in this state document. They have worked collaboratively since then to forge a common outline for both the mathematics and science documents and have continued to provide valuable input, direction, and guidance to both the process and the product. Over one hundred thoughtful, talented, and committed K-16 educators from around the state participated in the difficult task of developing Chapter 3. During intense writing conferences, they brainstormed, argued and worked together to reach consensus on what K-12 Minnesota students should know and be able to do in mathematics.