Steffen Nygaard

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Track Thursday, March 30th, 2017 BOARDGAMES

Track Location Thursday, March 30th, 2017 12:00 PM 12:30 PM 1:00 PM 1:30 PM 2:00 PM 2:30 PM 3:00 PM 3:30 PM 4:00 PM 4:30 PM 5:00 PM 5:30 PM 6:00 PM 6:30 PM 7:00 PM 7:30 PM 8:00 PM 8:30 PM 9:00 PM 9:30 PM 10:00 PM 10:30 PM 11:00 PM 11:30 PM 12:00 AM 12:30 AM 1:00 AM 1:30 AM 2:00 AM 2:30 AM 3:00 AM 01 Outpost 02 1862 Railway Mania in Eastern Counties 03 Arkham Horror Superfight! Apples to Apples 04 Forbidden Desert Corrupt Kingdom Terraforming Mars PowerGrid 05 Valeria Card Kingdoms Castle Panic Chrononauts Villages of Valeria TIME Stories 06 Ca$h 'n Guns Ca$h 'n Guns Evolution Colt Express Sailing Toward Osiris Star Wars: Rebellion 13 Mystic Vale: Vale of Magic Blazing Inferno Arkham Horror: The Card Game 14 Legendary Ascension War of Shadows Marvel Heroes! 15 Feast For Odin Gravwell Palaces of Carrara King of Tokyo/NY 16 Fairy Tale Villages of Valeria Pax Porfiriana Collector's Edition Takenoko 17 Ys The Pillars of the Earth Merchants and Marauders 23 Lanterns Demo Castles Caladale Covert Demo Clank! Demo Castles Caladale Lanterns Demo Clank! Demo Lanterns Demo 24 La Granja Shadow Hunters Elder Sign Mansions of Madness Second Edition 25 Age of Discovery Orcs Must Die!: Order - The Boardgame Stone Age 26 Kingdom Builder San Juan Vye Dominion 2E Iron Dragon Betrayal at House on Hill 27 Learn Rome: City of Argent: the Consortium Rome: City of Marble 28 Marble 31 Caylus Lords of Vegas Lanterns 32 Dalmutti The Best of Chronology Zendo 33 Dominant Species 34 Conflict of Heroes: Guadalcanal Conflict of Heroes: Guadalcanal 35 Raiders of the North -

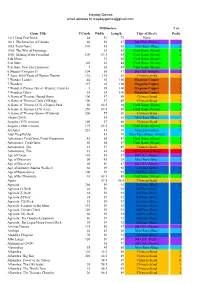

Mayday Games Email Updates to [email protected] # Of

Mayday Games email updates to [email protected]

| Game Title | # Cards | Width (mm) | Length (mm) | Type of Sleeve | # of Packs |
| --- | --- | --- | --- | --- | --- |
| 1313 Dead End Drive | 48 | 53 | 73 | None | |
| 1812: The Invasion of Canada | 60 | 56 | 87 | Std USA (Purple) | 1 |
| 1853 Train Game | 110 | 45 | 68 | Mini Euro (Blue) | 2 |
| 1955: The War of Espionage | | 63 | 88 | Card Game (Green) | 0 |
| 1960: Making of the President | 109 | 63.5 | 88 | Card Game (Green) | 2 |
| 2 de Mayo | | 63 | 88 | Card Game (Green) | |
| 51st State | 126 | 63 | 88 | Card Game (Green) | 2 |
| 51st State New Era Expansion | 7 | 63 | 88 | Card Game (Green) | 1 |
| 6 Nimmt (Category 5) | 104 | 56 | 87 | Std USA (Purple) | 2 |
| 7 Ages: 6000 Years of Human History | 110 | 110 | 89 | Chimera Sized | 2 |
| 7 Wonder Leaders | 42 | 65 | 100 | Magnum Copper | 1 |
| 7 Wonders | 157 | 65 | 100 | Magnum Copper | 2 |
| 7 Wonders Promos (Stevie Wonder, Catan Island & Mannekin Pis) | 3 | 65 | 100 | Magnum Copper | 1 |
| 7 Wonders Cities | 38 | 65 | 100 | Magnum Copper | 1 |
| A Game of Thrones -Board Game | 100 | 57 | 89 | Chimera Sized | 1 |
| A Game of Thrones Clash Of Kings | 100 | 57 | 89 | Chimera Sized | 1 |
| A Game of Thrones LCG -Chapter Pack | 50 | 63.5 | 88 | Card Game (Green) | 1 |
| A Game of Thrones LCG -Core | 250 | 63.5 | 88 | Card Game (Green) | 3 |
| A Game of Thrones Storm Of Swords | 200 | 57 | 89 | Chimera Sized | 2 |
| Abetto (2010) | | 45 | 68 | Mini Euro (Blue) | |
| Acquire (1976 version) | 180 | 57 | 88 | Chimera Sized | 2 |
| Acquire (2008 version) | 175 | 63.5 | 88 | Card Game (Green) | 2 |
| Ad Astra | 216 | 45 | 68 | Mini Euro (Blue) | 3 |
| Adel Verpflichtet | | 45 | 70 | Mini Euro (Blue) -Almost | |
| Adventurer Card Game Portal Expansion | 45 | 64 | 89 | Card Game (Green) | 1 |
| Adventurer: Card Game | 80 | 64 | 89 | Card Game (Green) | 1 |
| Adventurers, The | 12 | 57 | 89 | Chimera Sized | 1 |
| Adventurers, The | 83 | 42 | 64 | Mini Chimera (Red) | |

7 Csoda Keltis

10 2014 / January Game reviews Guildhall DIXIT DOMINION KELTIS Pandemic speicherstadt Expansions » OKKO 7 Csoda Kingdom Builder Tzolk’in Food for thought: What are expansions good for?

Introduction

Dear Reader! By the time readers "pick up" (that is, download) the current issue of JEM magazine, it will already be 2014, and a good many memorable board game events have taken place since the previous issue came out. As I write these lines, we do not yet know "what Baby Jesus will bring", but it is almost certain that there will be plenty of board games under the tree. Some of them may even be what gamer jargon nicknames "kieg": so-called expansions, that is, game variations usually released later for a previously published base game. Expansions can be divided into several groups. Some change the mechanics of the base game itself, sometimes so much that the original game survives in the later variation only at the level of its foundations (such as the one presented in the December JEM magazine ...

... differently, or what else they would add to the game. This is a natural thought once someone has gotten to know a game's mechanics well. Designers and publishers feel the same way, although on their side the motivation for releasing expansions is more complex. When you come across an expansion, experience usually lets you guess what prompted its release: developing a successful base game further so that a session becomes even richer; recognizing and correcting the base game's flaws; carrying the game's core idea forward, and sometimes the desire to strike out on entirely new paths; or simply an economic reason, namely that the publisher wants to squeeze more money out of a game that has become a brand… This issue will help you find your way around these questions. -

Toy & Gift Account Catalog

TOY & GIFT ACCOUNT CATALOG EFFECTIVE AUGUST 1ST, 2017

If you have questions about your account, please contact your authorized ANA distributor or visit www.asmodeena.com for more information.

| SKU | PRODUCT NAME | STUDIOS | MSRP |
| --- | --- | --- | --- |
| AD02 | Android: Mainframe | Fantasy Flight Games | $34.95 |
| BLD02 | The Builders: Antiquity | Bombyx | $17.99 |
| BRN01 | Braintopia | Captain Macaque | $14.99 |
| BRU01 | Bruxelles 1893 | Pearl Games | $59.99 |
| CARD01 | Cardline Animals | Bombyx | $14.99 |
| CE01 | Cosmic Encounter | Fantasy Flight Games | $59.95 |
| CG02 | Cash n Guns (2nd Edition) | Repos | $39.99 |
| CN3003 | Star Trek Catan | Catan Studio | $55.00 |
| CN3025 | Catan Junior | Catan Studio | $30.00 |
| CN3071 | Catan | Catan Studio | $49.00 |
| CN3103 | Catan Traveler | Catan Studio | $45.00 |
| CN3131 | Rivals for Catan | Catan Studio | $25.00 |
| CN3142 | Struggle for Catan | Catan Studio | $13.00 |
| COLT01 | Colt Express | Ludonaute | $39.99 |
| CONC01 | Concept | Repos | $39.99 |
| CPT01 | Captain Sonar | Matagot | $49.99 |
| CROS01 | Crossing | Space Cowboys | $24.99 |
| DIF01 | Dice Forge | Libellud | $39.99 |
| DIX01 | Dixit | Libellud | $34.99 |
| DO7001 | Mystery of the Abbey | Days of Wonder | $59.99 |
| DO7101 | Pirate's Cove | Days of Wonder | $59.99 |
| DO7201 | Ticket to Ride | Days of Wonder | $49.99 |
| DO7901 | Small World | Days of Wonder | $49.99 |
| DO8401 | Five Tribes | Days of Wonder | $59.99 |
| DO8501 | Quadropolis | Days of Wonder | $49.99 |
| DO8601 | Yamatai | Days of Wonder | $59.99 |
| DRM01 | Dream Home | Rebel | $39.99 |
| DSH102 | DS: Std. Matte: 100 Count: BLACK | Arcane Tinmen | $10.99 |
| FD01 | Formula D | Asmodee Studio | $59.99 |
| FFS01 | BG Sleeves Mini American (Pack) | Fantasy Flight Games | $2.49 |
| FT01 | Final Touch | Space Cowboys | $16.99 |

GHO01 Ghost -

Here Be Books & Games News

Board Game Auction FAQ Books for $1 and 50% OFF! New Arrivals & Coming Everything you wanted to know Get books for $1 each and take Soon! No. You cannot use Store Credit, Trade Credit, or exciting event. If everyone enjoys it, we’ll do it again. Game Night at 6 p.m. at the store. around the Mediterranean Sea. Cottage Garden Our first priorities are repairs, Board Game Auction FAQ New Arrivals & Coming Soon! the Forensic Scientist who holds the key to convicting the The Ringmaster, a new meeple for each player, leads a about our first-ever Board Game 50% OFF Hardback books in Several new games arrived in July. coupons to pay for auction purchases. Any discounts you With peace at the borders, improvements to make the build- We’re hosting our first-ever Board Attending members submit a book title for the reading I’ve been looking forward to criminal, but is only able to express their knowledge fairly normal life, but he truly shines when he is Auction... most categories - now until we Check ’em out!... usually receive (military, teacher or rewards program) do Books On Sale! We’re still seeing lots of new summer arrivals. Here's a harmony inside the provinces, ing ADA compliant, floors, paint- Game Auction during August list. We pick one to read each month - usually the one getting a copy of Cottage Garden for through analysis of the scene. The rest are investigators, surrounded by acrobats and the circus. The Acrobats are move! More books added daily... not apply to auction purchases. -

Document 34334

Meeples Cafe Game List (KD) – Updated 23rd June 2014 10 Days in Asia Carcassonne 10 Days in Europe Carcassonne: Big Box 2010 6 Takes! Cargo Noir 7 Wonders Caribbean 7 Wonders: Leaders Cartagena A Game of Thrones: The Board Game (2nd Edition) Castle Panic A Touch of Evil: The Supernatural Game Catan: Cities & Knights Abalone Catan: Cities & Knights – 5-6 Player Extension Abandon Ship Catan: Explorers & Pirates Acquire Catan: Seafarers Age of Discovery Catan: Seafarers 5-6 Player Extension Agricola Caylus Agricola: All Creatures Big and Small Chaos in the Old World Agricola: World Championship Deck - 2011 Chateau Roquefort Airlines Europe Chicago Express Alhambra: Big Box Chicago Poker Amazonas Chicken Cha Cha Cha Android Chromino Apples to Apples Citadels Aquaretto City of Horror Arkham Horror Cloud 9 At the Gates of Loyang Coney Island Atlantis Copycat Automobile Cosmic Cows Ave Caesar Cosmic Encounter Baker's Dozen Cowabunga Bamboleo CrossWise Banana Party Cuba Bang! Dead Panic Bang! The Bullet! Deluxe Pit Bang! The Dice Game Dice Town Battlestar Galactica Discworld: Ankh-Morpork Be Rhymed! Dixit Betrayal at House on the Hill Dixit 2 a.k.a. Dixit Quest Black Sheep Dixit Jinx Blokus Dixit Journey Blokus Duo Dixit Odyssey Blokus Trigon Dominant Species Blood Bowl: Team Manager - The Card Game Dominion Bohnanza Dominion: Hinterlands Bombay Dominion: Intrigue Boomtown Dos Rios Bora Bora Dragon Parade Bugs & Co Dream Factory Burrows Dumb Ass Ca$h 'n Gun$ Dungeon Petz Ca$h 'n Gun$: Yakuzas El Grande (Decennial Edition) Can't Stop Elder Sign -

Board Game Auction Results

Board Game Auction Results Learn to Play Modern Board Kingdomino Review Learn how it all turned out: Games Learn more about this auction: Roz for running the games back and forth between the • Hounded (Iron Druid series) by Kevin Hearne (Erik) same basic gameplay as the original. We’ve played most of window when you get stuck. Meet Objectives to earn richest kingdom filled with waving fields of grain; rich, • 2017 Nederlandse Spellenprijs Best Family Game Nomi- • Dixit - 6+ Games for Fun & Interactive Gatherings • Robinson Crusoe: Adventures on Our Favorite can pass if you want. jealousy inducing deals, This month we’re teach- light, fun and puzzly • El Gaucho - Round ’em Up and Move ’em Out! • Archipelago and solo expansion $45 Gate Room, where the games were on display, and the • Lord of Light by Roger Zelazny (Jonathan) them, including: Europe, Nordic Countries, USA 1910, points and win! Every game offers new challenges. dark forests; sparkling lakes; green pastures; a bit of nee the Cursed Island - A Cooperative 3rd Round, you can’t say any how Tim lost geek cred, ing the Ticket to Ride tile-placement game... • Fiery Dragons - 10 Games for Kids Party Game • Castles of Mad King Ludwig $30 Dragon Room, where the auction was held. Thank you so • Neuromancer by William Gibson (Tim) Rails & Sails, Asia, India, Heart of Africa, Nederlands, and That’s the Learn to Play lineup for May. marsh-land for that homey pluff mud smell; towering • 2017 Gouden Ludo Best Family Game Winner Game Filled with Euro Game words at all. Just act it out! Your what sellers bought series and a variety of • Firefly: The Game - A Shiny • Kingdom Builder $17 much Roz, Liz and Diane for pulling games while I made * Note: you have to be attending meetings for your book U.K. -

Arcane Wonders Ape Games Ares Games Alliance Game

GAMES ALLIANCE GAME DISTRIBUTORS ARES GAMES DIVINITY DERBY Ready to place bets on a race of mythological proportions? Zeus has invited you, along with other divine friends from across the Multiverse, for GAMES a little get–together on Mount Olympus. After a legendary lunch and a few pints of Ambrosia, you start wagering bets on which mythic creature is the fastest - and the only way to know for GAME TRADE MAGAZINE #211 certain is to summon all those creatures GTM contains articles on gameplay, for an epic race, with the Olympic “All- previews and reviews, game related father”, Zeus, as the ultimate judge! fiction, and self contained games and In Divinity Derby, players assume the game modules, along with solicitation role of a god (Anansi, Horus, Marduk, information on upcoming game and Odin, Quetzalcoatl, and Yu Huang the hobby supply releases. Jade Emperor), betting on a race among GTM 211 ...................................$3.99 NOT FINAL ART mythological flying creatures. Scheduled to ship in September 2017. AGS AREU004 .............................................................................................. $39.90 ART FROM PREVIOUS ISSUE GALAXY DEFENDERS: FINAL COUNTDOWN The end of the war is imminent - let’s start the Final Countdown! Expand the Galaxy Defenders agency’s army with APE GAMES these powerful, new agents - Scandium, Vanadium, and Xeno-Warrior - and unleash their unique classes, powers, and items! Plus, take the battlefield to the Third Dimension with new Doors and Windows Stand-up tokens! Also included is an additional set of custom Galaxy Defenders dice. Scheduled to ship in September 2017. AGS GRPR008 ............................. $39.90 LAST FRIDAY: RETURN TO CAMP APACHE Only Evil Can Stop Evil! In the DARK IS THE NIGHT wake of the tragic Camp Apache massacre over a decade ago, an Hunt or be Hunted! A hunter has set up ancient Evil is awakening - again - camp in a dark and dangerous forest. -

2 Rooms and a Boom 3 Deluxe Jigsaw Puzzles 7 Card Slugfest 7

2 Rooms and a Boom Codenames Gloom 3 Deluxe Jigsaw Puzzles Collie of Duty Go Mental 7 Card Slugfest Colosseum Grave Business 7 Wonders Concept Grifters 7 Wonders Duel Cosmic Encounter Grimm Forest A Game of Thrones Coup Grimslingers A Game of Thrones 2nd Edition Cthulu Dice Guesstures Adventure Time Flux Cthulu Gloom Hanabi Age of Steam Cthulu in the House Hanamikoji Amerigo Cutthroat Caverns Hegemonic Apples to Apples D&D Miniatures Entry Pack Hoity Toity Apples to Apples: Disney Edition Dead Man's Treasure I've Never Aquadukt Dead of Winter Igels Arabian Nights Deluxe Hanabi Illuminati Arcadia Quest Dice of Crowns In the Year of the Dragon Arctic Scavengers Dice Throne Infected Arkham Horror Die Macher Inn-Fighting D&D Dice Game Arkham Horror: Black Goat of the Woods Diplomacy Innovation Arkham Horror: Curse of the Dark Pharaoh Dirty Minds Innovation: Figures in the Sand Arkham Horror: Dunwich Disneys Photomosaics Innovation: Echoes of the Past Aye, Dark Overlord Dixit Isaribi Bang! Dixit 2 Jenga Battle Lore Dixit Journey Jigsaw Puzzle Battlestar Galactica Dominion Karnage Betrayal at House on the Hill Dominion: Alchemy Karnage Expansion Between Two Cities Dominion: Base Khaos Ball Bloodbowl: Team Manager Dominion: Dark Ages Killer Bunnies Blue Moon City Dominion: Hinterlands King of the Beasts Bohnanza Dominion: Intrigue King of Tokyo Bootleggers Dominion: Intrigue Update Pack Kingdom Builder Boss Monster Dominion: Prosperity Kingdominos Boss Monster 2 Dominion: Seaside La Beast Boss Monster: Crash Landing Dominion: Update Pack Lanterns -

Evolution Gaming Memes

Tzolk'in Mystic Vale Kingdom Builder *Variable Setup* CodeNames (Card Building) *Variable Setup* King of Tokyo Tiananmen (Worker Placement) Dixit (Word Game) (Press Your Luck) (Area Control) Hanabi Secret Hitler *Shifting Alliances* *Cyclic vs. Radial Connection* (Win by Points) (Secret Information) (Uses Moderator) 2016 (Area Points) (Co-Operative) (Blind Moderator) (Press Your Luck) (Educational Game - History) 2012 (Perception) (Secret Information) Caylus (Connectivity Points) (Secret Information) (Secret Alliances) (Multiple Paths to Victory) (Asymmetric Player Powers) 2010 (Deduction Win Condition) (Win by Points) (Asymmetric Player Powers) 2011 (Worker Placement) Star Realms PatchWork (Pure Strategy) 2016 2013 Dvonn 2012 2016 (1D Linear Board) (Deck Building) (Cover the Board) 2014 (Shrinking Board) (Win by Points) (Card Synergy) 2014 2005 (~Hexagonal Grid) 2014 (Minimal Rules) Calculus (Neutral Pieces) (Continuous Board) Thurn and Taxis (Strategic Perception) (2D Board) (Area Control) WYPS Power Grid Prime Climb (Stackable Pieces - Top Piece Controls) (Edge to Edge Connection) Carcassonne (Catch-Up Mechanic) (Area Points) Lost Cities (Word Game) (Educational Game - Mathematics) 2001 (Minimal Rules) (Worker Placement) (Bidding) Dominion *Star (Connectivity Points) (Suits) (Hexagonal Grid) (Random Strategic Movement) 1997 (Revealed Board) (Map) *Deck Building* (2D Board) (Win by Points) (Win by Points) (Edge to Edge Connection) The Resistance / Avalon Coup (1D Linear Board) 2000 2004 (Card Synergy) (Minimalist Rules) 2006 1999 2009 -

Download Free Feedback Forms From

UK GAMES EXPO 2013 3

WELCOME

WELL HERE WE ARE AT THE BRAND NEW VENUE FOR THE 7TH UK GAMES EXPO. It’s been quite a year putting the show together and with so many participants, events and elements to incorporate, an entire new venue to map, not to mention simply figuring out what goes where, there have been times when the five of us have wondered if we would ever manage to make it all work. If it does work it will be down to the hard work of many dozens of volunteers, games masters, umpires and demonstrators and we thank them all for their hard work. This show started back in 2006 with two or three of us and an idea (or possibly a nightmare). The fact that we have come this far is testimony to that work.

We also thank the traders for coming on board and supporting this major move to a location we hope will be our home for at least the next few years. We especially thank our major sponsors Mayfair Games, Fantasy Flight Games, Asmodee and our Associate sponsors, A1 Comics, Chronicle City, Coiled Spring, Chessex, Surprised Stare Games and Triple Ace Games for their support and for the contents of the goody bags.

04 STAY ON TARGET: DESIGNING THE X-WING MINIATURES GAME

06 RETURN OF WOTAN GAMES: LAURENCE O’BRIEN TELLS THE TALE

08 BOARD GAME RE-DESIGN: COMPETITION FOR BUDDING GAMES DESIGNERS

11 RICHARD BREEZE: MY LIFE IN GAMES

15 THE GAMES DESIGN CHECKLIST: THE DOS AND DON’TS OF CREATING A GAME

19 EXPO GUIDE: WHAT’S GOING ON AT THE EXPO -

Publisher Game 2F Spiele Frischfisch 2F Spiele Funkenschlag Deluxe

Publisher: Games

2F Spiele: Frischfisch; Funkenschlag Deluxe

Abacus Spiele: Ab in die Tonne; Africana; Bang the dice game; Blindes Huhn extrem; Cacao chocolatl; Cacao Diamante; Charly; Chimera; Coloretto; Das Spiel; Das Vermächtnis des Maharaja; Deckscape Der Test; FotoSafari; Game of Trains; Gold; Hanabi; Jolly & Roger; Ladybohn; Leo muss zum Friseur; Micro Robots; Neue Welten; Nmbr9; Pick a dilly; Royals; Samurai Sword Rising Sun; Shanghaien; Stop; Turandot; Valdora mit Erweiterung; Velo City; Word Up; Zooloretto; Zooloretto Goodie-Box; Zooloretto Würfelspiel

Alea: Die Burgen von Burgund; Die Fürsten von Florenz; San Juan; Tadsch Mahal

Amigo: 3 sind eine zu viel; Alle meine Entchen; Alle Vögel sind schon da; Aufsteigend 23 ablegen; Bauboom; Blockers; Bohn to be wild; Bohnanza; Bohnanza 20 Jahre; Bohnanza Das Duell; Bohnedikt; Brain Storm; Bubble Bomb; Bullenparty; Caramba; Dao; Deja-vu; Die Brücke am Rio D'Oro; Die Portale von Molthar; Elfenland; Expedition Neuauflage; Five Crows Königs-Romme; Flori Vielfraß; Freaky; Friesematenten Set 1; Friesematenten Set 2; Galapagos; Halli Galli Party; Hol's der Geier; Hugo Das Schloßgespenst; Ice cool; Intrige; Karma; Kerflip; KrabbelTrabbel; Kuddel muddel; Lecker Mammut!; Matschig; Mein erstes Quartett; Mr.