Malaya Documentation

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Sentence Boundary Detection for Handwritten Text Recognition Matthias Zimmermann

To cite this version: Matthias Zimmermann. Sentence Boundary Detection for Handwritten Text Recognition. Tenth International Workshop on Frontiers in Handwriting Recognition, Université de Rennes 1, Oct 2006, La Baule (France). inria-00103835
HAL Id: inria-00103835, https://hal.inria.fr/inria-00103835, submitted on 5 Oct 2006.
HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.
Sentence Boundary Detection for Handwritten Text Recognition. Matthias Zimmermann, International Computer Science Institute, Berkeley, CA 94704, USA. [email protected]
Abstract: In the larger context of handwritten text recognition systems many natural language processing techniques can potentially be applied to the output of such systems. However, these techniques often assume that the input is segmented into meaningful units, such as sentences. This paper investigates the use of hidden-event language models and a maximum entropy based method for sentence [...]
Figure 1. Typical ambiguity for the position of a sentence boundary token <s> in the context of a period followed by quotes. Examples: 1) The summonses say they are ” likely to persevere in such unlawful conduct . ” <s> They ... 2) ” It comes at a bad time , ” said Ormston . <s> ”A singularly bad time ...

Multiple Segmentations of Thai Sentences for Neural Machine Translation

Proceedings of the 1st Joint SLTU and CCURL Workshop (SLTU-CCURL 2020), pages 240–244, Language Resources and Evaluation Conference (LREC 2020), Marseille, 11–16 May 2020. © European Language Resources Association (ELRA), licensed under CC-BY-NC.
Multiple Segmentations of Thai Sentences for Neural Machine Translation. Alberto Poncelas (1), Wichaya Pidchamook (2), Chao-Hong Liu (3), James Hadley (4), Andy Way (1). (1) ADAPT Centre, School of Computing, Dublin City University, Ireland; (2) SALIS, Dublin City University, Ireland; (3) Iconic Translation Machines; (4) Trinity Centre for Literary and Cultural Translation, Trinity College Dublin, Ireland. {alberto.poncelas, andy.way}@adaptcentre.ie [email protected], [email protected], [email protected]
Abstract: Thai is a low-resource language, so data is often not available in sufficient quantities to train a Neural Machine Translation (NMT) model that performs at a high level of quality. In addition, the Thai script does not use white spaces to delimit the boundaries between words, which adds complexity when building sequence-to-sequence models. In this work, we explore how to augment a set of English–Thai parallel data by replicating sentence pairs with different word segmentation methods on Thai, as training data for NMT models. Using different merge operations of Byte Pair Encoding, different segmentations of Thai sentences can be obtained. The experiments show that combining these datasets improves the performance of NMT models trained with a dataset that has been split using a supervised splitting tool.
Keywords: Machine Translation, Word Segmentation, Thai Language
In Machine Translation (MT), low-resource languages are especially challenging as the amount of parallel data available to train models may not be enough to achieve high translation quality.
1. Combination of Segmented Texts. As the encoder-decoder framework deals with a sequence of tokens, a way to address the Thai language is to split the [...]

Marzuki, Malaysiakini, 24 February 2020, Malaysiakini Reporters

LIVE: Leave it to the Dewan Rakyat to decide on Dr M as PM – Marzuki. Malaysiakini, 24 February 2020. Malaysiakini reporters.
LIVE | With events unfolding rapidly in the country since yesterday, Malaysiakini brings you live coverage here. Follow our reporters' coverage of the political realignment in Malaysia here.
Thank you for following Malaysiakini's live report. That concludes our coverage of the collapse of the PH government and Dr Mahathir Mohamad's resignation as prime minister and Bersatu chairperson. Stay with us tomorrow for further developments in the country's political realignment. If you like our reporting, support independent media by subscribing to Malaysiakini for as little as RM0.55 a day. More information here.
LIVE 12.20am - Bersatu secretary-general Marzuki Yahya denied rumours that Bersatu MPs or leaders had left the party. This came after Dr Mahathir Mohamad announced his resignation as Bersatu chairperson at midday. According to Marzuki, the decision for Bersatu to leave PH was made unanimously by the party's supreme council (MT). When asked by reporters, he also denied the possibility of a Pakatan Nasional collaboration. Elaborating, Marzuki said Dr Mahathir's move "leaves it to the Dewan Rakyat to decide on him as Malaysia's PM. "The question of Umno, PAS or any other party does not arise. It is up to the MPs to support Tun (Dr Mahathir Mohamad) as PM," he said.
11.40pm: Nearly 100 Bersatu supporters gathered outside the party's headquarters while waiting for their leaders, who were meeting tonight. When Bersatu Youth chief Syed Saddiq Abdul Rahman left the building, he was mobbed by supporters who began shouting and pushing reporters aside to clear a path for Saddiq to walk to his car, causing a brief commotion.

A Clustering-Based Algorithm for Automatic Document Separation

A Clustering-Based Algorithm for Automatic Document Separation. Kevyn Collins-Thompson, School of Computer Science, Carnegie Mellon University, 5000 Forbes Avenue, Pittsburgh, PA USA, [email protected]; Radoslav Nickolov, Microsoft Corporation, 1 Microsoft Way, Redmond, WA USA, [email protected]
ABSTRACT: For text, audio, video, and still images, a number of projects have addressed the problem of estimating inter-object similarity and the related problem of finding transition, or ‘segmentation’, points in a stream of objects of the same media type. There has been relatively little work in this area for document images, which are typically text-intensive and contain a mixture of layout, text-based, and image features. Beyond simple partitioning, the problem of clustering related page images is also important, especially for information retrieval problems such as document image searching and browsing. Motivated by this, we describe a model for estimating inter-page similarity in ordered collections of document images, based on a combination of text and layout features. The features are used as input to a discriminative classifier, whose output is used in a constrained clustering criterion. We do a task-based evaluation of our method by applying it to the problem of automatic document separation during batch scanning. Using layout and page numbering features, our algorithm achieved a separation accuracy of 95.6% on the test collection.
Keywords: Document Separation, Image Similarity, Image Classification, Optical Character Recognition
1. INTRODUCTION. The problem of accurately determining similarity between pages or documents arises in a number of settings when building systems for managing document image [...]
[...] contiguous set of pages, but be scattered into several disconnected, ordered subsets that we would like to recombine. Such scenarios are not uncommon when scanning large volumes of paper: for example, one document may be accidentally inserted in the middle of another in the queue.

An Incremental Text Segmentation by Clustering Cohesion

An Incremental Text Segmentation by Clustering Cohesion. Raúl Abella Pérez and José Eladio Medina Pagola, Advanced Technologies Application Centre (CENATAV), 7a #21812 e/ 218 y 222, Rpto. Siboney, Playa, C.P. 12200, Ciudad de la Habana, Cuba. {rabella, jmedina}@cenatav.co.cu
Abstract. This paper describes a new method, called IClustSeg, for linear text segmentation by topic using an incremental overlapped clustering algorithm. Incremental algorithms are able to process new objects as they are added to the collection and, according to the changes, to update the results using previous information. In our approach, we maintain a structure to get an incremental overlapped clustering. The results of the clustering algorithm, when processing a stream, are used any time text segmentation is required, using the clustering cohesion as the criterion for segmenting by topic. We compare our proposal against the best known methods, significantly outperforming them.
1 Introduction. Topic segmentation aims to identify the boundaries in a document with the goal of capturing the latent topical structure. The automatic detection of appropriate subtopic boundaries in a document is a very useful task in text processing. For example, in information retrieval and passage retrieval it can be used to return the documents, segments or passages closest to the user's queries. Another application of topic segmentation is in summarization, where it can be used to select segments of texts containing the main ideas for the summary requested [6]. Many methods for text segmentation by topic have been proposed recently. Usually, they obtain linear segmentations, where the output is a document divided into sequences of adjacent segments [7], [9].

A Text Denormalization Algorithm Producing Training Data for Text Segmentation

A Text Denormalization Algorithm Producing Training Data for Text Segmentation. Kilian Evang, University of Groningen, [email protected]; Valerio Basile, University of Groningen, [email protected]; Johan Bos, University of Groningen, [email protected]
As a first step of processing, text often has to be split into sentences and tokens. We call this process segmentation. It is often desirable to replace rule-based segmentation tools with statistical ones that can learn from examples provided by human annotators who fix the machine's mistakes. Such a statistical segmentation system is presented in Evang et al. (2013). As training data, the system requires the original raw text as well as information about the boundaries between tokens and sentences within this raw text. Although raw as well as segmented versions of text corpora are available for many languages, this required information is often not trivial to obtain because the segmented version also differs from the raw one in other respects. For example, punctuation marks and diacritics have been normalized to canonical forms by human annotators or by rule-based segmentation and normalization tools. This is the case with, e.g., the Penn Treebank, the Dutch Twente News Corpus and the Italian PAISÀ corpus. This problem of missing alignment between raw and segmented text is also noted by Dridan and Oepen (2012). We present a heuristic algorithm that recovers the alignment and thereby produces standoff annotations marking token and sentence boundaries in the raw text. The algorithm is based on the Levenshtein algorithm and is general in that it does not assume any language-specific normalization conventions.

Swettenham Pier Cruise Terminal Upgrade to Drive Economic and Tourism Growth

16-29 February 2020. Front-page teasers: "Interview", "Protect water supply", "YDP MAINPP" (pp. 3 & 7, p. 15).
Swettenham Pier Cruise Terminal upgrade to drive economic and tourism growth. By: AINUL WARDAH SOHILLI. Photos: AHMAD ADIL MUHAMAD & PPC.
GEORGE TOWN – The project to upgrade the Swettenham Pier cruise ship terminal, better known as Swettenham Pier Cruise Terminal (SPCT), is set to have a positive impact on the state's economic and tourism growth once completed. Chief Minister Y.A.B. Tuan Chow Kon Yeow said the project, costing up to RM155 million for Phase 1, would be completed within 24 to 36 months while enhancing the heritage values of the George Town UNESCO World Heritage Site nearby. "This upgrade project is one of the achievements of the cruise industry in Penang. "(And) I would like to thank the [...] Government [...]
[...] by the State Government for this industry is evident in the approval granted to set aside a parcel of land for PPC for 30 years to develop SPCT," he said. It is understood that the upgrade includes extending the existing berth area on the northern side, which will allow two of the world's largest cruise ships (Oasis-class) to berth at the same time, compared with only one at present. In addition, restructuring the current terminal to improve passenger-handling efficiency, redeveloping the old warehouse for commercial activities and creating a ground transportation area (GTA) are among the project's components. Once completed, SPCT will be able to accommodate up to 12,000 passengers at a time, compared with only 8,000 at present. [...] tour bus operators, taxis, trishaws and others [...]
Photo caption: The Chief Minister and guests of honour at the launch of the SPCT upgrade project here recently.

Speech Text: Cabinet Announcement

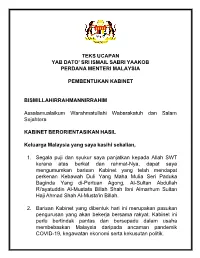

SPEECH BY YAB DATO’ SRI ISMAIL SABRI YAAKOB, PRIME MINISTER OF MALAYSIA: FORMATION OF THE CABINET
BISMILLAHIRRAHMANNIRRAHIM. Assalamualaikum Warahmatullahi Wabarakatuh and greetings.
A RESULTS-ORIENTED CABINET
My beloved Keluarga Malaysia (Malaysian Family),
1. All praise and thanks be to Allah SWT, for by His blessings and grace I am able to announce the Cabinet line-up, which has received the consent of His Majesty the Yang di-Pertuan Agong, Al-Sultan Abdullah Ri'ayatuddin Al-Mustafa Billah Shah Ibni Almarhum Sultan Haji Ahmad Shah Al-Musta'in Billah.
2. The Cabinet formed today is a management team that will work together with the people. This Cabinet must act swiftly and in an integrated manner to free Malaysia from the threat of the COVID-19 pandemic, the economic downturn and political turmoil.
3. It is common knowledge that I have taken on this government as it is. Accordingly, the formation of this Cabinet is a reformulation based on the current situation, in order to maintain stability and to place the interests and safety of Keluarga Malaysia above all else.
4. I acknowledge that we are in a critical situation owing to the COVID-19 pandemic and the economic downturn, made worse by the country's political instability.
5. Based on the projections of the World Health Organization (WHO), we will live with COVID-19 as an endemic disease, one that is permanent and becomes part of human life.
6. The world has recorded the emergence of more aggressive Variants of Concern (VOC), and records worldwide show that those who have been vaccinated can still be infected with COVID-19.
7. Therefore, the government will strengthen the Agenda Nasional Malaysia Sihat (ANMS) in educating Keluarga Malaysia to live with this virus. We must continue to control all COVID-19 risks and practise the new norms in our daily lives.

Actress with a Big Heart

theSun, TELLING IT AS IT IS. ON WEDNESDAY NOVEMBER 4, 2020. No. 7652 PP 2644/12/2012 (031195) www.thesundaily.my
INSIDE: 20% jump in clinical waste (page 2); Double blow for jobseeker (page 3).
FOOD FOR THOUGHT ... A police officer feeds a cat found loitering outside Plaza Hentian Kajang yesterday while patrolling the complex, which is now under an enhanced movement control order.
EPF lifeline? Debate hots up over proposal to allow withdrawal from Account 1. BY ALISHA NUR MOHD NOOR [email protected]
PETALING JAYA: The proposal to allow employees to dip into their retirement fund has turned into a debate of meeting short-term pecuniary needs versus long-term financial security. Unions are all for giving workers access to their Employees Provident Fund (EPF) Account 1 but employers have warned that such a move could be devastating for the country’s future. The Malaysian Trades Union Congress (MTUC) has suggested that cash-strapped EPF contributors be allowed a monthly withdrawal from their Account 1 savings to [...]
[...] needs such as medical fees or to purchase a house. Prime Minister Tan Sri Muhyiddin Yassin had said earlier that more people were seeking the green light to withdraw from Account 1 of their EPF savings. However, he said, it would be difficult to implement given that the government has already disbursed billions in cash aid. Halim conceded that it is a tough decision for EPF to make given that it [...]
[...] meet their immediate financial needs,” he told theSun yesterday. “This is better than a one-off withdrawal that can have a negative impact on overall dividends,” he added. In May, the EPF approved applications from 3.5 million contributors to withdraw RM500 each from their Account 2 under the i-Lestari scheme.

ISSUE 84 • 7 - 13 JANUARY 2018 • Amanah

NO. 84, 7 - 13 JANUARY 2018
Inside: 2018 resolution: Replace the robber Umno-BN government (p. 2); PH convention to announce 'government-in-waiting' offer (p. 6); Interim or permanent PM is not an issue under the Constitution (p. 9).
Photo caption: PROF Redzuan presenting what has been done to constituencies nationwide through redelineation. To him, this is an act of stealing victory, won not through the people's support but through design (of electoral boundaries) and mapping.
AFTER stealing 1MDB money, efforts are now being made to steal the election results as well, through the redelineation of constituencies. A study found that Barisan Nasional (BN) would take over Selangor and regain its two-thirds majority in the country at the coming general election if the redelineated constituencies are used in the 14th general election. This could happen if it proceeds without across-the-board opposition to BN from the people at the coming general election. In Kelantan, however, the redelineation will not affect the people's wish to change the government, AMANAH claims. Full report on page 2.
Page 2, NATIONAL. 2018 resolution: Replace the kleptocratic Umno-BN government
The arrival of 2018, in which the 14th General Election (GE14) will soon take place, is the best opportunity for all Malaysians to save the country from all the problems currently burdening the people.
[...] was very poor back in the 1960s?" he asked. Indonesia, he said, is similar, having become a country that exports its workers abroad, including to Malaysia and the Middle East, and the gap between rich and poor [...]
[...] behind," he said. The cause, he said, is rampant corruption among national leaders, government officials and political leaders, which has caused the nation's wealth to be plundered and the currency to plummet [...]

Battle Against the Bug: Asia’s Fight to Contain Covid-19

Malaysia’s political turmoil | Rohingyas’ grim future | Parasite’s Oscar win
MCI(P) 087/05/2019. Best New Print Product and Best News Brand in Asia-Pacific, International News Media Association (INMA) Global Media Awards 2019.
Battle against the bug: Asia’s fight to contain Covid-19. Countries race against time to contain the spread of coronavirus infections as fears mount of further escalation, with no sign of a vaccine or cure yet.
WE BRING YOU SINGAPORE AND THE WORLD. UP TO DATE: News | Live blog | Mobile pushes | WhatsApp | SMS. IN THE KNOW: Web specials | Newsletters | Microsites | Special Features. IN THE LOOP: Facebook | Twitter | Instagram. ON THE WATCH: Videos | FB live | Live streams. To subscribe to the free newsletters, go to str.sg/newsletters. All newsletters connect you to stories on our straitstimes.com website.
Data Digest. Bats: furry friends or calamitous carriers?
Supposedly originating in the Huanan Wholesale Seafood Market in Wuhan, the deadly Covid-19 outbreak has opened a Pandora’s box around the trade of illegal wildlife and the sale of exotic animals. Live wolf pups, civets, hedgehogs, salamanders and crocodiles were among many listed on an inventory at one of the market’s shops, said The Guardian newspaper.
On Jan 23, a team led by coronavirus specialist Shi Zheng-Li at the Wuhan Institute of Virology reported on life science archive bioRxiv that the Covid-19 sequence was 96.2 per cent similar to a bat virus and had 79.5 per cent similarity to the coronavirus that caused severe acute respiratory syndrome (Sars). Further findings in the Chinese Medical Journal also discovered [...]

Malaysia Politics

March 10, 2020. ECONOMICS. Malaysia Politics: PN Coalition Government Cabinet unveiled
Analysts: Suhaimi Ilias, (603) 2297 8682, [email protected]; Dr Zamros Dzulkafli, (603) 2082 6818, [email protected]; Ramesh Lankanathan, (603) 2297 8685, [email protected]; William Poh Chee Keong, (603) 2297 8683, [email protected]
PM Tan Sri Muhyiddin Yassin unveiled his Cabinet, which has no DPM post (replaced by Senior Ministers) and a banker as Finance Minister. The UMNO and PAS Presidents are not in the lineup. Cabinet formation reduces implementation risk to the stimulus package. Immediate challenges are navigating politics and managing the economy amid the risk of a no-confidence motion at the Parliament sitting on 18 May-23 June 2020 and economic downsides as the crude oil price slump adds to the COVID-19 outbreak.
No DPM but Senior Ministers instead; larger Cabinet reflecting coalition makeup; and a banker as Finance Minister
Against the long-standing tradition, there is no Deputy PM post in this Cabinet. The Constitution also makes no provision on DPM appointment. Instead, Senior Minister status is assigned to the Cabinet posts in charge of the 1) International Trade & Industry, 2) Defence, 3) Works, and 4) Education portfolios. These Senior Minister posts are distributed between what we see as key representations in the Perikatan Nasional (PN) coalition, i.e. former PKR Deputy President turned independent Datuk Seri Azmin Ali (International Trade & Industry), UMNO Vice President Datuk Seri Ismail Sabri Yaakob (Defence), Gabungan Parti Sarawak (GPS) Chief Whip Datuk Seri Fadillah Yusof (Works) and Supreme Council member of the PM’s party, Parti Pribumi Bersatu Malaysia (Bersatu), Dr Mohd Radzi Md Jidin (Education).