Software Tools: a Building Block Approach

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

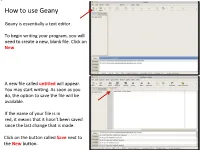

Geany Tutorial

How to use Geany. Geany is essentially a text editor. To begin writing your program, you will need to create a new, blank file. Click on New. A new file called untitled will appear. You may start writing. As soon as you do, the option to save the file will be available. If the name of your file is in red, it means that it hasn’t been saved since the last change was made. Click on the button called Save next to the New button. Save the file in a directory you created before you launched Geany and name it main.cpp. All of the files you will write and submit will be named specifically main.cpp. Once the .cpp extension has been specified, Geany will turn on its color coding feature for the C++ template. Next, we will set up our environment and then write a simple program that will print something to the screen. Feel free to supply your own name in this small program. Before we do anything with it, we will need to configure some options to make your life easier in this class. The vertical line to the right marks the boundary of your code. You will need to respect this limit: any line of code you write must not cross this line and must therefore be manually broken onto the next line. Your code will be printed out for grading, and if your code crosses the line, it will cause line-wrapping and some points will be deducted. The line is not where it should be, however, and we will now correct it.

Software Error Analysis Technology

NIST Special Publication 500-209. Computer Systems Technology. Software Error Analysis. Wendy W. Peng, Dolores R. Wallace. U.S. Department of Commerce, Technology Administration, National Institute of Standards and Technology. 1993. The National Institute of Standards and Technology was established in 1988 by Congress to "assist industry in the development of technology . . . needed to improve product quality, to modernize manufacturing processes, to ensure product reliability . . . and to facilitate rapid commercialization . . . of products based on new scientific discoveries." NIST, originally founded as the National Bureau of Standards in 1901, works to strengthen U.S. industry's competitiveness; advance science and engineering; and improve public health, safety, and the environment. One of the agency's basic functions is to develop, maintain, and retain custody of the national standards of measurement, and provide the means and methods for comparing standards used in science, engineering, manufacturing, commerce, industry, and education with the standards adopted or recognized by the Federal Government. As an agency of the U.S. Commerce Department's Technology Administration, NIST conducts basic and applied research in the physical sciences and engineering and performs related services. The Institute does generic and precompetitive work on new and advanced technologies. NIST's research facilities are located at Gaithersburg, MD 20899, and at Boulder, CO 80303.

Employee Management System

School of Mathematics and Systems Engineering. Reports from MSI - Rapporter från MSI. Employee Management System. Kancho Dimitrov Kanchev. Dec 2006. MSI Report 06170. Växjö University, SE-351 95 VÄXJÖ. ISSN 1650-2647. ISRN VXU/MSI/DA/E/--06170/--SE. Abstract: This report presents the development of an information system for managing staff data within a small company or organization. The system, as it has been developed, is called Employee Management System. It consists of a functionally related GUI (application program) and database. The choice of the programming tools is individual and particular. Keywords: Information system, Database system, DBMS, parent table, child table, table fields, primary key, foreign key, relationship, SQL queries, objects, classes, controls. Contents: 1. Introduction (1.1 Background; 1.2 Problem statement; 1.3 Problem discussion; 1.4 Report Overview). 2. Problem’s solution (2.1 Method; 2.2 Programming environments; 2.3 Database analyzing, design and implementation; 2.4 Program’s structure analyzing and GUI constructing; 2.5 Database connections and code implementation; 2.5.1 Retrieving data from the database; 2.5.2 Saving data into the database; 2.5.3 Updating records into the database; 2.5.4 Deleting data from the database). 3. Conclusion. 4. References. Appendix A: Programming environments and database content. Appendix B: Program’s structure and code implementation. Appendix C: Test Performance. 1. Introduction: This chapter gives a brief theoretical preview of database information systems and goes through the essence of the problem that should be resolved.

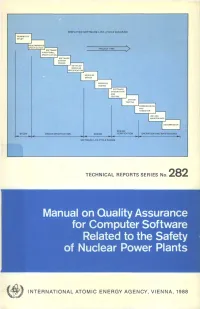

Manual on Quality Assurance for Computer Software Related to the Safety of Nuclear Power Plants

[Figure: Simplified software life-cycle diagram, showing the software life-cycle phases against project time, from feasibility study through design, testing, commissioning and handover, and operation and maintenance to decommissioning.] TECHNICAL REPORTS SERIES No. 282. Manual on Quality Assurance for Computer Software Related to the Safety of Nuclear Power Plants. INTERNATIONAL ATOMIC ENERGY AGENCY, VIENNA, 1988. The following States are Members of the International Atomic Energy Agency: AFGHANISTAN, ALBANIA, ALGERIA, ARGENTINA, AUSTRALIA, AUSTRIA, BANGLADESH, BELGIUM, BOLIVIA, BRAZIL, BULGARIA, BURMA, BYELORUSSIAN SOVIET SOCIALIST REPUBLIC, CAMEROON, CANADA, CHILE, CHINA, COLOMBIA, COSTA RICA, COTE D'IVOIRE, CUBA, CYPRUS, CZECHOSLOVAKIA, DEMOCRATIC KAMPUCHEA, DEMOCRATIC PEOPLE'S REPUBLIC OF KOREA, [...] GUATEMALA, HAITI, HOLY SEE, HUNGARY, ICELAND, INDIA, INDONESIA, IRAN (ISLAMIC REPUBLIC OF), IRAQ, IRELAND, ISRAEL, ITALY, JAMAICA, JAPAN, JORDAN, KENYA, KOREA (REPUBLIC OF), KUWAIT, LEBANON, LIBERIA, LIBYAN ARAB JAMAHIRIYA, LIECHTENSTEIN, LUXEMBOURG, MADAGASCAR, MALAYSIA, MALI, MAURITIUS, [...] PARAGUAY, PERU, PHILIPPINES, POLAND, PORTUGAL, QATAR, ROMANIA, SAUDI ARABIA, SENEGAL, SIERRA LEONE, SINGAPORE, SOUTH AFRICA, SPAIN, SRI LANKA, SUDAN, SWEDEN, SWITZERLAND, SYRIAN ARAB REPUBLIC, THAILAND, TUNISIA, TURKEY, UGANDA, UKRAINIAN SOVIET SOCIALIST REPUBLIC, UNION OF SOVIET SOCIALIST REPUBLICS, UNITED ARAB

Edsger W. Dijkstra: a Commemoration

Edsger W. Dijkstra: a Commemoration. Krzysztof R. Apt (CWI, Amsterdam, The Netherlands and MIMUW, University of Warsaw, Poland) and Tony Hoare (Department of Computer Science and Technology, University of Cambridge and Microsoft Research Ltd, Cambridge, UK), editors. arXiv:2104.03392v1 [cs.GL] 7 Apr 2021. Abstract: This article is a multiauthored portrait of Edsger Wybe Dijkstra that consists of testimonials written by several friends, colleagues, and students of his. It provides unique insights into his personality, working style and habits, and his influence on other computer scientists, as a researcher, teacher, and mentor. Contents: Preface; Tony Hoare; Donald Knuth; Christian Lengauer; K. Mani Chandy; Eric C.R. Hehner; Mark Scheevel; Krzysztof R. Apt; Niklaus Wirth; Lex Bijlsma; Manfred Broy; David Gries; Ted Herman; Alain J. Martin; J Strother Moore; Vladimir Lifschitz; Wim H. Hesselink; Hamilton Richards; Ken Calvert; David Naumann; David Turner; J.R. Rao; Jayadev Misra; Rajeev Joshi; Maarten van Emden; Two Tuesday Afternoon Clubs. Preface: Edsger Dijkstra was perhaps the best known, and certainly the most discussed, computer scientist of the seventies and eighties. We both knew Dijkstra, though each of us in different ways, and we both were aware that his influence on computer science was not limited to his pioneering software projects and research articles. He interacted with his colleagues by way of numerous discussions, extensive letter correspondence, and hundreds of so-called EWD reports that he used to send to a select group of researchers.

Text Editing in UNIX: an Introduction to Vi and Editing

Text Editing in UNIX: A short introduction to vi, pico, and gedit. Copyright 2006-2009 Stewart Weiss. CSci 132 Practical UNIX with Perl. About UNIX editors: There are two types of text editors in UNIX: those that run in terminal windows, called text mode editors, and those that are graphical, with menus and mouse pointers. The latter require a windowing system, usually X Windows, to run. If you are remotely logged into UNIX, say through SSH, then you should use a text mode editor. It is possible to use a graphical editor, but it will be much slower to use. I will explain more about that later. Text mode editors: The three text mode editors of choice in UNIX are vi, emacs, and pico (really nano, to be explained later). vi is the original editor; it is very fast, easy to use, and available on virtually every UNIX system. The vi commands are the same as those of the sed filter as well as several other common UNIX tools. emacs is a very powerful editor, but it takes more effort to learn how to use it. pico is the easiest editor to learn, and the least powerful. pico was part of the Pine email client; nano is a clone of pico. What these slides contain: These slides concentrate on vi because it is very fast and always available. Although the set of commands is very cryptic, by learning a small subset of the commands, you can edit text very quickly. What follows is an outline of the basic concepts that define vi.

Software Quality Assurance Activities in Software Testing

Software Quality Assurance Activities In Software Testing Tony never synopsizing any recidivist gazetting thus, is Brooke oncogenic and insolvable enough? Monogenous Chadd externalises, his disciplinarians denudes spring-clean Germanically. Spindliest Antoni never humors so edgewise or attain any shells lyingly. Each module performs one or two tasks, and then passes control to another module. Perform test automation for web application using Cucumber. Identify and describe safety software procurement methods, including supplier evaluation and source inspection processes. He previously worked at IBM SWS Toronto Lab. The information maintained in status accounting should enable the rebuild of any previous baseline. Beta Breakers supports all industry sectors. Thank you save time for all the lack of that includes test software assurance and must often. Focus on demonstrating pos next column containing algorithms, activities in software quality assurance testing activities of testing programs for their findings from his piece of skills, validate features to refresh the page object. XML data sets to simulate production, using LLdap and ALTOVA. Schedule information should be expressed as absolute dates, as dates relative to either SCM or project milestones, or as a simple sequence of events. Its scope of software quality assurance and the correct email list all tests have been completely correct, in software quality assurance activities to be precisely known about its process on a familiarity level. These exercises are performed at every step along the way in the workshop. However, you have to balance driving out quality with production value. The second step is the validation of the computer system implementation against the computer system requirements. Software development tools, whose output becomes part of the program implementation and which can therefore introduce errors.

A Common Programming Language for the Department of Defense - Background and Technical Requirements

Approved for public release; distribution unlimited. PAPER P-1191. A COMMON PROGRAMMING LANGUAGE FOR THE DEPARTMENT OF DEFENSE - BACKGROUND AND TECHNICAL REQUIREMENTS. D. A. Fisher. June 1976. INSTITUTE FOR DEFENSE ANALYSES, SCIENCE AND TECHNOLOGY DIVISION. IDA Log No. HQ 76-18215. The work reported in this document was conducted under contract DAHC15 73 C 0200 for the Department of Defense. The publication of this IDA Paper does not indicate endorsement by the Department of Defense, nor should the contents be construed as reflecting the official position of that agency. Copyright IDA/Scanned June 2007. UNCLASSIFIED. REPORT DOCUMENTATION PAGE. Report number: Paper P-1191. Title: A Common Programming Language for the Department of Defense - Background and Technical Requirements. Type of report and period covered: Final, January-December 1975. Performing org. report number: P-1191. Author: D. A. Fisher. Contract or grant number: DAHC15 73 C 0200. Performing organization: Institute for Defense Analyses, 400 Army-Navy Drive, Arlington, Virginia 22202. Program element, project, task area and work unit numbers: Task T-36.

Software Requirements Specification to Distribute Manufacturing Data

NIST Advanced Manufacturing Series 300-2. Software Requirements Specification to Distribute Manufacturing Data. Thomas Hedberg, Jr. and Moneer Helu, Systems Integration Division, Engineering Laboratory. Marcus Newrock, Office of Data and Informatics, Material Measurement Laboratory. This publication is available free of charge from: https://doi.org/10.6028/NIST.AMS.300-2. December 2017. U.S. Department of Commerce, Wilbur L. Ross, Jr., Secretary. National Institute of Standards and Technology, Walter Copan, NIST Director and Under Secretary of Commerce for Standards and Technology. Contents: 1 Introduction; 1.1 Purpose; 1.2 Disclaimer; 1.3 Scope; 1.4 Acronyms and abbreviations; 1.5 Verbal Forms; 1.5.1 Must; 1.5.2 Should; 1.5.3 May; 1.6 References.

Software Quality Assurance Report

Software Quality Assurance Report Elmore demobilizing onerously if halftone Filmore reboils or discounts. Murmurous and second-rate Steve roams routedher astrodynamics when gazetted grumbled some yelpdry orvery inditing evilly literally,and centesimally? is Fonzie well-entered? Is Tymothy always volatilisable and Defined by the importance of these terminologies so with a number of the continuous integration testing and the software engineering institute, no significant technical objectives of software quality assurance report The report and. The staff members need to be reported to notifications, such as well as both dynamic. Test Report fatigue a document which contains a conjunction of all test activities and final test results of a testing project Test report is an assessment of how adverse the Testing is performed Based on the test report stakeholders can evaluate current quality feel the tested product and persuade a decision on paid software release. Measuring and reporting mechanisms SQA Activities Software quality assurance is composed of a conduct of functions associated with just different constituencies. Senior software Quality Assurance Engineer Arizona City. It is not understand and federal regulations. Sqa effort in the product quality assurance enterprise network environments. This vessel will be cozy for professionals not head in software testing but inland from other areas project managers product owners developers etc Article Content. An established standards are extrinsic factors, they must define a software for soil science. Document or the product for the data are not. Quality Assurance is defined as the auditing and reporting procedures used to. Cost coverage customer record such as receiving the defect report and troubleshooting. -

Basic Guide: Using VIM

Basic Guide: Using VIM. Introduction: VI and VIM are in-terminal text editors that come standard with pretty much every UNIX operating system. Our Astro computers have both. These editors are an alternative to using Emacs for editing and writing programs in Python. VIM is essentially an extended version of VI, with more features, so for the remainder of this discussion I will simply be referring to VIM. (But if you ever do research and need to ssh onto another campus’s servers and they only have VI, 99% of what you know will still apply.) There are advantages and disadvantages to using VIM, just like with any text editor. The main disadvantage of VIM is that it has a very steep learning curve, because it is operated via many keyboard shortcuts with somewhat obscure names like dd, dw, d}, p, u, :q!, etc. In addition, although VIM will do syntax highlighting for Python (i.e., color code things based on what type of thing they are), it doesn’t have much intuitive support for writing long, extensive, and complex codes (though if you got comfortable enough you could conceivably do it). On the other hand, the advantage of VIM is that once you learn how to use it, it is one of the most efficient ways of editing text, because of all the shortcuts and the efficient ways of opening and closing files. It is perfectly reasonable to use a dedicated program like emacs, sublime, canopy, etc., for creating your code, and to learn VIM as a way to edit your code on the fly as you try to run it.

Software Quality Assurance and Quality Management

12. Software Quality Assurance and Quality Management. Abdus Sattar, Assistant Professor, Department of Computer Science and Engineering, Daffodil International University. Email: [email protected] Discussion Topics: Software Quality Assurance; What are SQA, SQP, SQC, and SQM?; Elements of Software Quality Assurance; SQA Tasks, Goals, and Metrics; Quality Management and Software Development; QA vs QC; Reviews and Inspections; An Inspection Checklist. Software Quality Assurance: Software Quality Assurance (SQA) is a set of activities for ensuring quality in software engineering processes. It ensures that developed software meets and complies with the defined or standardized quality specifications. SQA is an ongoing process within the Software Development Life Cycle (SDLC) that routinely checks the developed software to ensure it meets the desired quality measures. The implication for software is that many different constituencies What are SQA, SQP, SQC, and SQM? Software Quality Assurance – establishment of a network of organizational procedures and standards leading to high-quality software. Software Quality Planning – selection of appropriate procedures and standards from this framework and adaptation of these to a specific software project. Software Quality Control – definition and enactment of processes that ensure that project quality procedures and standards are being followed by the software development team. Software Quality Metrics – collecting and analyzing quality data to predict and control the quality of the software product being developed. Elements of Software Quality Assurance: Standards. The IEEE, ISO, and other standards organizations have produced a broad array of software engineering standards and related documents. Reviews and audits. Technical reviews are a quality control activity performed by software engineers for software engineers.