High-Quality Spatial Interpolation of Interlaced Video

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Problems on Video Coding

Problems on Video Coding Guan-Ju Peng Graduate Institute of Electronics Engineering, National Taiwan University 1 Problem 1 How to display digital video designed for TV industry on a computer screen with best quality? Hint: computer display is 4:3, 1280x1024, 72 Hz, progressive. Supposing the digital TV display is 4:3 640x480, 30Hz, Interlaced. The problem becomes how to convert the video signal from 4:3 640x480, 60Hz, Interlaced to 4:3, 1280x1024, 72 Hz, progressive. First, we convert the video stream from interlaced video to progressive signal by a suitable de-interlacing algorithm. Thus, we introduce the de-interlacing algorithms first in the following. 1.1 De-Interlacing Generally speaking, there are three kinds of methods to perform de-interlacing. 1.1.1 Field Combination Deinterlacing • Weaving is done by adding consecutive fields together. This is fine when the image hasn't changed between fields, but any change will result in artifacts known as "combing", when the pixels in one frame do not line up with the pixels in the other, forming a jagged edge. This technique retains full vertical resolution at the expense of half the temporal resolution. • Blending is done by blending, or averaging consecutive fields to be displayed as one frame. Combing is avoided because both of the images are on top of each other. This instead leaves an artifact known as ghosting. The image loses vertical resolution and temporal resolution. This is often combined with a vertical resize so that the output has no numerical loss in vertical resolution. The problem with this is that there is a quality loss, because the image has been downsized then upsized.
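The two field-combination methods in the excerpt above can be sketched in a few lines. This is a minimal illustration, not code from the cited document: the function names `weave` and `blend`, the representation of a field as a list of pixel rows, and the convention that the top field supplies the even rows are all assumptions made for the example.

```python
def weave(top_field, bottom_field):
    """Interleave two fields into one full-height frame.

    Assumption for this sketch: the top field supplies rows 0, 2, 4, ...
    and the bottom field supplies rows 1, 3, 5, ...
    """
    frame = []
    for top_row, bottom_row in zip(top_field, bottom_field):
        frame.append(list(top_row))
        frame.append(list(bottom_row))
    return frame


def blend(top_field, bottom_field):
    """Average two fields pixel by pixel, giving one half-height frame."""
    return [
        [(a + b) / 2 for a, b in zip(top_row, bottom_row)]
        for top_row, bottom_row in zip(top_field, bottom_field)
    ]


# Two tiny 2x2 fields holding grayscale values (hypothetical data).
top = [[10, 10], [30, 30]]
bottom = [[20, 20], [40, 40]]

woven = weave(top, bottom)      # 4 rows: full vertical resolution
blended = blend(top, bottom)    # 2 rows: needs a vertical resize afterwards
```

Weaving keeps every input line, which is why it preserves vertical resolution but shows combing when the two fields were captured at different moments. Blending returns a half-height frame, which matches the excerpt's remark that it is usually followed by a vertical resize.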

Comparison of HDTV Formats Using Objective Video Quality Measures

Multimed Tools Appl DOI 10.1007/s11042-009-0441-2 Comparison of HDTV formats using objective video quality measures Emil Dumic & Sonja Grgic & Mislav Grgic © Springer Science+Business Media, LLC 2010 Abstract In this paper we compare some of the objective quality measures with subjective, in several HDTV formats, to be able to grade the quality of the objective measures. Also, comparison of objective and subjective measures between progressive and interlaced video signal will be presented to determine which scanning emission format is better, even if it has different resolution format. Several objective quality measures will be tested, to examine the correlation with the subjective test, using various performance measures. Keywords Video quality · PSNR · VQM · SSIM · TSCES · HDTV · H.264/AVC · RMSE · Correlation 1 Introduction High-Definition Television (HDTV) acceptance in home environments directly depends on two key factors: the availability of adequate HDTV broadcasts to the consumer’s home and the availability of HDTV display devices at mass market costs [6]. Although the United States, Japan and Australia have been broadcasting HDTV for some years, real interest of the general public appeared recently with the severe reduction of HDTV home equipment price. Nowadays many broadcasters in Europe have started to offer HDTV broadcasts as part of Pay-TV bouquets (like BSkyB, Sky Italia, Premiere, Canal Digital ...). Other major public broadcasters in Europe have plans for offering HDTV channels in the near future. The announcement of Blu-Ray Disc and Game consoles with HDTV resolutions has also increased consumer demand for HDTV broadcasting. The availability of different HDTV image formats such as 720p/50, 1080i/25 and 1080p/50 places the question for many users which HDTV format should be used with which compression algorithm, together with the corresponding bit rate.

Viarte Remastering of SD to HD/UHD & HDR Guide

Viarte SDR-to-HDR Up-conversion & Digital Remastering of SD/HD to HD/UHD Services 1. Introduction As trends move rapidly towards online content distribution and bigger and brighter progressive UHD/HDR displays, the need for high quality remastering of SD/HD and SDR to HDR up-conversion of valuable SD/HD/UHD assets becomes more relevant than ever. Various technical issues inherited in legacy content hinder the immersive viewing experience one might expect from these new HDR display technologies. In particular, interlaced content needs to be properly deinterlaced, and frame rate converted in order to accommodate OTT or Blu-ray re-distribution. Equally important, film grain or various noise conditions need to be addressed, so as to avoid noise being further magnified during edge-enhanced upscaling, and to avoid further perturbing any future SDR to HDR up-conversion. Film grain should no longer be regarded as an aesthetic enhancement, but rather as a costly nuisance, as it not only degrades the viewing experience, especially on brighter HDR displays, but also significantly increases HEVC/H.264 compressed bit-rates, thereby increasing online distribution and storage costs. 2. Digital Remastering and SDR to HDR Up-Conversion Process There are several steps required for a high quality SD/HD to HD/UHD remastering project. The very first step may be tape scan. The digital master forms the baseline for all further quality assessment. isovideo's SD/HD to HD/UHD digital remastering services use our proprietary, state-of-the-art award-winning Viarte technology. Viarte's proprietary motion processing technology is the best available.

HTS3450/77 Philips DVD Home Theater System with Video

Philips DVD home theater system with Video Upscaling up to 1080i DivX Ultra HTS3450 Turn up your experience with HDMI and video upscaling This stylish High Definition digital home entertainment system plays practically any disc in high quality Dolby and DTS multi-channel surround sound. So just relax and fully immerse yourself in movies and music at home. Great audio and video performance • HDMI digital output for easy connection with only one cable • Video Upscaling for improved resolution of up to 1080i • High definition JPEG playback for images in true resolution • DTS, Dolby Digital and Pro Logic II surround sound • Progressive Scan component video for optimized image quality Play it all • Movies: DVD, DVD+R/RW, DVD-R/RW, (S)VCD, DivX • Music: CD, MP3-CD, CD-R/RW & Windows Media™ Audio • DivX Ultra Certified for enhanced playback of DivX videos • Picture CD (JPEG) with music (MP3) playback Quick and easy set-up • Easy-fit™ connectors with color-coding for a simple set-up Highlights HDMI for simple AV connection: HDMI stands for High Definition Multimedia Interface. It is a direct digital connection that can carry digital HD video as well as digital multichannel audio. By eliminating the conversion to analog signals it delivers perfect picture and sound quality, completely free ... High definition JPEG playback: High definition JPEG playback lets you view your pictures on your television in resolutions as high as two megapixels. Now you can view your digital pictures in absolute clarity, without loss of quality or detail - and share them with friends and family in the comfort of your living ... Progressive Scan: Progressive Scan doubles the vertical resolution of the image resulting in a noticeably sharper picture. Instead of sending a field comprising the odd lines to the screen first, followed by the field with the even lines, both fields are written at one time.

BA(Prog)III Yr 14/04/2020 Displays Interlacing and Progressive Scan

BA(prog)III yr 14/04/2020 Displays • Colored phosphors on a cathode ray tube (CRT) screen glow red, green, or blue when they are energized by an electron beam. • The intensity of the beam varies as it moves across the screen, so some colors glow brighter than others. • Finely tuned magnets around the picture tube aim the electrons onto the phosphor screen, while the intensity of the beam is varied according to the video signal. This is why you needed to keep speakers (which have strong magnets in them) away from a CRT screen. • A strong external magnetic field can skew the electron beam to one area of the screen and sometimes cause a permanent blotch that cannot be fixed by degaussing—an electronic process that readjusts the magnets that guide the electrons. • If a computer displays a still image or words onto a CRT for a long time without changing, the phosphors will permanently change, and the image or words can become visible, even when the CRT is powered down. Screen savers were invented to prevent this from happening. • Flat screen displays are all-digital, using either liquid crystal display (LCD) or plasma technologies, and have replaced CRTs for computer use. • Some professional video producers and studios prefer CRTs to flat screen displays, claiming colors are brighter and more accurately reproduced. • Full integration of digital video in cameras and on computers eliminates the analog television form of video, from both the multimedia production and the delivery platform. • If your video camera generates a digital output signal, you can record your video direct-to-disk, where it is ready for editing.

What the Heck Is HDTV?

Chapter 1 What the Heck Is HDTV? In This Chapter • Understanding the acronyms • Transmitting from ATSC to the world • Going wide • Avoiding the pitfalls Since the transition to color TV in the 1950s and ’60s, nothing — nothing!! — has had as much impact on the TV world as HDTV (high-definition TV) and digital TV. That’s right. TV is going digital, following in the footsteps of, well, everything. We’re in the early days of this transition to a digital TV world (a lot of TV programming is still all-analog, for example), and this stage of the game can be confusing. In this chapter, we alleviate HDTV anxiety by telling you what you need to know about HDTV, ATSC, DTV, and a bunch of other acronyms and tech terms. We also tell you why you’d want to know these terms and concepts, how great HDTV is, and what an improvement it is over today’s analog TV (as you can see when you tune in to HDTV). Finally, we guide you through the confusing back alleys of HDTV and digital TV, making sure you know what’s HDTV and what’s not. Almost everyone involved with HDTV has noticed that consumer interest is incredibly high with all things HDTV! As a result, a lot of device makers and other manufacturers are trying to cash in on the action by saying their products are “HDTV” (when they are not) or talking about such things as “HDTV-compatible” when it might be meaningless (like on a surge protector/electrical plug strip).

A Review and Comparison on Different Video Deinterlacing

International Journal of Research ISSN NO:2236-6124 A Review and Comparison on Different Video Deinterlacing Methodologies 1Boyapati Bharathidevi, 2Kurangi Mary Sujana, 3Ashok kumar Balijepalli 1,2,3 Asst. Professor, Universal College of Engg & Technology, Perecherla, Guntur, AP, India-522438 Abstract— Video deinterlacing is a key technique in digital video processing, particularly with the widespread usage of LCD and plasma TVs. Interlacing is a widely used technique, for television broadcast and video recording, to double the perceived frame rate without increasing the bandwidth. But it presents annoying visual artifacts, such as flickering and silhouette "serration," during the playback. Existing state-of-the-art deinterlacing methods either ignore the temporal information to provide real-time performance but lower visual quality, or estimate the motion for better deinterlacing but with a trade-off of higher computational cost. The question `to interlace or not to interlace' divides the TV and the PC communities. A proper answer requires a common understanding of what is possible nowadays in deinterlacing video signals. This paper outlines the most relevant methods, and provides a relative comparison. Interlaced videos are generally preferred in video broadcast and transmission systems as they reduce the amount of data to be broadcast. Transmission of interlaced videos was widely popular in various television broadcasting systems such as NTSC [2], PAL [3], SECAM. Many broadcasting agencies made huge profits with interlaced videos. Video acquiring systems on many occasions naturally acquire interlaced video and since this also proved to be an efficient way, the popularity of interlaced videos escalated.

Video Terminology Video Standards Progressive Vs

VIDEO TERMINOLOGY VIDEO STANDARDS 1. NTSC - 525 scanlines, frame rate 30 fps: North & Central America, Philippines & Taiwan. NTSC J - Japan has a darker black. 2. PAL - 625 scanlines, 25 fps: Europe, Scandinavia, parts of Asia, Pacific & South Africa. PAL in Brazil is 30 fps and PAL colours. 3. SECAM: France, Russia, Middle East and North Africa. PROGRESSIVE VS INTERLACED VIDEO All computer monitors use a progressive scan - each scan line in sequence. Interlacing is only for CRT monitors. LCD monitors work totally differently - no need to worry about it. Interlacing is for broadcast TV: every other line is displayed alternately. FRAME RATES As we transition from analogue video to digital video: film is 24 fps, PAL video 25 fps, NTSC 30 fps. Actually film and NTSC are slightly different but we don't need to worry about that for now. IMAGE SIZE All video is shot at 72 px/inch - DV NTSC - 720 x 480 (SD is 720 x 486), DV PAL - 720 x 576 (SD PAL is 720 x 576). HD comes in both progressive and interlaced. 480i is the usual broadcast TV format; 480p is 480 progressive. 720i is 720 interlaced; 720p is progressive. 720 means 720 lines of vertical resolution; 1080 is 1080 lines. 1080i is most popular. 720p is 1280 x 720, HD 1080 is 1920 x 1080 px. All HD formats are 16:9 aspect ratio. Traditional TV is 4:3 aspect ratio. HDV is 1440 x 1080. New format - is it the new HD version of DV? Cameras like the Sony and JVC make minor alterations to this format when shooting. In summary: HD 1080i = 1920 x 1080, HD 720p = 1280 x 720, Traditional = 720 x 480 (NTSC), 720 x 576 (PAL). VIDEO OUTPUTS Analog Composite, S-Video, Component in increasing quality.

Docketfilecopyoriginal

Before the FEDERAL COMMUNICATIONS COMMISSION Washington, D.C. 20554 In the Matter of Advanced Television Systems and Their Impact Upon the Existing Television Broadcast Service DOCKET FILE COPY ORIGINAL REPLY COMMENTS OF BUSINESS SOFTWARE ALLIANCE The Business Software Alliance ("BSA"), on behalf of its members, hereby submits the following Reply Comments in response to the Federal Communications Commission's ("Commission") Fourth Further Notice of Proposed Rule Making and Third Notice of Inquiry in the above-referenced proceeding.¹ BSA believes that the Commission should refrain at this stage from prematurely implementing decisions that have the potential to preempt a full technical analysis of the proper technology to be deployed for the Advanced Television service ("ATV"). It is critical for achievement of the Commission's goals in this proceeding that the Commission have the benefit of the input from a broad range of participants in the computer industry on the fundamental technical issues that will determine the future of ATV. BSA believes that computer industry input can be particularly helpful to the Commission in analyzing such issues as its technical options for promoting robust data delivery and high image fidelity. ¹ Advanced Television Systems and Their Impact Upon the Existing Television Broadcast Service, FCC 95-315, MM Docket No. 87-268, released August 9, 1995 ("ATV Fourth NPRM"). On October 11, 1995, the Commission granted parties an extension of time until January 12, 1996, in which to file reply comments in this proceeding. On January 11, 1996, the Commission further extended the deadline for reply comments until January 22, 1996.

Compatible Television System with Companding Of

(19) European Patent Office, Office européen des brevets EP 0 377 668 B2 (12) NEW EUROPEAN PATENT SPECIFICATION (45) Date of publication and mention of the opposition decision: 09.07.1997 Bulletin 1997/28 (45) Mention of the grant of the patent: 08.06.1994 Bulletin 1994/23 (21) Application number: 88908846.4 (22) Date of filing: 09.09.1988 (51) Int. Cl.6: H04N 11/00, H04N 7/00 (86) International application number: PCT/US88/03015 (87) International publication number: WO 89/02689 (23.03.1989 Gazette 1989/07) (54) COMPATIBLE TELEVISION SYSTEM WITH COMPANDING OF AUXILIARY SIGNAL ENCODING INFORMATION; KOMPATIBLES FERNSEHSYSTEM MIT KOMPANDIERUNG VON HILFSIGNAL KODIERTER INFORMATION; SYSTEME COMPATIBLE DE TELEVISION A COMPRESSION D'INFORMATIONS DE CODAGE DE SIGNAUX AUXILIAIRES (84) Designated Contracting States: AT DE FR IT SE (30) Priority: 14.09.1987 GB 8721565, 29.12.1987 US 139339 (43) Date of publication of application: 18.07.1990 Bulletin 1990/29 (73) Proprietor: GENERAL ELECTRIC COMPANY, Princeton, New Jersey 08540 (US) (72) Inventor: FUHRER, Jack, Selig, Princeton Junction, NJ 08550 (US) (74) Representative: Powell, Stephen David et al (56) References cited: EP-A- 0 213 911, DE-A- 3 244 524, DE-A- 3 427 668, GB-A- 2 115 640 • IEEE Transactions on Communications, volume COM-31, no. 11, November 1983, IEEE, (New York, US), R.L. Schmidt et al.: "Transmission of two NTSC color television signals over a single satellite transponder via time-frequency multiplexing", pages 1257-1266, see page 1260, left-hand column, line 4 - page 1260, right-hand column, line 10; page 1261, left-hand column, line 59 - page 1262, right-hand column, line 16; page 1265, left-hand column, lines 5-8; figures 6-9 • Patent Abstracts of Japan, volume 10, no.

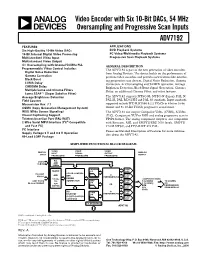

PDF 652 Kb ADV7192: Video Encoder with Six 10-Bit

Video Encoder with Six 10-Bit DACs, 54 MHz Oversampling and Progressive Scan Inputs ADV7192 FEATURES Six High-Quality 10-Bit Video DACs • 10-Bit Internal Digital Video Processing • Multistandard Video Input • Multistandard Video Output • 4× Oversampling with Internal 54 MHz PLL • Programmable Video Control Includes: Digital Noise Reduction, Gamma Correction, Black Burst, LUMA Delay, CHROMA Delay • Multiple Luma and Chroma Filters • Luma SSAF™ (Super Subalias Filter) • Average Brightness Detection • Field Counter • Macrovision Rev. 7.1 • CGMS (Copy Generation Management System) • WSS (Wide Screen Signaling) • Closed Captioning Support • Teletext Insertion Port (PAL-WST) • 2-Wire Serial MPU Interface (I2C®-Compatible and Fast I2C) APPLICATIONS DVD Playback Systems • PC Video/Multimedia Playback Systems • Progressive Scan Playback Systems GENERAL DESCRIPTION The ADV7192 is part of the new generation of video encoders from Analog Devices. The device builds on the performance of previous video encoders and provides new features like interfacing progressive scan devices, Digital Noise Reduction, Gamma Correction, 4× Oversampling and 54 MHz operation, Average Brightness Detection, Black Burst Signal Generation, Chroma Delay, an additional Chroma Filter, and other features. The ADV7192 supports NTSC-M, NTSC-N (Japan), PAL N, PAL M, PAL-B/D/G/H/I and PAL-60 standards. Input standards supported include ITU-R.BT656 4:2:2 YCrCb in 8-bit or 16-bit format and 3 × 10-Bit YCrCb progressive scan format. The ADV7192 can output Composite Video (CVBS), S-Video (Y/C), Component YUV or RGB and analog progressive scan in YPrPb format. The analog component output is also compatible with Betacam, MII, and SMPTE/EBU N10 levels, SMPTE 170 M NTSC, and ITU–R.BT 470 PAL.

EBU Tech 3315-2006 Archiving: Experiences with TK Transfer to Digital

EBU – TECH 3315 Archiving: Experiences with telecine transfer of film to digital formats Source: P/HDTP Status: Report Geneva April 2006 Contents: Introduction • Decisions on Scanning Format • Scanning tests • The Results • Observations of the influence of elements of film by specialists • Observations on the results of the formal evaluations • Overall conclusions • APPENDIX: Details of the Tests and Results