中国科技论文在线 Model Checking Go

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Drago Document

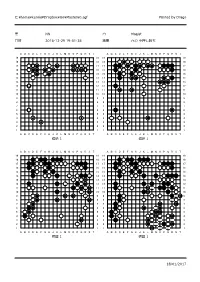

C:\home\kuroki\Dropbox\Go\Master60.sgf, printed by Drago (printout dated 18/01/2017). Game record 1: Black NN, White Magist; date 2016-12-29 19:01:34; result: White wins by resignation. [Board diagrams on a 19×19 grid, columns A to T, rows 1 to 19, showing moves 1–60, 61–120, and 121–146.] Next game record: Black NN, White Magist; date 2016-12-29 19:20:57; result: White wins by resignation. -

WIPO-WTO Colloquium Papers: Research Papers from the WIPO-WTO Colloquium for Teachers of Intellectual Property Law 2018

WIPO-WTO COLLOQUIUM PAPERS: RESEARCH PAPERS FROM THE 2018 WIPO-WTO COLLOQUIUM FOR TEACHERS OF INTELLECTUAL PROPERTY LAW. World Trade Organization (WTO), Rue de Lausanne 154, CH-1211 Geneva 21, Switzerland. Telephone: +4122 739 51 14. Fax: +4122 739 57 90. Email: [email protected]. www.wto.org. World Intellectual Property Organization (WIPO), 34, chemin des Colombettes, P.O. Box 18, CH-1211 Geneva 20, Switzerland. Telephone: +4122 338 91 11. Fax: +4122 733 54 28. www.wipo.int. Compiled by the WIPO Academy and the WTO Intellectual Property, Government Procurement and Competition Division. DISCLAIMER: The views and opinions expressed in this compilation are those of the individual authors of each article. They do not necessarily reflect the positions of the organizations cooperating on this publication. In particular, no views or legal analysis included in these papers should be attributed to WIPO or the WTO, or to their respective Secretariats. http://www.wto.org/index.htm © 2019 Copyright in this compilation is jointly owned by the World Intellectual Property Organization (WIPO) and the World Trade Organization (WTO). The contributing authors retain copyright in their individual works. ISBN 978-92-870-4418-1. EDITORS: Irene Calboli, Lee Chedister, Megan Pharis, Victoria Gonzales, Harrison Davis, John Yoon, and Carolina Tobar Zarate, Ali Akbar Modabber and Nishant Anurag (Copy Editor). EDITORIAL BOARD: Mr Frederick -

Mandarin Chinese 2

Mandarin Chinese 2: Reading Booklet & Culture Notes. Travelers should always check with their nation’s State Department for current advisories on local conditions before traveling abroad. Booklet Design: Maia Kennedy. © and ℗ Recorded Program 2002 Simon & Schuster, Inc. © Reading Booklet 2016 Simon & Schuster, Inc. Pimsleur® is an imprint of Simon & Schuster Audio, a division of Simon & Schuster, Inc. Mfg. in USA. All rights reserved. ACKNOWLEDGMENTS. VOICES, Audio Program: English-Speaking Instructor: Ray Brown; Mandarin-Speaking Instructor: Qing Rao; Female Mandarin Speaker: Mei Ling Diep; Male Mandarin Speaker: Yaohua Shi. VOICES, Reading Lessons: Female Mandarin Speaker: Xinxing Yang; Male Mandarin Speaker: Jay Jiang. AUDIO PROGRAM COURSE WRITERS: Yaohua Shi, Christopher J. Gainty. READING LESSON WRITERS: Xinxing Yang, Elizabeth Horber. REVIEWER: Zhijie Jia. EDITORS: Joan Schoellner, Beverly D. Heinle. PRODUCER & DIRECTOR: Sarah H. McInnis. RECORDING ENGINEERS: Peter S. Turpin, Kelly Saux. Simon & Schuster Studios, Concord, MA. Table of Contents. Introduction: Mandarin; Pictographs; Traditional and Simplified Script; Pinyin Transliteration; Readings; Tonality; Tone Change or Tone Sandhi -

Pimsleur® Mandarin II

RECORDED BOOKS™ PRESENTS PIMSLEUR® LANGUAGE PROGRAMS: MANDARIN II SUPPLEMENTAL READING BOOKLET. TABLE OF CONTENTS (Notes). Unit 1: Regional Accents; Particles. Unit 2: Dining Out; Chengde. Unit 3: Alcoholic Beverages; Friends and Family. Unit 4: Teahouses; Women in the Workforce. Unit 5: Travel in China by Train and Plane. Unit 6: Stores and Shopping; Taiwan. Unit 7: Days and Months. Unit 8: Movies; Popular Entertainment. Unit 9: Beijing Opera. Unit 10: Business Travel; Coffee. Unit 11: Holidays and Leisure Time; Chinese Pastries; Modesty and Politeness. Unit 12: Hangzhou; Suzhou. Unit 13: Combating the Summer Heat; Forms of Address. Unit 14: Travel outside China; The “Three Links”. Unit 15: Personal Questions; China’s One Child Policy. Unit 16: The Phone System; Banks. Unit 17: Zhongguo; Measurements. Unit 18: Chinese Students Abroad. Unit 19: Medical Care; Traditional Chinese Medicine. Unit 20: E-mail and Internet Cafés; Shanghai. Unit 21: The Postal System; Chinese Word Order. Unit 22: Sports and Board Games. Unit 23: Getting Around. Unit 24: Parks. Unit 25: Currency. Unit 26: Feng shui. Unit 27: Qingdao; Beijing. Unit 28: Boat Travel. Unit 29: Nanjing; Tianjin. Unit 30: English in China. Acknowledgments. Mandarin II, Unit 1, Regional Accents: Mandarin, China’s standard spoken language, is taught in schools throughout Mainland China and Taiwan. -

Issue 6/2018, Volume 93

Issue 6/2018, Volume 93. DGoZ 6/2018. Contents: Cover: Go Autumn (photo: Ola Sundberg, gobutiken.se); Foreword, Contents, News; Tournament Reports; International Pair Go Tournament 2018; The North Thinks Laterally; Children's Page(s); Foreword to the Delegates' Assembly Report; Report from the Delegates' Assembly; Half Past Ten in the Morning in Wandsbek; Review: Level Up; Yoon Young Sun Comments (43); The Annotated Bundesliga Game; Cups; Breakthrough to 12 Kyu (7). Foreword: An autumn cover picture in the middle of winter? Unfortunately, I only discovered the photo by Ola Sundberg printed here on Facebook last November, but I asked him for permission to print it right away. After that, I did not want to wait a whole year before presenting this beautiful image to DGoZ readers. In addition, the next autumn issue, 5/2019, will be the 100th DGoZ issue under my responsibility (1-5/1988 + 1/2004 to 5/2019 = 100), and I would like to keep the cover image for that issue open. So I took Ola's photo for this issue, and after all, at least for now, the weather in Germany is still more autumnal than wintry, isn't it? A large block of content in this issue is the coverage of the Delegates' Assembly -

Beyond the Lyric: Expanding the Landscape of Early and High Tang Literature, by Xiaojing Miao (B.A., Minzu University of China, 2011; M.A., Minzu University of China, 2014)

BEYOND THE LYRIC: EXPANDING THE LANDSCAPE OF EARLY AND HIGH TANG LITERATURE, by XIAOJING MIAO. B.A., Minzu University of China, 2011; M.A., Minzu University of China, 2014. A thesis submitted to the Faculty of the Graduate School of the University of Colorado in partial fulfillment of the requirement for the degree of Doctor of Philosophy, Department of Asian Languages and Civilizations, 2019. This thesis entitled: Beyond the Lyric: Expanding the Landscape of Early and High Tang Literature, written by Xiaojing Miao, has been approved for the Department of Asian Languages and Civilizations. Dr. Paul W. Kroll, Professor of Chinese, Committee Chair; Dr. Antje Richter, Associate Professor of Chinese; Dr. Ding Xiang Warner, Professor of Chinese; Dr. Matthias L. Richter, Associate Professor of Chinese; Dr. Katherine Alexander, Assistant Professor of Chinese; Dr. David Atherton, Assistant Professor of Japanese. Date. The final copy of this thesis has been examined by the signatories, and we find that both the content and the form meet acceptable presentation standards of scholarly work in the above mentioned discipline. Miao, Xiaojing (Ph.D., Asian Languages and Civilizations). Beyond the Lyric: Expanding the Landscape of Early and High Tang Literature. Thesis directed by Professor Paul W. Kroll. This dissertation investigates what Tang (618-907) literature was in its own time, as opposed to how it has been constructed at later times and for different critical purposes. The core of this dissertation is to diversify and complicate our understanding of Tang literature, including Tang poetry, from the perspective of self-(re)presentation, and by bringing out certain genres, works, and literati that have been overlooked. -

Issue 5/2015, Volume 90

Issue 5/2015, Volume 90. DGoZ 5/2015. Contents: Cover: The Go Annunciation (after Leonardo); Foreword, Contents, Capture and Rescue, News; Tournament Reports; Go Livestreaming; First-hand Report on "Ostasien hautnah" (East Asia Up Close); Review of "Ostasien hautnah"; Advertisement: Omikron Data Quality GmbH; Cups; Children's Page; Yoon Young Sun Comments (27); Weiqi TV Interview; The Somewhat Different Move (11); Imprint; Far East News; Go Problems. Foreword: This issue is packed with reports, including two each on the HPM in Berlin and on the "Ostasien hautnah" project, along with an article on Go livestreaming and an interview with one of the founders of WeiqiTV. There is plenty of exciting reading! In the next issue, the German Individual Go Championship will take up a lot of space, with the preliminary and final rounds, both of which are being held in the fourth quarter of the year for the first time. Readers can also look forward to a long travel report by Thomas Pittner, who accompanied his son Arved to Asia … Tobias Berben. Tag der Sachsen in Wurzen: For the third time in a row, AdYouKi e.V., led by Janine Böhme, took part with a Go stand in the Tag der Sachsen, which from 4 to 6 September in Wurzen -

AGA AGM Officer Reports and Financials

Australian Go Association Inc. Sunday, 24 November 2019. AGA Vice-President’s Report for 2018/19. The 2019 World Amateur Go Championship was held in Matsue, Japan, in May. Our player was Cary Jin; Cary had 4 wins out of 8, a creditable result given it was a particularly strong field this year. Ken Xie from New Zealand played particularly well with 5 wins, placing 13th. The 2019 Korean Prime Ministers Cup was held in Yeongwol, Korea, in September. Tony Purcell was the Australian player, attaining 3 wins out of 6 and narrowly losing a couple of very close games. Tony stayed on in Korea for an extra week to participate in the An-Dong Baduk Festival. The 2019 World Student Pair Go Championship, for university-level students, is being held in Tokyo in December. Our selected team was Amy Song and Daniel Li. Unfortunately, Daniel has had to withdraw, but luckily Aaron Chen was able to come to our rescue to partner Amy. Next year two events are of particular interest. The WAGC will be held in Vladivostok, Russia, only the second time the WAGC has been held outside Japan, China or Korea. And to celebrate the 2020 Olympics in Japan, the Nihon Kiin is planning to hold a World Women’s Amateur Go Championship, which will hopefully become a regular event. As we will probably also be invited to participate in the World Pair Go Championship (as well as the Student version), we urgently need to encourage more women players! Neville Smythe, Vice-President, AGA Inc. Australian Go Association Inc. -

Challenge Match Game 2: “Invention”

Challenge Match, 8-15 March 2016. Game 2: “Invention”. Commentary by Fan Hui. Go expert analysis by Gu Li and Zhou Ruiyang. Translated by Lucas Baker, Thomas Hubert, and Thore Graepel. Invention: AlphaGo's victory in the first game stunned the world. Many Go players, however, found the result very difficult to accept. Not only had Lee's play in the first game fallen short of his usual standards, but AlphaGo had not even needed to play any spectacular moves to win. Perhaps the first game was a fluke? Though they proclaimed it less stridently than before, the overwhelming majority of commentators were still betting on Lee to claim victory. Reporters arrived in much greater numbers that morning, and with the increased attention from the media, the pressure on Lee rose. After all, the match had begun with everyone expecting Lee to win either 5-0 or 4-1. I entered the playing room fifteen minutes before the game to find Demis Hassabis already present, looking much more relaxed than the day before. Four minutes before the starting time, Lee came in with his daughter. Perhaps he felt that she would bring him luck? As a father myself, I know that feeling well. By convention, the media is allowed a few minutes to take pictures at the start of a major game. The room was much fuller this time, another reflection of the increased focus on the match. Today, AlphaGo would take Black, and everyone was eager to see what opening it would choose. Whatever it played would represent what AlphaGo believed to be best for Black. -

Hybrid Intelligence: to Automate Or Not to Automate, That Is the Question

ISSN (print):2182-7796, ISSN (online):2182-7788, ISSN (cd-rom):2182-780X Available online at www.sciencesphere.org/ijispm Hybrid Intelligence: to automate or not to automate, that is the question Wil M.P. van der Aalst RWTH Aachen University Viewpoint Fraunhofer-Institut für Angewandte Informationstechnik Ahornstr. 55, D-52074, Aachen, Germany [email protected] Abstract: There used to be a clear separation between tasks done by machines and tasks done by people. Applications of machine learning in speech recognition (e.g., Alexa and Siri), image recognition, automated translation, autonomous driving, and medical diagnosis, have blurred the classical divide between human tasks and machine tasks. Although current Artificial Intelligence (AI) and Machine Learning (ML) technologies outperform humans in many areas, tasks requiring common sense, contextual knowledge, creativity, adaptivity, and empathy are still best performed by humans. Hybrid Intelligence (HI) blends human intelligence and machine intelligence to combine the best of both worlds. Hence, current and future Business Process Management (BPM) initiatives need to consider HI and the changing boundaries between work done by people and work done by software robots. Consider, for example, the success of Robotic Process Automation (RPA), which demonstrates that gradually taking away repetitive tasks from workers is possible. In this viewpoint paper, we argue that process mining is a key technology to decide what to automate and what not. Moreover, using process mining, it is possible to systematically monitor and manage processes where work is distributed over human workers and software robots. Keywords: Hybrid intelligence; data science; process science; machine learning; business process management. -

BEL GO 89: Bulletin of the Belgian Go Federation

BEL GO • 89. Bulletin of the Belgian Go Federation (Fédération Belge de Go a.s.b.l. / Belgische Go Federatie v.z.w.). January to April 2017. Responsible publisher: Thomas Connor, 213 avenue du Roi, 1190 Forest. [Problem diagram: Black to play and kill.] President: Joost Vannieuwenhuyse [email protected]. Secretary: Thomas Connor [email protected]. Treasurer: Jan Ramon [email protected]. Board members: Catherine Fricheteau, Jean-Denis Hennebert, Michael Silcher, Philippe Tranchida. Contact: [email protected] [email protected] www.gofed.be. Editorial team: Thomas Connor, Catherine Fricheteau, Jean-Denis Hennebert. Proofreading: Juliette Brault, Marie David, Olivier Drouot, Laurens Teirlinck. Translation: Alistair Fronhoffs, Nelis Vets. This document was produced with the free software LaTeX and uses the igo package, available at http://www.ctan.org/pkg/igo. The .sgf files were exported to .tex format with GoWrite 2, available at http://gowrite.net. Printed in October 2017 by AJM Print-Shop, Brussels. Table of contents: Editorial; News from the European scene, May to August; Review of the second four-month period of 2017 (May to August) among the pros; Forty years of European ratings: a dive into the history of Go in Europe; KPMC 2017; Commented game: François -

(Link to Korean Version) Third-Party Comments

English version. Korean version (link to Korean version). Third-party comments. FINAL VERSION (PLEASE LET EMILY OR LOIS KNOW FOR FURTHER CHANGE). Release: 9am GMT Monday 22nd February. English version: Details announced for Google DeepMind Challenge Match. Landmark five-match tournament between the Grand Master-defeating Go computer program, AlphaGo, and best human player of the last decade, Lee Sedol, will be held from March 9 to March 15 at the Four Seasons Hotel Seoul. February 22, 2016 (Seoul, South Korea): Following breakthrough research on AlphaGo, the first computer program to beat a professional human Go player, Google DeepMind has announced details of the ultimate challenge to play the legendary Lee Sedol, the top Go player in the world over the past decade. AlphaGo will play Lee Sedol in a five-game challenge match to be held from Wednesday, March 9 to Tuesday, March 15 in Seoul, South Korea. The games will be even (no handicap), with $1 million USD in prize money for the winner. If AlphaGo wins, the prize money will be donated to UNICEF, STEM and Go charities. Regarded as the outstanding grand challenge for artificial intelligence, Go has been viewed as one of the hardest games for computers to master due to its sheer complexity, which makes brute-force exhaustive search intractable (for example, there are more possible board configurations than the number of atoms in the universe). DeepMind released details of the breakthrough in a paper published in the scientific journal Nature last month. The matches will be held at the Four Seasons Hotel Seoul, starting at 1pm local time (4am GMT; day before 11pm ET, 8pm PT) on the following days: 1.