Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Kevin Koga CS465 9/5/08 Final Project Proposal (First Draft, First Idea)

Inspiration: The idea for my project was inspired by Guitar Hero. In Guitar Hero, players press buttons in time with a visual display of corresponding buttons and an audio track. Correctly pressing buttons scores you points, and you hear the guitar portion of the audio track. As someone who can actually read music, I always found Guitar Hero too easy. Obviously the game is designed so that anyone can play it, even if they've never seen written music before. I was thinking about how Guitar Hero was eventually expanded to make Rock Band, and about how revolutionary those games have been. Then my idea occurred to me.

Idea: When people learn to sight-sing music, the only feedback they have is their own ear. If you sing the wrong note, it sounds wrong. With the voice, there is no fixed set of discrete notes: no fingerings like on a trumpet, no frets like on a guitar. With voice, as with fretless string instruments such as violin or cello, you could be missing the note by only a quarter-step or less. To a trained ear, this makes a big difference. So I thought, why not add visual feedback? A microphone could be connected to a computer measuring frequency data in real time. The frequency or pitch of the note played or sung could move a cursor on the screen up or down. The cursor could sit atop a musical staff and point to the current note being played.
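The pitch-to-cursor mapping described in the proposal can be illustrated with a short sketch. This is not part of the proposal itself: it assumes twelve-tone equal temperament with A4 tuned to 440 Hz, and it assumes that some pitch detector (not shown) has already turned the microphone signal into a frequency in hertz. The function name `nearest_note` and the note-name table are hypothetical choices made for illustration.

```python
import math

# Note names within one octave, starting at C (sharps only, for simplicity).
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def nearest_note(freq_hz, a4_hz=440.0):
    """Return (note_name, cents_off) for a detected frequency.

    Assumes equal temperament; a4_hz is the reference tuning for A4.
    cents_off is positive when the input is sharp, negative when flat.
    """
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    # Fractional MIDI note number: 69 is A4, each semitone adds 1.
    midi = 69 + 12 * math.log2(freq_hz / a4_hz)
    nearest = round(midi)
    # Distance from the nearest semitone, in cents (100 cents per semitone).
    cents_off = 100 * (midi - nearest)
    name = NOTE_NAMES[nearest % 12] + str(nearest // 12 - 1)
    return name, cents_off


if __name__ == "__main__":
    # A singer slightly sharp of A4: the cursor would sit just above the A line.
    sharp_a = 440.0 * 2 ** (0.3 / 12)
    print(nearest_note(440.0))
    print(nearest_note(sharp_a))
```

A display could use the note name to pick the staff position and the cents value to nudge the cursor above or below it, which is the kind of sub-semitone feedback the proposal has in mind.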

UPC Platform Publisher Title Price Available 730865001347

| UPC | Platform | Publisher | Title | Price | Available |
| --- | --- | --- | --- | --- | --- |
| 730865001347 | PlayStation 3 | Atlus | 3D Dot Game Heroes PS3 | $16.00 | 52 |
| 722674110402 | PlayStation 3 | Namco Bandai | Ace Combat: Assault Horizon PS3 | $21.00 | 2 |
| 853490002678 | PlayStation 3 | Other Publishers | Air Conflicts: Secret Wars PS3 | $14.00 | 37 |
| 014633098587 | PlayStation 3 | Electronic Arts | Alice: Madness Returns PS3 | $16.50 | 60 |
| 010086690682 | PlayStation 3 | Sega | Aliens Colonial Marines (Portuguese) PS3 | $47.50 | 100+ |
| 010086690675 | PlayStation 3 | Sega | Aliens Colonial Marines (Spanish) PS3 | $47.50 | 100+ |
| 010086690637 | PlayStation 3 | Sega | Aliens Colonial Marines Collector's Edition PS3 | $76.00 | 9 |
| 010086690170 | PlayStation 3 | Sega | Aliens Colonial Marines PS3 | $50.00 | 92 |
| 010086690194 | PlayStation 3 | Sega | Alpha Protocol PS3 | $14.00 | 14 |
| 047875843479 | PlayStation 3 | Activision | Amazing Spider-Man PS3 | $39.00 | 100+ |
| 010086690545 | PlayStation 3 | Sega | Anarchy Reigns PS3 | $24.00 | 100+ |
| 722674110525 | PlayStation 3 | Namco Bandai | Armored Core V PS3 | $23.00 | 100+ |
| 014633157147 | PlayStation 3 | Electronic Arts | Army of Two: The 40th Day PS3 | $16.00 | 61 |
| 008888345343 | PlayStation 3 | Ubisoft | Assassin's Creed II PS3 | $15.00 | 100+ |
| 008888397717 | PlayStation 3 | Ubisoft | Assassin's Creed III Limited Edition PS3 | $116.00 | 4 |
| 008888347231 | PlayStation 3 | Ubisoft | Assassin's Creed III PS3 | $47.50 | 100+ |
| 008888343394 | PlayStation 3 | Ubisoft | Assassin's Creed PS3 | $14.00 | 100+ |
| 008888346258 | PlayStation 3 | Ubisoft | Assassin's Creed: Brotherhood PS3 | $16.00 | 100+ |
| 008888356844 | PlayStation 3 | Ubisoft | Assassin's Creed: Revelations PS3 | $22.50 | 100+ |
| 013388340446 | PlayStation 3 | Capcom | Asura's Wrath PS3 | $16.00 | 55 |
| 008888345435 | | | | | |

654614 R52164ef1ecb53.Pdf

Christiane Jost

Date of Birth: June 16th, 1965

Contact Information: [email protected] +55 (21) 8040-9673 Skype: chrjost ProZ.com profile: http://www.proz.com/profile/654614

Location: Teresópolis-RJ/Brazil

Language pairs: English/Brazilian Portuguese, Brazilian Portuguese/English

CAT Tools: MemoQ, TRADOS/SDL, Passolo, Idiom, Transit, IBM TM, POEdit

Qualifications/Experience: Certified in 1981 by the Brazilian Ministry of Education and Culture as Translator/Interpreter. AutoCAD training, with 6 years' working experience. 25 years of experience in the computer/networking areas, during which period I've worked for the Brazilian Government as a programmer/systems analyst/Wireless and Cabled Network Designer. 3 years of Physics major degree at the University of Brasilia, as well as 1 year of a History minor degree at the same University. In-house experience as Lead Linguist for Alpha CRC Brasil for the localization of Blizzard’s World of Warcraft and expansions, Jun 2010~Apr 2011. Founder/Owner (since Dec/2008) and full time translator/linguist (since April/2011) at TranslaCAT (www.translacat.com).

Translation/Localization Experience: Engineering/Misc projects

• Translation of the Sahana Project - Disaster Management FOSS (www.sahana.lk).

• Translation of HR training manuals (main end clients: Abbott Inc., American Express, Quality Safety Edge, Johnson Controls, IBM)

• Translation and software localization for the Billing and Metering Tool (including user manual).

• Translation of the service and owner manuals for several GM/Chevrolet car

From an Ethnic Island to a Transnational Bubble: a Reflection on Korean Americans in Los Angeles

Asian and Asian American Studies Faculty Works, Asian and Asian American Studies, 2012

From an Ethnic Island to a Transnational Bubble: A Reflection on Korean Americans in Los Angeles

Edward J.W. Park, Loyola Marymount University, [email protected]

Follow this and additional works at: https://digitalcommons.lmu.edu/aaas_fac

Part of the East Asian Languages and Societies Commons

Recommended Citation: Edward J.W. Park (2012) From an Ethnic Island to a Transnational Bubble: A Reflection on Korean Americans in Los Angeles, Amerasia Journal, 38:1, 43-47.

This Article is brought to you for free and open access by the Asian and Asian American Studies at Digital Commons @ Loyola Marymount University and Loyola Law School. It has been accepted for inclusion in Asian and Asian American Studies Faculty Works by an authorized administrator of Digital Commons@Loyola Marymount University and Loyola Law School. For more information, please contact [email protected].

So much more could be said in reflecting on Sa-I-Gu. My main goal in this brief essay has simply been to limn the ways in which the devastating fires of Sa-I-Gu have produced a loamy and fecund soil for personal discovery, community organizing, political mobilization, and, ultimately, a remaking of what it means to be Korean and Asian in the United States.

EDWARD J.W. PARK is director and professor of Asian Pacific American Studies at Loyola Marymount University in Los Angeles.

Access All Areas? the Evolution of Singstar from the PS2 to PS3 Platform

This may be the author’s version of a work that was submitted/accepted for publication in the following source:

Fletcher, Gordon, Light, Ben, & Ferneley, Elaine (2008) Access all areas? The evolution of SingStar from the PS2 to PS3 platform. In Loader, B (Ed.) Internet Research 9.0: Rethinking Community, Rethinking Space (2008) - 9th Annual Conference of the Association of Internet Researchers. Association of Internet Researchers, Denmark, pp. 1-13.

This file was downloaded from: https://eprints.qut.edu.au/75684/

© Copyright 2008 the authors

This work is covered by copyright. Unless the document is being made available under a Creative Commons Licence, you must assume that re-use is limited to personal use and that permission from the copyright owner must be obtained for all other uses. If the document is available under a Creative Commons License (or other specified license) then refer to the Licence for details of permitted re-use. It is a condition of access that users recognise and abide by the legal requirements associated with these rights. If you believe that this work infringes copyright please provide details by email to [email protected]

Notice: Please note that this document may not be the Version of Record (i.e. published version) of the work. Author manuscript versions (as Submitted for peer review or as Accepted for publication after peer review) can be identified by an absence of publisher branding and/or typeset appearance. If there is any doubt, please refer to the published source.

Access All Areas? The

Animal Crossing

Alice in Wonderland Harry Potter & the Deathly Hallows Adventures of Tintin Part 2 Destroy All Humans: Big Willy Alien Syndrome Harry Potter & the Order of the Unleashed Alvin & the Chipmunks Phoenix Dirt 2 Amazing Spider-Man Harvest Moon: Tree of Tranquility Disney Epic Mickey AMF Bowling Pinbusters Hasbro Family Game Night Disney’s Planes And Then There Were None Hasbro Family Game Night 2 Dodgeball: Pirates vs. Ninjas Angry Birds Star Wars Hasbro Family Game Night 3 Dog Island Animal Crossing: City Folk Heatseeker Donkey Kong Country Returns Ant Bully High School Musical Donkey Kong: Jungle beat Avatar :The Last Airbender Incredible Hulk Dragon Ball Z Budokai Tenkaichi 2 Avatar :The Last Airbender: The Indiana Jones and the Staff of Kings Dragon Quest Swords burning earth Iron Man Dreamworks Super Star Kartz Backyard Baseball 2009 Jenga Driver : San Francisco Backyard Football Jeopardy Elebits Bakugan Battle Brawlers: Defenders of Just Dance Emergency Mayhem the Core Just Dance Summer Party Endless Ocean Barnyard Just Dance 2 Endless Ocean Blue World Battalion Wars 2 Just Dance 3 Epic Mickey 2:Power of Two Battleship Just Dance 4 Excitebots: Trick Racing Beatles Rockband Just Dance 2014 Family Feud 2010 Edition Ben 10 Omniverse Just Dance 2015 Family Game Night 4 Big Brain Academy Just Dance 2017 Fantastic Four: Rise of the Silver Surfer Bigs King of Fighters collection: Orochi FIFA Soccer 09 All-Play Bionicle Heroes Saga FIFA Soccer 12 Black Eyed Peas Experience Kirby’s Epic Yarn FIFA Soccer 13 Blazing Angels Kirby’s Return to Dream -

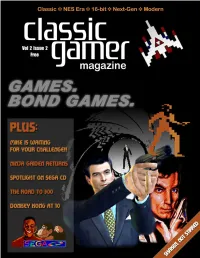

Cgm V2n2.Pdf

Volume 2, Issue 2 July 2004 Table of Contents 8 24 Reset 4 Communist Letters From Space 5 News Roundup 7 Below the Radar 8 The Road to 300 9 Homebrew Reviews 11 13 MAMEusements: Penguin Kun Wars 12 26 Just for QIX: Double Dragon 13 Professor NES 15 Classic Sports Report 16 Classic Advertisement: Agent USA 18 Classic Advertisement: Metal Gear 19 Welcome to the Next Level 20 Donkey Kong Game Boy: Ten Years Later 21 Bitsmack 21 Classic Import: Pulseman 22 21 34 Music Reviews: Sonic Boom & Smashing Live 23 On the Road to Pinball Pete’s 24 Feature: Games. Bond Games. 26 Spy Games 32 Classic Advertisement: Mafat Conspiracy 35 Ninja Gaiden for Xbox Review 36 Two Screens Are Better Than One? 38 Wario Ware, Inc. for GameCube Review 39 23 43 Karaoke Revolution for PS2 Review 41 Age of Mythology for PC Review 43 “An Inside Joke” 44 Deep Thaw: “Moortified” 46 46 Volume 2, Issue 2 July 2004 Editor-in-Chief Chris Cavanaugh [email protected] Managing Editors Scott Marriott [email protected] here were two times a year a kid could always tures a firsthand account of a meeting held at look forward to: Christmas and the last day of an arcade in Ann Arbor, Michigan and the Skyler Miller school. If you played video games, these days writer's initial apprehension of attending. [email protected] T held special significance since you could usu- Also in this issue you may notice our arti- ally count on getting new games for Christmas, cles take a slight shift to the right in the gaming Writers and Contributors while the last day of school meant three uninter- timeline. -

Virtual Animals

Reviews of software, tech toys, video games & web sites January 2007 Issue 82 • Volume 15, No. 1 Adventures In Odyssey and The Great Escape VirtualVirtual AnimalsAnimals Arena Football Babar To The Rescue Backyard Sports Baseball 2007 A Roundup of the Latest Pet Sims (Windows) Bratz: Forever Diamonds (GBA) Bratz: Forever Diamonds (PS2) Camtasia Studio 4 PLUS: The year ahead in Children’s Capt'N Gravity Ranger Program, The Catz (DS) Catz (PC) Interactive Media Charlotte's Web (PC) Children of Mana Coby V.Zon TF-DVD560 Crash Boom Bang! Dance Dance Revolution Disney Mix Disney pix-click Disney pix-max Disney Pixar Cars, The Video Game for Xbox 360 Disney's Kim Possible: What's the Switch Dogz (DS) Dogz (PC) Educorock Español: Rockin' and Hip Hoppin' in Spanish Elebits (Wii) Fullmetal Alchemist: Dual Sympathy GameSnacks: Kids Trivia Hamsterz Life Horsez I SPY Treasure Hunt DVD Game Juka and the Monophonic Menace Kirby: Squeak Squad Leapster: Clifford the Big Red Dog Reading Legend of Zelda, The: Twilight Princess Morton Subotnick's Playing Music Nascar 06 Total Team Control NASCAR: The DVD Board Game New Standard Keyboard Open Season Pet Pals Animal Doctor Phlinx To Go Rampage: Total Destruction (PS2, GameCube) Rampage: Total Destruction (Wii) Rayman: Raving Rabbids (Wii) Scene It? James Bond Collector's Edition Singstar Rocks! SpellingTime.com Star Wars Lethal Alliance Strawberry Shortcake and Her Berry Best Friends Strawberry Shortcake Dance Dance Revolution Strollometer Suite Life of Zack & Cody: Tipton Caper, The Suite Life of Zack & Cody: Tipton Trouble, The Tamagotchi Connection: Corner Shop 2 Pet Pals Animal Doctor by Legacy Interactive, page 24 TheSo, Year it’s January, Ahead the instart Children’s of 2007, and we’reInteractive sitting on theMedia tip of a fresh new year. -

TITLES = (Language: EN Version: 20101018083045

TITLES = http://wiitdb.com (language: EN version: 20101018083045)
010E01 = Wii Backup Disc
DCHJAF = We Cheer: Ohasta Produce ! Gentei Collabo Game Disc
DHHJ8J = Hirano Aya Premium Movie Disc from Suzumiya Haruhi no Gekidou
DHKE18 = Help Wanted: 50 Wacky Jobs (DEMO)
DMHE08 = Monster Hunter Tri Demo
DMHJ08 = Monster Hunter Tri (Demo)
DQAJK2 = Aquarius Baseball
DSFE7U = Muramasa: The Demon Blade (Demo)
DZDE01 = The Legend of Zelda: Twilight Princess (E3 2006 Demo)
R23E52 = Barbie and the Three Musketeers
R23P52 = Barbie and the Three Musketeers
R24J01 = ChibiRobo!
R25EWR = LEGO Harry Potter: Years 14
R25PWR = LEGO Harry Potter: Years 14
R26E5G = Data East Arcade Classics
R27E54 = Dora Saves the Crystal Kingdom
R27X54 = Dora Saves The Crystal Kingdom
R29E52 = NPPL Championship Paintball 2009
R29P52 = Millennium Series Championship Paintball 2009
R2AE7D = Ice Age 2: The Meltdown
R2AP7D = Ice Age 2: The Meltdown
R2AX7D = Ice Age 2: The Meltdown
R2DEEB = Dokapon Kingdom
R2DJEP = Dokapon Kingdom For Wii
R2DPAP = Dokapon Kingdom
R2DPJW = Dokapon Kingdom
R2EJ99 = Fish Eyes Wii
R2FE5G = Freddi Fish: Kelp Seed Mystery
R2FP70 = Freddi Fish: Kelp Seed Mystery
R2GEXJ = Fragile Dreams: Farewell Ruins of the Moon
R2GJAF = Fragile: Sayonara Tsuki no Haikyo
R2GP99 = Fragile Dreams: Farewell Ruins of the Moon
R2HE41 = Petz Horse Club
R2IE69 = Madden NFL 10
R2IP69 = Madden NFL 10
R2JJAF = Taiko no Tatsujin Wii
R2KE54 = Don King Boxing
R2KP54 = Don King Boxing
R2LJMS = Hula Wii: Hura de Hajimeru Bi to Kenkou!!
R2ME20 = M&M's Adventure
R2NE69 = NASCAR Kart Racing

Karaoke Catalog Updated On: 11/01/2019 Sing Online on in English Karaoke Songs

Karaoke catalog Updated on: 11/01/2019 Sing online on www.karafun.com In English Karaoke Songs 'Til Tuesday What Can I Say After I Say I'm Sorry The Old Lamplighter Voices Carry When You're Smiling (The Whole World Smiles With Someday You'll Want Me To Want You (H?D) Planet Earth 1930s Standards That Old Black Magic (Woman Voice) Blackout Heartaches That Old Black Magic (Man Voice) Other Side Cheek to Cheek I Know Why (And So Do You) DUET 10 Years My Romance Aren't You Glad You're You Through The Iris It's Time To Say Aloha (I've Got A Gal In) Kalamazoo 10,000 Maniacs We Gather Together No Love No Nothin' Because The Night Kumbaya Personality 10CC The Last Time I Saw Paris Sunday, Monday Or Always Dreadlock Holiday All The Things You Are This Heart Of Mine I'm Not In Love Smoke Gets In Your Eyes Mister Meadowlark The Things We Do For Love Begin The Beguine 1950s Standards Rubber Bullets I Love A Parade Get Me To The Church On Time Life Is A Minestrone I Love A Parade (short version) Fly Me To The Moon 112 I'm Gonna Sit Right Down And Write Myself A Letter It's Beginning To Look A Lot Like Christmas Cupid Body And Soul Crawdad Song Peaches And Cream Man On The Flying Trapeze Christmas In Killarney 12 Gauge Pennies From Heaven That's Amore Dunkie Butt When My Ship Comes In My Own True Love (Tara's Theme) 12 Stones Yes Sir, That's My Baby Organ Grinder's Swing Far Away About A Quarter To Nine Lullaby Of Birdland Crash Did You Ever See A Dream Walking? Rags To Riches 1800s Standards I Thought About You Something's Gotta Give Home Sweet Home -

Music Games Rock: Rhythm Gaming's Greatest Hits of All Time

“Cementing gaming’s role in music’s evolution, Steinberg has done pop culture a laudable service.” – Nick Catucci, Rolling Stone RHYTHM GAMING’S GREATEST HITS OF ALL TIME By SCOTT STEINBERG Author of Get Rich Playing Games Feat. Martin Mathers and Nadia Oxford Foreword By ALEX RIGOPULOS Co-Creator, Guitar Hero and Rock Band Praise for Music Games Rock “Hits all the right notes—and some you don’t expect. A great account of the music game story so far!” – Mike Snider, Entertainment Reporter, USA Today “An exhaustive compendia. Chocked full of fascinating detail...” – Alex Pham, Technology Reporter, Los Angeles Times “It’ll make you want to celebrate by trashing a gaming unit the way Pete Townshend destroys a guitar.” –Jason Pettigrew, Editor-in-Chief, ALTERNATIVE PRESS “I’ve never seen such a well-collected reference... it serves an important role in letting readers consider all sides of the music and rhythm game debate.” –Masaya Matsuura, Creator, PaRappa the Rapper “A must read for the game-obsessed...” –Jermaine Hall, Editor-in-Chief, VIBE MUSIC GAMES ROCK RHYTHM GAMING’S GREATEST HITS OF ALL TIME SCOTT STEINBERG DEDICATION MUSIC GAMES ROCK: RHYTHM GAMING’S GREATEST HITS OF ALL TIME All Rights Reserved © 2011 by Scott Steinberg “Behind the Music: The Making of Sex ‘N Drugs ‘N Rock ‘N Roll” © 2009 Jon Hare No part of this book may be reproduced or transmitted in any form or by any means – graphic, electronic or mechanical – including photocopying, recording, taping or by any information storage retrieval system, without the written permission of the publisher. -

Es Uggestifs Par L'esrb

Mild Lyrics Mild Suggestive Themes Join the Community at Chansons grossières Joignez-vous à la Communauté au Thèmes légèrement suggestifs Únase a la Comunidad www.konami.com/kraie2 © 2008 Konami Digital Entertainment Co., Inc. “BEMANI”, “DanceDanceRevolution”, “DanceDanceRevolution Online Interactions Not Rated by the ESRB Universe 3” KONAMI is a registered trademark of KONAMI CORPORATION. Microsoft, Xbox, Xbox 360, Xbox LIVE, and the Xbox logos are trademarks of the Microsoft group of companies and are used under license from Microsoft. Les échanges en ligne ne sont pas classés par l’ESRB WARNING Before playing this game, read the Xbox 360® Instruction Manual and any peripheral manuals for important safety and health information. Keep all TABLE OF CONTENTS manuals for future reference. For replacement manuals, see www.xbox.com/support or call Xbox Customer Support. CONTROLS .......................................................................................... 2 MAIN MENU ....................................................................................... 4 Important Health Warning About Playing Video Games PREPARE TO SING ............................................................................ 5 Photosensitive seizures A very small percentage of people may experience a seizure when exposed to certain HOW TO PLAY .................................................................................... 8 visual images, including flashing lights or patterns that may appear in video games. Even people who have no history of seizures