Synthesis Report

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


Ghana 2020 List of Members in Good Standing as at 31st October 2020

CHARTERED INSTITUTE OF ADMINISTRATORS AND MANAGEMENT CONSULTANTS (CIAMC)–GHANA 2020 LIST OF MEMBERS IN GOOD STANDING AS AT 31ST OCTOBER 2020 Name & Place of Work Honorary Fellows: 1. Allotey Robertson Akwei, Public Services Commission 2. Brown Gaisie Albert, Ghana National Fire Service, Headquarters, Accra 3. Fofie Ampadu Janet, Public Services Commission 4. Gabah Michael, Public Services Commission 5. Kannae Lawrence (Dr), Public Services Commission 6. Katsriku Bridget, Public Services Commission 7. Mohammed Ahmed Alhassan, Ghana Police Service 8. Ehunabobrim Prah Agyensaim VI, Industrial & Engineering Services, Accra 9. Nana Kwasi Agyekum-Dwamena, Head of Civil Service 10. Nana Kobina Nketia V, Chief of Essikado-Sekondi 11. Stephen Adei, (Prof.) Pentecost University, Accra 12. Takyiwaa Manuh, (Prof), Accra Professional Fellows: 1. Adjei Kwabena, Kasapreko Company Limited 2. Aheto John B. K. (Prof), Aheto And Associates Limited, Accra 3. Buatsi N. Paul (Prof), International Leadership Foundation, Accra 4. Hammond Paul, Baj Freight and Logistics Limited, Tema 5. O. A. Feyi-Sobanjo (Chief Mrs.), Feyson Company Ltd., Accra 6. O. T. Feyi-Sobanjo (Prof.), Feyson Company Ltd, Accra 7. Okudzeto Sam, Sam Okudzeto and Associates Limited 8. Smith-Aidoo Richard, Smith Richards LLP, Accra 9. Asafo Samuel Mawusi, CIAMC, Accra 10. Baiden Yaa Pokuaa, National Health Insurance Authority, Accra 11. Garr David Kwashie (Dr), LUCAS College, Accra 12. Niboi Bennet Elvis (Rev), Bennet & Bennet Consulting, Takoradi 13. Hammond A.L Sampson, Consultant, Accra 14. Dogbegah Rockson Kwesi, Berock Ventures Ltd, Accra 15. Smile Dzisi, Koforidua Technical University 16. Nicholas Apreh Siaw, Koforidua Technical University 17. Ike Joe Nii Annang Mensah-Livingstone, (Dr.) Koforidua Technical University 18. -

Ghana Gazette

GHANA GAZETTE. Published by Authority. CONTENTS (PAGE): Facility with Long Term Licence … 1236; Facility with Provisional Licence … 201.

HEALTH FACILITIES WITH LONG TERM LICENCE AS AT 12/01/2021 (ACCORDING TO THE HEALTH INSTITUTIONS AND FACILITIES ACT 829, 2011)

| NO | NAME OF FACILITY | TYPE OF FACILITY | TYPE OF LICENCE | REGION | TOWN | DISTRICT | PRACTITIONER IN-CHARGE | DATE OF ISSUE | EXPIRY DATE |
|---|---|---|---|---|---|---|---|---|---|
| 1 | A1 HOSPITAL | PRIMARY HOSPITAL | LONG TERM | ASHANTI | KUMASI | KUMASI METROPOLITAN | DR. THOMAS PRIMUS KPADENOU | 19 June 2019 | 18 June 2022 |
| 2 | ACADEMY CLINIC LIMITED | CLINIC | LONG TERM | ASHANTI | ASOKORE MAMPONG | KUMASI METROPOLITAN | PROF. JOSEPH WOAHEN ACHEAMPONG | 05 October 2018 | 04 October 2021 |
| 3 | ADAB SAB MATERNITY HOME | MATERNITY HOME | LONG TERM | ASHANTI | BOHYEN | KUMASI METRO | MADAM PAULINA NTOW SAKYIBEA | 04 April 2018 | 03 April 2021 |
| 4 | ADIEBEBA HOSPITAL LIMITED | PRIMARY HOSPITAL | LONG-TERM | ASHANTI | ADIEBEBA | KUMASI METROPOLITAN | DR. BEN BLAY OFOSU-BARKO | 07 August 2019 | 06 August 2022 |
| 5 | ADOM MMROSO MATERNITY HOME | HEALTH CENTRE | LONG TERM | ASHANTI | BROFOYEDU-KENYASI | KWABRE | MR. FELIX ATANGA | 23 August 2018 | 22 August 2021 |
| 6 | AFARI COMMUNITY HOSPITAL LIMITED | PRIMARY HOSPITAL | LONG TERM | ASHANTI | AFARI | ATWIMA NWABIAGYA | DR. EMMANUEL MENSAH OSEI | 04 January 2019 | 03 January 2022 |
| 7 | AFRICAN DIASPORA CLINIC & MATERNITY HOME | HEALTH CENTRE | LONG TERM | ASHANTI | ABIREM NEWTOWN | KWABRE DISTRICT | MADAM PATRICIA IJEOMA OGU | 08 March 2019 | 07 March 2022 |
| 8 | AGA HEALTH FOUNDATION | PRIMARY HOSPITAL | LONG TERM | ASHANTI | OBUASI | OBUASI MUNICIPAL | DR. JAMES K. BARNIE-ASENSO | 30 July 2018 | 29 July 2021 |
| 9 | AGAPE MEDICAL CENTRE | PRIMARY HOSPITAL | LONG TERM | ASHANTI | EJISU | EJISU JUABEN MUNICIPAL | DR. JOSEPH YAW MANU | 15 March 2019 | 14 March 2022 |
| 10 | AHMADIYYA MUSLIM MISSION -ASOKORE | PRIMARY HOSPITAL | LONG TERM | ASHANTI | ASOKORE | KUMASI METROPOLITAN | | 30 July 2018 | 29 July 2021 |

AHMADIYYA MUSLIM MISSION HOSPITAL- DR. -

Small and Medium Forest Enterprises in Ghana

Small and medium forest enterprises (SMFEs) serve as the main or additional source of income for more than three million Ghanaians and can be broadly categorised into wood forest products, non-wood forest products and forest services. Many of these SMFEs are informal, untaxed and largely invisible within state forest planning and management. Pressure on the forest resource within Ghana is growing, due to both domestic and international demand for forest products and services. The need to improve the sustainability and livelihood contribution of SMFEs has become a policy priority, both in the search for a legal timber export trade within the Voluntary Partnership Agreement (VPA) linked to the European Union Forest Law Enforcement, Governance and Trade (EU FLEGT) Action Plan, and in the quest to develop a national strategy for Reducing Emissions from Deforestation and Forest Degradation (REDD). This sourcebook aims to shed new light on the multiple SMFE sub-sectors that operate within Ghana and the challenges they face. Chapter one presents some characteristics of SMFEs in Ghana. Chapter two presents information on what goes into establishing a small business, the obligations of small businesses, and the Ghana Government’s initiatives on small enterprises. Chapter three presents profiles of the key SMFE subsectors in Ghana including: akpeteshie (local gin), bamboo and rattan household goods, black pepper, bushmeat, chainsaw lumber, charcoal, chewsticks, cola, community-based ecotourism, essential oils, ginger, honey, medicinal products, mortar and pestles, mushrooms, shea butter, snails, tertiary wood processing and wood carving. -

Managing Traffic Congestion in the Accra Central Market, Ghana

EconStor: Make Your Publications Visible. A Service of ZBW, Leibniz-Informationszentrum Wirtschaft (Leibniz Information Centre for Economics). Agyapong, Frances; Ojo, Thomas Kolawole. Article: Managing traffic congestion in the Accra Central Market, Ghana. Journal of Urban Management. Provided in Cooperation with: Chinese Association of Urban Management (CAUM), Taipei. Suggested Citation: Agyapong, Frances; Ojo, Thomas Kolawole (2018) : Managing traffic congestion in the Accra Central Market, Ghana, Journal of Urban Management, ISSN 2226-5856, Elsevier, Amsterdam, Vol. 7, Iss. 2, pp. 85-96, http://dx.doi.org/10.1016/j.jum.2018.04.002 This Version is available at: http://hdl.handle.net/10419/194440 Terms of use: Documents in EconStor may be saved and copied for your personal and scholarly purposes. You are not to copy documents for public or commercial purposes, to exhibit the documents publicly, to make them publicly available on the internet, or to distribute or otherwise use the documents in public. If the documents have been made available under an Open Content licence (in particular a CC licence), then, in deviation from these terms of use, -

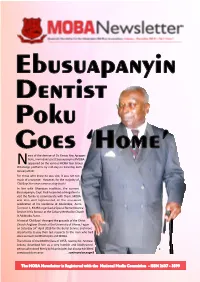

MOBA Newsletter 1 Jan-Dec 2019 1 260420 1 Bitmap.Cdr

Ebusuapanyin Dentist Poku Goes ‘Home’. News of the demise of Dr. Francis Yaw Apiagyei Poku, immediate past Ebusuapanyin of MOBA, appeared on the various MOBA Year Group WhatsApp platforms by mid-day on Saturday 26th January 2019. For those who knew he was sick, it was not too much of a surprise. However, for the majority of 'Old Boys' the news came as a big shock! In line with Ghanaian tradition, the current Ebusuapanyin, Capt. Paul Forjoe, led a delegation to visit the family to commiserate with them. MOBA was also well represented at the one-week celebration at his residence at Abelenkpe, Accra. To crown it, MOBA organised a Special Remembrance Service in his honour at the Calvary Methodist Church in Adabraka, Accra. A host of 'Old Boys' thronged the grounds of the Christ Church Anglican Church at the University of Ghana, Legon on Saturday 24th April 2019 for the Burial Service and, more importantly, to pay their last respects to the man who had done so much for Mfantsipim and MOBA. The tribute of the MOBA Class of 1955, read by Dr. Andrew Arkutu, described him as a very humble and kindhearted person who stood firmly by his principles but always exhibited a composed demeanor. The MOBA Newsletter is Registered with the National Media Commission - ISSN 2637 - 3599. Inside this Issue... Comments: Editorial: ‘Old Boys’ - Let’s Up Our Game; From Ebusuapanyin’s Desk. Cover Story: Dr. Poku Goes Home; MOBA Honours Dentist Poku. From the School: News from the Hill. MOBA Matters: MOBA Elections; Facelift Campaign Contributors; 2019 MOBA Events. 144th Anniversary: Mfantsipim Celebrates 144th Anniversary; 2019 SYGs Project - Staff Apartments. Articles: Reading, O Reading! Were Art Thou Gone?; 2019 Which Growth? Lifestyle: Journey from Anumle to Kotokuraba. Reminiscences -

Prevalence of Onchocerciasis and Associated Clinical Manifestations in Selected Hypoendemic Communities in Ghana Following Long

Otabil et al. BMC Infectious Diseases (2019) 19:431, https://doi.org/10.1186/s12879-019-4076-2. RESEARCH ARTICLE, Open Access. Prevalence of onchocerciasis and associated clinical manifestations in selected hypoendemic communities in Ghana following long-term administration of ivermectin. Kenneth Bentum Otabil, Samuel Fosu Gyasi, Esi Awuah, Daniels Obeng-Ofori, Robert Junior Atta-Nyarko, Dominic Andoh, Beatrice Conduah, Lawrence Agbenyikey, Philip Aseidu, Comfort Blessing Ankrah, Abdul Razak Nuhu and H. D. F. H. Schallig. Abstract. Background: Onchocerciasis is a neglected tropical disease which is still of major public health concern in several areas of Africa and the Americas. The disease manifests either as ocular or as dermal onchocerciasis with several symptoms including itching, nodules, skin thickening, visual impairment and blindness. Ivermectin has been an efficient microfilaricide against the causative agent of the disease (Onchocerca volvulus) but reports from some areas in Africa suggest the development of resistance to this drug. The aim of this study was to determine the prevalence of onchocerciasis and of the clinical conditions frequently associated with the disease in three endemic communities in Ghana which have been subjected to 18 to 20 rounds of mass drug administration of ivermectin. This was to help determine whether or not onchocerciasis persists in these communities. Methods: A cross-sectional study design was adopted. Three communities (Tanfiano, Senya and Kokompe) in the Nkoranza North District of Ghana where mass drug administration of ivermectin had been ongoing for more than two decades were selected for the study. The population was randomly sampled and 114 participants recruited for the study based on the eligibility criteria. -

2009 Budget.Pdf

REPUBLIC OF GHANA. THE BUDGET STATEMENT AND ECONOMIC POLICY of the GOVERNMENT OF GHANA for the 2009 FINANCIAL YEAR, presented to PARLIAMENT on Thursday, 5th March, 2009 by Dr. Kwabena Duffuor, MINISTER OF FINANCE AND ECONOMIC PLANNING, on the authority of H. E. John Evans Atta Mills, PRESIDENT OF THE REPUBLIC OF GHANA. Investing in A BETTER GHANA. For copies of the statement, please contact the Public Relations Office of the Ministry: Ministry of Finance and Economic Planning, Public Relations Office (Room 303 or 350), P.O. Box MB 40, Accra, Ghana. The 2009 Budget Statement and Economic Policies of the Government is also available on the internet at: www.mofep.gov.gh ACRONYMS AND ABBREVIATIONS: 3G: Third Generation; ADR: Alternate Dispute Resolution; AEAs: Agricultural Extension Agents; AFSAP: Agriculture Finance Strategy and Action Plan; APR: Annual Progress Report; APRM: African Peer Review Mechanism; ART: Anti-Retroviral Therapy; ASF: African Swine Fever; ATM: Average Term to Maturity; AU: African Union; BECE: Basic Education Certificate Examination; BoG: Bank of Ghana; BOST: Bulk Oil Storage and Transportation; BPO: Business Process Outsourcing; CAHWs: Community Animal Health Workers; CBD: Central Business District; CBPP: Contagious Bovine Pleuropneumonia; CCE: Craft Certificate Examination; CDD: Centre for Democratic Development; CEDAW: Convention on the Elimination of All forms of Discrimination Against Women; CEDECOM: Central Regional Development Commission; CEPA: Centre for Policy Analysis; CEPS: Customs Excise and Preventive Service; CFMP: Community -

Mining Community Benefits in Ghana: a Case of Unrealized Potential

Mining Community Benefits in Ghana: A Case of Unrealized Potential. Andy Hira and James Busumtwi-Sam, Simon Fraser University, [email protected], [email protected]. A project funded by the Canadian International Resources and Development Institute (all opinions are those of the authors alone). December 18, 2018. TABLE OF CONTENTS: Acknowledgements; List of Abbreviations; Map of Ghana showing location of Mining Communities; Map of Ghana showing major Gold Belts; Executive Summary. Chapter 1 Introduction: 1.1 Overview of the Study; 1.2 Research Methods and Data Collection Activities. Part 1 Political Economy of Mining in Ghana. Chapter 2 Ghana’s Political Economy: 2.1 Society & Economy; 2.2 Modern History & Governance; 2.3 Governance in the Fourth Republic (1993-2018). Chapter 3 Mining in Ghana: 3.1 Overview of Mining in Ghana; 3.2 Mining Governance; 3.3 The Mining Fiscal Regime; 3.4 Distribution of Mining Revenues. Part 2 Literature Review: Issues in Mining Governance. Chapter 4 Monitoring and Evaluation of Community Benefit Agreements: 4.1 Community Benefit Agreements (CBA); 4.2 How Monitoring and Evaluation (M&E) Can Help to Improve CBAs. Chapter 5 Key Governance Issues in Ghana’s Mining Sector: 5.1 Coherence in Mining Policies & Laws/Regulations; 5.2 Mining Revenue Collection; 5.3 Distribution & Use of Mining Revenues; 5.4 Mining Governance Capacity; 5.5 Mining and Human Rights; 5.6 Artisanal & Small Scale Mining and Youth Employment; 5.7 Other Key Issues: Women in Mining, Privatization of Public Services, Land Resettlement, Environmental Degradation -

"National Integration and the Vicissitudes of State Power in Ghana: the Political Incorporation of Likpe, a Border Community, 1945-1986"

"National Integration and the Vicissitudes of State Power in Ghana: The Political Incorporation of Likpe, a Border Community, 1945-1986", by Paul Christopher Nugent. A Thesis Submitted for the Degree of Doctor of Philosophy (Ph.D.), School of Oriental and African Studies, University of London. October 1991. ProQuest Number: 10672604. Published by ProQuest LLC (2017). Copyright of the Dissertation is held by the Author. Abstract: This is a study of the processes through which the former Togoland Trust Territory has come to constitute an integral part of modern Ghana. As the section of the country that was most recently appended, the territory has often seemed the most likely candidate for the eruption of separatist tendencies. The comparative weakness of such tendencies, in spite of economic crisis and governmental failure, deserves closer examination. This study adopts an approach which is local in focus (the area being Likpe), but one which endeavours at every stage to link the analysis to unfolding processes at the Regional and national levels. -

Ghanaian Methodist Spirituality in Relation with Neo-Pentecostalism

GHANAIAN METHODIST SPIRITUALITY IN RELATION WITH NEO-PENTECOSTALISM. Doris E. Yalley. Abstract: Contemporary Ghanaian Methodist spirituality exhibits varied religious tendencies. A cursory look at some activities which take place at the Church’s prayer centres reveals worship patterns of the historical Wesleyan Tradition, patterns of the Pentecostal and Neo-Charismatic. To understand how worship patterns promote harmonious religious environments for members of the Church, the study examined some of the practices which could have possibly informed such religious expressions within the Methodist Church Ghana (MCG). The findings revealed a religious disposition fashioned to reflect the Church’s foundational heritage, tempered with ecumenical models integrated to address the Ghanaian cultural context. Keywords: Wesleyan Spirituality, Neo-Pentecostalism, Ghanaian Methodism. Introduction: A careful examination of the contemporary Ghanaian Methodist religious scene reveals varied worship patterns [1]. The phenomenon raises the question whether the contemporary liturgical praxis is a rebirth of the historical Wesleyan Tradition or a manifestation of the Pentecostal/Neo-Pentecostal waves blowing over the Ghanaian religious scene, or a ‘locally brewed’ spirituality emerging from Indigenous Ghanaian cultures.
This paper argues that the Wesleyan Tradition practised by the founding Fathers can be described as ‘Pentecostal.’ Furthermore, against the view of some Ghanaian Methodists, including a former Director of the Methodist Prayer and Renewal Programme (MPRP), that the MCG has compromised its Wesleyan identity, a three-fold aim is pursued: a) To evaluate the nature of Methodism in contemporary Ghana; b) To examine the vision and mission of the Wesleyan Methodist Missionary Society (WMMS); [1] The Church is one of the largest and oldest denominations in Ghana, birthed out of the early Christian missionary activities in the then Gold Coast in the year 1835. -

GNHR) P164603 CR No 6337-GH REF No.: GH-MOGCSP-190902-CS-QCBS

ENGAGEMENT OF A FIRM FOR DATA COLLECTION IN THE VOLTA REGION OF GHANA FOR THE GHANA NATIONAL HOUSEHOLD REGISTRY (GNHR) P164603 CR No 6337-GH REF No.: GH-MOGCSP-190902-CS-QCBS I. BACKGROUND & CONTEXT The Ministry of Gender, Children and Social Protection (MoGCSP), as the institution responsible for coordinating the implementation of the country’s social protection system, has proposed the establishment of the Ghana National Household Registry (GNHR) as a tool to help social protection programs identify, prioritize, and select households living in vulnerable conditions, so that different social programs effectively reach their target populations. The GNHR involves the registration of households and the collection of basic information on their socio-economic status. The data from the registry can then be shared across programs. In this context, the GNHR will have the following specific objectives: a) Facilitate the categorization of potential beneficiaries for social programs in an objective, homogeneous and equitable manner. b) Support inter-institutional coordination to improve the impact of social spending and eliminate duplication. c) Allow the design and implementation of accurate socioeconomic diagnoses of poor people, to support the development of plans and the design and development of specific programs targeted to vulnerable and/or low-income groups. d) Contribute to the institutional strengthening of the MoGCSP through the implementation of a reliable and central database of vulnerable groups. For the implementation of the Ghana National Household Registry, the MoGCSP has decided to use a household evaluation mechanism based on a Proxy Means Test (PMT) model, in which welfare is determined using indirect indicators that collectively approximate the socioeconomic status of individuals or households. -

World Bank Document

December 2011. PPIAF Assistance in Ghana. PPIAF has supported the government of Ghana since 2001 when it provided assistance for three transactions in the water sector, with the objective of pioneering private distribution of water supply in the country. PPIAF has since provided funding in the transport sector, which supported a subsequent World Bank-funded Urban Transport Project in Accra, the capital of Ghana. In other sectors, a small activity was funded in 2006 to promote private sector solutions to Accra’s housing sector, particularly among low-income residents. Finally, PPIAF recently supported activities that provided policy support to Ghana’s nascent public-private partnership (PPP) program, and a study on the effects of the financial crisis on the PPP market in Ghana. Technical Assistance for Ghana’s Transport Sector: PPIAF has supported three activities in the transport sector in Ghana. The first activity focused on the roads sector, while two subsequent activities aimed to improve the provision of urban transport in Accra. PPIAF Support to the Roads Sector: Since the formal initiation of the Road Sector Development Strategy in 1996, considerable progress has been made in expanding the role of the private sector in the development of the road sector in Ghana. Subject to Ministry of Transport approval, private consultants are now responsible for most road design work, with all construction work carried out by private contractors. The size of contracts that could be handled by local firms steadily increased, and by 2003 around 90–95% of routine and periodic maintenance work was carried out by private contractors, while private companies collected tolls on twelve roads and bridges in exchange for the payment of a fixed monthly fee to the Road Fund.