And GPU-Based DNN Training on Modern Architectures

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

C5ENPA1-DS, C-5E NETWORK PROCESSOR SILICON REVISION A1

Freescale Semiconductor, Inc. C-5e Network Processor, Silicon Revision A1. Data Sheet, Rev 03, Preliminary. C5ENPA1-DS/D. For More Information On This Product, Go to: www.freescale.com. CONTENTS -

Design and Implementation of a Stateful Network Packet Processing

610 IEEE/ACM TRANSACTIONS ON NETWORKING, VOL. 25, NO. 1, FEBRUARY 2017. Design and Implementation of a Stateful Network Packet Processing Framework for GPUs. Giorgos Vasiliadis, Lazaros Koromilas, Michalis Polychronakis, and Sotiris Ioannidis.
Abstract: Graphics processing units (GPUs) are a powerful platform for building the high-speed network traffic processing applications using low-cost hardware. The existing systems tap the massively parallel architecture of GPUs to speed up certain computationally intensive tasks, such as cryptographic operations and pattern matching. However, they still suffer from significant overheads due to critical-path operations that are still being carried out on the CPU, and redundant inter-device data transfers. In this paper, we present GASPP, a programmable network traffic processing framework tailored to modern graphics processors. GASPP integrates optimized GPU-based implementations of a broad range of operations commonly used in the network traffic processing applications, including the first purely GPU-based implementation of network flow tracking and TCP stream reassembly.
Introduction (excerpt): ... and TCAMs, have greatly reduced both the cost and time to develop network traffic processing systems, and have been successfully used in routers [4], [7] and network intrusion detection systems [27], [34]. These systems offer a scalable method of processing network packets in high-speed environments. However, implementations based on special-purpose hardware are very difficult to extend and program, and prohibit them from being widely adopted by the industry. In contrast, the emergence of commodity many-core architectures, such as multicore CPUs and modern graphics processors (GPUs) has proven to be a good solution for accelerating many network applications, and has led -
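The abstract above names network flow tracking and TCP stream reassembly as core operations. The sketch below illustrates the general idea on the CPU in plain Python; it is not GASPP's GPU implementation, and every name in it (`FlowKey`, `StreamReassembler`, `reassemble`) is hypothetical. It assumes the first segment observed for a flow is the first in-order segment, and it ignores sequence-number wraparound and partially overlapping segments.

```python
from typing import NamedTuple


class FlowKey(NamedTuple):
    """Hypothetical 5-tuple used to identify one direction of a flow."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    proto: str


class StreamReassembler:
    """Buffers out-of-order segments and releases bytes in sequence order."""

    def __init__(self, initial_seq: int) -> None:
        self.next_seq = initial_seq  # sequence number of the next expected byte
        self.pending = {}            # seq -> payload for segments that arrived early
        self.stream = bytearray()    # contiguous bytes reassembled so far

    def add_segment(self, seq: int, payload: bytes) -> None:
        # Assumption: segments that start before next_seq are plain
        # retransmissions and can be dropped (no partial overlaps handled).
        if seq < self.next_seq or not payload:
            return
        self.pending[seq] = payload
        # Drain every buffered segment that is now contiguous.
        while self.next_seq in self.pending:
            chunk = self.pending.pop(self.next_seq)
            self.stream += chunk
            self.next_seq += len(chunk)


def reassemble(packets):
    """Track flows by key and return the reassembled byte stream per flow.

    `packets` is an iterable of (FlowKey, seq, payload) tuples.
    """
    flows = {}
    for key, seq, payload in packets:
        if key not in flows:
            # Assumption: the first segment seen starts the stream.
            flows[key] = StreamReassembler(seq)
        flows[key].add_segment(seq, payload)
    return {key: bytes(r.stream) for key, r in flows.items()}
```

A possible use: feed three segments of one flow with the last two swapped, and the returned stream for that flow comes back in sequence order. A production tracker would also need flow expiry and handling for lost segments, which this sketch leaves out.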

Embedded Multi-Core Processing for Networking

12 Embedded Multi-Core Processing for Networking
Theofanis Orphanoudakis, University of Peloponnese, Tripoli, Greece, [email protected]
Stylianos Perissakis, Intracom Telecom, Athens, Greece, [email protected]
CONTENTS
12.1 Introduction
12.2 Overview of Proposed NPU Architectures
12.2.1 Multi-Core Embedded Systems for Multi-Service Broadband Access and Multimedia Home Networks
12.2.2 SoC Integration of Network Components and Examples of Commercial Access NPUs
12.2.3 NPU Architectures for Core Network Nodes and High-Speed Networking and Switching
12.3 Programmable Packet Processing Engines
12.3.1 Parallelism
12.3.2 Multi-Threading Support
12.3.3 Specialized Instruction Set Architectures
12.4 Address Lookup and Packet Classification Engines
12.4.1 Classification Techniques
12.4.1.1 Trie-based Algorithms
12.4.1.2 Hierarchical Intelligent Cuttings (HiCuts)
12.4.2 Case Studies
12.5 Packet Buffering and Queue Management Engines
12.5.1 Performance Issues
12.5.1.1 External DRAM Memory Bottlenecks
12.5.1.2 Evaluation of Queue Management Functions: INTEL IXP1200 Case
12.5.2 Design of Specialized Core for Implementation of Queue Management in Hardware
12.5.2.1 Optimization Techniques
12.5.2.2 Performance Evaluation of Hardware Queue Management Engine
12.6 Scheduling Engines
12.6.1 Data Structures in Scheduling Architectures
12.6.2 Task Scheduling
12.6.2.1 Load Balancing
12.6.3 Traffic Scheduling -

Network Processors: Building Block for Programmable Networks

Network Processors: Building Block for Programmable Networks. Raj Yavatkar, Chief Software Architect, Intel® Internet Exchange Architecture, [email protected]
Outline: IXP 2xxx hardware architecture; IXA software architecture; usage questions; research questions.
IXP Network Processors: Microengines are RISC processors optimized for packet processing, with hardware support for multi-threading. The embedded StrongARM/XScale core runs an embedded OS and handles exception tasks. (Diagram labels: Control Processor, StrongARM, Media/Fabric Interface, ME 1, ME 2, ME n, SRAM, DRAM.)
IXP: A Building Block for Network Systems. Example: IXP2800, with 16 micro-engines + XScale core; up to 1.4 GHz ME speed; 8 HW threads/ME; 4K control store per ME; multi-level memory hierarchy; multiple inter-processor communication channels. NPU vs. GPU tradeoffs: reduce core complexity; no hardware caching; simpler instructions → shallow pipelines; multiple cores with HW multi-threading per chip. (Diagram labels: Multi-threaded (x8) Microengine Array, MEv2 1 to 16, RDRAM Controller, Media Switch Fabric I/F, PCI, Intel® XScale™ Core, QDR SRAM Controller, Scratch Memory, Hash Unit, Per-Engine Memory, CAM, Signals, Interconnect.)
IXP 2400 Block Diagram.
XScale -

Intel® IXP42X Product Line of Network Processors with ETHERNET Powerlink Controlled Node

Application Brief. Intel® IXP42X Product Line of Network Processors With ETHERNET Powerlink Controlled Node. www.intel.com/go/embedded
The networked factory floor enables the adoption of systems for remote monitoring, long-distance support, diagnostic services and the integration of in-plant systems with the enterprise. The need for flexible connectivity solutions and high network bandwidth is driving a fundamental shift away from legacy industrial bus architectures and communications protocols to industry standards and commercial off-the-shelf (COTS) solutions. Standards-based interconnect technologies and communications protocols, especially Ethernet, enable simpler and more cost-effective integration of network elements and applications, from the enterprise to the factory floor. The Intel® IXP42X product line of network processors with ETHERNET Powerlink (EPL) software helps manufacturers of industrial control and automation devices bridge between real-time Ethernet on the factory floor and standard Ethernet IT networks in ...
While different real-time Ethernet solutions are available or under development, EPL, the real-time protocol solution managed by the open vendor and user group EPSG (ETHERNET Powerlink Standardization Group), is the only deterministic data communication protocol that is fully conformant with Ethernet networking standards. EPL takes the standard approach of IEEE 802.3 Ethernet with a mixed polling and time-slicing mechanism to transfer time-critical data within extremely short and precise isochronous cycles. In addition, EPL provides configurable timing to synchronize networked nodes with high precision while asynchronously transmitting data that is less time-critical. EPL is the ideal solution for meeting the timing demands of typical high performance industrial applications, such as automation and motion control. Current implementations have reached 200 µs cycle-time with a timing deviation (jitter) less than 1 µs. -
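The brief describes EPL as combining polling with time slicing inside a fixed isochronous cycle, leaving room for less time-critical asynchronous traffic. The sketch below only illustrates that budgeting idea: it lays out one time slot per polled node inside a cycle and reports what is left over for asynchronous data. The function name `build_cycle`, the node names, and the slot lengths are all hypothetical, and real EPL framing overhead is not modeled; only the 200 µs cycle time comes from the text.

```python
def build_cycle(cycle_us, node_slots_us):
    """Lay out polling slots back to back within one cycle.

    cycle_us: total cycle length in microseconds.
    node_slots_us: mapping of node name -> slot length in microseconds,
        polled in insertion order (hypothetical values, not from the brief).
    Returns (schedule, async_window_us), where schedule is a list of
    (node, start_us, end_us) and async_window_us is the time left in the
    cycle after the last polled slot.
    """
    schedule = []
    offset = 0
    for node, slot in node_slots_us.items():
        if slot <= 0:
            raise ValueError(f"slot for {node} must be positive")
        schedule.append((node, offset, offset + slot))
        offset += slot
    if offset > cycle_us:
        raise ValueError("polled slots do not fit in the cycle")
    return schedule, cycle_us - offset


# Example: three hypothetical controlled nodes in a 200 µs cycle.
schedule, async_window = build_cycle(200, {"cn1": 40, "cn2": 40, "cn3": 60})
```

With these illustrative numbers the three slots occupy the first 140 µs and 60 µs remain for asynchronous traffic. Integer microseconds are used so the slot boundaries stay exact.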

Effective Compilation Support for Variable Instruction Set Architecture

Effective Compilation Support for Variable Instruction Set Architecture. Jack Liu, Timothy Kong, Fred Chow. Cognigine Corporation, 6120 Stevenson Blvd., Fremont, CA 94538, USA. {jackl,timk,fredc}@cognigine.com
Abstract: Traditional compilers perform their code generation tasks based on a fixed, pre-determined instruction set. This paper describes the implementation of a compiler that determines the best instruction set to use for a given program and generates efficient code sequence based on it. We first give an overview of the VISC Architecture pioneered at Cognigine that exemplifies a Variable Instruction Set Architecture. We then present three compilation techniques that, when combined, enable us to provide effective compilation and optimization support for such an architecture. The first technique involves the use of an abstract operation representation that enables the code generator to optimize towards the core architecture of the processor without committing to any specific instruction format.
Introduction (excerpt): ... running embedded applications. These application specific instruction set processors (ASIPs) [1, 2] use instruction sets customized towards a specific type of application so they can deliver efficient run-time performance for typical programs written for that application area. Because the instruction set is pre-determined, the compiler is built and configured to generate code based on a custom, fixed instruction set [16]. The Variable Instruction Set Communications Architecture (VISC Architecture™) from Cognigine represents a very different approach in the attempt to provide greater configurability in compiling embedded software. The VISC Architecture can perform a complex set of instructions consisting of multiple, fine and coarse grain operations that operate on multiple operands at the same time in one fixed -

NP-5™ Network Processor

NETWORK PROCESSOR PRODUCT BRIEF. NP-5™ Network Processor: 240Gbps NPU for Carrier Ethernet Applications.
Mellanox’s NP-5 is a highly-flexible network processor with integrated traffic management, targeting Carrier Ethernet Switches and Routers (CESR) and other Carrier Ethernet platforms that require high performance, flexible packet processing and fine-grained traffic management.
HIGHLIGHTS: 240Gbps wire-speed network processor aimed at Carrier Ethernet applications; integrated 240Gbps traffic management providing granular bandwidth control; up to 480Gbps peak processing data path and CoS classification; suited for line card, services card and pizza box applications; on-chip control CPU for host CPU offload; power management for minimizing line card and system power dissipation; Operations, Administration and Management (OAM) processing offload; Synchronous Ethernet and IEEE1588v2 offload for Circuit Emulation Services; IP reassembly for advanced packet processing.
TARGET APPLICATIONS: NP-5’s flexibility and integration allows system vendors to deliver cost effective solutions that can easily adapt to changing market requirements. Typical applications include line cards in modular chassis (Edge and Core Routers, Transport Switches, Data Center Switches, 3G/4G Wireless Infrastructure Equipment) and stand-alone pizza box solutions (Ethernet Aggregation Nodes, Server Load Balancing Switches, Multi-10Gbps Firewalls & VPN, Intrusion Detection Appliances, Network Monitoring and Analysis Services).
SOFTWARE TOOLS: Mellanox supplies a comprehensive set of software tools to facilitate system design for NP-5 based products. The toolset manages the data plane and allows designers to edit, build and debug microcode applications for the Mellanox’s network processors with a unified GUI. Designers can quickly develop and test the microcode using cycle-accurate simulation and debugging tools including breakpoints, single-step program execution and access to internal resources. -

Network Processors the Morgan Kaufmann Series in Systems on Silicon Series Editor: Wayne Wolf, Georgia Institute of Technology

Network Processors The Morgan Kaufmann Series in Systems on Silicon Series Editor: Wayne Wolf, Georgia Institute of Technology The Designer’s Guide to VHDL, Second Edition Peter J. Ashenden The System Designer’s Guide to VHDL-AMS Peter J. Ashenden, Gregory D. Peterson, and Darrell A. Teegarden Modeling Embedded Systems and SoCs Axel Jantsch ASIC and FPGA Verification: A Guide to Component Modeling Richard Munden Multiprocessor Systems-on-Chips Edited by Ahmed Amine Jerraya and Wayne Wolf Functional Verification Bruce Wile, John Goss, and Wolfgang Roesner Customizable and Configurable Embedded Processors Edited by Paolo Ienne and Rainer Leupers Networks-on-Chips: Technology and Tools Edited by Giovanni De Micheli and Luca Benini VLSI Test Principles & Architectures Edited by Laung-Terng Wang, Cheng-Wen Wu, and Xiaoqing Wen Designing SoCs with Configured Processors Steve Leibson ESL Design and Verification Grant Martin, Andrew Piziali, and Brian Bailey Aspect-Oriented Programming with e David Robinson Reconfigurable Computing: The Theory and Practice of FPGA-Based Computation Edited by Scott Hauck and André DeHon System-on-Chip Test Architectures Edited by Laung-Terng Wang, Charles Stroud, and Nur Touba Verification Techniques for System-Level Design Masahiro Fujita, Indradeep Ghosh, and Mukul Prasad VHDL-2008: Just the New Stuff Peter J. Ashenden and Jim Lewis On-Chip Communication Architectures: System on Chip Interconnect Sudeep Pasricha and Nikil Dutt Embedded DSP Processor Design: Application Specific Instruction Set Processors Dake Liu Processor Description Languages: Applications and Methodologies Edited by Prabhat Mishra and Nikil Dutt Network Processors Architecture, Programming, and Implementation Ran Giladi Ben-Gurion University of the Negev and EZchip Technologies Ltd. -

Synchronized MIMD Computing Bradley C. Kuszmaul

Synchronized MIMD Computing by Bradley C. Kuszmaul. SB mathematics, Massachusetts Institute of Technology; SB computer science and engineering, Massachusetts Institute of Technology; SM electrical engineering and computer science, Massachusetts Institute of Technology. Submitted to the Department of Electrical Engineering and Computer Science in partial fulfillment of the requirements for the degree of Doctor of Philosophy at the MASSACHUSETTS INSTITUTE OF TECHNOLOGY, May 1994. © Massachusetts Institute of Technology 1994. All rights reserved. Author: Department of Electrical Engineering and Computer Science, May 22, 1994. Certified by: Charles E. Leiserson, Professor of Computer Science and Engineering, Thesis Supervisor. Accepted by: F. R. Morgenthaler, Chair, Department Committee on Graduate Students.
Synchronized MIMD Computing by Bradley C. Kuszmaul. Submitted to the Department of Electrical Engineering and Computer Science on May 22, 1994, in partial fulfillment of the requirements for the degree of Doctor of Philosophy. Abstract: Fast global synchronization provides simple, efficient solutions to many of the system problems of parallel computing. It achieves this by providing composition -

A Network Processor Architecture for High Speed Carrier Grade Ethernet Networks

TECHNISCHE UNIVERSITÄT MÜNCHEN, Lehrstuhl für Integrierte Systeme. A Network Processor Architecture for High Speed Carrier Grade Ethernet Networks. Kimon Karras. Complete copy of the dissertation approved by the Faculty of Electrical Engineering and Information Technology of the Technische Universität München for the award of the academic degree of Doktor-Ingenieur (Dr.-Ing.). Chair: Univ.-Prof. Dr.-Ing. Wolfgang Kellerer. Examiners of the dissertation: 1. Univ.-Prof. Dr. sc.techn. Andreas Herkersdorf; 2. Univ.-Prof. Dr.-Ing. Andreas Kirstädter, Universität Stuttgart. The dissertation was submitted to the Technische Universität München on 13.05.2013 and accepted by the Faculty of Electrical Engineering and Information Technology on 24.03.2014.
Preface: This work was performed at the Institute for Integrated Systems of the Technical University of Munich under the auspices of Prof. Dr. sc.techn. Andreas Herkersdorf and Dr.-Ing. Thomas Wild, both of whom I have to whole-heartedly thank for giving me the opportunity of working with them and for their invaluable help and guidance throughout the five years of my stay at the institute. A further thanks goes to all the people at the institute with whom I've cooperated over the years and who have made life enjoyable both on a professional and on a personal level. A very great thanks is due to Daniel Llorente for keeping me company and bearing with me during most of this time. His contribution to this thesis is immense and without him it wouldn't have been possible. Muchas gracias, compañero! Furthermore I would like to thank all the partner companies and participants of the 100GET project, which formed the basis for this dissertation. -

Object-Oriented Reconfigurable Processing for Wireless Networks Andrew A

Object-Oriented Reconfigurable Processing for Wireless Networks. Andrew A. Gray, Clement Lee, Payman Arabshahi, Jeffrey Srinivasan. Jet Propulsion Laboratory, California Institute of Technology, 4800 Oak Grove Drive, MS 238-343, Pasadena, CA 91101 USA.
Abstract: We present an outline of reconfigurable processor technologies and design methods with emphasis on an object-oriented approach, and both full and partial dynamic reconfiguration. A specific broadly applicable architecture for implementing a software reconfigurable network processor for wireless communication applications is presented; a prototype of which is currently operating in the laboratory. This architecture, its associated object oriented design methods, and partial reconfiguration techniques enable rapid-prototyping and rapid implementations of communications and navigation signal processing functions; provide long-life communications infrastructure; and result in dynamic operation within networks with heterogeneous nodes, as well as compatibility with other networks. This work builds upon numerous advances in commercial industry as well as military software radio developments to space-based radios and network processing. The development of such radios and the network processor presented here require defining the correct ...
Introduction (excerpt): ... while at the same time being sensitive and potentially error prone and costly. To address these issues, we present design methods that facilitate flexibility (leading to prolonged processor lives), and dynamic reconfigurability, along with a generic reconfigurable processor architecture. Dynamic partial reconfiguration of the network processor will greatly increase the value of the processor within the network. For instance the processor’s ability to perform a very large number of temporally separated (time-multiplexed) functions will dramatically increase network elasticity and compatibility (see Fig. 1). The paradigms will also empower the design engineer with tools required for rapid prototyping and implementation of a wide variety of signal processing functions. -

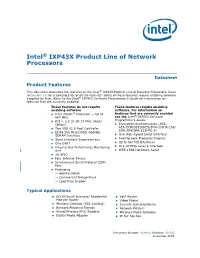

IXP43X Product Line of Network Processors Datasheet December 2008 2 Document Number: 316842; Revision: 003US Contents

Intel® IXP43X Product Line of Network Processors Datasheet. Product Features: This document describes the features of the Intel® IXP43X Product Line of Network Processors. Refer to Section 1.0 for a complete list of all the features. Some of these features require enabling software supplied by Intel. Refer to the Intel® IXP400 Software Programmer’s Guide for information on features that are currently enabled.
These features do not require enabling software: Intel XScale® Processor, up to 667 MHz; PCI v. 2.2 32-bit 33 MHz (Host/Option); Two USB v2.0 Host Controller; DDRI-266 MHz/DDRII-400 MHz SDRAM Interface; Slave Interface Expansion bus; One UART; Internal Bus Performance Monitoring Unit; 16 GPIO; Four Internal Timers; Synchronous Serial Protocol (SSP) Port; Packaging: 460-Pin PBGA, Commercial Temperature, Lead-Free Support.
These features require enabling software (for information on features that are currently enabled, see the Intel® IXP400 Software Programmer’s Guide): Encryption/Authentication (AES/AES-CCM/3DES/DES/SHA-1/SHA-256/SHA-384/SHA-512/MD-5); One High-Speed Serial Interface; Two Network Processor Engines; Up to two MII Interfaces; One UTOPIA Level 2 Interface; IEEE-1588 Hardware Assist.
Typical Applications: SOHO-Small Business/Residential; VoIP Router; Modular Router; Video Phone; Wireless Gateway (802.11a/b/g); Security Gateway/Router; Network-Attached Storage; Network Printers; Wired/Wireless RFID Readers; Wireless Media Gateway; Digital Media Adapter; IP Set Top box.
INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT.