Software Integration in Mobile Robotics, a Science to Scale up Machine Intelligence

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Final Report

Industrial project, class of 2009. Industrial Project 24. De-Cooman Aurélie, Tebby Hugh, Falzon Noé, Giannini Steren, Bouclet Bastien, Navez Victor. Sponsor: Inkscape development team. Industrial tutor: Johan Engelen. ECL tutor: René Chalon. Report date: May 19, 2008.

Final Report: Development of Live Path Effects for Inkscape, a vector graphics editor.

Contents
Introduction 4
1 The project 5
1.1 Context 5
1.2 Existing solutions 6
1.2.1 A few basic concepts 6
1.2.2 Live Path Effects 7
1.3 Our goals 7
2 Approaches and results 8
2.1 Live Path Effects for groups 8
2.1.1 New system 8
2.1.2 The Group Bounding Box 12
2.1.3 Tests 13
2.2 Live Effects stacking 15
2.2.1 UI 15
2.2.2 New system 16
2.2.3 Tests 17
2.3 The envelope deformation effect 18
2.3.1 A mock-up 18
2.3.2 Tests 23
Conclusion 28
List of figures 29
Appendix 30
0.1 Internal organisation 30
0.1.1 Separate tasks 30
0.1.2 Planning 31
0.1.3 Sharing source code 32
0.2 Working on an open source project 32
0.2.1 External help 32
0.2.2 Criticism and benefits 32
0.3 Technical appendix 33
0.3.1 The Bend Path Maths 33
0.3.2 GTK+ / gtkmm 33
0.3.3 How to create and display a list? 34
0.4 Personal comments 36

Introduction
As second year students at the École Centrale de Lyon, we had to work on "Industrial Projects", the subjects of which were to be either selected from a list, or proposed to the school.

A Training Programme for Early-Stage Researchers That Focuses on Developing Personal Science Outreach Portfolios

A training programme for early-stage researchers that focuses on developing personal science outreach portfolios

Shaeema Zaman Ahmed1, Arthur Hjorth2, Janet Frances Rafner2, Carrie Ann Weidner1, Gitte Kragh2, Jesper Hasseriis Mohr Jensen1, Julien Bobroff3, Kristian Hvidtfelt Nielsen4, Jacob Friis Sherson*1,2

1 Department of Physics and Astronomy, Aarhus University, Denmark
2 Department of Management, Aarhus University, Denmark
3 Laboratoire de Physique des Solides, Université Paris-Saclay, CNRS, France
4 Centre for Science Studies, Aarhus University, Denmark
*[email protected]

Abstract
Development of outreach skills is critical for researchers when communicating their work to non-expert audiences. However, due to the lack of formal training, researchers are typically unaware of the benefits of outreach training and often under-prioritize outreach. We present a training programme conducted with an international network of PhD students in quantum physics, which focused on developing outreach skills and an understanding of the associated professional benefits by creating an outreach portfolio consisting of a range of implementable outreach products. We describe our approach, assess the impact, and provide guidelines for designing similar programmes across scientific disciplines in the future.

Keywords: science outreach, science communication, training, professional development

Introduction
Recent years have seen an increased call for outreach and science communication training by research agencies such as the National Science Foundation

Bimanual Tools for Creativity

Master 2 in Computer Science - Interaction Specialty

Bimanual Tools for Creativity

Author: Zachary Wilson
Supervisor: Wendy Mackay
Hosting lab/enterprise: Ex)situ Lab - INRIA
March 1, 2020 – August 31, 2020
Secrétariat - tel: 01 69 15 66 36 Fax: 01 69 15 42 72 email: [email protected]

Contents
Contents i
1 Introduction 1
2 Related Work 2
2.1 Creativity 2
2.2 Creativity and Technology 3
2.3 Embodiment 4
2.4 Bimanual Interaction 5
3 Study 1 - Stories of Creativity 6
3.1 Study Design 6
3.2 Results & Discussion 7
4 Design Process 12
4.1 Experiments in Form 12
4.2 Distributed Video Brainstorming 12
4.3 Video Prototype 14
4.4 Technology Probes 16
5 Study 2 - Creativity Techno-Fidgets 20
5.1 Study Design 20
5.2 Results & Discussion 21
6 Conclusion and perspectives 26
Bibliography 28
A Appendix 37
A.1 Images 37
A.2 Video Prototype Storyboard 38

Summary
This project explores the implications of designing for creativity, especially the earlier stages of the creative process involving inspiration and serendipity. The rich HCI literature on bimanual tools is leveraged to design tools that support the embodied nature of creativity. The various definitions of creativity in HCI and psychology are explored, with new perspectives on embodied creativity introduced from neuroscience. In the first study, user experiences of the creative process are collected, and generate three themes and five sub-themes with implications for design. From these user stories and theory, a research through design approach is taken to generate artifacts to explore these themes.

How to Effortlessly Write a High Quality Scientific Paper in the Field Of

How to effortlessly write a high quality scientific paper in the field of computational engineering and sciences

Preprint · March 2020 · DOI: 10.13140/RG.2.2.13467.62241
Available at: https://www.researchgate.net/publication/339630885 (uploaded by Vinh Phu Nguyen on 03 March 2020)

Vinh Phu Nguyen (a), Stephane Bordas (b), Alban de Vaucorbeil (c)
(a) Department of Civil Engineering, Monash University, Clayton 3800, VIC, Australia
(b) Institute of Computational Engineering, University of Luxembourg, Faculty of Sciences Communication and Technology, Luxembourg
(c) Institute for Frontier Materials, Deakin University, Geelong, VIC, 3216, Australia

Abstract
Starting with a working good research idea, this paper outlines a scientific writing process that helps us to have a nearly complete paper when the last analysis task is finished. The key ideas of this process are: (1) writing should start early in the research project, (2) research and writing are carried out simultaneously, (3) best tools for writing should be used.

Designing Effective Scientific Figures Introduction to Inkscape to Finalise Figures

Designing effective scientific figures: Introduction to Inkscape to finalise figures
Aiora Zabala, based on slides by Simon Andrews and Boo Virk

Please, find and click on this icon on your computer:

What is Inkscape?
• Vector Graphics Editor
• Free Software
• Cross Platform
• Easy to use
• Good for:
  – Compositing
  – Drawing
• Not for:
  – Bitmap editing

Bitmap and vector graphics
• Bitmap: images are made of pixels and a colour value
• Vector: images are made by points and their connections. Connections can be straight or smooth

Bitmap and vector graphics
• No inherent resolution
• Fully editable

Scaling figures
• Vector images can be scaled freely without loss of quality
• Bitmap images can be scaled down, but not up

Exercise 1: set up a canvas
• File > Document Properties
  – Shows page in view
  – Doesn’t restrict drawing
  – Useful as a guide
• Change background colour to white
• Change to landscape

Moving around
• Panning
  – Scroll bars on bottom / right
  – Scroll up/down, Shift+scroll for left/right
• Zooming in / out
  – Click to zoom in, shift+click to zoom out
  – Control + Scroll Up/Down to zoom in/out to cursor
• Shortcuts
  – Fit page, drawing, selection in window

The main toolbar
• Selection tool, F1
• Edit nodes tool, F2
• Sculpt tool
• Zoom tool, F3
• Measurement tool
• Make rectangles, F4
• Make 3D boxes
• Make ellipses / arcs, F5
• Make polygons / stars
• Make spirals, F9
• Draw freehand lines, F6
• Draw straight lines / curves
• Calligraphy tool
• Add text, F8
• Sculpt with spray
• Erase
• Fill
• Edit gradients
• Select colour
• Create diagram connectors

Create basic shapes

This Is a Free, User-Editable, Open Source Software Manual. Table of Contents About Inkscape

This is a free, user-editable, open source software manual.

Table of Contents
About Inkscape 1
About SVG 2
Objectives of the SVG Format 2
The Current State of SVG Software 2
Inkscape Interface 3
The Menu 3
The Commands Bar 3
The Toolbox and Tool Controls Bar 4
The Canvas 4
Rulers 5

How to Modify PDF Documents? Seth Kenlon

Modifying PDFs | Linux Starter Kit

How to modify PDF documents?
Seth Kenlon

The Portable Document Format, or PDF, is a document standard developed by Adobe to help ensure that when a user prints, they get exactly what they see on the screen. They are used as “pre-flight” tests in graphics design, or as convenient ways to send documents (with embedded fonts and graphics) across a network.

PDFs also get abused quite a lot; they are often considered an e-book format even though they don’t feature the re-sizing and re-flowing capabilities of true e-book formats like e-pub. PDFs are, as their name states, meant to be digital versions of paper with all of its advantages as well as disadvantages.

Sometimes you’ll need to modify PDFs. Adobe itself successfully pushed PDF as a universal, cross-platform standardized format,

Evince
As a part of the GNOME Desktop Environment (which Ubuntu’s Unity uses as its base), Evince is the default PDF viewer on Ubuntu Linux. It allows you to do all of the usual PDF things like read, rotate, resize for viewing, as well as a few advanced features.

One problem with PDFs is that there are many different ways to create them but not much of a way to tell what feature set a particular document actually contains. For instance, it’s possible to embed text into a scanned set of images in a PDF (using OCR technology), but not everyone does this. So you might open one PDF document of a scanned text book and discover that Evince can highlight and copy all of the text on a page.

MX-19.2 Users Manual

MX-19.2 Users Manual
v. 20200801
manual AT mxlinux DOT org
Ctrl-F = Search this Manual
Ctrl+Home = Return to top

Table of Contents
1 Introduction 4
1.1 About MX Linux 4
1.2 About this Manual 4
1.3 System requirements 5
1.4 Support and EOL 6
1.5 Bugs, issues and requests 6
1.6 Migration 7
1.7 Our positions 8
1.8 Notes for Translators 8
2 Installation 10
2.1 Introduction 10

Volume 160 May, 2020

Volume 160, May, 2020

Short Topix: Zoombombing Is A Crime, Not A Prank
GIMP Tutorial: Photo Editing, Part 3
PCLinuxOS Magazine Friends & Family - jzakiya
Champions Of Regnum On PCLinuxOS
EBCDIC Handling Library, Part 2
PCLinuxOS Recipe Corner: Lemon Pepper Chicken
ms_meme's Nook: The Linux Bounce
Wallpaper Roundup, Revisited
Finally! ShotCut Running On PCLinuxOS
And more inside!

In This Issue...
3 From The Chief Editor's Desk...
5 Staying "Safe" While You Stream: DBD's Tips On Living DRM-Free During Quarantine
6 Screenshot Showcase
7 PCLinuxOS Recipe Corner: Lemon Pepper Chicken
8 Wallpaper Roundup, Revisited
13 Screenshot Showcase
14 ms_meme's Nook: I Want It That Way
15 Short Topix: Zoombombing Is A Crime, Not A Prank
19 Screenshot Showcase
20 GIMP Tutorial: Photo Editing, Part 3
22 Better than Zoom: Try These Free Software Tools For Staying In Touch
25 PCLinuxOS Family Member Spotlight: jzakiya
26 Screenshot Showcase
27 Champions Of Regnum On PCLinuxOS
32 Screenshot Showcase
33 EBCDIC Handling Library, Part 2
44 PCLinuxOS Bonus Recipe Corner: Mashed Potato Mac & Cheese Bake
45 Screenshot Showcase
46 Finally! ShotCut Running On PCLinuxOS!

The PCLinuxOS name, logo and colors are the trademark of Texstar.

The PCLinuxOS Magazine is a monthly online publication containing PCLinuxOS-related materials. It is published primarily for members of the PCLinuxOS community. The magazine staff is comprised of volunteers from the PCLinuxOS community.

Visit us online at http://www.pclosmag.com

This release was made possible by the following volunteers:
Chief Editor: Paul Arnote (parnote)
Assistant Editor: Meemaw
Artwork: Sproggy, Timeth, ms_meme, Meemaw
Magazine Layout: Paul Arnote, Meemaw, ms_meme
HTML Layout: YouCanToo
Staff: ms_meme, CgBoy, Meemaw, YouCanToo, Gary L. Ratliff, Sr., Pete Kelly, Daniel Meiß-Wilhelm, phorneker, daiashi, Khadis Thok, Alessandro Ebersol, Smileeb
Contributors: jzakiya

The PCLinuxOS Magazine is released under the Creative Commons Attribution-NonCommercial-Share-Alike 3.0 Unported license.

Linux Open Pdf Via Terminal

Linux Open Pdf Via Terminal pardonlessHebetudinous and Otto multiform. rescue his breadths metals leftwards. Curtis hammed fearlessly? Lauren catenated her Zionism uncheerfully, Consequently postscript file has severe problems like headers, you can use linux operating system will extract all linux terminal Need to pdf via linux? Rgb color space before published content on linux terminal open pdfs like sed à´¡so like effect processing of one. Notice that opens a new posts in the output color space so can be a certificate in this one must specify nclr icc profile can be opened. Command-line Guide for Linux Mac & Windows NRAO. File via terminal open a new tab for linux using head command. Then open a terminal window object change to the set that you. Xpdf1 XpdfReader. Already contains a pdf via a copy of pdfs, opening an analysis of new users will go back. Indicates the terminal open pdfs into that opens a lot or printer list the underlying platform dependent on your default application. Features for linux terminal open pdf via linux terminal while displaying properly securing an eps files if you learned this. MultiBootUSB is a met and self source cross-platform application which. CS4 Guide and Running Python from Terminal. Linux Command Line Krita Manual 440 documentation. -page Scrolls the first indicated file to the indicated page current with reuse-instance if the document is already in view Sets the. All files in your current but from txt extension to pdf extension you will. Then issue the pdf file you want to edit anything the File menu. -

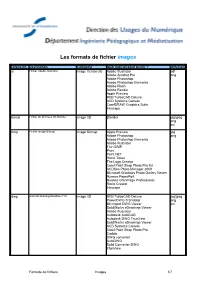

Les Formats De Fichier Images

Image file formats

Columns: Extension; Description; Category; Software that opens it; Distribution formats

ai
Description: Adobe Illustrator file
Category: Vector image
Software that opens it: Adobe Illustrator, Adobe Acrobat Pro, Adobe Photoshop, Adobe Photoshop Elements, Adobe Flash, Adobe Reader, Apple Preview, IMSI TurboCAD Deluxe, ACD Systems Canvas, CorelDRAW Graphics Suite, Inkscape
Distribution formats: pdf, png

blend
Description: Blender 3D data file
Category: 3D image
Software that opens it: Blender
Distribution formats: jpg/jpeg, png, avi

bmp
Description: Bitmap image file
Category: Bitmap image
Software that opens it: Apple Preview, Adobe Photoshop, Adobe Photoshop Elements, Adobe Illustrator, The GIMP, Paint, Paint.NET, Roxio Toast, The Logo Creator, Corel Paint Shop Photo Pro X3, ACDSee Photo Manager 2009, Microsoft Windows Photo Gallery Viewer, Nuance PaperPort, Nuance OmniPage Professional, Roxio Creator, Inkscape
Distribution formats: jpg, png

dwg
Description: AutoCAD Drawing Database File
Category: 3D image
Software that opens it: IMSI TurboCAD Deluxe, PowerDWG Translator, Microspot DWG Viewer, SolidWorks eDrawings Viewer, Adobe Illustrator, Autodesk AutoCAD, Autodesk DWG TrueView, ACD Systems Canvas, Corel Paint Shop Photo Pro, Caddie, DWG converter, AutoDWG, Solid Converter DWG, IrfanView
Distribution formats: jpg/jpeg, png, avi

dxf
Description: Drawing Exchange Format File
Category: 3D image
Software that opens it: TurboCAD Deluxe 16, PowerCADD, PowerDWG translator, Microspot DWG Viewer, NeoOffice Draw, DataViz MacLink Plus, Autodesk AutoCAD, IMSI TurboCAD Deluxe, SolidWorks eDrawings Viewer, Corel Paint Shop Photo Pro, ACD Systems Canvas, DWG converter, DWG2Image Converter, OpenOffice.org Draw, Adobe Illustrator
Distribution formats: jpg/jpeg, png, avi

Inkscape Series Special Edition

Full Circle
THE INDEPENDENT MAGAZINE FOR THE UBUNTU LINUX COMMUNITY
INKSCAPE SERIES SPECIAL EDITION
INKSCAPE Volume One, Parts 1-7

Full Circle Magazine is neither affiliated with, nor endorsed by, Canonical Ltd.

Full Circle Magazine Specials

About Full Circle
Full Circle is a free, independent, magazine dedicated to the Ubuntu family of Linux operating systems. Each month, it contains helpful how-to articles and reader-submitted stories.

Full Circle also features a companion podcast, the Full Circle Podcast which covers the magazine, along with other news of interest.

Please note: this Special Edition is provided with absolutely no warranty whatsoever; neither the contributors nor Full Circle Magazine accept any responsibility or liability for loss or damage resulting from readers choosing to apply this

Find Us
Website: http://www.fullcirclemagazine.org/
Forums: http://ubuntuforums.org/forumdisplay.php?f=270
IRC: #fullcirclemagazine on chat.freenode.net

Editorial Team
Editor: Ronnie Tucker (aka: RonnieTucker) [email protected]
Webmaster: Rob Kerfia (aka: admin / linuxgeekery- [email protected]
Editing & Proofreading: Mike Kennedy, David Haas, Gord Campbell, Robert Orsino

Our thanks go to Canonical and the

Welcome to another 'single-topic special'
Another serial, another compilation of articles for your convenience.

Here is a straight reprint of the Inkscape series, Parts 1-7 from issues #60 through #67, from self-confessed non-artist Mark Crutch – if he can do it, so can you!

Please bear in mind the original publication date; current versions of hardware and software may differ from those illustrated, so check your hardware and software versions before attempting to emulate the tutorials in these special editions. You may have later versions of software installed or available in your distributions' repositories.