LAIF: AI, Deep Learning for Germany Suetterlin Letter Recognition and Generation



Recommended publications

-

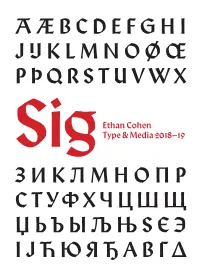

Sig Process Book

SIG: A Revival of Rudolf Koch’s Wallau. Ethan Cohen, Type & Media 2018–19. Submitted as part of Paul van der Laan’s Revival class for the Master of Arts in Type & Media course at Koninklijke Academie van Beeldende Kunsten (Royal Academy of Art, The Hague).

“I feel such a closeness to William Morris that I always have the feeling that he cannot be an Englishman, he must be a German.” (Rudolf Koch)

INTRODUCTION. Project Overview: Sig is a revival of Rudolf Koch’s Wallau Halbfette. My primary source material was the Klingspor Kalender für das Jahr 1933 (Klingspor Calendar for the Year 1933), a 17.5 × 9.6 cm book set in various cuts of Wallau. The Klingspor Kalender was an annual promotional keepsake printed by the Klingspor Type Foundry in Offenbach am Main that featured different Klingspor typefaces every year. This edition has a daily calendar set in Magere Wallau (Wallau Light) and an 18-page collection of fables set in 9 pt Wallau Halbfette (Wallau Semibold) with woodcut illustrations by Willi Harwerth, who worked as a draftsman at the Klingspor Type Foundry.

JAF Herb Specimen

JAF Herb specimen © Just Another Foundry, 2010. Designer: Tim Ahrens. Format: cross-platform OpenType. Styles & weights: Regular, Bold, Condensed & Bold Condensed. Purchase options: OpenType complete family €79; single font €29; JAF Herb Webfont subscription €19 per year. www.justanotherfoundry.com

“Tradition ist die Weitergabe des Feuers und nicht die Anbetung der Asche.” [Tradition is the passing on of the fire, not the worship of the ashes.] (Gustav Mahler)

Making of Herb. Herb is based on 16th century cursive broken scripts and printing types. Originally designed by Tim Ahrens in the MA Typeface Design course at the University of Reading, it was further refined and extended in 2010. The idea for Herb was to develop a typeface that has the positive properties of blackletter but does not evoke the same negative connotations: a type that has the complex, humane character of fraktur without looking conservative, aggressive or intolerant. As Rudolf Koch illustrated, roman type appears as timeless, noble and sophisticated. Fraktur, on the other hand, has different qualities: it is displayed as unpretentious, ...

Introducing qualities of blackletter into roman typefaces has become popular in recent years. The sources of inspiration range from rotunda to textura and fraktur. In order to achieve a unique style, other kinds of blackletter were used as a source for Herb. One class of broken script that has never been implemented as printing fonts is the gothic cursive. Since fraktur type hardly ever has an ‘italic’ companion like roman types, few people even know that cursive blackletter exists. The only type of cursive broken script that has gained a certain awareness level is civilité, which was a popular printing type in the 16th century, especially in the Netherlands.

Eskapade

TYPETOGETHER: Eskapade. Creating new common ground between a nimble oldstyle serif and an experimental Fraktur. Designed by Alisa Nowak, 2012. ESKAPADE & ESKAPADE FRAKTUR.

ABOUT. The Eskapade font family is the result of Alisa Nowak’s research into Roman and German blackletter forms, mainly Fraktur letters. The idea was to adapt these broken forms into a contemporary family instead of creating a faithful revival of a historical typeface. On one hand, the ten normal Eskapade styles are conceived for continuous text in books and magazines with good legibility in smaller sizes. On the other hand, the six angled Eskapade Fraktur styles capture the reader’s attention in headlines with its mixture of round and straight forms as seen in ‘e’, ‘g’, and ‘o’. Eskapade works exceptionally well for branding, logotypes, and visual identities, for editorials like magazines, fanzines, and posters, and for packaging. Eskapade roman adopts a humanist structure, but is more condensed than other oldstyle serifs.

... unique script practiced in Germany in the vanishingly short period between 1915 and 1941. The new ornaments are also hybrid Sütterlin forms to fit with the smooth roman styles. Although there are many Fraktur-style typefaces available today, they usually lack italics, and their italics are usually slanted uprights rather than proper italics. This motivated extensive experimentation with the italic Fraktur shapes and resulted in Eskapade Fraktur’s unusual and interesting solutions. In addition to standard capitals, it offers a second set of more decorative capitals with double-stroke lines to intensify creative application and encourage experimental use. The Thin and Black Fraktur styles are meant for ...

SOPHIA BAUERIN, Child's Calligraphy Sample Book

SOPHIA BAUERIN, Child’s Calligraphy Sample Book: ANONYMOUS, Drei junge Reisende (Three Young Travellers); ANONYMOUS, Der Menschenfreunde (The Philanthropist); arithmetic table. In German, decorated manuscript on paper. Germany, dated 1792. 8 folios on paper (4 bifolios in a single quire), complete, with watermark: 2 crossed keys with 2-line stems, within a wreath that may contain letters or words, unidentified in the Bernstein database, and countermark: a banderole with ICL in simple serif script (a similar contemporary countermark reading ‘ICL’, of the same size and lettering but with a slightly different frame, is found in Berlin, Singakademie zu Berlin, SA 3343, where it is paired with a watermark labeled ‘Dresden’), first folio serves as a front cover with title and date in red and green blackletter script, surrounded by a painted baroque frame with a floral wreath, ff. 2-6 ruled in pencil, text written on recto only in long line, in two calligraphic scripts (f. 2 in German Kanzleischrift with first line in blackletter, ff. 3-6 in kurrent with first lines in Kanzleischrift) in black ink with large decorative initials in green, blue, and red, variable number of lines (7-12) and justification (90-130 x 180-185 mm.), generous margins contain decorative borders of filigree lozenges and swirls, f. 7 holds only a table of sums in 4 columns (11 lines), booksellers’ marks in pencil on f. 1v (‘8 Kr’) and f. 2 (‘142|69|5’). No binding; the quire is tacketed through the center fold with silk thread of twisted yellow, pink, blue, and green, with the folded edge at the head of the text and opening edge at bottom (i.e.

A Brief History of the Blackletter Font Style

A Brief History of the Blackletter Font Style. By: Linda Tseng
● Blackletter is also called Fraktur, Gothic, or Old English.
● It comes from Western Europe; Germany used Gothic script until World War II.
● It was in use from the twelfth century to the twentieth century.
● Blackletter hands were called Gothic by the modernist Lorenzo Valla and others in mid-fifteenth-century Italy.
● The term describes the scripts of the Middle Ages in which the darkness of the characters overpowers the whiteness of the page.
● In the 1500s, blackletter became less popular for printing in many countries, except Germany and the other German-speaking countries.
● The best Textura specimen in the Gutenberg Museum Library’s collection comes from Great Britain.
Overview
● Rotunda types, the second-oldest blackletter style, never really caught on as a book type in German-speaking lands, although twentieth-century calligraphers, as well as arts and crafts designers, have used it quite well for display purposes.
● Cursiva: developed in the 14th century as a simple form of textualis.
● Hybrida (bastarda): a mixture of textualis and cursiva, developed in the early 15th century.
● Donatus Kalender: the name for the metal type design that Gutenberg used in his earliest surviving printed works, from the early 1450s.
● Blackletters are difficult to read as body text, so they are better used for headings, logos, posters and signs.
● Newspaper and magazine headlines: Fette Fraktur is used for old-fashioned headlines and beer advertising.
● Wilhelm Klingspor Gotisch adorns many wine labels.
● Linotext and Old English are popular choices for certificates.

Fonts for Latin Paleography

FONTS FOR LATIN PALEOGRAPHY: Capitalis elegans, capitalis rustica, uncialis, semiuncialis, antiqua cursiva romana, merovingia, insularis majuscula, insularis minuscula, visigothica, beneventana, carolina minuscula, gothica rotunda, gothica textura prescissa, gothica textura quadrata, gothica cursiva, gothica bastarda, humanistica. User's manual, 5th edition, 2 January 2017. Juan-José Marcos, [email protected]. Professor of Classics, Plasencia (Cáceres), Spain. Designer of fonts for ancient scripts and linguistics. ALPHABETUM Unicode font: http://guindo.pntic.mec.es/jmag0042/alphabet.html PALEOGRAPHIC fonts: http://guindo.pntic.mec.es/jmag0042/palefont.html

TABLE OF CONTENTS: Introduction; Epigraphy and Paleography; The Roman majuscule book-hand; Square Capitals (capitalis elegans); Rustic Capitals (capitalis rustica); Uncial script (uncialis); Old Roman cursive (antiqua cursiva romana); New Roman cursive (nova cursiva romana); Half-uncial or Semi-uncial (semiuncialis); Post-Roman scripts or national hands; Germanic script (scriptura germanica); Merovingian minuscule (merovingia, luxoviensis minuscula); Visigothic minuscule (visigothica); Lombardic and Beneventan scripts (beneventana); Insular scripts; Insular Half-uncial or Insular majuscule (insularis majuscula); Insular minuscule or pointed hand (insularis minuscula); Caroline minuscule (carolingia minuscula); Gothic script (gothica prescissa, quadrata, rotunda, cursiva, bastarda); Humanist writing (humanistica antiqua); Epilogue; Bibliography and resources in the internet; Price of the paleographic set of fonts.

INTRODUCTION. The following pages will give you short descriptions and visual examples of Latin lettering which can be imitated through my package of "Paleographic fonts", closely based on historical models, and specifically designed to reproduce digitally the main Latin handwritings used from the 3rd to the 15th century.

A Lively and Delicate Blackletter Modelled on a Bâtarde Flamande

TYPETOGETHER: Givry. A lively and delicate blackletter modelled on a bâtarde flamande. Designed by Tom Grace, 2008. GIVRY.

ABOUT. To create Givry, Tom Grace researched the bâtarde flamande, a lively style of writing used predominantly in France and what is present-day Belgium in the 15th century. The style shares an ancestry with other writing styles traditionally grouped as blackletter: fraktur, textura, rotunda, and schwabacher. The bâtarde flamande, however, evolved into an aesthetic far removed from its relatives. The bâtarde flamande is strikingly distinct in almost every way from its blackletter cousins. While high-contrast in nature, the bâtarde flamande is more delicate and dynamic than the austere and condensed fraktur and textura. Its quick curves also lack the rigidity of the schwabacher and rotunda. Calligraphic flair through swashes is thematic, as are the variations in letterforms. The flowing rhythm, achieved through a slightly rightward lean, is most noticeable in the hallmark ‘f’ and long ‘s’ and is undergirded by round forms which ...

The bâtarde flamande is technically a writing hand that, with its syncopation and fluidity, produces a vibrance uncharacteristic of other blackletters. Simply put, the bâtarde flamande is the most expressive and emotional of the blackletter options, and Givry plays to this strength. While suitable as an elegant and energetic display face, Givry was created to set continuous text. Many refinements and adjustments were necessary to balance both the style’s irregular nature with the consistency that continuous text typography requires. Carefully researched and developed in OpenType format for a wealth of typographic features and support for more than forty languages, Givry is neither derivative nor experimental, but is historically accurate and textually enjoyable. Givry comes in one weight, speaks multiple languages, and, along with our entire catalogue, has been optimised for today’s varied screen uses.

Medieval Scripts

GRAPHIC DESIGN HISTORY / MEDIEVAL SCRIPTS. © Kevin Woodland, 2020. Medieval Scripts: 1 Overview; 2 Gothic Architecture; 3 Late Medieval Manuscripts; 4 Blackletter. Overview (1,000 CE – present): Blackletter, a collection of medieval scripts, has existed in numerous forms for over a thousand years and still remains in use today. 1990 CE, Old English Font: • Designed by Monotype Corporation • A mash-up of historic styles • Modern interpretation of blackletter script • Includes anachronistic glyphs: Arabic numerals,

From Scribes to Types

FROM SCRIBES TO TYPES: THE INSPIRATIONS AND INFLUENCES FOR THE FIRST MOVABLE TYPES. Research by: PETER VAN DER WEIJ. Tutored by: ALBERT CORBETO. Course: MÁSTER DE TIPOGRAFÍA AVANZADA (EINA Center de Disseny i Art de Barcelona).

ABSTRACT. This research explores the period before and after the invention of the printing press: the journey that the first scripts made to be turned into movable type. The research consists of investigating and mapping the scripts that were chosen. The thesis takes an analytical view of each journey. Historical background, aesthetic influences, models, characteristics and comparison are the criteria that have been investigated for each of these scripts. This research sheds light on aspects of the transition from script to movable type and shows that the cultural and historical context and aesthetic choices played as important a role as technical reasons in bringing movable type to life. Knowing that as a type designer will help me first to understand and second to revitalize the unexplored ideas in old scripts and typefaces.

KEYWORDS: Printing press, Movable type, Scripts, History, Type design, Europe, Analytical, Adjustments, Transition.

TABLE OF CONTENT: Introduction; Methodology; The Gutenberg B42; The First Printed Type in Italy; The First Roman; Roman Capitals; Perfecting the Script; The Italian Rotunda; Caxton Type 2; Italic 1; Conclusion; Bibliography.

INTRODUCTION. This is a research about the first movable types in Europe and how they came to be. When I started my research about the transitional period from handwriting to printed books in Europe the focus was more on the technical challenges that the movable type makers ran into in order to create the first types.

Fine Historic Typefaces

Walden Font: Fine Historic Typefaces. When we formed the Walden Font Company in the Summer of 1994, it was with the understanding that most of the really great typefaces were created many centuries ago, and that only few of the later creations measure up to the standards set by the likes of Gutenberg, Aldus, Palatino and Caslon. Unfortunately, the work of these and other pioneers has been forgotten over time, and the rules of proportion that make type beautiful have given way to the shifting passions of “contemporary design.” For that reason, we have made it our mission to bring those old and long forgotten typefaces back to life. Our painstaking historical research and precision work has earned us the acclaim of Museums, National Historic Sites, various re-enacting and living history organizations and educational institutions around the country and the world. Our fonts and clip-art are easy to install and use, they work with most software applications on the market today, both for Windows and Macintosh platforms. A painless order process makes it easy for you to obtain our products: simply detach or copy the order form on the back cover of this catalog and send it to us, or order on-line at our website http://www.waldenfont.com. If you have questions or comments about anything, please don’t hesitate to contact us. Send e-mail to [email protected], write to Walden Font Co., P. O. Box 871, Winchester, MA 01890 or call us toll-free at 1-800-519-4575. We look forward to hearing from you.

From Law in Blackletter to “Blackletter Law”*

LAW LIBRARY JOURNAL Vol. 108:2 [2016-9]. From Law in Blackletter to “Blackletter Law”. Kasia Solon Cristobal. Where does the phrase “blackletter law” come from? Chasing down its origins uncovers not only a surprising turnabout from blackletter law’s original meaning, but also prompts examination of a previously overlooked subject: the history of the law’s changing appearance on the page. This history ultimately provides a cautionary tale of how appearances have hindered access to the law.

Contents: Introduction; What the Law Looked Like: The Lay of the Land; Handwriting; Print; Difficulties in Reading the Law; Handwriting; Print; Why Gothic Persisted Longest in the Law; Gothic’s Symbolism; State Authority; National Identity; The Englishness of English Law; Gothic’s Vested Interests; Printers; Clerks; Lawyers.

The Living Tradition of Medieval Scripts in JRR

Journal of Tolkien Research, Volume 10, Issue 2, Article 8, 2020. ‘Written in a Fair Hand’: The Living Tradition of Medieval Scripts in J.R.R. Tolkien’s Calligraphy. Eduardo B. Kumamoto, Independent scholar, [email protected]. Recommended Citation: Kumamoto, Eduardo B. (2020) "‘Written in a Fair Hand’: The Living Tradition of Medieval Scripts in J.R.R. Tolkien’s Calligraphy," Journal of Tolkien Research: Vol. 10 : Iss. 2 , Article 8. Available at: https://scholar.valpo.edu/journaloftolkienresearch/vol10/iss2/8 Cover Page Footnote: Figures 1–7, 9–17, 19, and 21–29 are reproduced by kind permission of the Tolkien Estate.

INTRODUCTION. In his Liner notes, W.H. Auden (2015: 1) confessed that among Tolkien’s ‘many gifts, the three which astound me most are his gift for inventing Proper Names, his gift for describing landscape, and (how I envy him this) his gift for calligraphy.’ It is not hard to see why Auden envied Tolkien’s calligraphic skills: despite the haste and illegibility of many drafts, Tolkien could and did produce several impressively accomplished manuscripts.