Insights Into Rooted and Non-Rooted Android Mobile Devices with Behavior Analytics

Total pages: 16

File type: PDF, Size: 1020Kb

Recommended publications

Ten Steps to Smartphone Security

Ten Steps to Smartphone Security. Smartphones continue to grow in popularity and are now as powerful and functional as many computers. It is important to protect your smartphone just like you protect your computer as mobile cybersecurity threats are growing. These mobile security tips can help you reduce the risk of exposure to mobile security threats: 1. Set PINs and passwords. To prevent unauthorized access to your phone, set a password or Personal Identification Number (PIN) on your phone’s home screen as a first line of defense in case your phone is lost or stolen. When possible, use a different password for each of your important log-ins (email, banking, personal sites, etc.). You should configure your phone to automatically lock after five minutes or less when your phone is idle, as well as use the SIM password capability available on most smartphones. 2. Do not modify your smartphone’s security settings. Do not alter security settings for convenience. Tampering with your phone’s factory settings, jailbreaking, or rooting your phone undermines the built-in security features offered by your wireless service and smartphone, while making it more susceptible to an attack. 3. Back up and secure your data. You should back up all of the data stored on your phone – such as your contacts, documents, and photos. These files can be stored on your computer, on a removable storage card, or in the cloud. This will allow you to conveniently restore the information to your phone should it be lost, stolen, or otherwise erased. 4. Only install apps from trusted sources.

Sophos Mobile Security

Sophos Mobile Security: Protect your Android devices against malware and other threats. The market dominance of Android devices and the inherent openness of Android led to the proliferation of malware and Potentially Unwanted Applications (PUA). SophosLabs detected over a million new pieces of malware in 2014 alone, and the growth rate is accelerating. Malware can lead to data loss, reputation loss, additional costs, and reduced performance. Highlights: Privacy Advisor warns of potential privacy breaches; Security Advisor gives tips to improve device security; Loss and Theft Protection; App Protection with additional passwords for sensitive apps; Spam Filter. Protection and performance: The Sophos Mobile Security antivirus app protects your Android devices without compromising performance or battery life. The app uses up-to-the-minute intelligence from SophosLabs and the Sophos core antivirus engine. This means your apps are automatically scanned for malware as you install them. Sophos Mobile Security’s consistent performance has earned recognition from independent testing agencies including AV-Test. Malware protection: Our Sophos Mobile Security antivirus app protects your Android devices with proactive identification of malware. Your apps are automatically scanned for malware as you install them. Sophos includes protection for PUAs and low-reputation apps (new apps that have no known history and may pose a threat). PUAs include adware, rootkits, diallers, and any association the app may have to previous malicious behaviour such as a certificate that has been compromised. Web protection: Surf securely on the internet from your Android device. Sophos Mobile Security checks all websites against a SophosLabs database for known malicious sites and prevents users from accessing them.

Total Defense Mobile Security

USER'S GUIDE: Total Defense Mobile Security. Total Defense Mobile Security User's Guide. Publication date 2015.04.09. Copyright © 2015 Total Defense Mobile Security. Legal Notice: All rights reserved. No part of this book may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage and retrieval system, without written permission from an authorized representative of Total Defense. The inclusion of brief quotations in reviews may be possible only with the mention of the quoted source. The content cannot be modified in any way. Warning and Disclaimer. This product and its documentation are protected by copyright. The information in this document is provided on an “as is” basis, without warranty. Although every precaution has been taken in the preparation of this document, the authors will not have any liability to any person or entity with respect to any loss or damage caused or alleged to be caused directly or indirectly by the information contained in this work. This book contains links to third-party websites that are not under the control of Total Defense; therefore, Total Defense is not responsible for the content of any linked site. If you access a third-party website listed in this document, you will do so at your own risk. Total Defense provides these links only as a convenience, and the inclusion of the link does not imply that Total Defense endorses or accepts any responsibility for the content of the third-party site. Trademarks. Trademark names may appear in this book.

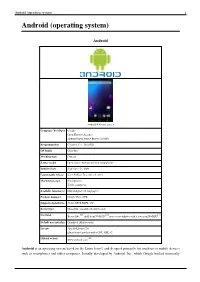

Android (Operating System)

Android (operating system). Image: Android 4.4 home screen. Company / developer: Google, Open Handset Alliance, Android Open Source Project (AOSP). Programmed in: C (core), C++, Java (UI). OS family: Unix-like. Working state: Current. Source model: Open source with proprietary components. Initial release: September 23, 2008. Latest stable release: 4.4.2 KitKat / December 9, 2013. Marketing target: Smartphones, tablet computers. Available language(s): Multi-lingual (46 languages). Package manager: Google Play, APK. Supported platforms: 32-bit ARM, MIPS, x86. Kernel type: Monolithic (modified Linux kernel) [1] [2] [3]. Userland: Bionic libc, shell from NetBSD, native core utilities with a few from NetBSD. Default user interface: Graphical (Multi-touch). License: Apache License 2.0; Linux kernel patches under GNU GPL v2 [4]. Official website: www.android.com. Android is an operating system based on the Linux kernel, and designed primarily for touchscreen mobile devices such as smartphones and tablet computers. Initially developed by Android, Inc., which Google backed financially and later bought in 2005, Android was unveiled in 2007 along with the founding of the Open Handset Alliance: a consortium of hardware, software, and telecommunication companies devoted to advancing open standards for mobile devices. The first publicly available smartphone running Android, the HTC Dream, was released on October 22, 2008. The user interface of Android is based on direct manipulation, using touch inputs that loosely correspond to real-world actions, like swiping, tapping, pinching and reverse pinching to manipulate on-screen objects. Internal hardware such as accelerometers, gyroscopes and proximity sensors are used by some applications to respond to additional user actions, for example adjusting the screen from portrait to landscape depending on how the device is oriented.

Mobile Security Companion to the CIS Critical Security Controls (Version 6)

Mobile Security Companion to the CIS Critical Security Controls (Version 6). Contents: Introduction; Description; CIS Critical Security Controls (Version 6): Mobile Security. Introduction: Mobile devices are starting to replace laptops for regular business use. Organizations are building or porting their applications to mobile platforms, so users are increasingly accessing the same data with mobile as with their laptops. Also, organizations have increasingly implemented Bring Your Own Device (BYOD) policies to manage this trend. However, many organizations have been struggling with the increase of personal mobile devices, and don’t fully understand the security risks they may bring. There are concerns that their compact size makes them easy to lose, that they run newer operating systems that don’t

Securing and Managing Wearables in the Enterprise

White Paper: Securing and Managing Wearables in the Enterprise. Streamline deployment and protect smartwatch data with Samsung Knox Configure. Introduction: Smartwatches in the Enterprise. As the wearable device market heats up, wrist-worn devices such as smartwatches are leading the pack. According to CCS Insight, forecasts for global sales of smart wearable devices will grow strongly over the next five years, with the global market reaching nearly $30 billion by 2023.1 While smartwatches for fitness and activity tracking are popular, consumer demand is only part of the equation. Enterprises are also seeing business value in wearable devices. In a report by Robert Half Technology, 81 percent of CIOs surveyed expect wearable devices like smartwatches to become common tools in the workplace.2 Industries as varied as healthcare, finance, energy, transportation, public safety, retail and hospitality are deploying smartwatches for added business value, such as hands-free communication for maintenance workers, task management, as well as physical monitoring of field workers in dangerous or remote locations. Samsung has been working to address these concerns and has developed the tools to make its Galaxy and Galaxy Active smartwatches customizable, easily manageable and highly secure for enterprise users. This white paper will look at how these tools address key wearable security and manageability challenges, as well as considerations for smartwatch deployments.

Threat Modeling Framework for Mobile Communication Systems

Threat modeling framework for mobile communication systems. Siddharth Prakash Rao (Aalto University, Espoo, Finland, siddharth:rao@aalto:), Silke Holtmanns (Nokia-Bell Labs, Espoo, Finland, silke:holtmanns@nokia-bell-labs:com), Tuomas Aura (Aalto University, Espoo, Finland, tuomas:aura@aalto:). ABSTRACT: Due to the complex nature of mobile communication systems, most of the security efforts in its domain are isolated and scattered across underlying technologies. This has resulted in an obscure view of the overall security. In this work, we attempt to fix this problem by proposing a domain-specific threat modeling framework. By gleaning from a diverse and large body of security literature, we systematically organize the attacks on mobile communications into various tactics and techniques. Our framework is designed to model adversarial behavior in terms of its attack phases and to be used as a common taxonomy matrix. We also provide concrete examples of using the framework for modeling the attacks individually and [...] protocols, which evolved in a more open setup, security tools available in the public domain for the latter are now used by hackers to exploit the mobile networks [9]. Despite the odds, mobile communication systems have undergone a fair amount of scrutiny from the security research community. For example, internal components of a mobile device [71, 74, 101], radio communication between the phone and the cell towers [37, 162], and mobile core network protocols [55, 80, 122] have been tested for security-critical issues. All these isolated research efforts are scattered across underlying protocols and technologies, and, it has resulted in an obscure and complex view of mobile communication security.

Brightcloud® Mobile Security SDK

DATASHEET: BrightCloud® Mobile Security SDK. Simple, flexible, powerful detection and protection for Android® devices. Overview: • Malicious apps and PUAs are a threat to personal and corporate data, and can compromise financial transactions • In 2019, Trojans accounted for 56.9% of Android threats detected1 • The BrightCloud Mobile Security SDK offers enhanced mobile security, including antivirus, antimalware, application scanning and interrogation, device root detection, and device risk scoring. Mobile Security SDK benefits: 1) Industry-leading mobile threat protection 2) Does not slow devices or hinder user productivity 3) Simple, flexible development options for partners. Individuals using smartphones and tablets tend to engage in activities that increase the risk of attacks on themselves and networks. For instance, using unsecured public WiFi or downloading apps from untrustworthy third-party sites can infect a device with mobile malware. These could lead to unwanted consequences, including data exfiltration, camera and microphone hijacking, financial extortion, or acting as a Trojan horse into the WiFi network it connects to. Webroot detects a variety of malicious apps, including malware, spyware, and trojans. PUAs include commercial rooting tools, hacking tools, aggressive advertising, and data leakage apps. Security administrators may consider eliminating PUAs, as they have the potential to impact data loss or incur unwanted mobile usage fees. The BrightCloud Mobile Security SDK addresses mobile device threats by enabling technology partners to offer enhanced mobile security for their customers within their solutions. It features antivirus, antimalware, application scanning and interrogation, device root detection, and device risk scoring, all while utilizing very little memory, bandwidth, or battery life. [Chart labels: Spyware 1.6%, Rootkits 0.3%, System Monitor 0.3%]

A Survey on Smartphones Security: Software Vulnerabilities, Malware, and Attacks

(IJACSA) International Journal of Advanced Computer Science and Applications, Vol. 8, No. 10, 2017. A Survey on Smartphones Security: Software Vulnerabilities, Malware, and Attacks. Milad Taleby Ahvanooey*, Prof. Qianmu Li*, Mahdi Rabbani, Ahmed Raza Rajput. School of Computer Science and Engineering, Nanjing University of Science and Technology, Nanjing, P.O. Box 210094 P.R. China. Abstract: Nowadays, the usage of smartphones and their applications have become rapidly popular in people’s daily life. Over the last decade, availability of mobile money services such as mobile-payment systems and app markets have significantly increased due to the different forms of apps and connectivity provided by mobile devices, such as 3G, 4G, GPRS, and Wi-Fi, etc. In the same trend, the number of vulnerabilities targeting these services and communication networks has raised as well. Therefore, smartphones have become ideal target devices for malicious programmers. With increasing the number of vulnerabilities and attacks, there has been a corresponding ascent of the security countermeasures presented by the [...] desktop usage (desktop usage, web usage, overall is down to 44.9% in the first quarter of 2017). Further, based on the latest report released by Kaspersky on December 2016 [3], 36% of online banking attacks have targeted Android devices and increased 8% compared to the year 2015. In all online banking attacks in 2016, have been stolen more than $100 million around the world. Although Android OS becomes very popular today, it is exposing more and more vulnerable encounter attacks due to having open-source software, thus everybody can develop apps freely. A malware writer (or developer) can take advantage of these features to develop malicious apps.

Features of Norton Mobile Security

Norton Mobile Security User Guide. Contents: Download and install Norton Mobile Security; Activate Norton Mobile Security; Features of Norton Mobile Security; Key features for iPhones or iPads; System requirements for Norton Mobile Security. Download and install Norton Mobile Security: You can directly download and install Norton Mobile Security on your mobile devices, or you can send an email with download instructions and access it on your mobile device. Select the device you are installing from: I am on a computer and want to send instructions to install Norton Mobile Security to my device. 1. Sign In to Norton. 2. If you are not signed in to Norton already, you are prompted to sign in. Type in your email address and password for Norton, and click Sign In. If you do not have a Norton account, click Create account, and complete the sign-up process. 3. In the Norton Setup window, move your mouse over Download Norton, and then click Send a Download Link. 4. Type an email address you can access on your device, and click the send icon. An email is sent to the email address with instructions to install the Norton product. Click Done. 5. On the device where you want to install Norton, find and open the email that you received from "The Norton Team", and tap Download Now. The download link opens the Norton Setup page in your browser. 6. In the Norton Setup window, tap Download Now or Install. 7. You are redirected to the Norton Security and Antivirus page on the Google Play Store or App Store.

Mobile Security Tip Card

MOBILE SECURITY TIP CARD. Mobile devices enable Americans to get online wherever they are. Although mobile devices, from smart watches to phones and tablets, can be extremely useful and convenient, there are also potential threats users may face with such technology. It’s important to understand how to protect yourself when connecting on the go. DID YOU KNOW? • 56 percent of American adults own a smartphone.1 • More than half of mobile application (app) users have uninstalled or decided not to install an app due to concerns about their personal information.2 SIMPLE TIPS: 1. Use strong passwords. Change any default passwords on your mobile device to ones that would be difficult for someone to guess. Use different passwords for different programs and devices. Do not choose options that allow your device to remember your passwords. 2. Keep software up to date. Install updates for apps and your device’s operating system as soon as they are available. Keeping the software on your mobile device up to date will prevent attackers from being able to take advantage of known vulnerabilities. 3. Disable remote connectivity. Some mobile devices are equipped with wireless technologies, such as Bluetooth, that can connect to other devices. Disable these features when they are not in use. 4. Be careful what you post and when. Wait to post pictures from trips and events so that people do not know where to find you. Posting where you are also reminds others that your house is empty. 5. Guard your mobile device. In order to prevent theft and unauthorized access, never leave your mobile device unattended in a public place and lock your device when it is not in use.

Mobile Banking Security Tips

Mobile Banking Security Tips. Phones and other mobile devices often contain sensitive data. Providence Bank & Trust understands this and makes protecting your information and your assets one of our top concerns. The following mobile banking security tips and precautions will help to protect the information on your phone, including in the event your mobile device is lost, stolen, or otherwise compromised. Password protect your mobile device and lock your device when it’s not in use. Most devices have a security setting that makes it mandatory that you trace a pattern or insert a PIN to access the device – we highly recommend setting this password protection. Install mobile security software on your mobile device when possible. Some mobile security solutions include Norton Smartphone Security, Lookout Security & Antivirus, and avast! Mobile Security. We highly recommend downloading mobile security software. Download only the official app from Providence Bank & Trust. You can download the Providence Bank & Trust Mobile Banking App from our website www.providencebank.com or from the Apple App market and Android Google Play Market. When downloading from the Apple or Android Market, verify that the app publisher is Providence Bank & Trust and/or CSI. Be careful of what you download. While there aren’t as many examples of malware out in the mobile device market as there are on traditional PCs, the fact remains that mobile devices are just specialized computers. That means it’s possible for someone to design an app that could try to access your information. You should be careful when downloading apps – not just your banking app, but all apps.