Methodological Issues Related to Radio Measurement and Ratings in Romania: Solutions and Perspectives

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

CONSILIUL NATIONAL AL AUDIOVIZUALULUI Adresa: Bd

CONSILIUL NAŢIONAL AL AUDIOVIZUALULUI, AUTORITATE PUBLICĂ AUTONOMĂ ŞI GARANT AL INTERESULUI PUBLIC ÎN DOMENIUL COMUNICĂRII AUDIOVIZUALE, ÎŞI EXERCITĂ ATRIBUŢIILE SUB CONTROLUL PARLAMENTULUI ROMÂNIEI, CĂRUIA ÎI PREZINTĂ, ANUAL, UN RAPORT DE ACTIVITATE CONSILIUL NATIONAL AL AUDIOVIZUALULUI 2018 RAPORTUL DE ACTIVITATE pe anul 2018 RAPORTUL DE ACTIVITATE AL CONSILIULUI NAȚIONAL AL AUDIOVIZUALULUI PE ANUL 2018 CNA - RAPORT ANUAL – 2018 1 CUPRINS MEMBRII CONSILIULUI IN ANUL 2018 ............................................................... 3 ROLUL ȘI MISIUNEA CNA. PREZENTARE GENERALĂ A ACTIVITĂŢII ÎN ANUL 2018 ....................................................................................................................... 6 TENDINŢE DE EVOLUŢIE A PIEŢEI AUDIOVIZUALE ....................................... 19 ACTIVITATEA DE REGLEMENTARE ........................................................................ 44 RELAŢII EUROPENE .................................................................................................... 53 COMUNICARE ŞI RELAŢII CU PUBLICUL ............................................................ 71 MONITORIZARE TV/RADIO ..................................................................................... 82 CONTROL ȘI DIGITALIZARE .................................................................................... 99 ACTIVITATEA JURIDICĂ ......................................................................................... 110 SITUAŢIA LITIGIILOR ÎN CARE CNA A FOST IMPLICAT ÎN 2018 ........... 125 POLITICA -

Simpozion 2011

UNIVERSITATEA DE STIINTE AGRICOLE SI MEDICINA VETERINARA “ION IONESCU DE LA BRAD” IASI FACULTATEA DE ZOOTEHNIE Aleea M. Sadoveanu nr.8 Iaşi – 700489 Tel.: 0040 – 0232.407.300 FAX: 0040-0232267.504 E-mail : [email protected] SIMPOZION INTERNAŢIONAL „TRADIŢIE, PERFORMANŢĂ ŞI EFICIENŢĂ ÎN CREŞTEREA ANIMALELOR - 60 DE ANI DE ÎNVĂŢĂMÂNT SUPERIOR ZOOTEHNIC ÎN MOLDOVA” IAŞI 14 – 15 APRILIE 2011 1 RAPORT CU PRIVIRE LA DESFĂŞURAREA SIMPOZIONULUI INTERNAŢIONAL „TRADIŢIE, PERFORMANŢĂ ŞI EFICIENŢĂ ÎN CREŞTEREA ANIMALELOR - 60 DE ANI DE ÎNVĂŢĂMÂNT SUPERIOR ZOOTEHNIC ÎN MOLDOVA” IAŞI, 14 – 15 APRILIE 2011 Eveniment tradiţional, Simpozionul Ştiinţific Internaţional, aflat in acest an sub genericul : „Tradiţie, performanţă şi eficienţă în creşterea animalelor - 60 de ani de învăţământ superior zootehnic în Moldova”, a fost organizat de către Facultatea de Zootehnie, din cadrul Universităţii de Ştiinţe Agricole şi Medicină Veterinară „Ion Ionescu de la Brad” din Iaşi. Tematica manifestării a fost în deplin consens cu activitatea didactică şi ştiinţifică desfăşurată în această instituţie de învăţământ superior şi s-a raportat la împlinirea a 60 ani de învăţământ superior zootehnic, la Iaşi. Fiind la a 54-a ediţie, manifestările ştiinţifice organizate de către Facultatea de Zootehnie din Iaşi, datorită tematicii complexe, a nivelului înalt al lucrărilor ştiinţifice şi a gradului ridicat de participare, constituie repere importante pentru mediul academic din ţara noastră, iar în ultimul timp au atras şi atenţia cercetătorilor din instituţii academice aflate în afara graniţelor ţării. Simpozionul ştiinţific organizat de către Facultatea de Zootehnie, din cadrul USAMV Iaşi, a crescut în valoare şi datorită faptului că lucrarile ştiinţifice sunt evaluate după cele mai exigente cerinţe. -

Valul De Toamnă 2020

Rezultatele Studiului de Audienţă Radio Valul de toamnă 2020 În perioada 31 august – 13 decembrie 2020, a fost realizat valul de toamnă al Studiului de Audienţă Radio de către IMAS – Marketing şi Sondaje SA şi MERCURY RESEARCH S.R.L. În cadrul cercetării s-a măsurat audienţa posturilor de radio la nivel naţional, urban şi în municipiul Bucureşti. Eşantionul are un volum de 11.065 de persoane, cu o eroare maximă de eşantionare de +/- 0,9%. În mediul urban s-au realizat 7.705 interviuri (eroare maximă de eşantionare +/-1,1%), în mediul rural s-au realizat 3.360 interviuri (eroare maximă de eşantionare +/-1,7%), iar în Bucureşti s-au realizat 1.799 interviuri (eroarea maximă de eşantionare este +/- 2,3%). Universul Universul Studiului de Audienţă Radio este format din persoanele în vârstă de 11 ani şi peste, care nu lucrează în domeniul radio, rezidente în locuinţe neinstituţionalizate din toate localităţile urbane şi rurale din România. Conform datelor statistice ale Institutului Naţional de Statistică, privind populația rezidentă la data de 1 ianuarie 2019, mărimea acestei populaţii la nivel național este de 17.213.341 persoane1. Eşantionul Eşantionul acestui val este compus din două subeşantioane: subeşantionul realizat prin interviuri telefonice (10.183 interviuri - în mediul rural şi mediul urban) şi cel realizat în teren (882 interviuri - în mediul rural). În eşantion au fost incluse 1.765 localităţi, dintre care 67 (toate) localităţi urbane de peste 30.000 de locuitori, 243 de localităţi urbane cu mai puţin de 30.000 de locuitori din cele 253 existente, 322 din cele 399 de localităţi rurale cu peste 5.000 de locuitori şi 1133 din cele 2.462 de localităţi rurale cu mai puţin de 5.000 de locuitori. -

Rezultatele Studiului De Audienţă Radio În Bucureşti

Rezultatele Studiului de Audienţă Radio Valul de vară 2020 În perioada 27 aprilie – 16 august 2020, a fost realizat valul de vară al Studiului de Audienţă Radio de către IMAS – Marketing şi Sondaje SA şi MERCURY RESEARCH S.R.L. În cadrul cercetării s-a măsurat audienţa posturilor de radio la nivel naţional, urban şi în municipiul Bucureşti. Eşantionul are un volum de 11.055 de persoane, cu o eroare maximă de eşantionare de +/- 0,9%. În mediul urban s-au realizat 7.704 interviuri (eroare maximă de eşantionare +/-1,1%), în mediul rural s-au realizat 3.351 interviuri (eroare maximă de eşantionare +/-1,7%), iar în Bucureşti s-au realizat 1.805 interviuri (eroarea maximă de eşantionare este +/- 2,3%). Universul Universul Studiului de Audienţă Radio este format din persoanele în vârstă de 11 ani şi peste, care nu lucrează în domeniul radio, rezidente în locuinţe neinstituţionalizate din toate localităţile urbane şi rurale din România. Conform datelor statistice ale Institutului Naţional de Statistică, privind populația rezidentă la data de 1 ianuarie 2019, mărimea acestei populaţii la nivel național este de 17.213.341 persoane1. Eşantionul Eşantionul acestui val este compus din două subeşantioane: subeşantionul realizat prin interviuri telefonice (10.183 interviuri - în mediul rural şi mediul urban) şi cel realizat în teren (872 interviuri - în mediul rural). În eşantion au fost incluse 1.792 localităţi, dintre care 67 (toate) localităţi urbane de peste 30.000 de locuitori, 240 de localităţi urbane cu mai puţin de 30.000 de locuitori din cele 253 existente, 321 din cele 399 de localităţi rurale cu peste 5.000 de locuitori şi 1164 din cele 2.462 de localităţi rurale cu mai puţin de 5.000 de locuitori. -

Rezultatele Studiului De Audienţă Radio În Bucureşti

Rezultatele Studiului de Audienţă Radio Valul de primăvară 2011 În perioada 10 ianuarie – 1 mai 2011 a fost realizat valul de primăvară al Studiului de Audienţă Radio de către IMAS – Marketing şi Sondaje SA şi Mercury Research SRL. În cadrul cercetării s-a măsurat audienţa posturilor de radio la nivel naţional, urban şi în municipiul Bucureşti. Eşantionul are un volum de 8,409 de persoane, cu o eroare maximă de eşantionare de +/- 1.1%. În mediul urban s-au realizat 6,340 interviuri (eroare maximă de eşantionare +/-1.2%), iar în Bucureşti s-au realizat 1,800 interviuri (eroarea maximă de eşantionare este +/- 2.3%). Universul Universul Studiului de Audienţă Radio este format din persoanele în vârstă de 11 ani şi peste, rezidente în locuinţe neinstituţionalizate din toate localităţile urbane şi rurale din România. Conform datelor statistice ale INSEE disponibile la data de 01.01.2011, mărimea acestei populaţii este de 19,057,743 persoane. Eşantionul Studiul de Audienţă Radio s-a realizat pe baza unui eşantion de tip probabilist, stratificat, cu extracţie multistadială. În eşantion au fost incluse 1,141 localităţi din care 69 (toate) localităţi urbane de peste 30,000 de locuitori, 221 de localităţi urbane cu mai puţin de 30,000 de locuitori din cele 251 existente, 253 din cele 461 de localităţi rurale cu peste 5,000 de locuitori din România, şi 598 din cele 2,400 de localităţi rurale cu mai puţin de 5,000 de locuitori. Localităţile din eşantionul SAR au reieşit din generarea aleatoare a numerelor de telefon pentru realizarea interviurilor şi au o distribuţie geografică echilibrată la nivel naţional. -

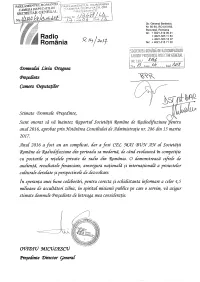

2016 SRR.Pdf

SUNTEM RADIO ROMÂNIA RADIO ROMÂNIA 2016 RAPORT RAPORT ANUAL ANUAL 2016 str. General Berthelot 60-64, 010165 București e-mail: [email protected] telefon: 021 314 77 70 | 021 303 12 97 fax: 021 303 17 26 www.radioromania.ro 25.000.000 20.000.000 15.000.000 10.000.000 -5.000.000 5.000.000 0 1995 139.444 1995 PERIOADA ÎN SRR ALE NETE FINANCIARE REZULTATELOR EVOLUȚIA 1996 -882.932 1997 65.854 1998 3.693.587 1999 380.552 2000 4.521.916 2001 129.955 2002 911.636 2003 5.061.340 2004 4.305.861 2005 428.275 2006 1.751.227 2007 87.961 2008 258.065 2009 448.980 2010 334.454 2011 4.209.533 2012 62.970 - 5.560.962 2016 2013 2014 7.022.538 2015 18.455.131 2016 22.643.428 RAPORTUL DE ACTIVITATE AL SOCIETĂȚII ROMÂNE DE RADIODIFUZIUNE PE ANUL 2016 RADIO ROMÂNIA CUVÂNT ÎNAINTE 2016 a fost CEL MAI BUN AN al Societæflii Române de Radiodifuziune din perioada sa modernæ, de când evolueazæ în competiflie cu posturile øi reflelele private de radio din România. CUPRINS Dar 2016 a fost øi un an greu, un an cu douæ runde de alegeri – locale øi parlamentare, cu multe atacuri øi încercæri de destabilizare a Radioului Public øi, nu în ultimul rând, cu dezbaterea naflionalæ care a avut ca subiect menflinerea sau anularea taxei radio-tv. Toate acestea s-au întâmplat în contextul în care Radio România a atins cele mai mari niveluri de audienflæ la scaræ naflionalæ øi localæ, a generat cel mai bun exercifliu financiar din 1928 încoace, a dezvoltat cele mai performante proiecte publice culturale øi educaflionale, atât la nivel naflional 1 SINTEZĂ 4-6 cât øi internaflional. -

Rezultatele Studiului De Audienţă Radio În Bucureşti

Rezultatele Studiului de Audienţă Radio Valul de primăvară 2020 În perioada 6 ianuarie – 26 aprilie 2020, a fost realizat valul de primăvară al Studiului de Audienţă Radio de către IMAS – Marketing şi Sondaje SA şi MERCURY RESEARCH S.R.L. În cadrul cercetării s-a măsurat audienţa posturilor de radio la nivel naţional, urban şi în municipiul Bucureşti. Eşantionul are un volum de 11.010 de persoane, cu o eroare maximă de eşantionare de +/- 0,9%. În mediul urban s-au realizat 7.708 interviuri (eroare maximă de eşantionare +/-1,1%), în mediul rural s-au realizat 3.302 interviuri (eroare maximă de eşantionare +/-1,7%), iar în Bucureşti s-au realizat 1.809 interviuri (eroarea maximă de eşantionare este +/- 2,3%). Universul Universul Studiului de Audienţă Radio este format din persoanele în vârstă de 11 ani şi peste, care nu lucrează în domeniul radio, rezidente în locuinţe neinstituţionalizate din toate localităţile urbane şi rurale din România. Conform datelor statistice ale Institutului Naţional de Statistică, privind populația rezidentă la data de 1 ianuarie 2019, mărimea acestei populaţii la nivel național este de 17.213.341 persoane1. Eşantionul Eşantionul acestui val este compus din două subeşantioane: subeşantionul realizat prin interviuri telefonice (10.480 interviuri - în mediul rural şi mediul urban) şi cel realizat în teren (530 interviuri - în mediul rural). În eşantion au fost incluse 1.875 localităţi, dintre care 67 (toate) localităţi urbane de peste 30.000 de locuitori, 239 de localităţi urbane cu mai puţin de 30.000 de locuitori din cele 253 existente, 340 din cele 399 de localităţi rurale cu peste 5.000 de locuitori şi 1229 din cele 2.462 de localităţi rurale cu mai puţin de 5.000 de locuitori. -

Rezultatele Studiului De Audienţă Radio În Bucureşti

Rezultatele Studiului de Audienţă Radio Valul de vară 2019 În perioada 22 aprilie – 11 august 2019, a fost realizat valul de vară al Studiului de Audienţă Radio de către IMAS – Marketing şi Sondaje SA şi MERCURY RESEARCH S.R.L. În cadrul cercetării s-a măsurat audienţa posturilor de radio la nivel naţional, urban şi în municipiul Bucureşti. Eşantionul are un volum de 10.139 de persoane, cu o eroare maximă de eşantionare de +/- 1%. În mediul urban s-au realizat 7.400 interviuri (eroare maximă de eşantionare +/-1,1%), în mediul rural s-au realizat 2.739 interviuri (eroare maximă de eşantionare +/-1,9%), iar în Bucureşti s-au realizat 1.805 interviuri (eroarea maximă de eşantionare este +/- 2,3%). Universul Universul Studiului de Audienţă Radio este format din persoanele în vârstă de 11 ani şi peste, care nu lucrează în domeniul radio, rezidente în locuinţe neinstituţionalizate din toate localităţile urbane şi rurale din România. Conform datelor statistice ale Institutului Naţional de Statistică, obținute în urma Recensământului populației din anul 2011, mărimea acestei populaţii este de 17.808.907 persoane1 la nivel național, pentru mediul urban este de 9.710.428 persoane, pentru București este de 1.701.186 persoane, iar pentru mediul rural este de 8.098.479 persoane. Eşantionul Studiul de Audienţă Radio s-a realizat pe baza unui eşantion de tip probabilist, stratificat, cu extracţie multistadială. În eşantion au fost incluse 1.533 localităţi, dintre care 67 (toate) localităţi urbane de peste 30.000 de locuitori, 244 de localităţi urbane cu mai puţin de 30.000 de locuitori din cele 253 existente, 289 din cele 399 de localităţi rurale cu peste 5.000 de locuitori şi 933 din cele 2.462 de localităţi rurale cu mai puţin de 5.000 de locuitori. -

Rezultatele Studiului De Audienţă Radio În Bucureşti

Rezultatele Studiului de Audienţă Radio Valul de primăvară 2012 În perioada 16 ianuarie – 29 aprilie 2012 a fost realizat valul de primăvară al Studiului de Audienţă Radio de către IMAS – Marketing şi Sondaje SA şi GfK România – Institut de Cercetare de Piaţă SRL. În cadrul cercetării, s-a măsurat audienţa posturilor de radio la nivel naţional, urban şi în municipiul Bucureşti. Eşantionul are un volum de 9,458 de persoane, cu o eroare maximă de eşantionare de +/- 1.0%. În mediul urban s-au realizat 7,428 interviuri (eroare maximă de eşantionare +/-1.1%), iar în Bucureşti s-au realizat 1,801 interviuri (eroarea maximă de eşantionare este +/- 2.3%). Universul Universul Studiului de Audienţă Radio este format din persoanele în vârstă de 11 ani şi peste, rezidente în locuinţe neinstituţionalizate din toate localităţile urbane şi rurale din România. Conform datelor statistice ale Institutului Naţional de Statistică disponibile la data de 01.01.2012, mărimea acestei populaţii este de 19,030,601 persoane. Eşantionul Studiul de Audienţă Radio s-a realizat pe baza unui eşantion de tip probabilist, stratificat, cu extracţie multistadială. În eşantion au fost incluse 1,148 localităţi dintre care 69 (toate) localităţi urbane de peste 30,000 de locuitori, 236 de localităţi urbane cu mai puţin de 30,000 de locuitori din cele 251 existente, 244 din cele 464 de localităţi rurale cu peste 5,000 de locuitori din România, şi 599 din cele 2,397 de localităţi rurale cu mai puţin de 5,000 de locuitori. Localităţile din eşantionul SAR au reieşit din generarea aleatoare a numerelor de telefon pentru realizarea interviurilor şi au o distribuţie geografică echilibrată la nivel naţional. -

Consiliul National Al Audiovizualului

CONSILIUL NAŢIONAL AL AUDIOVIZUALULUI, AUTORITATE PUBLICĂ AUTONOMĂ ŞI GARANT AL INTERESULUI PUBLIC ÎN DOMENIUL COMUNICĂRII AUDIOVIZUALE, ÎŞI EXERCITĂ ATRIBUŢIILE SUB CONTROLUL PARLAMENTULUI ROMÂNIEI, CĂRUIA ÎI PREZINTĂ, ANUAL, UN RAPORT DE ACTIVITATE CONSILIUL NATIONAL 2015 AL AUDIOVIZUALULUI RAPORTUL DE ACTIVITATE pe anul 2015 RAPORTUL DE ACTIVITATE AL CONSILIULUI NAȚIONAL AL AUDIOVIZUALULUI PE ANUL 2015 CNA - RAPORT ANUAL – 2015 1 CUPRINS MEMBRII CONSILIULUI IN ANUL 2015 ............................................................... 3 PREZENTARE GENERALĂ A ACTIVITĂŢII CNA ÎN ANUL 2015 ..................... 5 TENDINŢE DE EVOLUŢIE A PIEŢEI AUDIOVIZUALE ....................................... 13 ACTIVITATEA DE REGLEMENTARE ........................................................................ 40 RELAŢII EUROPENE .................................................................................................... 55 COMUNICARE ŞI RELAŢII CU PUBLICUL ............................................................ 64 TRANSPARENŢA DECIZIONALĂ ............................................................................. 70 MEDIATIZAREA CNA ÎN MASS-MEDIA ON-LINE .............................................. 71 MONITORIZARE TV ..................................................................................................... 73 MONITORIZARE RADIO ............................................................................................ 84 CONTROL ȘI DIGITALIZARE .................................................................................. -

Suntem Radio România

SUNTEM RADIO ROMÂNIA 2015 RAPORT ANUAL 1 RAPORTUL DE ACTIVITATE AL SOCIETĂȚII ROMÂNE DE RADIODIFUZIUNE PE ANUL 2015 RADIO ROMÂNIA CUVÂNT ÎNAINTE Anul 2015 a fost pentru Radio România un an al consolidării, al confi rmării, din toate CUPRINS punctele de vedere. O creştere consecutivă și consistentă de trei ani reprezintă un atu ce susţine, incontestabil, poziţia de lider pe piaţa media din România a radioului public. Rezultatul remarcabil din 2015 se regăseşte în audienţe solide, într-o misiune culturală şi educativă dusă la un nivel de notorietate și recunoaștere fără precedent, un exerciţiu fi nanciar foarte bun, cu venituri în continuă creştere şi o remarcabilă imagine externă. În 2015, Radio România şi-a consolidat prezenţa şi importanţa în organismele internaţionale din Europa şi 1 SINTEZĂ 4-6 Asia şi rămâne, incontestabil, cel mai important actor cultural media pe plan naţional, cu ecouri foarte importante în întreaga lume. Radio România își urmează strategia și PERFORMEAZĂ. 2 ACTIVITATEA MANAGERIALĂ 7-13 Am putea spune simplu că 2015 a fost un an bun, dar am nedreptăţi efortul conjugat al administrației, al managementului şi al tuturor profesioniştilor din radioul public dacă nu am menționa că anul 2015 a fost în aceeaşi măsură unul foarte greu şi 3 ECONOMIC ȘI FINANCIAR 14-25 încărcat de tensiuni, un an care a dovedit că radioul public are nu numai prieteni, ci şi adversari. Am încheiat cu bine această perioadă, datorită căreia suntem mai puternici, pentru că am devenit mai înţelepţi, mai determinați și mai performanți. 4 EDITORIAL 26-47 Noi, Radio România, am înţeles că misiunea publică este cea care ne pune în slujba ascultătorilor. -

CONSILIUL NATIONAL AL AUDIOVIZUALULUI Adresa: Bd

CONSILIUL NAŢIONAL AL AUDIOVIZUALULUI, AUTORITATE PUBLICĂ AUTONOMĂ ŞI GARANT AL INTERESULUI PUBLIC ÎN DOMENIUL COMUNICĂRII AUDIOVIZUALE, ÎŞI EXERCITĂ ATRIBUŢIILE SUB CONTROLUL PARLAMENTULUI ROMÂNIEI, CĂRUIA ÎI PREZINTĂ, ANUAL, UN RAPORT DE ACTIVITATE CONSILIUL NATIONAL AL AUDIOVIZUALULUI 2020 RAPORTUL DE ACTIVITATE pe anul 2020 RAPORTUL DE ACTIVITATE AL CONSILIULUI NAȚIONAL AL AUDIOVIZUALULUI PE ANUL 2020 CNA - RAPORT ANUAL – 2020 1 CUPRINS MEMBRII CONSILIULUI IN ANUL 2020 ............................................................... 3 ROLUL ȘI MISIUNEA CNA ........................................................................................... 5 PREZENTARE GENERALĂ A ACTIVITĂȚII CNA ÎN ANUL 2020 .................... 11 TENDINŢE DE EVOLUŢIE A PIEŢEI AUDIOVIZUALE ....................................... 19 ACTIVITATEA DE REGLEMENTARE ........................................................................ 44 RELAŢII EUROPENE .................................................................................................... 54 INFORMARE ȘI RELAȚII PUBLICE ......................................................................... 65 MONITORIZARE TV/RADIO ..................................................................................... 75 CONTROL ........................................................................................................................ 97 ACTIVITATEA JURIDICĂ ......................................................................................... 110 SITUAŢIA LITIGIILOR ÎN CARE CNA A FOST IMPLICAT ÎN