TR-08 Transport of JPEG XS Video in ST 2110-22

Total pages: 16

File type: PDF, Size: 1020Kb

Recommended publications

An Improved SPSIM Index for Image Quality Assessment

Symmetry, Article. An Improved SPSIM Index for Image Quality Assessment. Mariusz Frackiewicz *, Grzegorz Szolc and Henryk Palus. Department of Data Science and Engineering, Silesian University of Technology, Akademicka 16, 44-100 Gliwice, Poland; [email protected] (G.S.); [email protected] (H.P.) * Correspondence: [email protected]; Tel.: +48-32-2371066

Abstract: Objective image quality assessment (IQA) measures are playing an increasingly important role in the evaluation of digital image quality. New IQA indices are expected to be strongly correlated with subjective observer evaluations expressed by Mean Opinion Score (MOS) or Difference Mean Opinion Score (DMOS). One such recently proposed index is the SuperPixel-based SIMilarity (SPSIM) index, which uses superpixel patches instead of a rectangular pixel grid. The authors of this paper have proposed three modifications to the SPSIM index. For this purpose, the color space used by SPSIM was changed and the way SPSIM determines similarity maps was modified using methods derived from an algorithm for computing the Mean Deviation Similarity Index (MDSI). The third modification was a combination of the first two. These three new quality indices were used in the assessment process. The experimental results obtained for many color images from five image databases demonstrated the advantages of the proposed SPSIM modifications.

Keywords: image quality assessment; image databases; superpixels; color image; color space; image quality measures

Citation: Frackiewicz, M.; Szolc, G.; Palus, H. An Improved SPSIM Index for Image Quality Assessment. Symmetry 2021, 13, 518. https://doi.org/10.3390/sym13030518

1. Introduction. Quantitative domination of acquired color images over gray level images results in the development not only of color image processing methods but also of Image Quality Assessment (IQA) methods.

Creating 4K/UHD Content Poster

Creating 4K/UHD Content

Colorimetry: The television color specification is based on standards defined by the CIE (Commission Internationale de L’Éclairage) in 1931. The CIE specified an idealized set of primary XYZ tristimulus values. This set is a group of all-positive values converted from R’G’B’ where Y is proportional to the luminance of the additive mix. This specification is used as the basis for color within 4K/UHDTV1 that supports both ITU-R BT.709 and BT.2020.

Figure A2. Using a 100% color bar signal to show conversion of RGB levels from 700 mV (100%) to 0 mV (0%) for each color component with a color bar split field BT.2020 and BT.709 test signal. The WFM8300 was configured for BT.709 colorimetry as shown in the video session display.

Image Format / SMPTE Standards: Square Division separates the image into quad links for distribution. UHDTV 1: 3840x2160 (4x1920x1080).

Table B1: SMPTE Standards

- ST 125: SDTV Component Video Signal Coding for 4:4:4 and 4:2:2 for 13.5 MHz and 18 MHz Systems
- ST 240: Television – 1125-Line High-Definition Production Systems – Signal Parameters
- ST 259: Television – SDTV Digital Signal/Data – Serial Digital Interface
- ST 272: Television – Formatting AES/EBU Audio and Auxiliary Data into Digital Video Ancillary Data Space
- ST 274: Television – 1920 x 1080 Image Sample Structure, Digital Representation and Digital Timing Reference Sequences for Multiple Picture Rates
- ST 296: 1280 x 720 Progressive Image 4:2:2 and 4:4:4 Sample Structure – Analog & Digital Representation & Analog Interface
- ST 299-0/1/2: 24-Bit Digital Audio Format for SMPTE Bit-Serial Interfaces at 1.5 Gb/s and 3 Gb/s – Document Suite

Table A1: Illuminant (Ill.) Value, Source X / Y. Illuminant A: Tungsten Filament Lamp, 2854 K, x = 0.4476, y = 0.4075.

AN9717: Ycbcr to RGB Considerations (Multimedia)

YCbCr to RGB Considerations. Application Note AN9717, March 1997. Author: Keith Jack

Introduction: Many video ICs now generate 4:2:2 YCbCr video data. The YCbCr color space was developed as part of ITU-R BT.601 (formerly CCIR 601) during the development of a world-wide digital component video standard. Some of these video ICs also generate digital RGB video data, using lookup tables to assist with the YCbCr to RGB conversion. By understanding the YCbCr to RGB conversion process, the lookup tables can be eliminated, resulting in a substantial cost savings. This application note covers some of the considerations for converting the YCbCr data to RGB data without the use of lookup tables. The process basically consists of three steps:

Converting 4:2:2 to 4:4:4 YCbCr: Prior to converting YCbCr data to R´G´B´ data, the 4:2:2 YCbCr data must be converted to 4:4:4 YCbCr data. For the YCbCr to RGB conversion process, each Y sample must have a corresponding Cb and Cr sample. Figure 1 illustrates the positioning of YCbCr samples for the 4:4:4 format. Each sample has a Y, a Cb, and a Cr value. Each sample is typically 8 bits (consumer applications) or 10 bits (professional editing applications) per component. Figure 2 illustrates the positioning of YCbCr samples for the 4:2:2 format. For every two horizontal Y samples, there is one Cb and Cr sample. Each sample is typically 8 bits (consumer applications) or 10 bits (professional editing applications) per component.

Color Image Enhancement with Saturation Adjustment Method

Journal of Applied Science and Engineering, Vol. 17, No. 4, pp. 341-352 (2014) DOI: 10.6180/jase.2014.17.4.01 Color Image Enhancement with Saturation Adjustment Method. Jen-Shiun Chiang1, Chih-Hsien Hsia2*, Hao-Wei Peng1 and Chun-Hung Lien3. 1Department of Electrical Engineering, Tamkang University, Tamsui, Taiwan 251. 2Department of Electrical Engineering, Chinese Culture University, Taipei, Taiwan 111. 3Commercialization and Service Center, Industrial Technology Research Institute, Taipei, Taiwan 106

Abstract: In the traditional color adjustment approach, people tried to separately adjust the luminance and saturation. This approach makes the color over-saturate very easily and makes the image look unnatural. In this study, we try to use the concept of exposure compensation to simulate the brightness changes and to find the relationship among luminance, saturation, and hue. The simulation indicates that saturation changes with the change of luminance and the simulation also shows there are certain relationships between color variation model and YCbCr color model. Together with all these symptoms, we also include the human vision characteristics to propose a new saturation method to enhance the vision effect of an image. As results, the proposed approach can make the image have better vivid and contrast. Most important of all, unlike the over-saturation caused by the conventional approach, our approach prevents over-saturation and further makes the adjusted image look natural.

Key Words: Color Adjustment, Human Vision, Color Image Processing, YCbCr, Over-Saturation

1. Introduction: With the advanced development of technology, the ... over-saturated images. An over-saturated image looks unnatural due to the loss of color details in high saturation area of the image.

ColorNet: Investigating the Importance of Color Spaces for Image Classification (arXiv:1902.00267v1 [cs.CV], 1 Feb 2019)

ColorNet: Investigating the importance of color spaces for image classification. Shreyank N Gowda1 and Chun Yuan2. 1 Computer Science Department, Tsinghua University, Beijing 10084, China [email protected] 2 Graduate School at Shenzhen, Tsinghua University, Shenzhen 518055, China [email protected]

Abstract. Image classification is a fundamental application in computer vision. Recently, deeper networks and highly connected networks have shown state of the art performance for image classification tasks. Most datasets these days consist of a finite number of color images. These color images are taken as input in the form of RGB images and classification is done without modifying them. We explore the importance of color spaces and show that color spaces (essentially transformations of original RGB images) can significantly affect classification accuracy. Further, we show that certain classes of images are better represented in particular color spaces and for a dataset with a highly varying number of classes such as CIFAR and Imagenet, using a model that considers multiple color spaces within the same model gives excellent levels of accuracy. Also, we show that such a model, where the input is preprocessed into multiple color spaces simultaneously, needs far fewer parameters to obtain high accuracy for classification. For example, our model with 1.75M parameters significantly outperforms DenseNet 100-12 that has 12M parameters and gives results comparable to Densenet-BC-190-40 that has 25.6M parameters for classification of four competitive image classification datasets namely: CIFAR-10, CIFAR-100, SVHN and Imagenet. Our model essentially takes an RGB image as input, simultaneously converts the image into 7 different color spaces and uses these as inputs to individual densenets.

Color Space Analysis for Iris Recognition

Graduate Theses, Dissertations, and Problem Reports, 2007. Color space analysis for iris recognition. Matthew K. Monaco, West Virginia University. Follow this and additional works at: https://researchrepository.wvu.edu/etd

Recommended Citation: Monaco, Matthew K., "Color space analysis for iris recognition" (2007). Graduate Theses, Dissertations, and Problem Reports. 1859. https://researchrepository.wvu.edu/etd/1859

This Thesis is protected by copyright and/or related rights. It has been brought to you by The Research Repository @ WVU with permission from the rights-holder(s). You are free to use this Thesis in any way that is permitted by the copyright and related rights legislation that applies to your use. For other uses you must obtain permission from the rights-holder(s) directly, unless additional rights are indicated by a Creative Commons license in the record and/or on the work itself. This Thesis has been accepted for inclusion in WVU Graduate Theses, Dissertations, and Problem Reports collection by an authorized administrator of The Research Repository @ WVU. For more information, please contact [email protected].

Color Space Analysis for Iris Recognition by Matthew K. Monaco. Thesis submitted to the College of Engineering and Mineral Resources at West Virginia University in partial fulfillment of the requirements for the degree of Master of Science in Electrical Engineering. Arun A. Ross, Ph.D., Chair; Lawrence Hornak, Ph.D.; Xin Li, Ph.D. Lane Department of Computer Science and Electrical Engineering, Morgantown, West Virginia, 2007

Keywords: Iris, Iris Recognition, Multispectral, Iris Anatomy, Iris Feature Extraction, Iris Fusion, Score Level Fusion, Multimodal Biometrics, Color Space Analysis, Iris Enhancement, Iris Color Analysis, Color Iris Recognition

Copyright 2007 Matthew K.

Application of Contrast Sensitivity Functions in Standard and High Dynamic Range Color Spaces

https://doi.org/10.2352/ISSN.2470-1173.2021.11.HVEI-153 This work is licensed under the Creative Commons Attribution 4.0 International License. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

Color Threshold Functions: Application of Contrast Sensitivity Functions in Standard and High Dynamic Range Color Spaces. Minjung Kim, Maryam Azimi, and Rafał K. Mantiuk. Department of Computer Science and Technology, University of Cambridge

Abstract: Contrast sensitivity functions (CSFs) describe the smallest visible contrast across a range of stimulus and viewing parameters. CSFs are useful for imaging and video applications, as contrast thresholds describe the maximum of color reproduction error that is invisible to the human observer. However, existing CSFs are limited. First, they are typically only defined for achromatic contrast. Second, even when they are defined for chromatic contrast, the thresholds are described along the cardinal dimensions of linear opponent color spaces, and therefore are difficult to relate to the dimensions of more commonly used color spaces,

... vision science. However, it is not obvious as to how contrast thresholds in DKL would translate to thresholds in other color spaces across their color components due to non-linearities such as PQ encoding. In this work, we adapt our spatio-chromatic CSF [12] to predict color threshold functions (CTFs). CTFs describe detection thresholds in color spaces that are more commonly used in imaging, video, and color science applications, such as sRGB and YCbCr. The spatio-chromatic CSF model from [12] can predict detection thresholds for any point in the color space and for any chromatic and achromatic modulation.

Basics of Video

Analog and Digital Video Basics. Nimrod Peleg. Update: May 2006

Video Compression: list of topics

- Analog and Digital Video Concepts
- Block-Based Motion Estimation
- Resolution Conversion
- H.261: A Standard for VideoConferencing
- MPEG-1: A Standard for CD-ROM Based App.
- MPEG-2 and HDTV: All Digital TV
- H.263: A Standard for VideoPhone
- MPEG-4: Content-Based Description

Analog Video Signal: Raster Scan

Odd and Even Scan Lines

Analog Video Signal: Image line

Analog Video Standards

- All video standards are in
- Almost any color can be reproduced by mixing the 3 additive primaries: R (red), G (green), B (blue)
- 3 main different representations:
  - Composite
  - Component or S-Video (Y/C)

Composite Video

Component Analog Video

- Each primary is considered as a separate monochromatic video signal
- Basic presentation: R G B
- Other RGB based:
  - YIQ
  - YCrCb
  - YUV
  - HSI

Composite Video Signal

Encoding the Chrominance over Luminance into one signal (saving bandwidth):

- NTSC (National Television System Committee): North America, Japan
- PAL (Phase Alternating Line): Europe (Including Israel)
- SECAM (Séquentiel Couleur à Mémoire): France, Russia and more

Analog Standards Comparison

| | NTSC | PAL/SECAM |
|---|---|---|
| Defined | 1952 | 1960 |
| Scan Lines/Field | 525/262.5 | 625/312.5 |
| Active horiz. lines | 480 | 576 |
| Subcarrier Freq. | 3.58 MHz | 4.43 MHz |
| Interlacing | 2:1 | 2:1 |
| Aspect ratio | 4:3 | 4:3 |
| Horiz. Resol. (pel/line) | 720 | 720 |
| Frames/Sec | 29.97 | 25 |
| Component Color | TUV | YCbCr |

Analog Video Equipment

- Cameras (Vidicon, Film, CCD)
- Video Tapes (magnetic): Betacam, VHS, SVHS, U-matic, 8mm ...

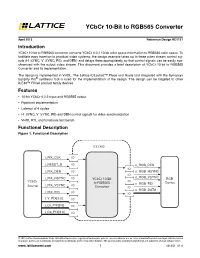

Ycbcr 10-Bit to RGB565 Converter

YCbCr 10-Bit to RGB565 Converter. April 2013. Reference Design RD1151

Introduction: YCbCr 10-bit to RGB565 converter converts YCbCr 4:2:2 10-bit color space information to RGB565 color space. To facilitate easy insertion to practical video systems, the design example takes up to three video stream control signals (H_SYNC, V_SYNC, FID, and DEN) and delays them appropriately, so that control signals can be easily synchronized with the output video stream. This document provides a brief description of YCbCr 10-bit to RGB565 Converter and its implementation. The design is implemented in VHDL. The Lattice iCEcube2™ Place and Route tool integrated with the Synopsys Synplify Pro® synthesis tool is used for the implementation of the design. The design can be targeted to other iCE40™ FPGA product family devices.

Features

- 10-bit YCbCr 4:2:2 input and RGB565 output
- Pipelined implementation
- Latency of 4 cycles
- H_SYNC, V_SYNC, FID and DEN control signals for video synchronization
- VHDL RTL and functional test bench

Functional Description

Figure 1. Functional Description (block diagram of the YCbCr 10-Bit to RGB565 Converter on an iCE device between a YCbCr source and an RGB device; inputs shown: i_PIX_CLK, i_RESET_B, i_PIX_DEN, i_PIX_HSYNC, i_PIX_VSYNC, i_PIX_FID, i_Y_PIX[9:0], i_Cr_PIX[9:0], i_Cb_PIX[9:0]; outputs shown: o_RGB_DEN, o_RGB_HSYNC, o_RGB_VSYNC, o_RGB_FID, o_RGB_DATA)

© 2013 Lattice Semiconductor Corp. All Lattice trademarks, registered trademarks, patents, and disclaimers are as listed at www.latticesemi.com/legal. All other brand or product names are trademarks or registered trademarks of their respective holders. The specifications and information herein are subject to change without notice.

Color Spaces

Chapter 3: Color Spaces

A color space is a mathematical representation of a set of colors. The three most popular color models are RGB (used in computer graphics); YIQ, YUV, or YCbCr (used in video systems); and CMYK (used in color printing). However, none of these color spaces are directly related to the intuitive notions of hue, saturation, and brightness. This resulted in the temporary pursuit of other models, such as HSI and HSV, to simplify programming, processing, and end-user manipulation. All of the color spaces can be derived from the RGB information supplied by devices such as cameras and scanners.

RGB Color Space

The red, green, and blue (RGB) color space is widely used throughout computer graphics. Red, green, and blue are three primary additive colors (individual components are added together to form a desired color) and are represented by a three-dimensional, Cartesian coordinate system (Figure 3.1). The indicated diagonal of the cube, with equal amounts of each primary component, represents various gray levels. Table 3.1 contains the RGB values for 100% amplitude, 100% saturated color bars, a common video test signal.

Figure 3.1. The RGB Color Cube. (Vertices labeled: black, red, green, blue, yellow, cyan, magenta, white.)

| | Nominal Range | White | Yellow | Cyan | Green | Magenta | Red | Blue | Black |
|---|---|---|---|---|---|---|---|---|---|
| R | 0 to 255 | 255 | 255 | 0 | 0 | 255 | 255 | 0 | 0 |
| G | 0 to 255 | 255 | 255 | 255 | 255 | 0 | 0 | 0 | 0 |
| B | 0 to 255 | 255 | 0 | 255 | 0 | 255 | 0 | 255 | 0 |

Table 3.1.

Color Image Segmentation Using Perceptual Spaces Through Applets for Determining and Preventing Diseases in Chili Peppers

African Journal of Biotechnology Vol. 12(7), pp. 679-688, 13 February, 2013. Available online at http://www.academicjournals.org/AJB DOI: 10.5897/AJB12.1198 ISSN 1684–5315 ©2013 Academic Journals. Full Length Research Paper

Color image segmentation using perceptual spaces through applets for determining and preventing diseases in chili peppers. J. L. González-Pérez1, M. C. Espino-Gudiño2, J. Gudiño-Bazaldúa3, J. L. Rojas-Rentería4, V. Rodríguez-Hernández5 and V.M. Castaño6

1Computer and Biotechnology Applications, Faculty of Engineering, Autonomous University of Queretaro, Cerro de las Campanas s/n, C.P. 76010, Querétaro, Qro., México. 2Faculty of Psychology, Autonomous University of Queretaro, Cerro de las Campanas s/n, C.P. 76010, Querétaro, Qro., México. 3Faculty of Languages Letters, Autonomous University of Queretaro, Cerro de las Campanas s/n, C.P. 76010, Querétaro, Qro., México. 4New Information and Communication Technologies, Faculty of Engineering, Department of Intelligent Buildings, Autonomous University of Queretaro, Cerro de las Campanas s/n, C.P. 76010, Querétaro, Qro., México. 5New Information and Communication Technologies, Faculty of Computer Science, Autonomous University of Queretaro, Cerro de las Campanas s/n, C.P. 76010, Querétaro, Qro., México. 6Center for Applied Physics and Advanced Technology, National Autonomous University of Mexico.

Accepted 5 December, 2012

Plant pathogens cause disease in plants. Chili peppers are one of the most important crops in the world. There are currently disease detection techniques classified as: biochemical, microscopy, immunology, nucleic acid hybridization, identification by visual inspection in vitro or in situ but these have the following disadvantages: they require several days, their implementation is costly and highly trained.

Image Chroma Enhancement with Quality Preservation

International Journal of Engineering Research & Technology (IJERT) ISSN: 2278-0181 Vol. 3 Issue 8, August - 2014. Image Chroma Enhancement with Quality Preservation

Haripriya A P, MTech student (Communication Engineering and signal processing), Department of ECE, Govt. Engineering College Thrissur, Thrissur District, Kerala, India. Riyas K K, Asst. Professor, Department of ECE, Govt. Engineering College Thrissur, Thrissur District, Kerala, India

Abstract: The goal of Image enhancement in general is to provide a more appealing image or video to achieve more vivid or realistic colors and to increase the visibility of details. The chroma enhancements are intimately related to different attributes of visual sensation and it helps to achieve the best possible combinations of colorfulness and contrast in an efficient manner. In practice one of the key issues in the image chroma enhancement applications is the strong contrast reduction of the enhanced image. While there exists several contrast enhancement approaches for improving the image contrast, it is still an active field of research. Nowadays most of the High Definition industrial cameras and projectors offer images with high chroma details.

... automated image processing techniques. Image enhancement processes consist of a collection of techniques that seek to improve the visual appearance of an image or to convert the image to a form better suited for analysis by a human or a machine [1]. The goal of chroma enhancement can be either to increase the colorfulness, or to increase the saturation. The best way to increase the chroma without a lightness change is to use a more sophisticated color model, such as CIELAB or CIECAM02, rather than YCbCr. Any color algorithms using the CIELAB or CIECAM02 models guarantee the same color