Data Networks

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Data Communication and Computer Network Unit 1

Department of Collegiate Education GOVERNMENT FIRST GRADE COLLEGE RAIBAG-591317 Department of Computer Science Lecture Notes SUBJECT : DATA COMMUNICATIONS AND COMPUTER NETWORKS SUBJECT CODE : 17BScCST61 CLASS : BSC VI Sem Paper-1 Subject In charge Smt Bhagirathi Halalli Assistant Professor 2019-20 Data Communication and Computer Network Unit 1 Unit 1: Introduction Content: 1.1. Data communications, 1.2. Networks, 1.3. The internet, 1.4. Protocols and standards, 1.5. Network models – OSI model, 1.6. TCP/IP protocol suite, 1.7. Addressing. 1.1.Data Communications, Data refers to the raw facts that are collected while information refers to processed data that enables us to take decisions. Ex. When result of a particular test is declared it contains data of all students, when you find the marks you have scored you have the information that lets you know whether you have passed or failed. The word data refers to any information which is presented in a form that is agreed and accepted upon by is creators and users. Data Communication Data Communication is a process of exchanging data or information In case of computer networks this exchange is done between two devices over a transmission medium. This process involves a communication system which is made up of hardware and software. The hardware part involves the sender and receiver devices and the intermediate devices through which the data passes. The software part involves certain rules which specify what is to be communicated, how it is to be communicated and when. It is also called as a Protocol. The following sections describe the fundamental characteristics that are important for the effective working of data communication process and are followed by the components that make up a data communications system. -

The OSI Model

Data Encapsulation & OSI & TCP/IP Models Week 2 Lecturer: Lucy White [email protected] Office : 324 1 Network Protocols • A protocol is a formal description of a set of rules and conventions that govern a particular aspect of how devices on a network communicate. Protocols determine the format, timing, sequencing, flow control and error control in data communication. Without protocols, the computer cannot make or rebuild the stream of incoming bits from another computer into the original format. • Protocols control all aspects of data communication, which include the following: - How the physical network is built - How computers connect to the network - How the data is formatted for transmission - The setting up and termination of data transfer sessions - How that data is sent - How to deal with errors 2 Protocol Suites & Industry Standard • Many of the protocols that comprise a protocol suite reference other widely utilized protocols or industry standards • Institute of Electrical and Electronics Engineers (IEEE) or the Internet Engineering Task Force (IETF) • The use of standards in developing and implementing protocols ensures that products from different manufacturers can work together for efficient communications 3 Function of Protocol in Network Communication A standard is a process or protocol that has been endorsed by the networking industry and ratified by a standards organization 4 Protocol Suites TCP/IP Protocol Suite and Communication Function of Protocol in Network Communication 6 Function of Protocol in Network Communication • Technology independent Protocols -Many diverse types of devices can communicate using the same sets of protocols. This is because protocols specify network functionality, not the underlying technology to support this functionality. -

Logical Link Control and Channel Scheduling for Multichannel Underwater Sensor Networks

ICST Transactions on Mobile Communications and Applications Research Article Logical Link Control and Channel Scheduling for Multichannel Underwater Sensor Networks Jun Li ∗, Mylene` Toulgoat, Yifeng Zhou, and Louise Lamont Communications Research Centre Canada, 3701 Carling Avenue, Ottawa, ON. K2H 8S2 Canada Abstract With recent developments in terrestrial wireless networks and advances in acoustic communications, multichannel technologies have been proposed to be used in underwater networks to increase data transmission rate over bandwidth-limited underwater channels. Due to high bit error rates in underwater networks, an efficient error control technique is critical in the logical link control (LLC) sublayer to establish reliable data communications over intrinsically unreliable underwater channels. In this paper, we propose a novel protocol stack architecture featuring cross-layer design of LLC sublayer and more efficient packet- to-channel scheduling for multichannel underwater sensor networks. In the proposed stack architecture, a selective-repeat automatic repeat request (SR-ARQ) based error control protocol is combined with a dynamic channel scheduling policy at the LLC sublayer. The dynamic channel scheduling policy uses the channel state information provided via cross-layer design. It is demonstrated that the proposed protocol stack architecture leads to more efficient transmission of multiple packets over parallel channels. Simulation studies are conducted to evaluate the packet delay performance of the proposed cross-layer protocol stack architecture with two different scheduling policies: the proposed dynamic channel scheduling and a static channel scheduling. Simulation results show that the dynamic channel scheduling used in the cross-layer protocol stack outperforms the static channel scheduling. It is observed that, when the dynamic channel scheduling is used, the number of parallel channels has only an insignificant impact on the average packet delay. -

Communications

The Essentials of Datalink Communications The origins and course of Air-to-Ground Messaging The Essentials of Datalink Communications Contents international Trip Support | international Trip The Technology that HR Created 02 Inmarsat Satellite 06 Growing into an Operational Necessity 03 Iridium Satellite 07 UAS Communications Mechanisms 04 Upcoming Regulations 10 VHF Radio 05 The Future of ACARS 11 © Copyright 2016 Page 1/12 The Technology that HR Created t one time in the not-so-distant past, pilots and other ARINC’s solution was an automated system, called the A flight crew members were paid different rates for the ARINC Communications Addressing and Reporting System, time they were airborne versus the time they were performing or ACARS for short, which sent short text data from the ground operations. Events like aircraft pushback, taxi, takeoff, avionics of the aircraft directly to the ground-based entities landing, and gate arrival were transmitted via voice over radio through Very High Frequency (VHF) radio frequencies frequencies to operators who would relay this information back without any crewmember involvement. The aircraft was to the airlines. The pilots were responsible for self-reporting programmed to take advantage of switches and automation their own times and movements. Understanding that people points on the aircraft, resulting in the creation of a set of can sometimes be forgetful, or worse, willfully manipulative, messages referred to as the OOOI report. An OOOI report is the major airlines began searching for a solution that tracked any of four messages: Out, Off, On and In. Still in wide-scale crewmember pay in a more structured and accurate way. -

Telematics Chapter 3: Physical Layer

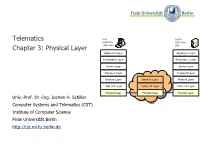

Telematics User Server watching with video Chapter 3: Physical Layer video clip clips Application Layer Application Layer Presentation Layer Presentation Layer Session Layer Session Layer Transport Layer Transport Layer Network Layer Network Layer Network Layer Data Link Layer Data Link Layer Data Link Layer Physical Layer Physical Layer Physical Layer Univ.-Prof. Dr.-Ing. Jochen H. Schiller Computer Systems and Telematics (CST) Institute of Computer Science Freie Universität Berlin http://cst.mi.fu-berlin.de Contents ● Design Issues ● Theoretical Basis for Data Communication ● Analog Data and Digital Signals ● Data Encoding ● Transmission Media ● Guided Transmission Media ● Wireless Transmission (see Mobile Communications) ● The Last Mile Problem ● Multiplexing ● Integrated Services Digital Network (ISDN) ● Digital Subscriber Line (DSL) ● Mobile Telephone System Univ.-Prof. Dr.-Ing. Jochen H. Schiller ▪ cst.mi.fu-berlin.de ▪ Telematics ▪ Chapter 3: Physical Layer 3.2 Design Issues Univ.-Prof. Dr.-Ing. Jochen H. Schiller ▪ cst.mi.fu-berlin.de ▪ Telematics ▪ Chapter 3: Physical Layer 3.3 Design Issues ● Connection parameters ● mechanical OSI Reference Model ● electric and electronic Application Layer ● functional and procedural Presentation Layer ● More detailed ● Physical transmission medium (copper cable, Session Layer optical fiber, radio, ...) ● Pin usage in network connectors Transport Layer ● Representation of raw bits (code, voltage,…) Network Layer ● Data rate ● Control of bit flow: Data Link Layer ● serial or parallel transmission of bits Physical Layer ● synchronous or asynchronous transmission ● simplex, half-duplex, or full-duplex transmission mode Univ.-Prof. Dr.-Ing. Jochen H. Schiller ▪ cst.mi.fu-berlin.de ▪ Telematics ▪ Chapter 3: Physical Layer 3.4 Design Issues Transmitter Receiver Source Transmission System Destination NIC NIC Input Abcdef djasdja dak jd ashda kshd akjsd asdkjhasjd as kdjh askjda Univ.-Prof. -

Radio Communications in the Digital Age

Radio Communications In the Digital Age Volume 1 HF TECHNOLOGY Edition 2 First Edition: September 1996 Second Edition: October 2005 © Harris Corporation 2005 All rights reserved Library of Congress Catalog Card Number: 96-94476 Harris Corporation, RF Communications Division Radio Communications in the Digital Age Volume One: HF Technology, Edition 2 Printed in USA © 10/05 R.O. 10K B1006A All Harris RF Communications products and systems included herein are registered trademarks of the Harris Corporation. TABLE OF CONTENTS INTRODUCTION...............................................................................1 CHAPTER 1 PRINCIPLES OF RADIO COMMUNICATIONS .....................................6 CHAPTER 2 THE IONOSPHERE AND HF RADIO PROPAGATION..........................16 CHAPTER 3 ELEMENTS IN AN HF RADIO ..........................................................24 CHAPTER 4 NOISE AND INTERFERENCE............................................................36 CHAPTER 5 HF MODEMS .................................................................................40 CHAPTER 6 AUTOMATIC LINK ESTABLISHMENT (ALE) TECHNOLOGY...............48 CHAPTER 7 DIGITAL VOICE ..............................................................................55 CHAPTER 8 DATA SYSTEMS .............................................................................59 CHAPTER 9 SECURING COMMUNICATIONS.....................................................71 CHAPTER 10 FUTURE DIRECTIONS .....................................................................77 APPENDIX A STANDARDS -

Global Operational Data Link Document (GOLD)

Global Operational Data Link Document (GOLD) This edition has been issued by the GOLD ad hoc Working Group for the Asia/Pacific Air Navigation Planning and Implementation Regional Group (APANPIRG), the North Atlantic Systems Planning Group (NAT SPG), the European Air Navigation Planning Group (EANPG), the South American Region Implementation Group (SAM/IG) and the African-Indian Ocean Planning and Implementation Regional Group (APIRG). Second Edition — 26 April 2013 International Civil Aviation Organization GOLD (1) Second Edition — 26 April 2013 This document is available by accessing any of the following ICAO regional websites. Asia and Pacific (APAC) Office http://www.icao.int/apac Eastern and Southern African (ESAF) Office www.icao.int/esaf European and North Atlantic (EUR/NAT) Office http://www.paris.icao.int Middle East (MID) Office www.icao.int/mid North American, Central American and Caribbean (NACC) Office http://www.mexico.icao.int South American (SAM) Office http://www.lima.icao.int Western and Central African (WACAF) Office http://www.icao.int/wacaf For more information, contact the ICAO regional office. Global Operational Data Link Document (GOLD) This edition has been issued by the GOLD ad hoc Working Group for the Asia/Pacific Air Navigation Planning and Implementation Regional Group (APANPIRG), the North Atlantic Systems Planning Group (NAT SPG), the European Air Navigation Planning Group (EANPG), the South American Region Implementation Group (SAM/IG) and the African-Indian Ocean Planning and Implementation Regional Group (APIRG). Second Edition — 26 April 2013 International Civil Aviation Organization GOLD (i) Second Edition — 26 April 2013 (ii) Global Operational Data Link Document (GOLD) AMENDMENTS The issue of amendments is announced by the ICAO Regional Offices concerned, which holders of this publication should consult. -

Android and Wireless Data-Extraction Using Wi-Fi

Android and Wireless data-extraction using Wi-Fi Bert Busstra N-A. Le-Khac, M-Tahar Kechadi School of Computer Science & Informatics School of Computer Science & Informatics University College Dublin University College Dublin Dublin 4, Ireland Dublin 4, Ireland [email protected] {an.lekhac, tahar.kechadi}@ucd.ie Abstract—Today, mobile phones are very popular, fast growing To investigate a mobile device an investigator needs to technology. Mobile phones of the present day are more and more interact with the device directly. The examiner must be like small computers. The so-called "smartphones" contain a sufficiently trained to examine the device. He has to think wealth of information each. This information has been proven to before he acts, because he must know exactly what effects his be very useful in crime investigations, because relevant evidence actions have on the data, and he must be prepared to prove it. If can be found in data retrieved from mobile phones used by criminals. In traditional methods, the data from mobile phones the examiner did change the data, he must be prepared to can be extracted using an USB-cable. However, for some reason explain why it was necessary and what data has changed. For this USB-cable connection cannot be made, the data should be this reason the examiner should document every action taken. extracted in an alternative way. In this paper, we study the In a traditional way, the data from mobile devices can be possibility of extracting data from mobile devices using a Wi-Fi extracted using an USB-cable. -

Sharing a Common Medium: Media Access Protocols

MIT 6.02 DRAFT Lecture Notes Fall 2010 (Last update: October 18, 2010) Comments, questions or bug reports? Please contact [email protected] LECTURE 10 Sharing a Common Medium: Media Access Protocols These are the lecture notes for Lectures 10 and 11 in Fall 2010. In this course so far, we have studied various techniques to develop a point-to-point link between two nodes communicating over a channel. The link includes techniques to: synchronize the receiver with the sender; ensure that there are enough transitions between voltage levels (e.g., using 8b/10b encoding); to cope with inter-symbol interference and noise; and to use channel coding to correct and detect bit errors. There are many communication channels, notably radio (wireless) and certain kinds of wired links (coaxial cables), where multiple nodes can all be connected and hear each other’s transmissions (either perfectly or to varying degrees). The next few lectures ad- dress the fundamental question of how such a common communication channel—also called a shared medium—can be shared between the different nodes. We will study two fundamental ways of sharing a medium: time sharing and frequency sharing. The idea in time sharing is to have the nodes coordinate with each other to divide up the access to the medium one at a time, in some fashion. The idea in frequency sharing is to divide up the frequency range available between the different transmitting nodes in a way that there is little or no interference between concurrently transmitting nodes. This lecture and the next one focus on approaches to time sharing. -

Data Links Functions, Attributes and Latency

Nichols, Ryan, Mumm, Lonstein, & Carter Chapter 13: Data Links Functions, Attributes and Latency Student Learning Objectives The student will learn about the data-link function of the UAS which allows for bi-directional communication and data transmissions between UAV and its ground station. The Data Link is a vital component of an UAS. The student will learn the respective functions of each component part and considerations are necessary and how to evaluate their importance when developing a UAS. While the focus of the lesson will be on military applications, the considerations will be equally important for those designing and deploying UAS for civilian purposes. While the design of the Datalink must have requisite attributes that allow the system to function as intended in various environments globally, it must be able to do so securely and effectively. Issues such as Data Link security, interception, deception and signal latency are all attributes that must be balanced to achieve fast and secure data communications between the components of the UAS. What are the Types of UAV’s and how are they Categorized? UAV’s are most often divided into four categories based upon their mission duration and operational radius. High Altitude, Long Endurance (“HALE”) most often deployed for reconnaissance, interception or attack; Medium altitude, moderate range most often used for reconnaissance and combat effect assessment; Low cost, short range small UAV’s. (See Figure 13-1.) Components of the UAS Data-Link and their functions The UAV and Ground Control Station There are four essential communication and data processing operations the UAS must be able to efficiently and effectively carry out. -

High-Level Data Link Control

ELEC3030 (EL336) Computer Networks S Chen High-Level Data Link Control • This class of data link layer protocols includes High-level Data Link Control (HDLC), Link Access Procedure Balanced (LAPB) for X.25, Link Access Procedure for D-channel (LAPD) for ISDN, and Logic Link Control (LLC) for FDDI • The frame format is: Flag Address Control Data FCS Flag Note that address and control bits 8 8 8 variable 16 8 can be extended to 16 bits, so bit position 12 3 4 5 6 7 8 N(S)=send sequence number N(R)=receive sequence number that sequence number is 7-bit Information: 0 N(S) P/F N(R) S=supervisory function bits P/F • Frame flag: 01111110, so bit Supervisory: 1 0 S N(R) M=unumbered function bits stuffing is used Unumbered: 1 1 M P/F M P/F=poll/final bit • Address: For multipoint operation, it is used to identify the terminal that transmits or receives the frame and, in point-to-point link, it is used to distinguish Commands from Responses (2nd bit for C/R: 0/1). The address is now extended to 16 bits (1st bit indicates long/short 16/8 bits) • Checksum: FCS contains the remainder of a 16-bit CRC calculation of the frame. It may be extended to 32 bits, using a 32-bit CRC • Control: Three types of frames, I, S and U frames. The old protocol uses a sliding window with 3-bit sequence number and the maximum window size is N = 7. The control field is now extended to 16 bits with 7-bit sequence number 60 ELEC3030 (EL336) Computer Networks S Chen HDLC (continue) • I-frames: carry user data. -

Sprint Pcs Data Link - Wireless Wan Product Annex

SPRINT PCS DATA LINK - WIRELESS WAN PRODUCT ANNEX The following terms and conditions in this Sprint PCS Data Link – Wireless WAN Product Annex (“Annex”), together with the Sprint Standard Terms and Conditions for Communications Services (“Standard Terms and Conditions”), the agreement (“Agreement”) under which Customer is purchasing Sprint PCS Data Link – Wireless WAN, and the agreement (“Wireline Agreement”) under which Customer purchased wireline services from Sprint needed to operate Sprint PCS Data Link – Wireless WAN (if purchased from Sprint), govern Sprint’s provision of Sprint PCS Data Link – Wireless WAN to Customer. Terms not otherwise defined herein will have the meanings set forth in the Standard Terms and Conditions, Agreement, the Wireless Services Product Annex and Wire Line Agreement. 1. DESCRIPTION 1.1. Sprint PCS Data Link - Wireless WAN requires a dedicated connection between the Nationwide Sprint PCS® Network and Customer’s wireline network. Customer has three options for this dedicated connection: MPLS VPN, IP VPN or SprintLink Frame Relay. A CDMA wireless modem or other Sprint- certified CDMA wireless telemetry device is used to connect wireless devices to Customer’s wireline network. Customer must obtain MPLS VPN, IP VPN or SprintLink Frame Relay services under the Wireline Agreement or through another provider acceptable to Sprint, in its sole discretion. 1.2. Connection from the wireless device is established through a user name and password-protected login. Keying on the domain portion of the user name – for example, @yourcompany.com – the Sprint PCS Authentication, Authorization, Accounting (“AAA”) Server proxies authentication to the AAA Server hosted by Sprint, or to the AAA behind Customer’s firewall through a secure IPSec tunnel MPLS VPN or SprintLink Frame Relay PVC that's established between the Nationwide Sprint PCS® Network and the Customer’s wireline network.