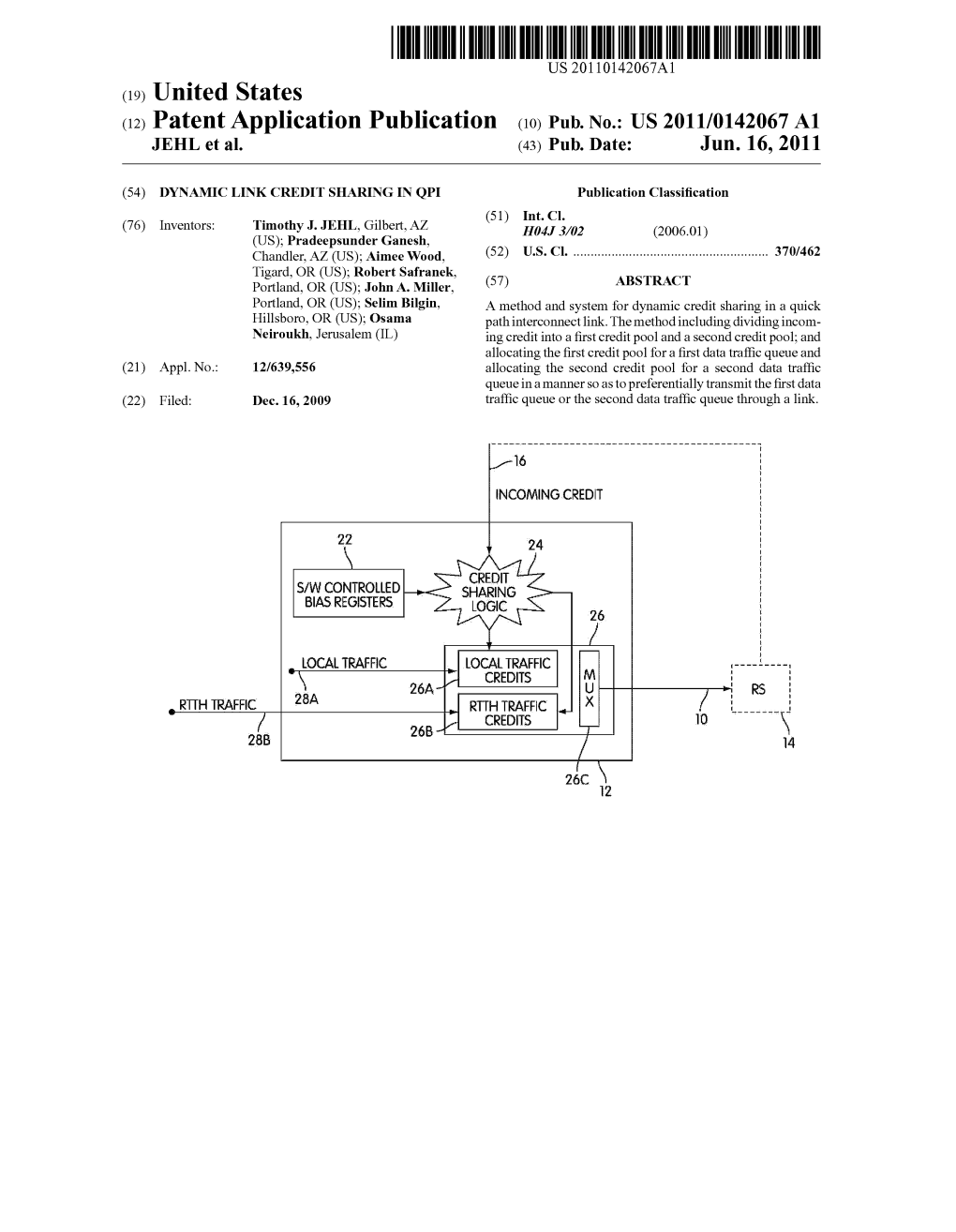

(12) Patent Application Publication (10) Pub. No.: US 2011/0142067 A1, Jehl et al.

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Extended Lifecycle Series

MAINBOARD D2778-D, Issue April 2010, ATX, Extended Lifecycle Series, 2 pages. Intel® X58 Express Chipset for DDR3-1333 SDRAM memory (ECC support) and Intel® Xeon® ("Nehalem" and "Westmere") and Core™ i7 processors.

Chipset: Intel® X58 Express Chipset (Tylersburg-36S / ICH10-R). Memory: 6 DIMM sockets, DDR3 800 / 1066 / 1333 SDRAM, max. 24 GB, single / dual / triple channel, single rank / dual rank unbuffered, ECC / non-ECC (ECC support depends on processor). Processors: Intel® Xeon® Processor 5500 / 5600 series (Nehalem-EP / Westmere), Intel® Xeon® Processor 3500 / 3600 series (Nehalem-WS / Westmere), Intel® Core™ i7 Processor series; compatible with Intel® LGA1366 processors (up to 130 W TDP) supporting Intel QuickPath Interconnect. Product features: dual PCIe x16 (Gen2), 5.1 multichannel audio, LSI FW322 FireWire™, USB 2.0, GbE LAN supporting ASF 2.0, Serial ATA II RAID, Trusted Platform Module V1.2, Intel® QuickPath technology. Audio: Realtek ALC663, 5.1 multichannel, High Definition Audio. Board size: ATX, 12" x 9.6" (304.8 x 243.8 mm). LAN: Realtek 8111DP Ethernet controller, 10/100/1000 Mbit/s, DASH 1.1, Wake-on-LAN (WoL) by interesting packets, link status change and Magic Packet™, PXE support, BIOS MAC address display. Drives: 6 Serial ATA II 300 interfaces (up to 3 Gbit/s, NCQ); RAID 0, 1, 10, 5. Socket: Socket B (LGA1366), max. …
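
As a rough cross-check of the memory specification above (not a figure taken from the datasheet itself), peak theoretical DDR3 bandwidth can be estimated as the data rate in transfers per second, times 8 bytes per transfer for one 64-bit channel, times the number of populated channels. The sketch below assumes exactly that simple model and ignores refresh, command overhead and real-world efficiency; the function and constant names are illustrative only.

```python
# Peak theoretical DDR3 bandwidth (assumed model):
#   data rate (MT/s) x 8 bytes per transfer (64-bit channel) x channels.
# Ignores refresh, command overhead and real-world efficiency.

BYTES_PER_TRANSFER = 8  # 64-bit data bus per DDR3 channel


def peak_bandwidth_gbs(mega_transfers: float, channels: int = 1) -> float:
    """Return peak bandwidth in GB/s (10**9 bytes per second)."""
    return mega_transfers * 1e6 * BYTES_PER_TRANSFER * channels / 1e9


# Nominal DDR3 data rates; "1066" and "1333" are rounded labels
# for 1066.67 and 1333.33 MT/s.
DDR3_RATES = {"DDR3-800": 800.0, "DDR3-1066": 3200 / 3, "DDR3-1333": 4000 / 3}

if __name__ == "__main__":
    # Single, dual and triple channel, matching the modes listed above.
    for name, rate in DDR3_RATES.items():
        for channels in (1, 2, 3):
            gbs = peak_bandwidth_gbs(rate, channels)
            print(f"{name} x{channels} channel(s): {gbs:.1f} GB/s")
```

Under these assumptions, triple-channel DDR3-1333, the fastest configuration listed for this board, works out to roughly 32 GB/s.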

User Guide XFX X58i Motherboard

User Guide XFX X58i Motherboard. Table of Contents:

- Chapter 1 Introduction
  - 1.1 Package Checklist
  - 1.2 Specifications
  - 1.3 Mainboard Layout
  - 1.4 Connecting Rear Panel I/O Devices
- Chapter 2 Hardware Setup
  - 2.1 Choosing a Computer Chassis
  - 2.2 Installing the Mainboard
  - 2.3 Installation of the CPU and CPU Cooler
    - 2.3.1 Installation of the CPU
    - 2.3.2 Installation of the CPU Cooler
  - 2.4 Installation of Memory Modules
  - 2.5 Connecting Peripheral Devices
    - 2.5.1 …

Fuzhouxiang Import/Export (Shunxiang) Co., Ltd

Fuzhouxiang Import/Export (Shunxiang) Co., Ltd. We always insist on the principle "Quality of product & benefit for customer at first forever" and assure you that all products we supply are, without exception, quality goods. Over the past several years we have built good relationships with our customers through a 100% quality guarantee, the most competitive prices, prompt delivery and the best service. Please join us; you will feel our sincerity, fairness and conscience. Apple MacBook Pro MGXA2LL/A 15-Inch Laptop Notebook Computer with Retina Display (2.2 GHz Intel Core i7 Processor, 16 GB RAM, 256 GB HDD): 2.2 GHz quad-core Intel Core i7 processor (Turbo Boost up to 3.4 GHz, 6 MB shared L3 cache); 16 GB 1600 MHz DDR3L RAM; 256 GB PCIe-based flash storage; 15.4-inch IPS Retina display, 2880-by-1800 resolution; Intel Iris Pro Graphics; OS X Mavericks; up to 8 hours of battery life. Apple MacBook Pro 15" with AppleCare+ Z0SH0000N CTO with Touch Bar: 2.9 GHz quad-core Intel Core i7, 2 TB, Space Gray. MacBook Pro with Touch Bar: 2.9 GHz quad-core Intel Core i7, Turbo Boost up to 3.8 GHz, with 6 MB shared L3 cache (configurable to 2.9 GHz quad-core Intel Core i7, Turbo Boost up to 3.8 GHz, with 8 MB shared L3 cache); 2 TB PCIe-based onboard SSD; 16 GB of 2133 MHz LPDDR3 onboard memory; 15.4-inch (diagonal) LED-backlit display with IPS technology, 2880-by-1800 native resolution at 220 pixels per inch with support for millions of colors, 500 nits brightness; Radeon Pro 460 with …

Intel® Desktop Board DX58SO Technical Product Specification

Taken from www.wit.ru. Intel® Desktop Board DX58SO Technical Product Specification, October 2008. Order Number: E51290-001US. The Intel® Desktop Board DX58SO may contain design defects or errors known as errata that may cause the product to deviate from published specifications. Current characterized errata are documented in the Intel Desktop Board DX58SO Specification Update. Revision History: revision -001 (October 2008), first release of the Intel® Desktop Board DX58SO Technical Product Specification. This product specification applies to only the standard Intel® Desktop Board DX58SO with BIOS identifier SOX5810J.86A. Changes to this specification will be published in the Intel Desktop Board DX58SO Specification Update before being incorporated into a revision of this document. INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL’S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. UNLESS OTHERWISE AGREED IN WRITING BY INTEL, THE INTEL PRODUCTS ARE NOT DESIGNED NOR INTENDED FOR ANY APPLICATION IN WHICH THE FAILURE OF THE INTEL PRODUCT COULD CREATE A SITUATION WHERE PERSONAL INJURY OR DEATH MAY OCCUR. All Intel® desktop boards are evaluated as Information Technology Equipment (I.T.E.) for use in personal computers (PC) for installation in homes, offices, schools, computer rooms, and similar locations.

Intel® Desktop Board DX58SO2 Technical Product Specification

Intel® Desktop Board DX58SO2 Technical Product Specification, November 2010. Order Number: G13828-001US. The Intel Desktop Board DX58SO2 may contain design defects or errors known as errata that may cause the product to deviate from published specifications. Current characterized errata are documented in the Intel Desktop Board DX58SO2 Specification Updates. Revision History: revision -001 (November 2010), first release of the Intel® Desktop Board DX58SO2 Technical Product Specification. This product specification applies to only the standard Intel® Desktop Board DX58SO2 with BIOS identifier SOX5820J.86A. Changes to this specification will be published in the Intel Desktop Board DX58SO2 Specification Update before being incorporated into a revision of this document. INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL’S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. UNLESS OTHERWISE AGREED IN WRITING BY INTEL, THE INTEL PRODUCTS ARE NOT DESIGNED NOR INTENDED FOR ANY APPLICATION IN WHICH THE FAILURE OF THE INTEL PRODUCT COULD CREATE A SITUATION WHERE PERSONAL INJURY OR DEATH MAY OCCUR. All Intel® desktop boards are evaluated as Information Technology Equipment (I.T.E.) for use in personal computers (PC) for installation in homes, offices, schools, computer rooms, and similar locations.

Shuttle XPC Barebone SX58J3 – Special Product Features

Product Specification: SX58J3, a high-performance mini-PC barebone for Intel Core i7 CPUs with up to 6 cores. Faster and smaller than probably any other machine on the market! This has been achieved by using Intel Core i7 processors with 4 or 6 cores and up to 16 GB of DDR3 memory on an X58 Express platform. The SX58J3 Barebone also includes two PCI-Express interfaces that support ATI CrossFire and NVIDIA SLI technologies. For ultra-high speeds, even in network settings, this model comes with two Gigabit interfaces that can be combined if needed. Users will also welcome the huge range of easy-access connection options, with 10x USB and eSATA on the front and back panels. Finally, the 80 PLUS-certified 500 W power supply guarantees reliable performance.

Feature Highlights:

- J3 chassis: black aluminium chassis cover; drive bays: 1x 5.25", 2x 3.5" (optical drive sold separately).
- Chipset: Intel X58 Express + ICH10R.
- CPU: Socket 1366; supports Intel® Core™ i7 processors only; supports quad-core and six-core, including the Intel Core i7-980X Six-Core; supports 4.8/6.4 GT/s QPI; Shuttle I.C.E. heatpipe cooling.
- Expansion slots: 2x PCI-Express x16 (v2.0); supports ATI CrossFire & NVIDIA SLI.
- Memory: supports 4x DDR3-1066/1333/1600 (OC)* memory; supports triple channel + 1; up to 16 GB total.
- Drive connectors: 4x SATA (internal); 2x external SATA (1x front, 1x rear); supports RAID, NCQ, eSATA power supply.
- Audio: 7.1-channel HD audio.
- Other connectors: dual Gigabit LAN (supports teaming); USB 2.0 (2x front, 8x rear, 1x onboard); one front USB port is combined with eSATA.
- Power supply: 500 W mini power supply, 80 PLUS Bronze compliant.
- Application: Performance.

Images for illustration purposes only. Product name: SX58J3. Order number: PC-SX58J3. © 2009 by Shuttle Computer Handels GmbH (Germany).

In the United States District Court for the District of Delaware

Case 1:14-cv-00377-LPS Document 96 Filed 03/23/15 Page 1 of 73 PageID #: 2291

IN THE UNITED STATES DISTRICT COURT FOR THE DISTRICT OF DELAWARE

INTEL CORPORATION, Plaintiff, v. FUTURE LINK SYSTEMS, LLC, Defendant. C.A. No. 14-377 (LPS) (CJB). DEMAND FOR JURY TRIAL.

FIRST AMENDED COMPLAINT FOR DECLARATORY JUDGMENT

MORRIS, NICHOLS, ARSHT & TUNNELL LLP: Jack B. Blumenfeld (#1014), Maryellen Noreika (#3208), 1201 North Market Street, P.O. Box 1347, Wilmington, DE 19899, (302) 658-9200. Attorneys for Plaintiff.

OF COUNSEL: Adam Alper, KIRKLAND & ELLIS LLP, 555 California Street, San Francisco, CA 94194, (415) 439-1400; Michael W. De Vries and Tim G. Majors, KIRKLAND & ELLIS LLP, 333 S. Hope Street, Los Angeles, CA 90071, (213) 680-8400; Gregory S. Arovas, KIRKLAND & ELLIS LLP, 601 Lexington Avenue, New York, NY 10022, (212) 446-4766.

Plaintiff Intel Corporation (“Intel”), for its First Amended Complaint against Defendant Future Link Systems, LLC (“Future Link”), hereby alleges as follows:

NATURE OF THE ACTION

1. This is an action for declaratory judgment that nine United States patents are not infringed, invalid, licensed, and/or exhausted pursuant to the Declaratory Judgment Act, 28 U.S.C. §§ 2201-02, and the Patent Laws of the United States, 35 U.S.C. § 100 et seq., and for such other relief as the Court deems just and proper.

THE PARTIES

2. Plaintiff Intel is a corporation organized and existing under the laws of the State of Delaware having its principal place of business at 2200 Mission College Boulevard, Santa Clara, California, 95054.

Intel® Desktop Board DX58SO2 Product Guide

Intel® Desktop Board DX58SO2 Product Guide. Order Number: G13825-001. Revision History: revision -001 (November 2010), first release of the Intel® Desktop Board DX58SO2 Product Guide. Disclaimer: INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL’S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. Intel products are not intended for use in medical, life saving, or life sustaining applications. Intel may make changes to specifications and product descriptions at any time, without notice. Intel Desktop Board DX58SO2 may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order. Copies of documents which have an ordering number and are referenced in this document, or other Intel literature, may be obtained from Intel Corporation by going to the World Wide Web site at: http://www.intel.com/ or by calling 1-800-548-4725. Intel, Intel Core, and Xeon are trademarks of Intel Corporation in the United States and other countries. * Other names and brands may be claimed as the property of others.

The PC Motherboard

COMPUTER EQUIPMENT MAINTENANCE. Ignacio Moreno Velasco, UNIVERSIDAD DE BURGOS (University of Burgos). Version 7.4, October 2019. Chapter 4: The PC Motherboard. Ignacio Moreno Velasco, Computer Equipment Maintenance lecture notes. Table of contents:

- 4.1 Introduction
- 4.2 Chipset
  - 4.2.1 Buses
    - 4.2.1.1 Bus interface
  - 4.2.2 Northbridge
    - 4.2.2.1 System bus: Intel® Front Side Bus (FSB); Intel® QuickPath Interconnect (QPI); DMI (Direct Media Interface); AMD system bus: HyperTransport
  - 4.2.3 Southbridge
    - 4.2.3.1 Link bus: example, Intel Direct Media Interface (DMI); example, HyperTransport bus
  - 4.2.4 Evolution of the chipset
    - 4.2.4.1 Pentium II-III, K6-Athlon: system bus (host bus); link bus …

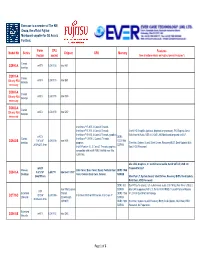

Fujitsu Mainboards: Model No., Series, Form Factor, Socket, Chipset, CPU, Memory, Features (see details on Fujitsu Special Features* at bottom), Intel® Core™ i7-2xxx

Evercase is a member of The KM Group, the official Fujitsu Mainboard supplier for SE Asia & Far East. Table columns: Model No., Series, Form Factor, Socket, Chipset, CPU, Memory, Features (see details on Fujitsu Special Features* at bottom).

- D2990-A: Classic desktop; mATX; LGA1155; Intel H61.
- D2991-A: Classic desktop; mATX; LGA1155; Intel B65; 12 V-only PSU necessary.
- D3061-A: Classic desktop; mATX; LGA1155; Intel Q65; 12 V-only PSU necessary.
- D3062-A: Classic desktop; mATX; LGA1155; Intel Q67; 12 V-only PSU necessary.
- D2942-B: Classic desktop; mATX, 9.6" x 8.4" (243.8 x 213.3 mm); LGA1156; Intel H55; DDR3 1333/1066 SDRAM. CPUs: Intel® Core™ i7-800 (4 cores/8 threads), Core™ i5-700 (4 cores/4 threads), Core™ i5-600 (2 cores/4 threads, graphics), Core™ i3-500 (2 cores/4 threads, graphics), Pentium G (2 cores/2 threads, graphics); compatible with Intel® FMB 09a/09b, max. 95 W (LGA1156). Features: Intel® HD Graphics (optional, depends on processor), PCI Express Gen2, multichannel audio, USB 2.0, GbE LAN, mainboard prepared for EuP; Silent Fan, System Guard, Silent Drives, Recovery BIOS, Desk Update, Multi Boot, HDD Password.
- D3041-A: Classic desktop; mATX, 9.6" x 7.6" (244 x 193 mm); LGA775; Intel G41 / ICH7; DDR3 1066 SDRAM. CPUs: Intel Core 2 Duo, Core 2 Quad, Pentium Dual Core, Celeron Dual Core, Celeron. Features: GbE LAN, graphics, 5.1 multichannel audio, Serial ATA II, USB 2.0, prepared for EuP; Silent Fan LT, System Guard, Silent Drives, Recovery BIOS, Desk Update, Multi Boot, HDD Password.
- Extended series; ATX; Intel X58 Express Chipset; DDR3 800 / DDR3 1066 … SDRAM. Features: dual PCIe x16 (Gen2), 5.1 multichannel audio, LSI FW322 FireWire, USB 2.0, GbE LAN supporting ASF 2.0, Serial ATA II RAID, Trusted Platform Module V1.2, Intel QuickPath technology. …

Intel X58 Product Brief.Qxp

Product Brief: Intel® X58 Express Chipset. Highest performing desktop platform for extreme gamers and demanding enthusiasts. Desktop PC platforms based on the Intel® X58 Express Chipset and Intel® Core™ i7 processor family drive breakthrough gaming and digital media content creation performance with state-of-the-art technology transitions targeting extreme gamers, demanding enthusiasts and mainstream PC users.

The Intel X58 Express Chipset: The Intel X58 Express Chipset continues to push innovation with capabilities designed to deliver quality, performance and headroom. The Intel X58 Express Chipset achieves this performance by supporting the latest Intel® Core™ i7 family of processors at 6.4 GT/s and 4.8 GT/s speeds via the QuickPath Interconnect (QPI), and by enabling increased system bandwidth through support for industry-leading technologies such as PCI Express 2.0 graphics, Intel® Turbo Memory and Intel® High-Performance Solid State Drives.

PCI Express* 2.0: Intel’s high-end desktop chipset continues support for PCI Express 2.0 and adds flexibility with support for dual x16 and up to quad x8 graphics card configurations and combinations in between. The greatly improved 32 GB/s of graphics bandwidth capability enables much higher levels of performance on graphics-intensive applications such as high-end gaming and video rendering for digital content creation.

Faster System Performance: With the growing imbalance between processor and memory performance, it is critical to optimize the memory controller design to obtain the maximum possible performance from the memory subsystem. (Figure: Intel® Core™ i7 processor family connected to three DDR3 memory channels, each labeled 8.5 Gb/s.)
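
The brief does not show how its 32 GB/s graphics-bandwidth figure is derived. One reading that reproduces it, offered here as an assumption rather than Intel's stated method: PCI Express 2.0 signals at 5 GT/s per lane with 8b/10b encoding, giving 500 MB/s per lane in each direction, and the 32 GB/s counts both directions of two x16 links. The helper below is hypothetical and ignores packet and protocol overhead.

```python
# Hypothetical estimate of peak PCIe 2.0 payload bandwidth.
# Assumptions: 5 GT/s per lane, 8b/10b line encoding (8 data bits per
# 10 bits on the wire), no TLP/DLLP or flow-control overhead.

GT_PER_S = 5.0               # PCIe 2.0 raw signalling rate per lane
ENCODING_EFFICIENCY = 8 / 10  # 8b/10b encoding


def pcie2_gbytes_per_s(lanes: int, bidirectional: bool = True) -> float:
    """Peak PCIe 2.0 bandwidth in GB/s for a link of `lanes` lanes."""
    # Gbit/s of payload per lane, one direction, converted to GB/s (0.5).
    per_lane_one_way = GT_PER_S * ENCODING_EFFICIENCY / 8
    one_way = per_lane_one_way * lanes
    return one_way * 2 if bidirectional else one_way


if __name__ == "__main__":
    one_x16 = pcie2_gbytes_per_s(16)
    print(f"One x16 link, both directions: {one_x16:.0f} GB/s")
    print(f"Dual x16 configuration: {2 * one_x16:.0f} GB/s")
    print(f"Quad x8 configuration: {4 * pcie2_gbytes_per_s(8):.0f} GB/s")
```

Under the same assumptions, the quad x8 configuration also totals 32 GB/s, because it uses the same 32 graphics lanes split four ways.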

Intel® Desktop Board DX58SO Product Guide

Intel® Desktop Board DX58SO Product Guide. Order Number: E43166-001. Revision History: revision -001 (August 2008), first release of the Intel® Desktop Board DX58SO Product Guide.

If an FCC declaration of conformity marking is present on the board, the following statement applies:

FCC Declaration of Conformity: This device complies with Part 15 of the FCC Rules. Operation is subject to the following two conditions: (1) this device may not cause harmful interference, and (2) this device must accept any interference received, including interference that may cause undesired operation. For questions related to the EMC performance of this product, contact: Intel Corporation, 5200 N.E. Elam Young Parkway, Hillsboro, OR 97124, 1-800-628-8686.

This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference in a residential installation. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instructions, may cause harmful interference to radio communications. However, there is no guarantee that interference will not occur in a particular installation. If this equipment does cause harmful interference to radio or television reception, which can be determined by turning the equipment off and on, the user is encouraged to try to correct the interference by one or more of the following measures:

- Reorient or relocate the receiving antenna.
- Increase the separation between the equipment and the receiver.
- Connect the equipment to an outlet on a circuit other than the one to which the receiver is connected.